This one is a bit crazy. We have a server from ASRock Rack that is truly something different. This server offers two nodes, each with an AMD EPYC 7002/7003 CPU, its own BMC, 10Gbase-T networking, and even a GPU. If you think that sounds crazy, it just might be, and in this review, we are going to see why.

ASRock Rack 1U2N2G-ROME/2T Hardware Overview

For this one, we have an accompanying video because it is such a unique platform. You can find that video here:

As always, we suggest opening this in a YouTube tab, browser, or app for a better viewing experience than the embedded player. Now, let us get to the hardware, and we are going to cover the chassis and the external hardware in this section.

ASRock Rack 1U2N2G-ROME/2T External Hardware Overview

The front of the system is where things are immediately different. The server is a 1U platform that is 650mm (25.6in) deep. The front panel does not have any hot-swap storage.

The reason for this is that the server is designed with two nodes that each support an AMD EPYC CPU as well as a GPU. That means most of the front panel is taken up by a few I/O ports and airflow vents. Node 1 is on the left while Node 2 is on the right. As we will see, these are independent nodes.

Each node has an out-of-band management port, two USB 3 ports, a VGA port, and then two network ports. These network ports are 10Gbase-T ports powered by an Intel X550 NIC.

Importantly, though, not only are these nodes not hot-swappable, but there is also limited room for upgrading them. There are no hot-swap storage bays and no real NIC expansion options.

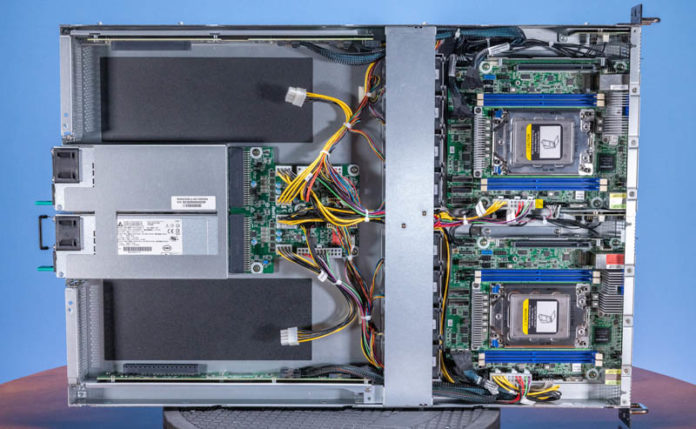

The rear of the chassis shows two primary features. There are two GPU expansion slots, one on either side, and in the middle, we have the power supplies.

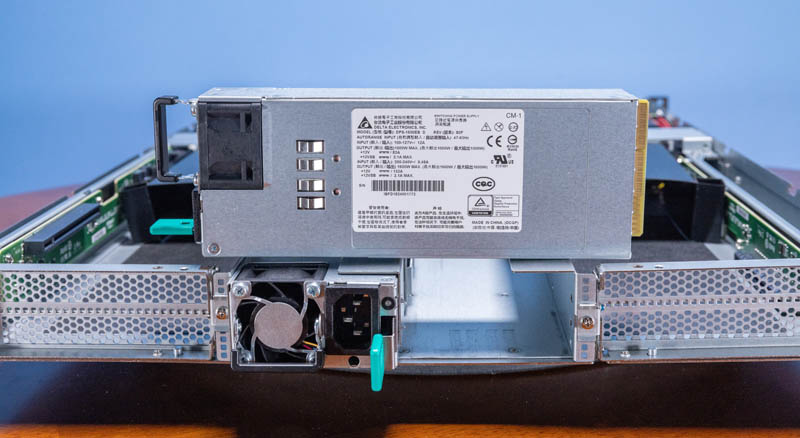

The power supplies are 1.6kW rated Delta units. There is a catch, though. These power supplies are only rated at 1.6kW at 200V-240V. If you are running at 100V-120V, then the power supply limit is 1kW. A system like this can easily be configured to draw more than 1kW, and 1kW split across two nodes is only 500W per node.
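To make the low-line versus high-line difference concrete, here is a rough budgeting sketch. It uses the PSU ratings stated above, and it assumes 1+1 redundancy (usable capacity equals one PSU's rating) and an even split between the two nodes, which is a simplification since the power distribution board is shared. The per-node load figures are hypothetical placeholders, not measurements of this system.

```python
# PSU output ratings as stated for these Delta units:
# 1.6kW at 200-240V input, derated to 1kW at 100-120V input.
def psu_rating_watts(input_voltage: float) -> int:
    """Return the PSU output rating for a given AC input voltage."""
    if 200 <= input_voltage <= 240:
        return 1600
    if 100 <= input_voltage <= 120:
        return 1000
    raise ValueError(f"unsupported input voltage: {input_voltage}V")


def per_node_budget_watts(input_voltage: float, nodes: int = 2) -> float:
    """Split one PSU's rating evenly across the nodes.

    Assumption: 1+1 redundancy, so only a single PSU's rating is usable
    if one unit fails. An even split is a simplification.
    """
    return psu_rating_watts(input_voltage) / nodes


# Hypothetical per-node load in watts: illustrative numbers only.
node_load_w = {"cpu": 280, "gpu": 300, "platform": 60}
total = sum(node_load_w.values())

for volts in (120, 230):
    budget = per_node_budget_watts(volts)
    status = "OK" if total <= budget else "over budget"
    print(f"{volts}V: {budget:.0f}W per node, load {total}W -> {status}")
```

With these placeholder numbers, a node fits within the roughly 800W per-node share at 230V but exceeds the 500W share at 120V.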

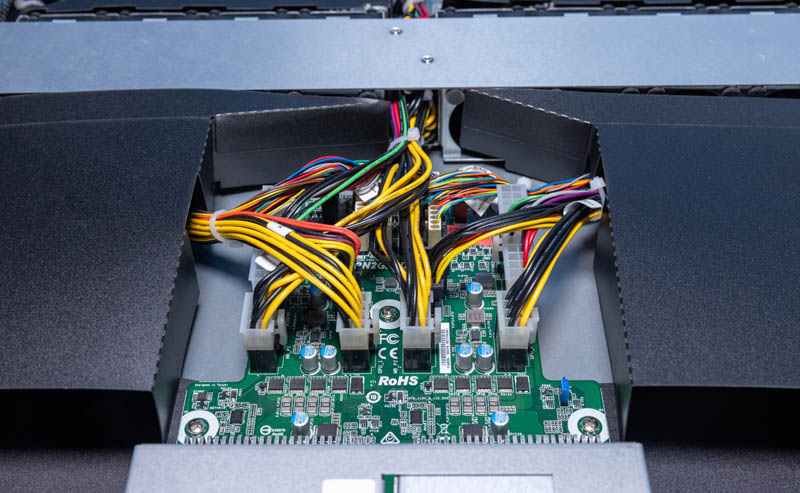

Power from the redundant PSUs is fed into a power distribution board. This power distribution board is shared between the two nodes and directly powers the motherboards, fans, and GPUs in this system.

The two GPU slots are double-width, which fits most of today’s professional and data center GPUs.

The risers on either side are designed to hold the GPUs and are cabled to the motherboards. To achieve the proper orientation on either side of the chassis, the Node 2 riser is flipped.

Next, let us take a look at the nodes.
