ASRock Rack 1U2N2G-ROME/2T Power Consumption

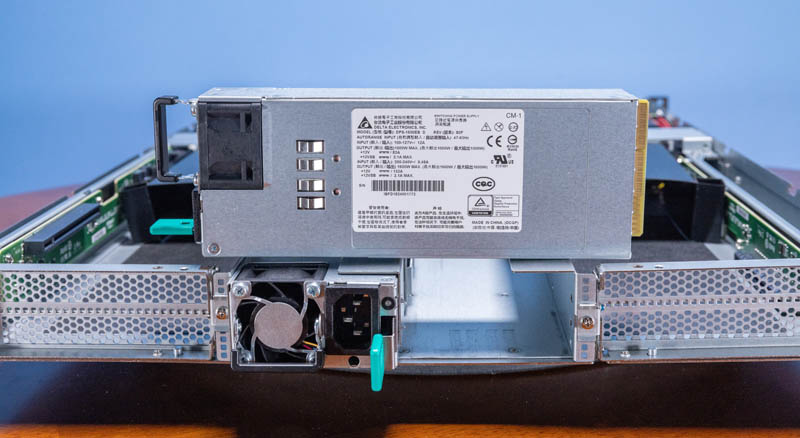

One major challenge with this system is power and cooling. After all, this is a two-node system with two AMD EPYC CPUs, two GPUs, and other components like 10Gbase-T NICs, memory, and an M.2 SSD. Power is the tighter constraint: on 100-120V input, each PSU is limited to a maximum of 1kW, which leaves only ~500W per node.

Here we found that a node with a 32-core AMD EPYC 7502P processor and the NVIDIA A40 used about 600W (we measured incremental power consumption). Swapping to an EPYC 7543P added another ~40W. We also had a lower-power setup on node 2 with the NVIDIA RTX A4500 and 4-channel optimized SKUs like the EPYC 7282. That was closer to 400W/node.
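To make the budget arithmetic concrete, here is a minimal sketch that adds up the approximate per-node figures quoted above and compares them against the 1kW ceiling on 100-120V power. The names `PSU_LIMIT_W`, `NODE_DRAW_W`, and `headroom_w` are hypothetical and exist only for this illustration. It also assumes that the two node figures simply add and that a single PSU has to carry the whole load, which is a simplification.

```python
# Approximate figures quoted in the review; these are rough incremental
# measurements, not precise specifications.
PSU_LIMIT_W = 1000  # per-PSU ceiling on 100-120V input

# Hypothetical lookup table built from the configurations tested above.
NODE_DRAW_W = {
    "EPYC 7502P + NVIDIA A40": 600,
    "EPYC 7543P + NVIDIA A40": 640,  # the 7502P figure plus ~40W
    "EPYC 7282 + NVIDIA RTX A4500": 400,
}


def headroom_w(node_a: str, node_b: str, limit_w: int = PSU_LIMIT_W) -> int:
    """Return the watts left under the limit; negative means over budget.

    Assumes the two node draws simply add (a simplification).
    """
    return limit_w - (NODE_DRAW_W[node_a] + NODE_DRAW_W[node_b])


if __name__ == "__main__":
    pairs = [
        ("EPYC 7502P + NVIDIA A40", "EPYC 7282 + NVIDIA RTX A4500"),
        ("EPYC 7543P + NVIDIA A40", "EPYC 7282 + NVIDIA RTX A4500"),
        ("EPYC 7282 + NVIDIA RTX A4500", "EPYC 7282 + NVIDIA RTX A4500"),
    ]
    for a, b in pairs:
        print(f"{a} | {b}: {headroom_w(a, b):+d}W headroom")
```

With these rough numbers, the tested mix of one A40 node and one RTX A4500 node sits right at the 1kW line, and the 7543P swap pushes it slightly over.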

Our sense is that running two nodes without GPUs, using lower-power devices like BlueField-2 DPUs or other NICs instead, would be fine power-wise even in a 1kW budget. One can certainly get two lower-power CPUs and GPUs in these nodes while using under 1kW. It is only with the higher-end 32-64 core parts and higher-power ~225W+ GPUs that we would expect to see the total system power go over 1kW. One also needs racks that can support 1kW/U, but that will be more common even for CPU-only systems in the generation that will come out later this year.

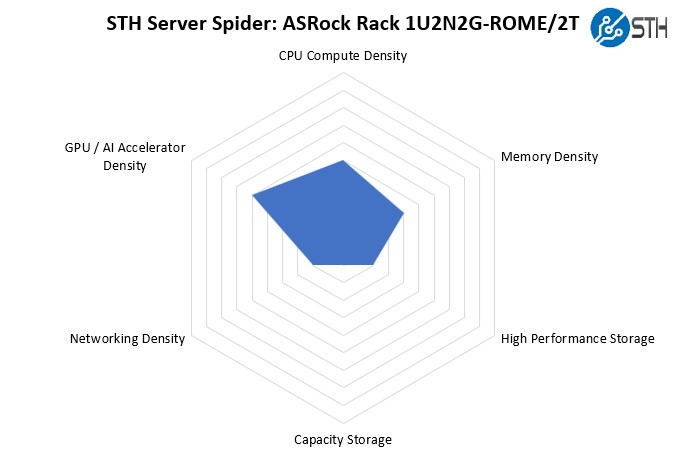

STH Server Spider: ASRock Rack 1U2N2G-ROME/2T

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

This is a really interesting system since it provides two nodes in a single U but also two GPUs per U. It is far from the densest configuration because two nodes share the chassis. There is very little storage in this platform and, aside from the dual 10Gbase-T, very little networking as well. Still, the point of this is to achieve node density, not necessarily the most components per U.

Final Words

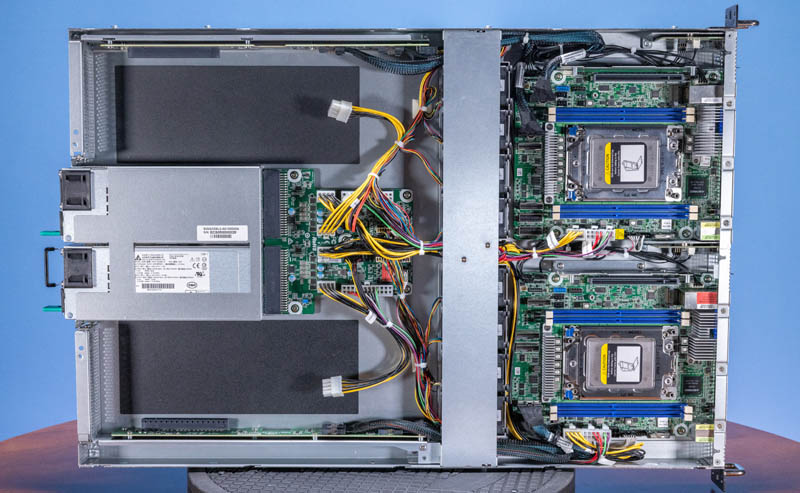

Let us be clear: the ASRock Rack 1U2N2G-ROME/2T is absolutely unique. Two AMD EPYC single-socket GPU nodes in 1U are not something you would find from a vendor like Dell, HPE, Lenovo, or Inspur.

ASRock Rack’s design of placing two nodes in a single U of rack space is different and provides a lot of density, especially for the dedicated hosting markets. The platform is also heavily cost-optimized, even if that means things like the GPU risers being held in with screws, just like the bar over the fan partition. The nodes are not even hot-swappable, unlike what we see on most 2U 4-node systems.

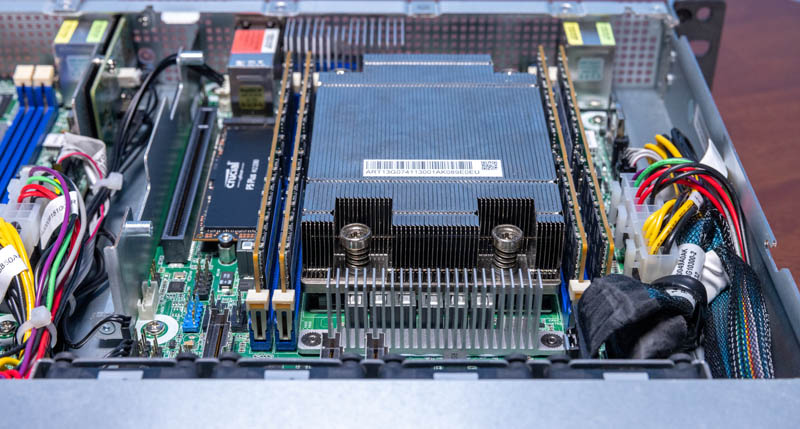

Storage and networking expansion for each node is effectively non-existent, as is hot-swap storage. It is a good thing M.2 SSDs are generally reliable. Still, each node can handle anything from low-power 8-core CPUs up to 64-core processors. ASRock Rack supports the Rome, Milan, and Milan-X generations for even more flexibility. The challenge for some will be the 4-channel memory, but in some markets, that is all they want and need, a holdover from the Intel Xeon E5 days.

One design we would be interested to see is a shorter-depth version of this chassis, perhaps with two PCIe slots on a riser for half-length NICs/SAS controllers and two M.2 slots per node. That would be immensely more flexible if one did not need GPUs. Still, handling GPUs in this form factor is really unique as well. Being able to cool an NVIDIA A40 was a bit of a surprise given this system.

To deploy a system like this, you have to be in the market for single-socket nodes with GPUs attached and not much else. There are heavy cost optimizations that, along with the lack of expansion, make us think that this is quite well suited to dedicated hosting providers.

We review servers all the time at STH. The ASRock Rack 1U2N2G-ROME/2T is a server that shocked us when we realized what it was. It is really cool that something like this is in the market at all because it is so different from standard 1U servers.

Any idea when these will be for sale anywhere?

Because the PSUs are redundant, that would seem to suggest that you can’t perform component level work on one node without shutting down both?

I’m a little surprised to see 10Gbase-T rather than SFPs in something that is both so clearly cost optimized and which has no real room to add a NIC. Especially for a system that very, very much doesn’t look like it’s intended to share an environment with people, or even make a whole lot of sense as a small business/branch server (since hypervisors have made it so that only very specific niches would prefer more nodes vs. a node they can carve up as needed).

Have the prices shifted enough that 10Gbase-T is becoming a reasonable alternative to DACs for 10Gb rack level switching? Is that still mostly not the favored approach; but 10Gbase-T provides immediate compatibility with 1GbE switches without totally closing the door on people who need something faster?

I see a basic dual compute node setup there.

A dual port 25GbE, 50GbE, or 100GbE network adapter in that PCIe Gen4 x16 slot and we have an inexpensive resilient cluster in a box.