AMD EPYC 7003 Market Positioning through Partner Platforms

We also wanted to highlight a few interesting platforms that we already have in the lab and will be reviewing over the coming days and weeks. The AMD EPYC 7003 Milan series is compatible with updated EPYC 7002 series platforms after a BIOS update, so many existing platforms will carry over. What we will also see is an expansion of systems designed around the new chips. We wanted to show off a handful of examples of that.

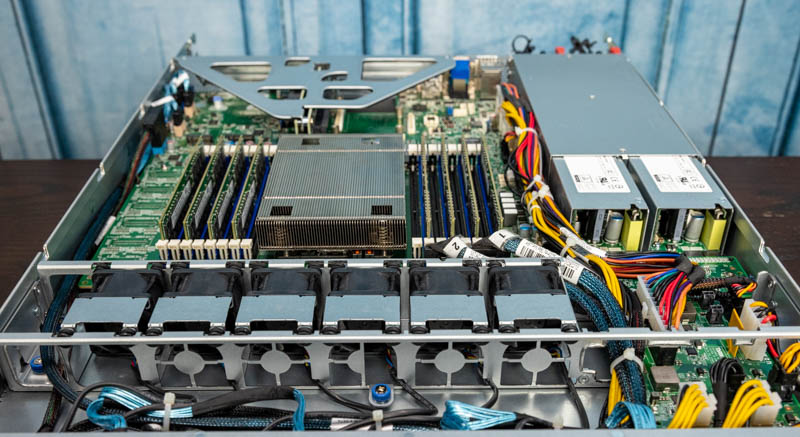

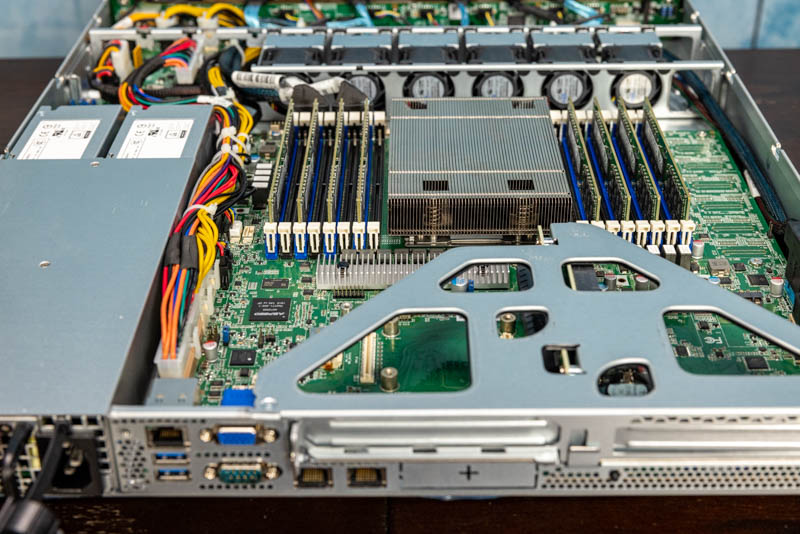

Tyan Transport CX GC68-B8036-LE 1U 1P Platform

The Tyan Transport CX GC68-B8036-LE is a 1U single-socket platform that can take advantage of P-series EPYC 7003 parts. This is important because AMD’s “low-end” strategy is essentially to push the consolidation of low-end dual-socket servers onto single-socket platforms.

One can see that Tyan’s design is cost-optimized, with de-populated connectors along the side opposite the power supplies. At the same time, it still offers sixteen DIMM slots and 8-channel memory support. For those upgrading Xeon E5 era systems, one can get the same or more cores and the same number of memory channels in Tyan’s single-socket platform.

Since we mentioned this market dynamic earlier, we thought it would be interesting to see a system that is aligned to that dynamic.

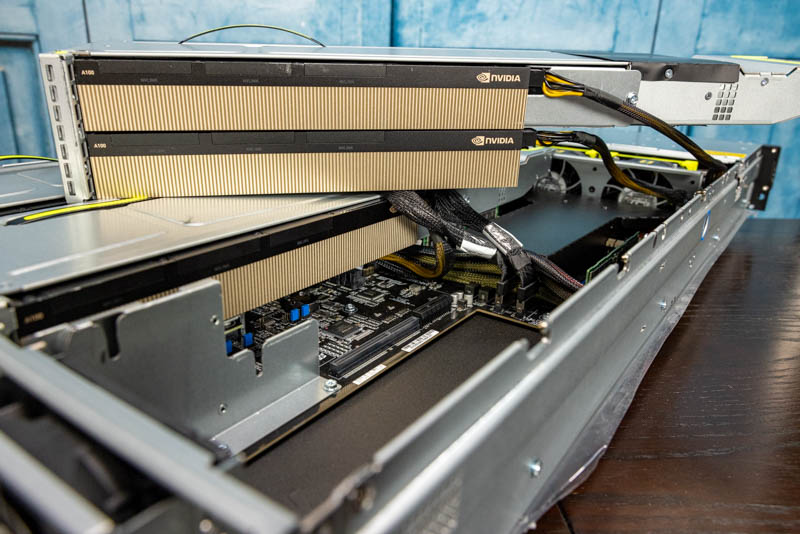

ASUS RS720A-E11-RS24U 4x NVIDIA A100 PCIe Platform

The ASUS RS720A-E11-RS24U is a dual AMD EPYC 7003 series platform that supports up to the 280W TDP EPYC 7763. Not only does one get the high-performance and high-core count CPUs, but the system is also designed for PCIe Gen4 accelerators.

For accelerators, we have four NVIDIA A100 PCIe GPUs. PCIe Gen4 is especially important with this generation. Since AMD has been in the market with PCIe Gen4 since Rome in 2019, NVIDIA’s partners have started to design systems around AMD EPYC Milan CPUs with NVIDIA PCIe Gen4 accelerators and networking.

This ASUS platform is a great example of how things have changed. In the PCIe Gen3 era, the AMD EPYC 7001 was playing catch-up to Intel Xeon in getting solutions validated on its platform. With the EPYC 7003 being a second-generation PCIe Gen4 platform, it is now Intel that is catching up, since the PCIe Gen4 ecosystem works on AMD today.

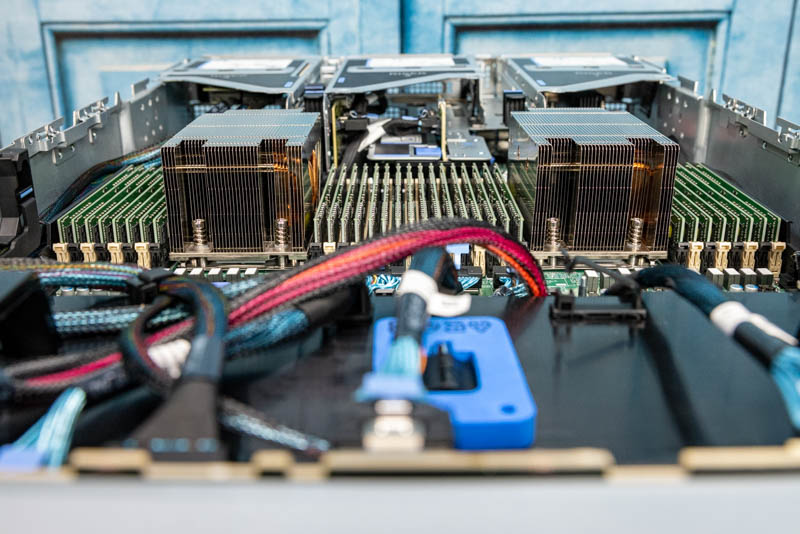

Dell EMC PowerEdge XE8545 4x NVIDIA A100 Platform

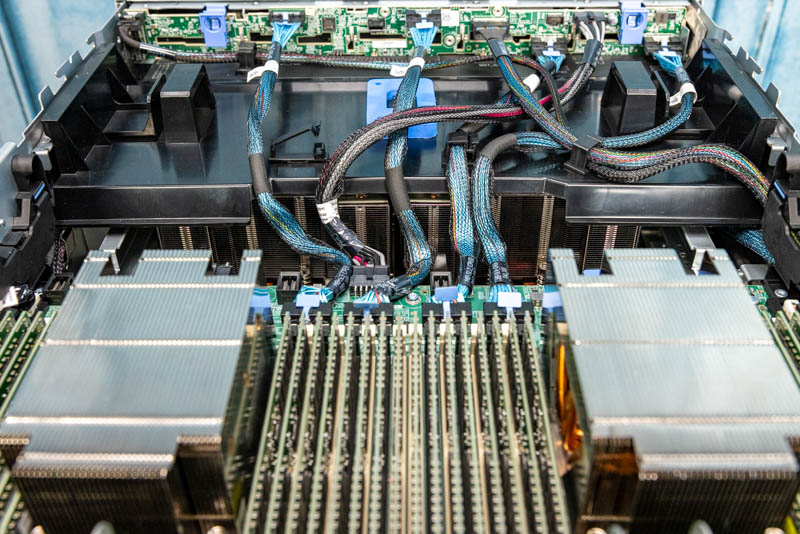

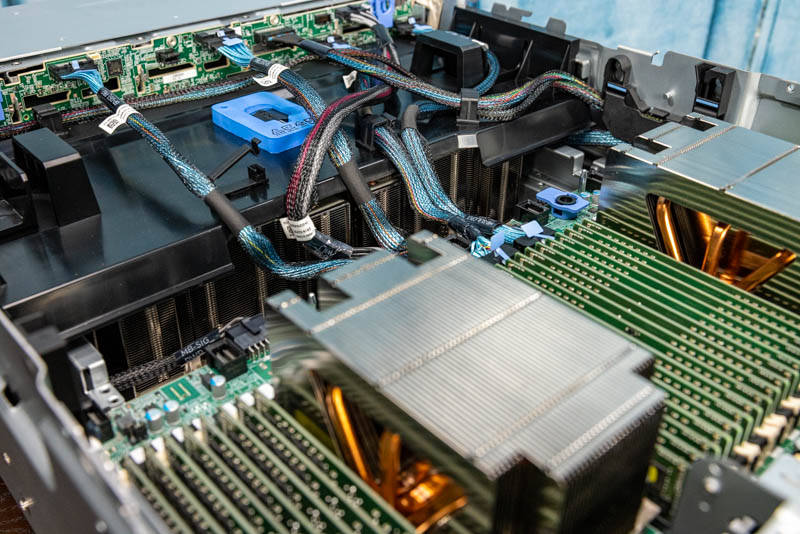

We have another system designed for the AMD EPYC 7763 from Dell. This is the Dell EMC PowerEdge XE8545. The motherboard design may look a lot like the Dell EMC PowerEdge R7525, a point we will discuss in the review, but the system is completely different.

Dell has a 4x NVIDIA A100 SXM4 solution capable of air cooling 400W NVIDIA A100 40GB models or even 500W NVIDIA A100 80GB models.

One can see the huge NVIDIA A100 heatsinks underneath the black airflow shroud at the front of the chassis.

This is a big deal. Although it is physically larger than its predecessor, this is the successor to the Dell EMC PowerEdge C4140 solution. The system needs to be larger in order to air cool up to 4x 500W GPUs plus 2x 280W CPUs and all of the other components. This is a direct example where Dell EMC has taken a high-value Intel Xeon-NVIDIA solution and adopted the AMD EPYC 7003 Milan series, displacing the older Xeon offering. AMD is winning systems in segments it could not compete in with the EPYC 7001 series.
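To put the cooling requirement in perspective, here is a rough sketch that adds up the GPU and CPU TDP figures quoted above. It only sums the rated TDPs of those two component types. Memory, storage, NICs, fans, and power supply losses are not included, so this is not a full system power figure, and the function and constant names are purely illustrative.

```python
# TDP figures as quoted in the text; counts reflect the maximum configuration described.
GPU_TDP_W = 500   # highest NVIDIA A100 SXM4 figure mentioned (80GB model)
CPU_TDP_W = 280   # AMD EPYC 7763
NUM_GPUS = 4
NUM_CPUS = 2


def gpu_plus_cpu_tdp(num_gpus=NUM_GPUS, gpu_w=GPU_TDP_W,
                     num_cpus=NUM_CPUS, cpu_w=CPU_TDP_W):
    """Sum the rated TDP of the GPUs and CPUs only (hypothetical helper)."""
    return num_gpus * gpu_w + num_cpus * cpu_w


total_500w = gpu_plus_cpu_tdp()            # 4x 500W GPUs + 2x 280W CPUs
total_400w = gpu_plus_cpu_tdp(gpu_w=400)   # same system with 400W A100 40GB models

print(f"GPU + CPU TDP with 500W GPUs: {total_500w} W")
print(f"GPU + CPU TDP with 400W GPUs: {total_400w} W")
```

Even before counting the rest of the system, the GPUs and CPUs alone account for well over 2kW that has to be removed with air.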

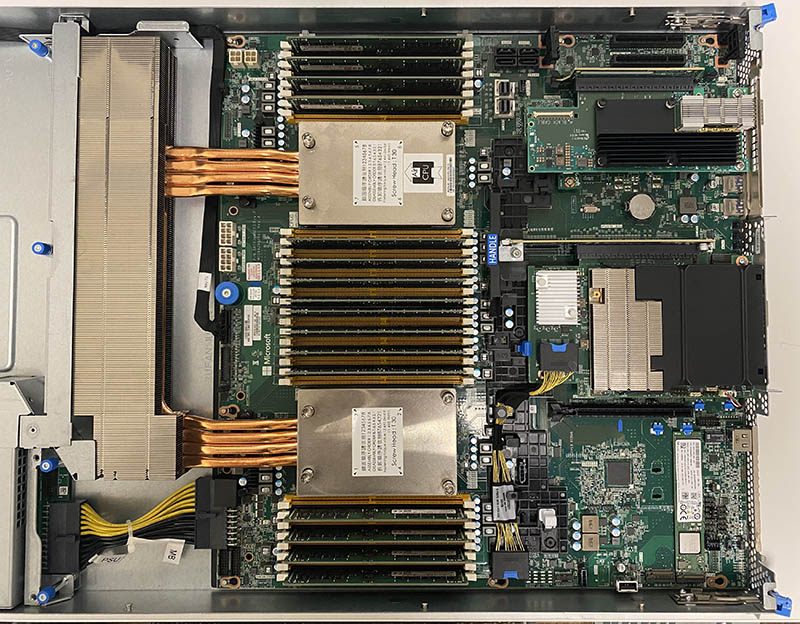

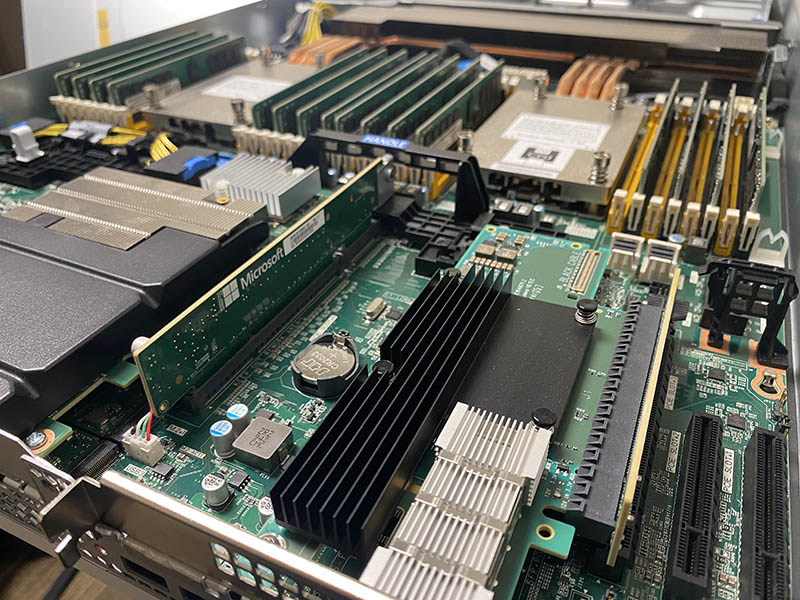

Microsoft Azure HPC HBv3

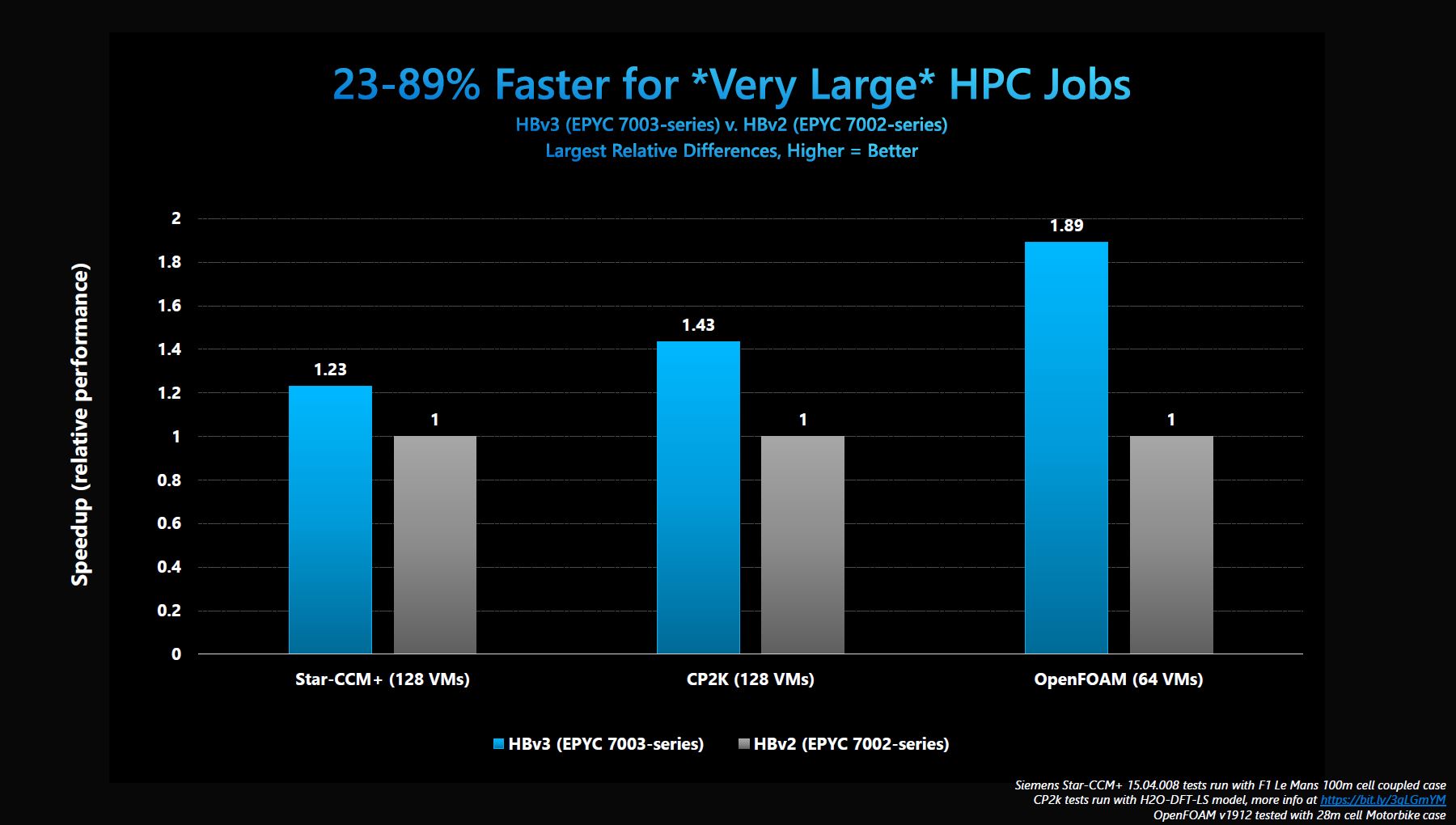

The final quick feature is for the Microsoft Azure HPC offering. Rumor has it we have quite a few readers on the Azure HPC team, so naturally we will cover this. Today, Microsoft is announcing that you can build a high-performance cluster, in its Azure cloud, using AMD Milan.

Microsoft told us that they have been taking delivery of their EPYC 7V13 64-core 240W TDP part since Q3 2020 and have been busy building these clusters. This is a huge deal. In normal procurement, buying an HPC cluster takes quite a long time. Instead, Microsoft Azure HBv3 is available the same day the CPU launches.

Microsoft says that the new nodes are faster, as one may imagine. It also touts its ability to use a low-impact hypervisor to change the core count and capabilities of each physical node. Another cool feature on the hardware side is that, beyond a single node, these systems use a 200Gbps InfiniBand interconnect, which is a current-generation supercomputer interconnect.

We are going to do more with the Microsoft Azure HBv3 in the coming weeks to bring you more on this solution.

Next, we are going to talk Ice Lake Xeons and how we can infer the market will be impacted by their arrival.

Any reason why the 72F3 has such conservative clock speeds?

The 7443P looks as if it will become STH subscriber favorite. Testing those is hopefully prime objective :)? Speed, per core pricing, core count – there this CPU shines.

What are you trying to say with: “This is still a niche segment as consolidating 2x 2P to 1x 2P is often less attractive than 2x 2P to 1x 2P” ?

Have you heard co.s are actually thinking of EPYC for virtualization not just HPC? I’m seeing these more as HPC chips

I’d like to send appreciation for your lack of brevity. This is a great mix of architecture, performance but also market impact.

The 28 and 56 core CPUs are because of the 8-core CCXs allowing better harvesting of dies with only one dead core. Previously, you couldn’t have a 7-core die because then you would have an asymmetric CPU with one 4-core CCX and one 3-core CCX. You would have to disable 2 cores to keep the CCXs symmetric. Now with the 8-core CCXs you can disable one core per die and use the 7-core dies to make 28-core and 56-core CPUs.

CCD count per CPU may be: 2/4/8

Core count per CCD may be: 1/2/3/4/5/6/7/8

So in theory these core counts per CPU are possible: 2/4/6/8/10/12/14/16/20/24/28/32/40/48/56/64
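The list above can be checked with a short sketch. It assumes, as the comment does, that every CCD in a package has the same number of active cores and that only 2, 4, or 8 CCDs are used. The names are illustrative, and the result is the theoretical set of combinations, not AMD's actual SKU list.

```python
# Options taken from the comment above; helper and constant names are hypothetical.
CCD_COUNTS = (2, 4, 8)        # CCDs per CPU package
CORES_PER_CCD = range(1, 9)   # 1 through 8 active cores per CCD


def possible_core_counts(ccd_counts=CCD_COUNTS, cores_per_ccd=CORES_PER_CCD):
    """Return the sorted, de-duplicated total core counts for symmetric CCDs."""
    return sorted({ccds * cores
                   for ccds in ccd_counts
                   for cores in cores_per_ccd})


counts = possible_core_counts()
print("/".join(str(c) for c in counts))
```

The 28-core and 56-core entries come from 4 and 8 CCDs with 7 active cores each, which matches the die-harvesting point made earlier in the thread.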

I’m wondering if we’re going to see new Epyc Embedded solutions as well, these seem to be getting long in the tooth much like the Xeon-D.

Is there any difference between 7V13 and 7763?