If you have read any of my previous DIY server guides, you might have noticed that I am a fan of VMware’s ESXi product. I know there are other alternatives, such as Unraid and Proxmox, but ESXi is the solution I know best and is the one that I encounter most often in my daily professional life. I am also a big fan of pfSense, and some years ago, I put two of my favorite software solutions together and built myself a (very) DIY home router, running ESXi as a hypervisor with pfSense as a VM, and it has worked very well ever since.

Recently, Rohit has been covering some Topton passive firewall systems, and the Intel Core i7 Fanless units caught my eye. The hardware in my home ESXi/router system was getting old, and I saw an opportunity for an upgrade, so I requested one of the units. Patrick sent it over, and here we are.

In this article, I am going to cover a few topics. First, I will explain why I set my home router up in this fashion. Next up, I am going to discuss my reasons for upgrading to the new i7 unit. Lastly, I will cover the actual upgrade process I went through to move from my old system to the new one. Let us get started!

VMware ESXi + pfSense?

I was running pfSense long before I put it onto an ESXi host, but I wanted my router to do more for me. The original impetus was Pi-hole. If you are unaware, Pi-hole is a network-based (DNS) ad, telemetry, and malware blocker. The name Pi-hole comes from the traditional hardware where this would be installed, a Raspberry Pi, but I did not want another device that I had to keep powered on and plugged into my network. Plus, Pi-hole operates as a DNS server on your local network, and to my mind, that was the kind of thing my router could do. Later on, Patrick introduced me to Guacamole. Guacamole is a clientless remote desktop gateway that just seemed super cool, and again I was struck with the thought that this is something I wanted my router to do. And so the idea of rebuilding my router as an ESXi host was born, with a VM for each function I wanted.
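Conceptually, a DNS-based blocker like Pi-hole answers queries for blocklisted domains itself and forwards everything else to an upstream resolver. The Python sketch below models only that decision. The blocklist, the upstream table and the exact-match rule are simplifying assumptions, not Pi-hole's actual code. Answering with the unspecified address 0.0.0.0 mirrors what Pi-hole's "NULL" blocking mode returns.

```python
from typing import Optional

# Hypothetical blocklist. Pi-hole builds its real list from configurable
# adlists; these entries are placeholders.
BLOCKLIST = {"ads.example.com", "telemetry.example.net"}

# Stand-in for the upstream resolver that allowed queries are forwarded to.
# 192.0.2.10 is a documentation-only address (RFC 5737).
UPSTREAM = {"www.example.com": "192.0.2.10"}

# The "unspecified" address: a client cannot connect anywhere with it.
SINKHOLE_V4 = "0.0.0.0"


def resolve_a(domain: str) -> Optional[str]:
    """Answer an A-record query the way a DNS sinkhole does (simplified)."""
    # DNS names are case-insensitive and may carry a trailing root dot.
    name = domain.lower().rstrip(".")
    if name in BLOCKLIST:
        # Blocked: answer locally so the ad/telemetry host is never contacted.
        return SINKHOLE_V4
    # Allowed: forward upstream (a dict lookup here); None models NXDOMAIN.
    return UPSTREAM.get(name)


for query in ("www.example.com", "ADS.example.com.", "unknown.example.org"):
    print(f"{query:22} -> {resolve_a(query)}")
```

Because every device on the network already uses the router's DNS, the blocking applies to all of them without installing anything on the clients.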

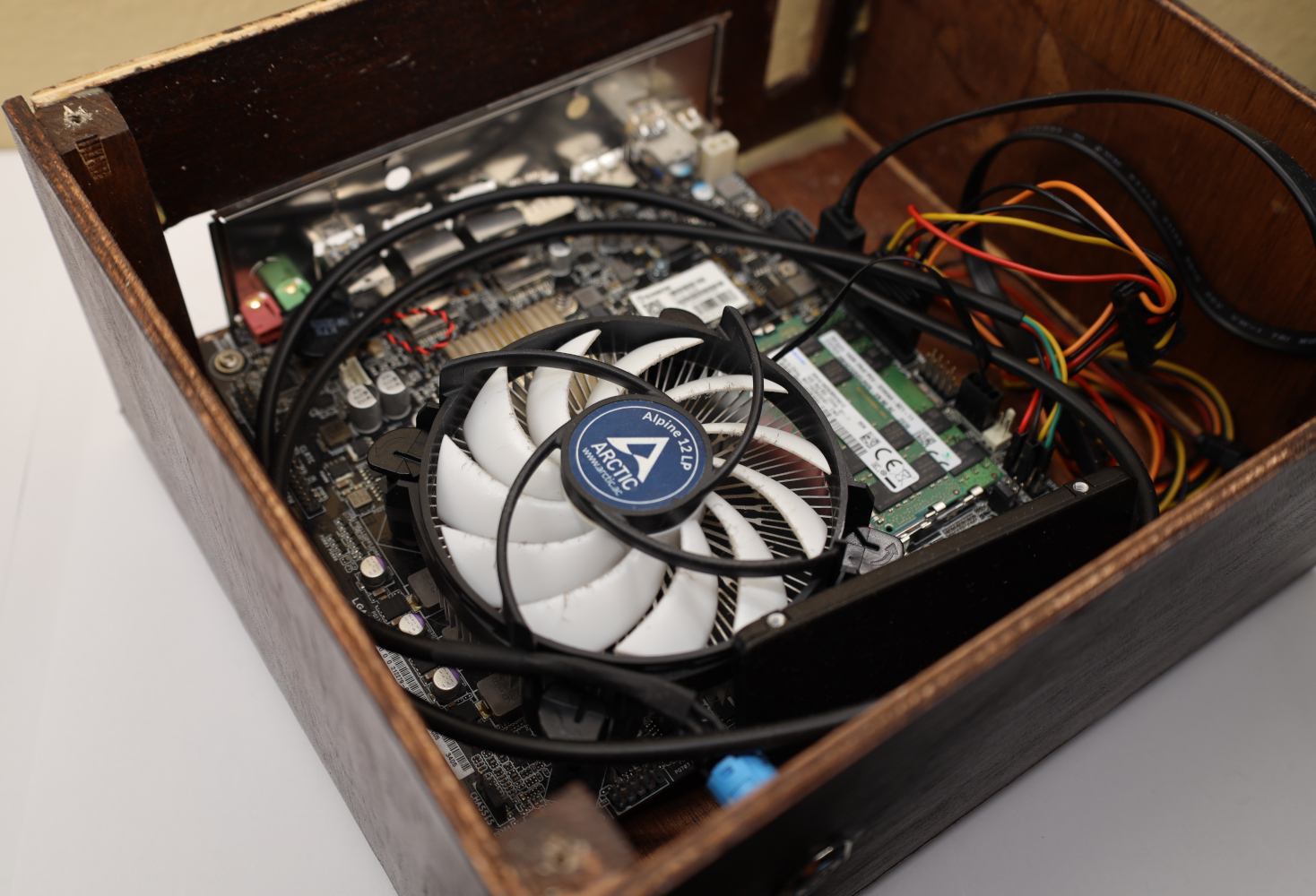

My first solution was one of the most DIY things I have ever done. In addition to assembling the system from inexpensive second-hand hardware and stuff I had lying around, I was also going through a burst of COVID-isolation-inspired dreams of learning woodworking, so I built the case myself.

It is not exactly a work of art, but it got the job done. The internals were just as basic as the exterior:

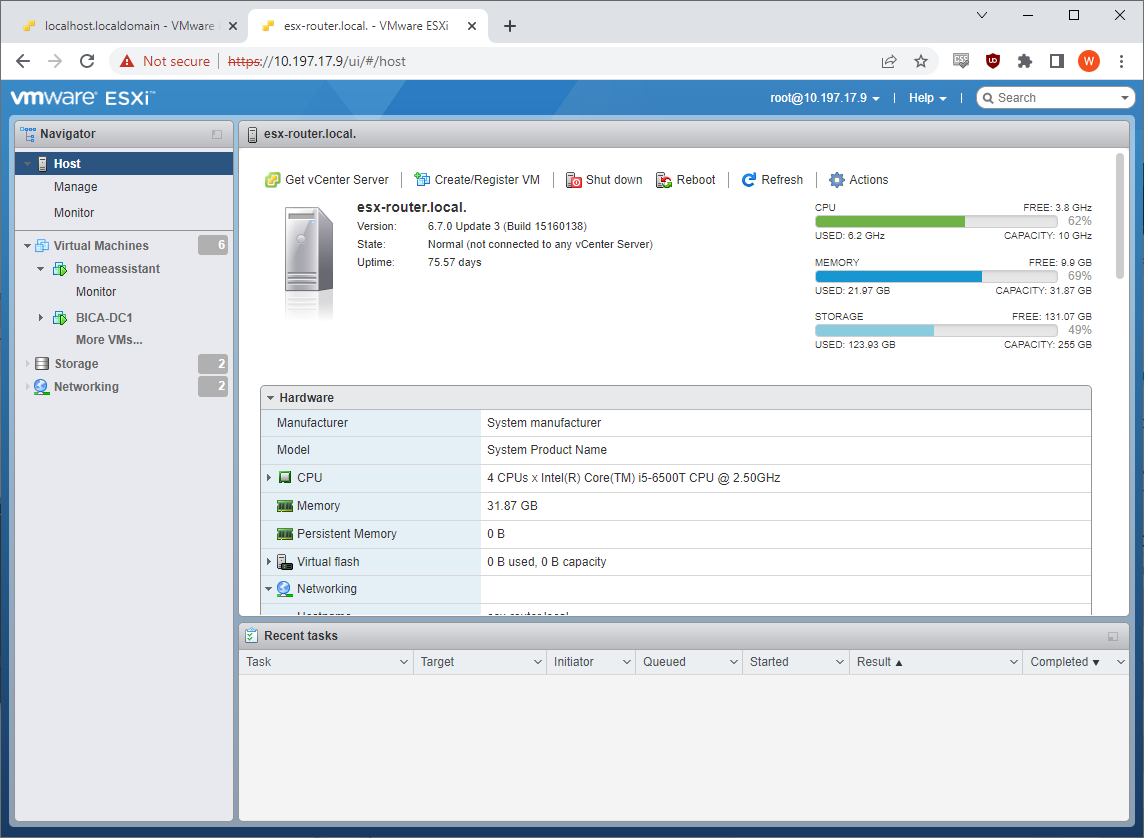

My humble ESXi system was based on an ASUS H110T/CSM motherboard. This little board has a header that accepts a 12V/19V DC power supply, and I happened to have an old laptop power brick lying around. Combined with a second-hand Core i5-6500T CPU and 32GB of laptop RAM, I had myself a system. ESXi was installed on a 16GB M.2 SSD from a Chromebook, and an old Samsung 850 Pro 250GB SSD held the VMs. All of this was shoved into the little wooden box.

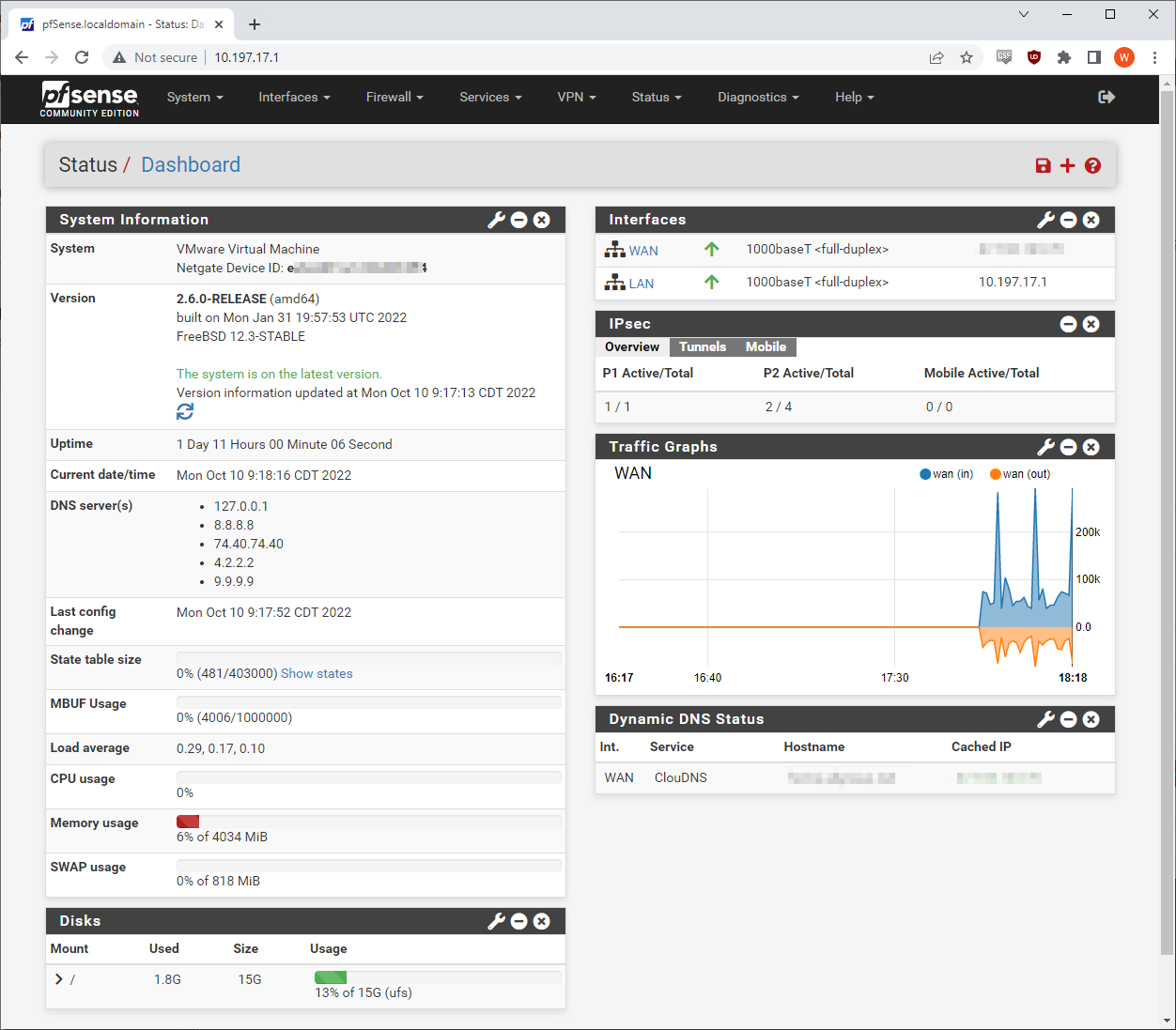

It has worked great and given me zero problems. Over time, I have found even more advantages to the ESXi router. For example, when pfSense 2.5.x came out, I could install it alongside my existing 2.4.x installation and easily swap between the two while I got everything configured correctly on 2.5.x. More recently, when 2.6.0 came out, I could upgrade with confidence because I took a snapshot of my 2.5.x VM before pressing the upgrade button. If things had gone poorly, I could simply have rolled back to that snapshot.

Lastly, a few more VMs have found a home on my little server. I run Home Assistant for all the smart home things in my life, and it happily hums along on this little system. I also fire up test VMs from time to time, and this gives me a good place to do it.

Time for an upgrade

Alas, the time comes for all things. While my system was working fine, it had a few problems. First, one of the onboard NICs on the H110T/CSM is a Realtek model. ESXi 7.0 dropped support for the legacy VMKLinux driver model that the community Realtek drivers relied on, so there is no way to install drivers for Realtek NICs on 7.0 or higher.

Second, my house is beginning to transition over to 2.5GbE networking and everything in my existing system was 1GbE. I also had an idea to run my Plex transcoder on my little ESXi host, via iGPU passthrough, and the i5-6500T’s Quick Sync encoder is not particularly stellar.

Additionally, I wanted some more and faster storage for my host, and there just was not a great way to do that on the H110T/CSM.

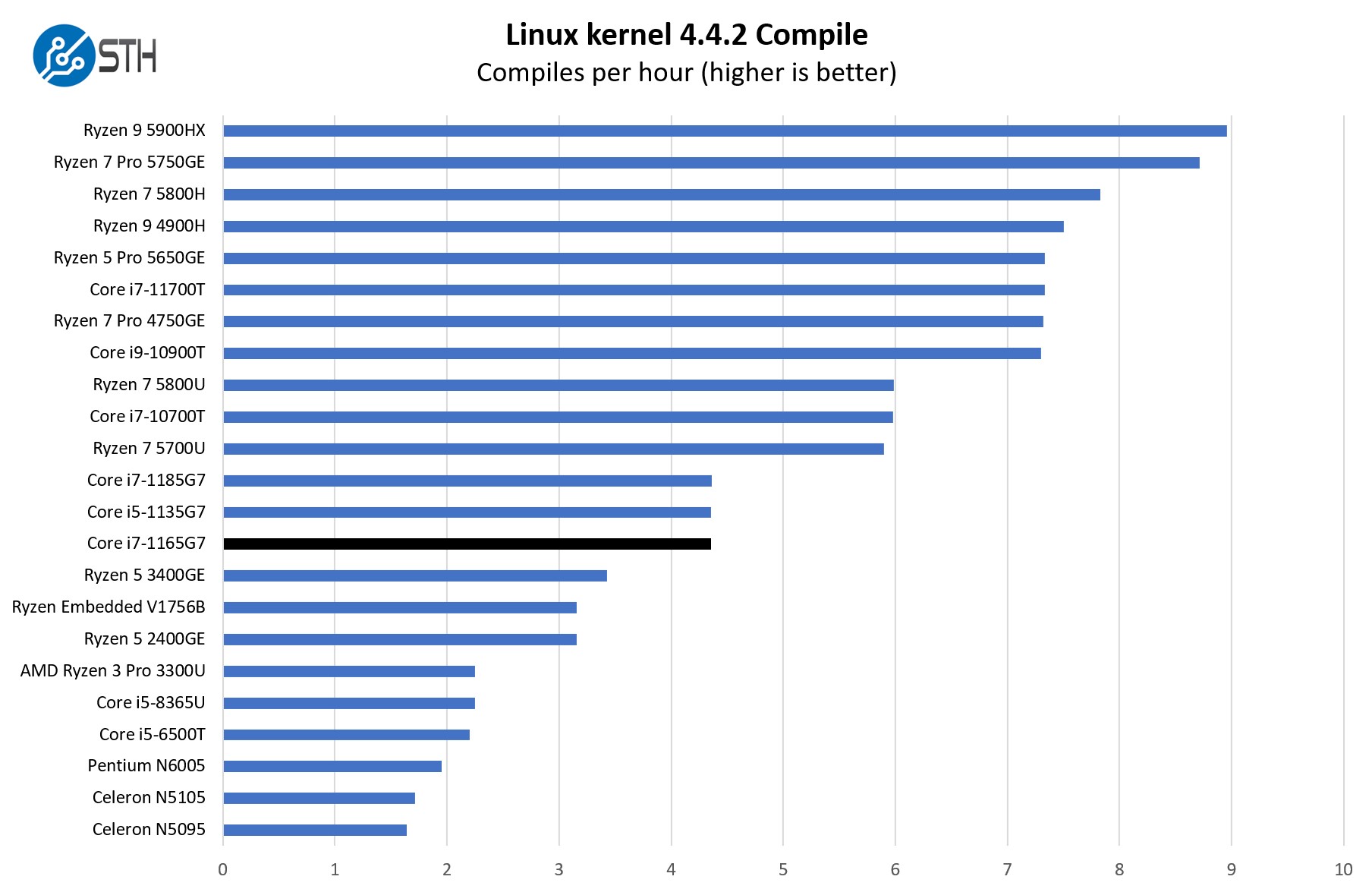

Lastly, the little fanless i7 units looked like too good an opportunity to pass up. In some of the i7-1165G7 benchmark graphs, that CPU significantly outperformed my old i5-6500T while presumably consuming less power.

Once Patrick sent me the device, it was time to get to work.

Installing hardware and ESXi

Patrick sent me the Topton unit without any storage or RAM installed, so the first task was installing those. I had 16GB of spare DDR4 RAM that I could use temporarily; once the new host went into service, I planned to move over the 32GB from my old system, which is compatible with this unit. This system supports NVMe, so for storage I installed a 1TB WD Red SN700. In my review, I found the SN700 to be a very nice drive, with the potential to run a bit hot under sustained load. Thankfully, my home router is not a heavy-load environment, so I do not expect any problems.

Once the RAM and SSD were installed, it was time to install ESXi. I am not going to cover the installation process itself, as it is not particularly complicated, but I do want to note that the Intel i225-V NICs on the Topton need an additional driver integrated into the ESXi installation media to be supported. Once I had that driver on my installation thumb drive, everything went normally.

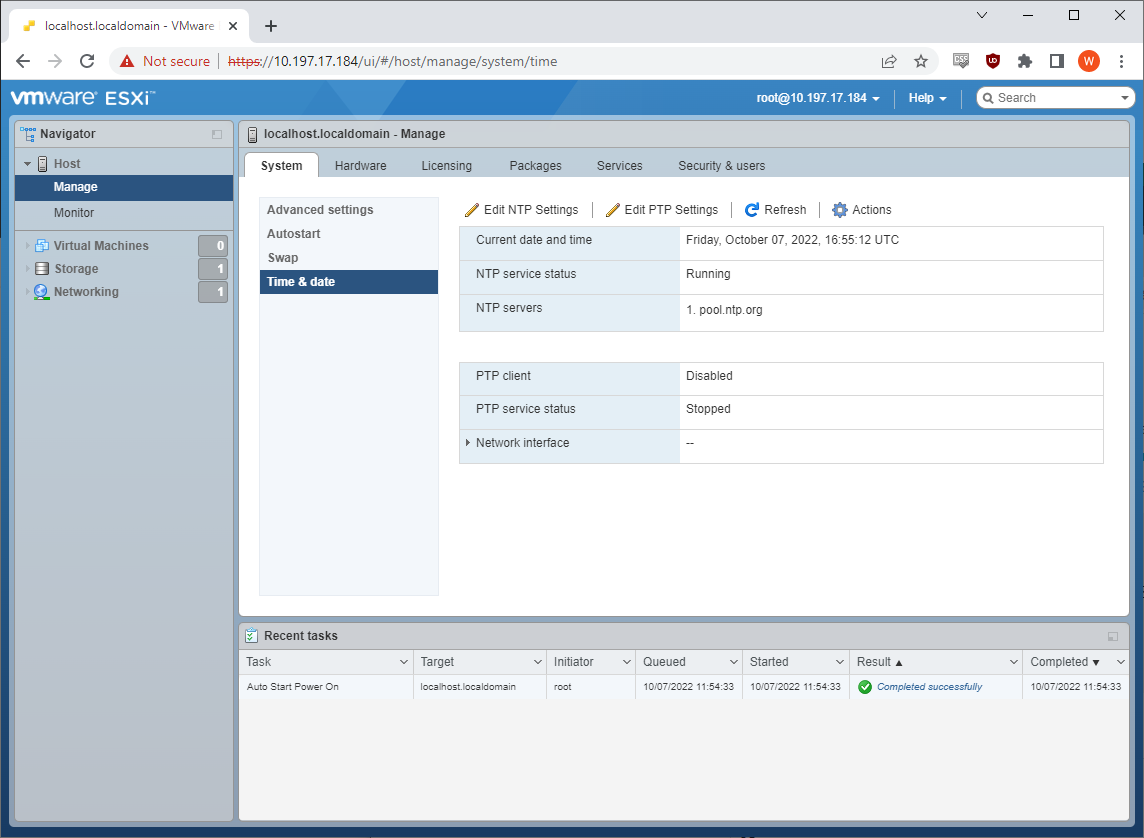

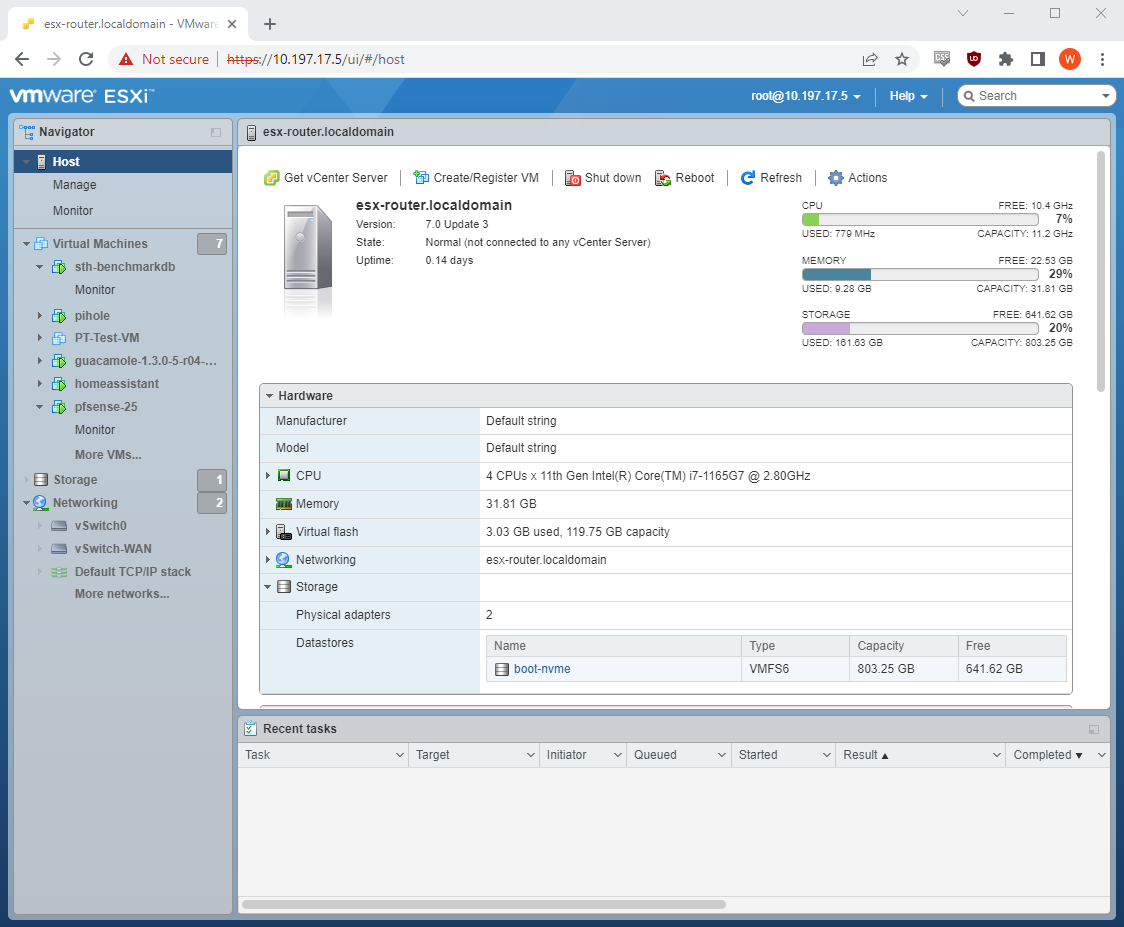

With ESXi installed, I could log in via the web interface. From here, the very first thing I always do is configure NTP, enable the Autostart feature, and apply my licensing. I own a paid ESXi host license, which will come into play when I use Veeam, but for most purposes, a free ESXi license will work fine here.

Next up was to configure my networking.

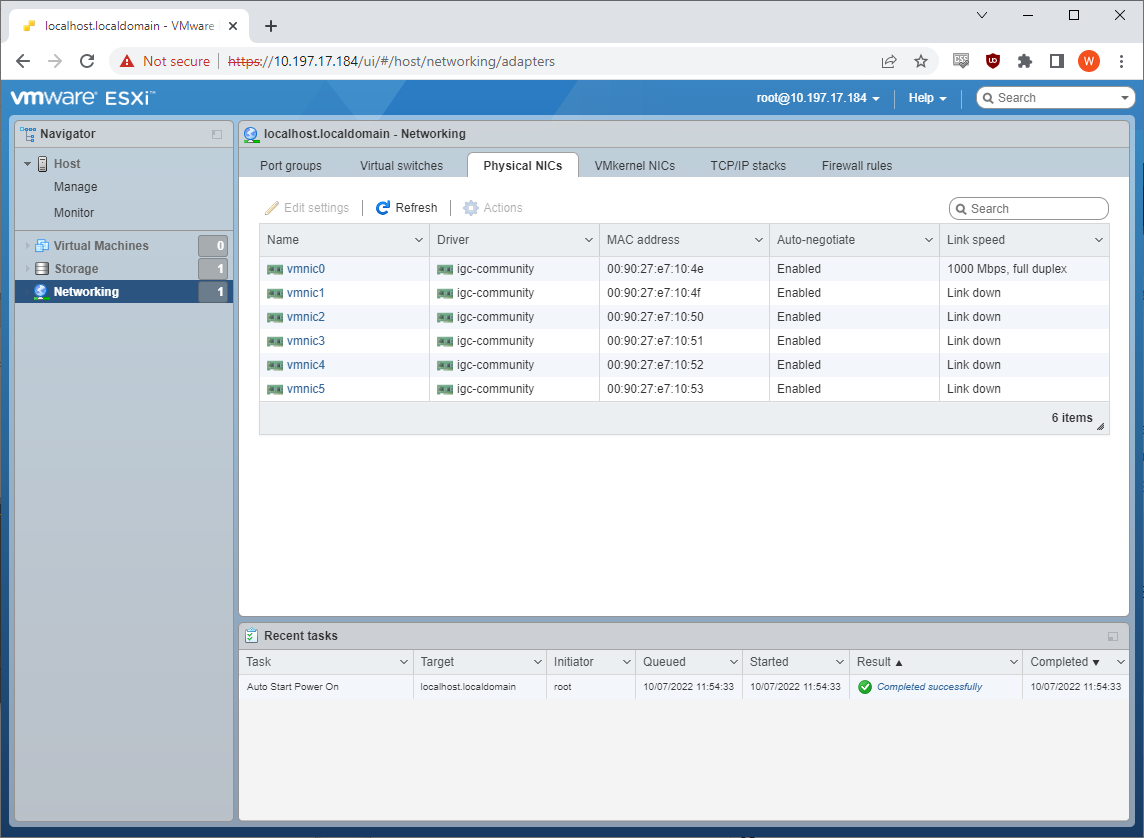

ESXi happily saw all six of the network interfaces on my system, and vmnic0 is going to act as the uplink to my home LAN. I will define vmnic1 as the physical WAN port where my internet will plug in. Doing that requires defining a virtual switch.

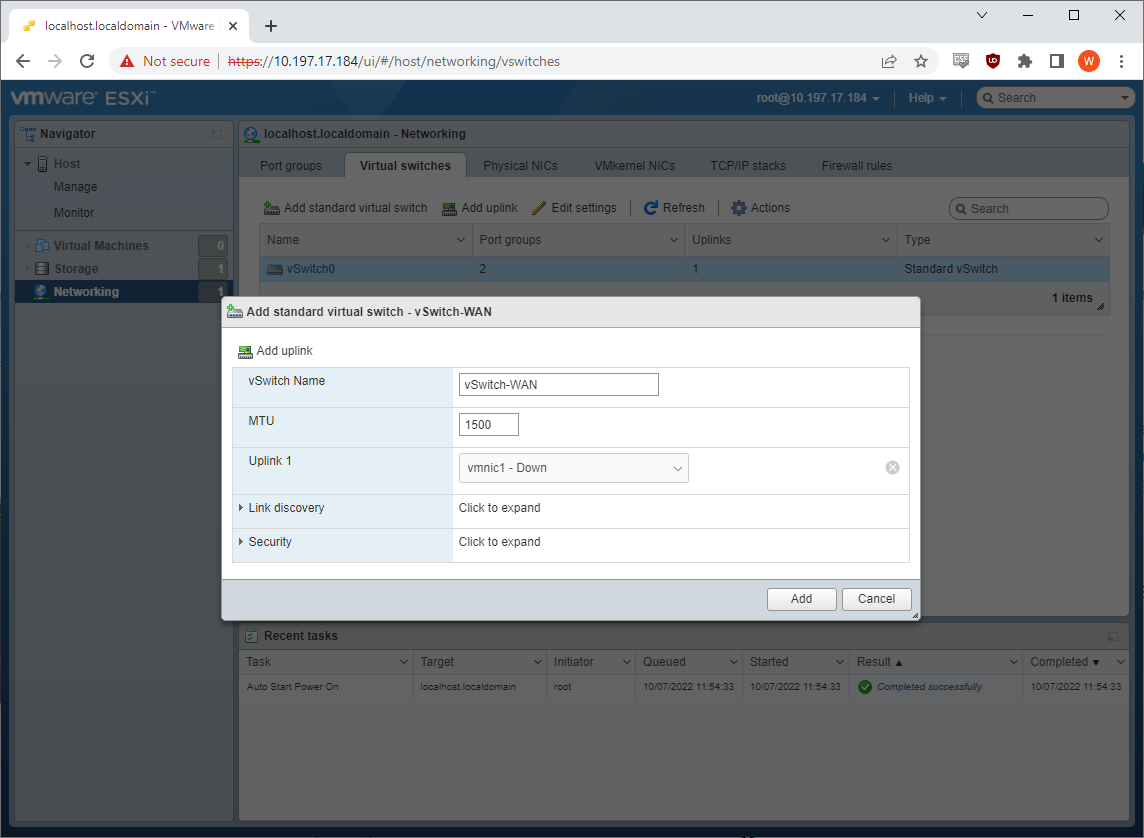

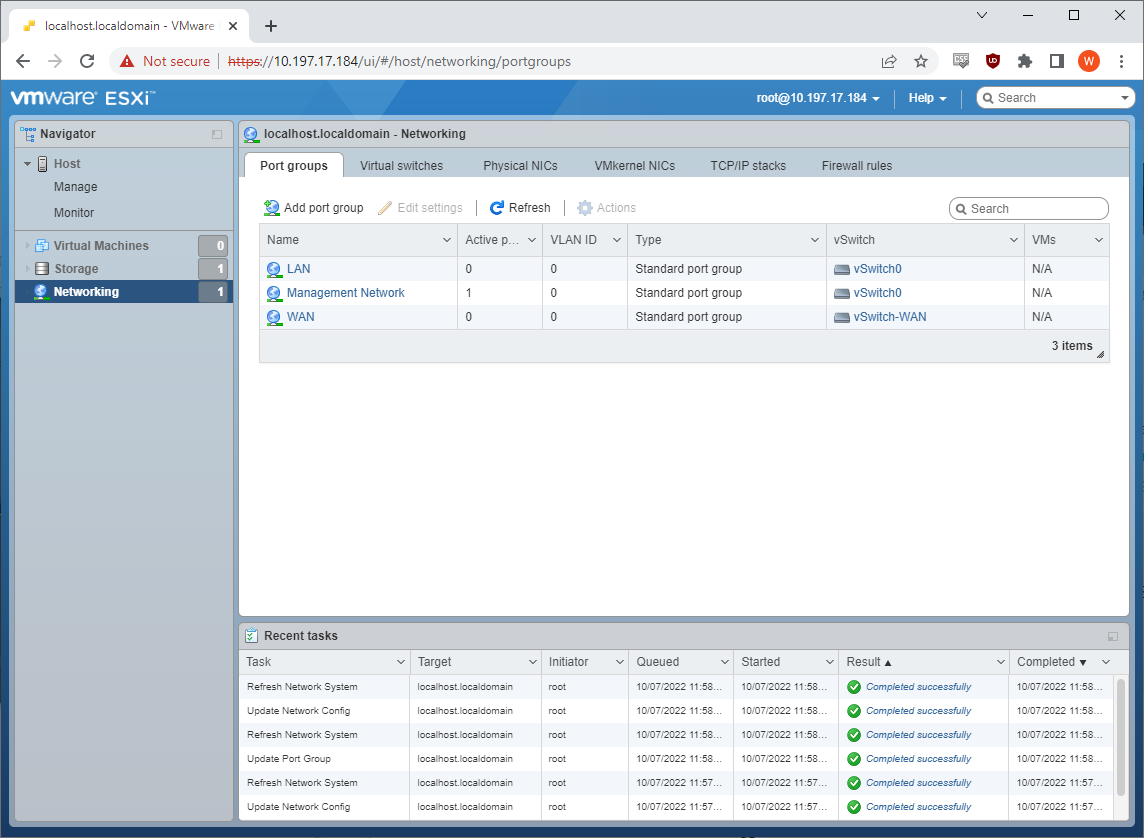

I named my new virtual switch vSwitch-WAN and assigned vmnic1 to it. I then swapped over to the Port groups tab and set up two port groups.

I renamed the original “VM Network” port group on vSwitch0 to LAN, and created a new port group on vSwitch-WAN called WAN. Now, when I get my pfSense VM running, I can give it two network adapters: one on the LAN and one on the WAN.
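The mapping from a VM's virtual NIC to a physical port goes through two layers: the NIC attaches to a port group, and the port group lives on a vSwitch with physical uplinks. The Python sketch below models the layout described above as plain data and traces each pfSense adapter to its physical port. It is an illustration, not an ESXi API. "Management Network" is the port group ESXi creates on vSwitch0 by default, and the adapter names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VSwitch:
    name: str
    uplinks: list[str]  # physical NICs (vmnicN) bound to this switch
    port_groups: list[str] = field(default_factory=list)


# vmnic0 uplinks the home LAN (and ESXi's own Management Network);
# vmnic1 is the physical WAN port.
SWITCHES = [
    VSwitch("vSwitch0", ["vmnic0"], ["LAN", "Management Network"]),
    VSwitch("vSwitch-WAN", ["vmnic1"], ["WAN"]),
]

# Hypothetical pfSense VM: one virtual NIC per port group.
PFSENSE_NICS = {"Network adapter 1": "LAN", "Network adapter 2": "WAN"}


def physical_path(port_group: str) -> tuple[str, list[str]]:
    """Return the vSwitch and physical uplinks that carry a port group."""
    for switch in SWITCHES:
        if port_group in switch.port_groups:
            return switch.name, switch.uplinks
    raise KeyError(f"no vSwitch carries port group {port_group!r}")


for nic, port_group in PFSENSE_NICS.items():
    switch_name, uplinks = physical_path(port_group)
    print(f"{nic}: {port_group} -> {switch_name} -> {', '.join(uplinks)}")
```

Keeping the WAN on its own vSwitch means the only VM that can see raw internet traffic is the one you deliberately attach to the WAN port group.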

Migrating with Veeam

Since I am moving from an existing ESXi server to a new one, and I have a paid ESXi license, I had a few ways to migrate VMs between the hosts. I could set up a vCenter Server and use VMware’s built-in vMotion, but honestly, that is a lot of trouble for a one-time move.

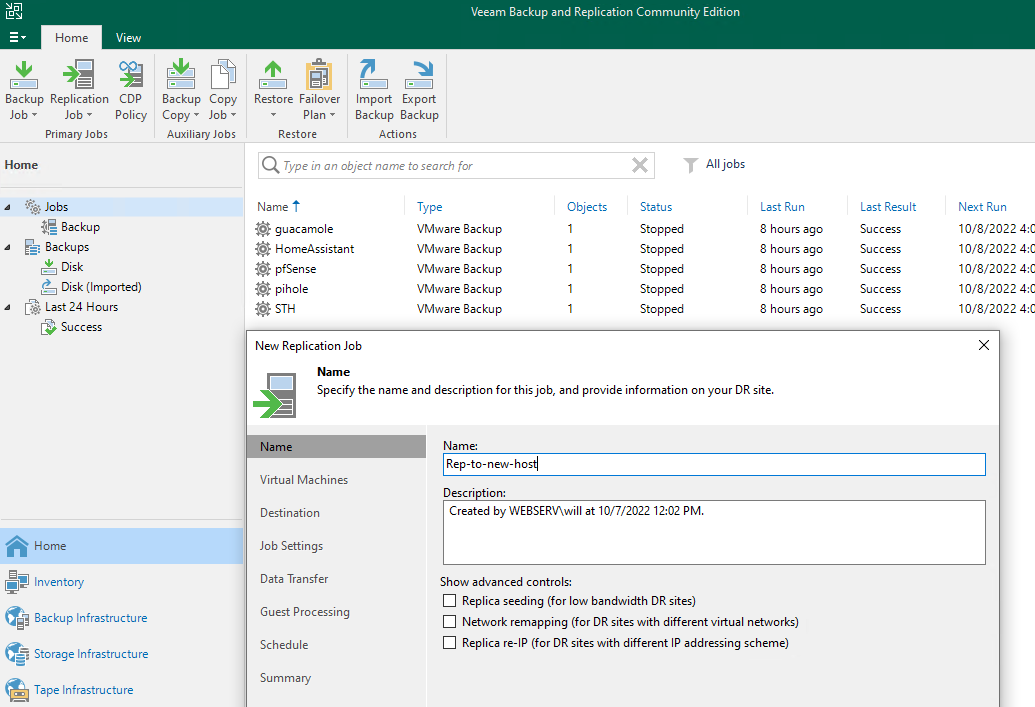

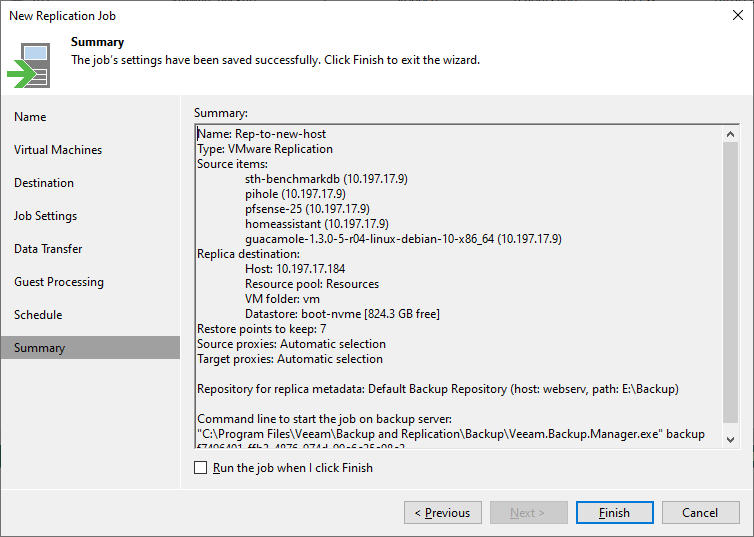

The better option for me was to use Veeam. I already run Veeam on a server at my house to back up the VMs running on my ESXi host, so it was a relatively simple thing to set up a replication job between the old and new host.

I set up the new replication job, selected all my VMs, and fired it off.

A little while later, my new ESXi host had a copy of all of the relevant VMs from the old host. All that was left to do was to power down the existing ESXi host, move the RAM to the new host, plug the new host in and boot it up.

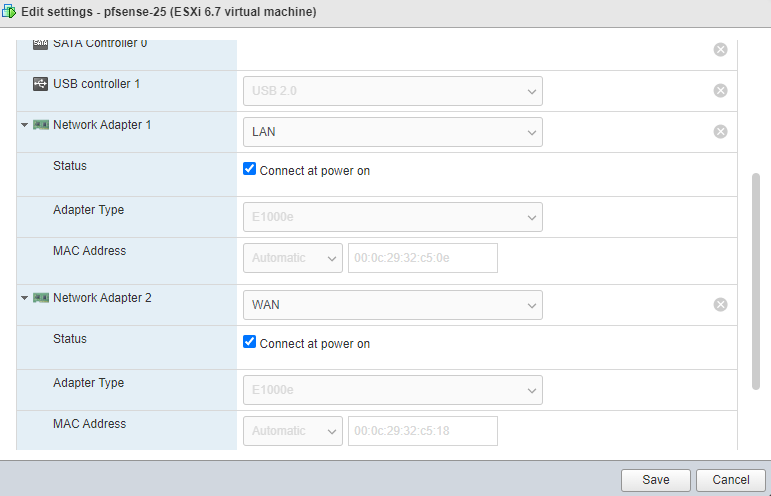

Once the new host was powered on, I had to edit each VM and choose the port group that each virtual NIC would connect to. In the screenshot above, you can see my pfSense VM, which has two NICs.

And with that, I was done! I powered all my VMs up, starting with pfSense and Pi-hole, and went to make sure everything was working.
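Starting pfSense and Pi-hole first matters because the other VMs expect routing and DNS to be available when they boot. ESXi's Autostart feature, enabled earlier, lets you order VM startup. One way to decide that order is a topological sort over a dependency map. The Python sketch below does this with the standard library's `graphlib`. The dependency map is a hypothetical example, not something ESXi reads.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical dependency map: each VM lists the VMs that must already be up.
# pfSense routes traffic and Pi-hole answers DNS, so the rest wait for both.
DEPENDS_ON = {
    "pfSense": set(),
    "Pi-hole": {"pfSense"},
    "Home Assistant": {"pfSense", "Pi-hole"},
    "Guacamole": {"pfSense", "Pi-hole"},
}

# static_order() yields every VM after all of its dependencies
# (and raises graphlib.CycleError if the map contains a loop).
start_order = list(TopologicalSorter(DEPENDS_ON).static_order())

for position, vm in enumerate(start_order, start=1):
    print(f"{position}. {vm}")
```

VMs with no dependency between them, such as Home Assistant and Guacamole here, can start in either order.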

My internet was up and working just fine. All of the other VMs booted up just as easily, and I have a ton more storage and performance available to play around with. I have started working on iGPU passthrough, which I have never done before, and I have high hopes of offloading my Plex transcoding work to this little box.

Keeping it Cool

Now, STH readers who read Rohit’s article may remember that there were two models of the Topton i7-1165G7 unit: one that performed well thermally and one that did not. I received the one that did not, and it gets uncomfortably hot to the touch. My solution cost me less than $20, though it did add a bit of “DIY jank” back to my setup.

As you can see from the picture, I simply put a 140mm fan blowing straight down onto the unit, with fan grilles attached to keep fingers and wires out of the blades. The fan is powered via a USB-to-PWM-header adapter that I already had on hand from a different project. Because it is a 12V fan running on USB’s 5V output, it spins at a very low RPM, which has the benefit of keeping it dead silent. Eventually, I will properly mount this fan with some kind of 3D-printed scaffolding, but for now, it is just resting atop the system. Thanks to this “solution,” my temperatures dropped by nearly 30°C, and the whole unit is nice and cool.

Unfortunately, this also means that my fanless system now has a fan, albeit a quiet one.

Final thoughts

Overall, I am very happy with both the process and the end result. Thanks to the Veeam replication process, my home internet was down for less than 20 minutes in total. The new system takes up less physical space and uses less power than the old Core i5-6500T system; the trade-off, perhaps, is that it looks less cool than my old wooden box. Most importantly for me, the new system is significantly faster, has more storage, has better network uplinks, and lets me move to a much newer version of ESXi. With this new capacity, I can think of a bunch of projects to test, starting with iGPU passthrough. And as more 2.5GbE devices arrive in my house, I will be ready for them.

Setting up your router on a virtualization host is not for everyone, but hopefully my journey was at least interesting to read about. I will be around to answer questions in the comments or forums, and I am always open to suggestions for other interesting services to add to my router host!

I appreciate the screenshots. For us novices, far too many people gloss over the details, but the screenshots really help us see how things are configured.

Thank you very much for the hard work on these articles. One suggestion: you write that ESXi is “the one that I encounter most often in my daily professional life”. This site is called Serve The Home (not Serve The Professional), so shouldn’t you be addressing the home market and using software that people use at home? You mention Proxmox, which would be a good alternative given that it is free for non-commercial home use.

Thanks again.

John,

That’s a good point. However, for lots of folks their home labs are built to simulate their work environment or potential work environments. Folks build a home Cisco network lab to practice for their Cisco exams, or build a home Active Directory environment to practice for their Microsoft exams, etc. I run ESXi at home because it helps keep me in practice for what I encounter at work. Given that it has a free version that can do everything except the Veeam migration, I also think ESXi is an acceptable piece of software to run in a home lab.

I want to experiment with Proxmox and Unraid and such, but not because ESXi is unsuitable, just because I am curious!

@John Anderson – That’s not what the ‘home’ in STH means. From the about page of this website…

‘STH may say “home” in the title which confuses some. Home is actually a reference to a users /home/ directory in Linux. We scale from those looking to have in home or office servers all the way up to some of the largest hosting organizations in the world. This site is dedicated to helping professionals and enthusiasts stay atop of the latest server, storage and networking trends.’

Great writeup Will.

@JA I imagine Will’s homelab efforts somewhat inform at least a few of his professional choices. Probably vice versa on that statement as well. As such, running ESXi at home and testing updates and deployment methods (to name a few) before updating and deploying professionally is likely his angle here. I don’t have the need currently, but I did exactly this from 2010 – 2020.

Watching to see how well this hardware transcodes for Plex.

Spot on Rob Pinder. Also, quite a lot of folks that use ESXi on the servers we review at work also use ESXi in their home labs on smaller systems that we review.

Great article! If you manage to get Plex working with the iGPU under ESXi, please post a follow-up, as it is something the community has yet (to my knowledge) to get working with 11th-gen Intel.

Leidrin,

I’m running into some of that now! I can be patient though; my current non-accelerated Plex server is working fine, it just draws more power than I would like.

I got my first VMware product in 1999, when the only product was what is today the Workstation variant. I’ve played with ESXi and vSphere, but for hobby use a vSphere license is a tad expensive, especially the HA variant, which I really liked for a terminal server use case. I still use Workstation on Windows…

In recent years I’ve drifted to oVirt on a CentOS base because it offered three-node HCI clusters, but its quality issues were driving me mad. The mess that Red Hat/IBM has created with CentOS, and giving up on Gluster and RHV, doesn’t help.

So I tried XCP-ng, which is wonderfully easy to use and without apparent bugs, but its ancient technology base (Linux 4.19) causes issues with modern hardware; e.g. the i7-1165G7-based NUC I use loses its iGPU and its 2.5GbE NIC. I didn’t care about the NIC, because I use a 10Gbit Thunderbolt NIC, but losing the iGPU was a bit of a bother.

It also won’t be able to handle hybrid P/E-core CPUs, and I had issues on Ryzen 5000 systems that took a while to sort out (the developer/support community for XCP-ng is first class!).

So I’m back to oVirt, but using the Oracle variant, the equivalent of the commercial Red Hat RHV 4.4 with EL8 and oVirt 4.4 baselines. It seems to have far fewer bugs and avoids the CentOS 8 woes, while still supporting HCI based on GlusterFS.

With KVM at its base, it’s quite easy to pass through PCIe devices like NICs (as well as GPUs and NVMe devices), which is essential for adequate pfSense performance in a VM but precludes live migration of those VMs.

My pfSense still runs bare metal on an iBASE mini-ITX Q67 i7-7700T based system with 8 Gbit NICs that’s limited to 16GB of DDR3 RAM. With a Noctua cooler on that 35 Watt TDP CPU it’s not fully passive, but “unnoticeable”.

But today I’d certainly use such a quad-NIC box, and with this hardware’s 64GB RAM capacity, virtualization makes a lot of sense. I’d still want the Thunderbolt port for the 10Gbit NIC, though.

abufrejoval: Perhaps give Ubuntu with LXD a try? It has more current kernels, and LXC/LXD is pretty easy to use and supports both VMs and containers.

Is ESXi 7.x any better with vSwitch and MTU larger than 1500?

I saw MTU 1500 in your screenshot, and in my past experience, if you tried to use jumbo frames with a vSwitch in ESXi 6.x, it would hang the network interface.

Caused some serious performance degradation for us with our iSCSI storage setup at my old job. (Better slow performance, than non-functional though.)

James,

In the datacenter environment that I manage, I’ve been running Jumbo Frames on vSwitches since the ESXi 5.x days with no problems. Perhaps the problem was NIC specific or something? My datacenter environment is around 20 ESXi hosts and they’re all set for jumbo frames, now running ESXi 6.7.
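For a rough sense of what jumbo frames buy on a bulk TCP stream such as iSCSI, the Python sketch below compares how much of the wire carries TCP payload at MTU 1500 versus 9000. It counts only Ethernet, IPv4 and TCP overhead and assumes no IP or TCP options; real iSCSI traffic adds its own headers on top.

```python
# Per-frame cost on the wire for Ethernet: 8-byte preamble/SFD,
# 14-byte header, 4-byte FCS and a 12-byte inter-frame gap.
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # 38 bytes

# IPv4 + TCP headers, assuming no options (TCP timestamps would add 12).
IPV4_TCP_HEADERS = 20 + 20


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of line rate that carries TCP payload at a given MTU."""
    payload = mtu - IPV4_TCP_HEADERS
    return payload / (mtu + ETH_WIRE_OVERHEAD)


for mtu in (1500, 9000):
    eff = tcp_payload_efficiency(mtu)
    print(f"MTU {mtu}: {eff:.2%} of line rate, ~{eff * 10:.2f} Gbit/s on 10GbE")
```

Under these assumptions the gain is only about four percentage points (roughly 94.9% versus 99.1%). The larger practical benefit is usually that the host handles about one-sixth as many packets for the same data. The MTU has to match end to end (vSwitch, VMkernel port, physical switch and storage target) for that to work.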

Hi Will, good stuff! You said, “it just draws more power than I would like.” Are you running it with a power meter? Can you give us some numbers, loaded, unloaded, average?

@Nils: I did evaluate LXD around 2008, when I was searching for a lighter virtualization solution. At that time we decided to go with OpenVZ, which seemed much more mature, worked with CentOS, and allowed for PCI-DSS-compliant production. That infrastructure is still running on its last legs and was almost impossibly economical and great to use.

At that time, control groups were still very much beta, and my impression is that they are still a mess. But Debian and LXD build heavily on them.

In many ways RHV/vSphere/oVirt are a regression, and I would always have preferred a combined setup that lets me use *everything*: IaaS containers and VMs as well as PaaS containers, by nesting Docker/Podman on OpenVZ or VMs.

But Virtuozzo took some strange turns, and OpenVZ containers didn’t work with CUDA, where KVM/RHV/oVirt does really well. Since I need that, it’s VMs for now, and I’ve got years of experience with Enterprise Linux and RHV/oVirt.

When you need to go deep into the innards of production Linux these days, switching between Debian- and Fedora-based distributions is like switching from German to Dutch: fine for a tourist, but it takes a year before you can hold a professional job.

But I’ll take your suggestion and have another look: perhaps some things did actually improve during the last decade.

Sorry for the ghastly typos… I need an edit button!

Eric,

I was referring to my current Plex server, which is a Xeon E5-2650v4 with 128GB of registered RAM. I don’t have it on a power meter, but I would wager that the idle power draw of that system is higher than the load power draw of this new router! I do things other than just Plex with that server, but I would still like to reduce it and Plex can be one of the big CPU draws.

@Rob: that explains a lot. I did wonder what the home use case for 8-socket Sapphire Rapids might be.

@Will: thanks for the clarification. It might also be a US vs. EU thing. I do get the feeling that Europe is more exposed to Proxmox (unsurprising, given that the product originates from Austria).

I did something similar just this weekend with my pfSense router (bare metal to virtualised on Proxmox), and it was very smooth. In my case, the move was precipitated by a jump in electricity costs, and virtualising pfSense allows me to consolidate everything. Except storage…

@Leidrin: I can recommend Proxmox for this. I have had Plex with hardware encoding running in a lightweight LXC container for years now. Zero issues.

@Will Taillac: Interesting article (thanks for writing it) but it brings up several questions:

(1) You didn’t explain why you picked a model with 6 NICs instead of just 2 (or 4).

(2) Why did you pick the “less good” model that needs a fan?

(3) Why is your “Management Network” Port Group on the same NIC as your LAN? Ease of use?

(4) I didn’t know there was a free ESXi license (I’m a Linux sysadmin at work with many ESXi hosts and several vCenters). What limitations does it have? I currently use Proxmox at home and am intrigued. Proxmox works OK for my modest needs (one Fedora VM and one pfSense VM) but to use the same thing at work and at home would be handy.

(5) I’m confused about Veeam. Based on a cursory glance at their Web site I thought it was just a Cloud backup service/product. How does using Veeam require a paid ESXi license?

I probably have more questions but that’s enough for now. lol

Super

1. Because both models Patrick/STH had were 6-NIC models. I was angling for “free to me” on the cost here.

2. I didn’t; Patrick did. Rohit kept the better one.

3. Yep. I could technically separate out the management network so that it is on a separate VLAN from any client networking, but this is my home and I don’t have multiple VLANs.

4. Yep! The free license still requires registration and such on VMware so they can market at you. Technically it has some limitations, but they will not bother most folks. No support is available, only 8 vCPU cores can be assigned to a VM, and you miss out on vCenter management and have to instead use the built-in ESXi web console. The biggest missing feature, though, has to do with the way the vStorage API is restricted; this means you cannot take hypervisor-level backups from a free-license host. Veeam will not work against a free-license host for this last reason.

5. See above. Veeam is a hypervisor-host level backup software; assuming that ESXi is licensed you can have Veeam installed somewhere else on the network and point it at the ESXi host. Then you can back up or replicate any VM located on that host, without the VMs themselves even being aware it is taking place. You can even back up or replicate host-to-host when a VM is powered down, which makes for a 100% clean and safe migration path between ESXi hosts. However, as noted above, it does require a paid ESXi license. Veeam itself has a free license for up to 10 “objects” or VMs that it would manage.
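The free-tier limits described in the two answers above can be checked against a planned setup before buying anything. The Python sketch below encodes only the limits stated there (8 vCPUs per VM and no Veeam access on free ESXi, 10 objects for free Veeam). Licensing terms change, so treat the constants as assumptions to verify. The vCPU counts in the example are hypothetical.

```python
# Limits as described in the replies above; verify current VMware and
# Veeam licensing terms before relying on them.
FREE_ESXI_MAX_VCPUS_PER_VM = 8
VEEAM_FREE_MAX_OBJECTS = 10


def free_tier_gaps(vm_vcpus: dict[str, int], esxi_licensed: bool) -> list[str]:
    """List the ways a planned setup would exceed the free tiers."""
    gaps = []
    if not esxi_licensed:
        # Free ESXi restricts the vStorage APIs that Veeam relies on.
        gaps.append("free ESXi: Veeam cannot back up or replicate this host")
        for vm, vcpus in vm_vcpus.items():
            if vcpus > FREE_ESXI_MAX_VCPUS_PER_VM:
                gaps.append(
                    f"free ESXi: {vm} has {vcpus} vCPUs "
                    f"(max {FREE_ESXI_MAX_VCPUS_PER_VM})"
                )
    if len(vm_vcpus) > VEEAM_FREE_MAX_OBJECTS:
        gaps.append(
            f"Veeam free: {len(vm_vcpus)} VMs (max {VEEAM_FREE_MAX_OBJECTS})"
        )
    return gaps


# Hypothetical vCPU counts for the VMs mentioned in the article.
home_vms = {"pfSense": 2, "Pi-hole": 1, "Guacamole": 2, "Home Assistant": 2}
print(free_tier_gaps(home_vms, esxi_licensed=True))
print(free_tier_gaps(home_vms, esxi_licensed=False))
```

For a small home router host like this one, the Veeam restriction on free ESXi is the only limit that comes into play.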

I thought about an Intel-based router box with pfSense. The prices are just too dang high!

$400 for an N5105 box, and some J4125 boxes are even at that price point!

I ended up with a $120 MikroTik router. Yeah, I know what you heard about the hacks. Those were routers that hadn’t had a software update in years, had remote management on, and used the default root user and password. Any router will have a problem with that. It’s very easy to update the router, turn off remote admin, and change the root account/password.

It’s a very capable quad-core ARM unit, and it can run Linux Docker containers. I set up Pi-hole on mine to run next to the router software. It took about 10 minutes and works really well.

The interfaces will top out at around 1Gbit on mine, but that’s all the connections can do. They have a ton of other products with 2.5GbE, and the prices on those are quite reasonable.

All of their newer devices run the same software, from routers to switches. Definitely worth a look, but you really benefit from some Linux and heavy networking experience to own one.

i7 w00+! Chain all the bpf you want! Add that exhaust turbo and shove it into some woodworking you made with shader code and an axe on a 3 axis gantry (incorporating or followed by same fano barrels to cut your finely tuned noise.) No project complete until it encompasses email to admin. and noiseless blow-dry.

Any reason you’re using Pi-hole instead of pfBlocker?

AdrianTeri,

I didn’t know what pfBlocker was when I was introduced to Pi-hole. By the time I found out about pfBlocker, I was already happy with my initial Pi-hole deployment.

I would like to see some performance benchmarks – something like iperf3 or wrk would be good tools to consider.

This would show how well the firewall does in conditions as close to real-world as possible. Running a baseline and then adding some of the other services, like IDS, would be good to see.
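One way to make the baseline-versus-IDS comparison suggested above repeatable is to save iperf3's JSON output (its `-J` flag) for each run and compare the summaries. The Python sketch below reads the TCP summary that iperf3 writes under `end` and computes the percentage lost. It handles TCP tests only, and the helper names are hypothetical.

```python
import json


def tcp_throughput_gbps(iperf3_json: str) -> tuple[float, float]:
    """Return (sent, received) Gbit/s from the JSON output of a TCP iperf3 run."""
    end = json.loads(iperf3_json)["end"]
    # For TCP tests, iperf3 summarizes each direction under "end".
    sent = end["sum_sent"]["bits_per_second"] / 1e9
    received = end["sum_received"]["bits_per_second"] / 1e9
    return sent, received


def throughput_loss_pct(baseline_gbps: float, with_service_gbps: float) -> float:
    """Percentage of baseline throughput lost after enabling a service (e.g. IDS)."""
    return 100.0 * (baseline_gbps - with_service_gbps) / baseline_gbps


def load_received_gbps(path: str) -> float:
    """Read a saved `iperf3 -J` result file and return received Gbit/s."""
    with open(path, encoding="utf-8") as f:
        return tcp_throughput_gbps(f.read())[1]
```

For example, save `iperf3 -c <server> -J > baseline.json` before enabling IDS and the same command into `with-ids.json` afterwards (both file names hypothetical), then call `throughput_loss_pct(load_received_gbps("baseline.json"), load_received_gbps("with-ids.json"))`. Comparing the received figure reflects what actually made it through the firewall.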

This article showcases the impressive performance of the Topton fanless Intel Core i7-1165G7 box, highlighting its ability to handle complex virtualization tasks without any cooling fans. The device’s performance with VMware ESXi, Veeam, and pfSense is a testament to its capabilities and potential for use in various industries.

This is a great article, a bit over my head technically (because I’ve never used ESXi), but thoroughly in line with my wish to have a single appliance between my modem and the internet, hosting multiple VMs running OpenWrt, Pi-hole, WireGuard, etc.

That would justify buying a single badass server with lots of RAM, CPU & storage, then slicing up those resources appropriately.

Thanks much!