The Supermicro AS-1024US-TRT is notable because it is designed for a very competitive market. This dual-socket server follows Supermicro’s “Ultra” design language. Practically, that means the layout and many of the features and design choices largely mirror those found on the company’s X12 Ice Lake Xeon servers. The first area of competition is therefore this AMD EPYC 7003 / 7002 platform versus the Intel Xeon offerings. The second is against the Dell EMC PowerEdge, HPE ProLiant, and Lenovo ThinkSystem lines. Supermicro has other server lines focused on heavy cost optimization, GPU/storage, or simply density. This particular server is designed to compete head-to-head in the high-end and high-volume 1U server market.

Supermicro AS-1024US-TRT Hardware Overview

As we have been doing in recent reviews, we have the hardware overview for this system split into two parts. First, we are going to look at the exterior of the chassis. We will then get into the interior of the system. If you want to check out a different format, we have a YouTube video on this one.

As always, we suggest opening this video in a YouTube tab for a better viewing experience.

Supermicro AS-1024US-TRT External Overview

The outside of the server is defined by two major features. The first is simply the depth. This is a 29″ deep server, which is shallow enough to fit in the vast majority of 4-post data center racks. Some newer servers are deep enough that they face interference in lower-cost colocation racks with zero U PDUs, but Supermicro has a very flexible solution here. The other major feature is the drive bay layout.

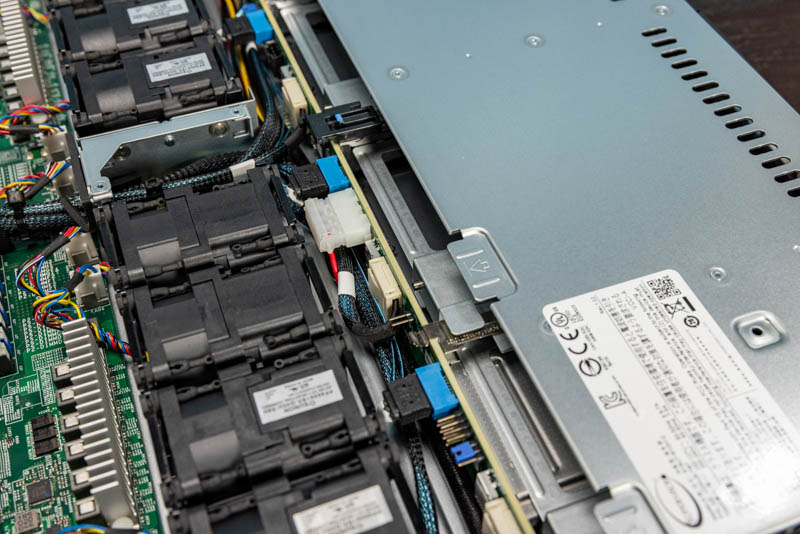

Drive bays are four 3.5″ bays, but something may look different with our test system. The 3.5″ bays can utilize SATA (mostly for 3.5″ hard drives), SAS3 (using an additional SAS3 RAID controller/ HBA), or NVMe. Our system is set up for NVMe so we have the traditional orange tabs on the drive trays. Since most NVMe SSDs are 2.5″, that means that this is not the densest 2.5″ configuration available. Here is a quick shot of the backplane driving this flexibility with a Kioxia CD6 NVMe SSD installed.

The reason we have a 4x 3.5″ configuration is simply flexibility. Many server buyers want to have the option for NVMe and hard drives in the same 1U chassis, something that is not practical with a dense 2.5″ configuration. We do wish that Supermicro had an option for 7mm SSDs or E1.S SSDs in this chassis, but Supermicro has a number of SKUs that define what the front panel layout is. This is a 4x 3.5″ chassis, but Supermicro has dense 2.5″ SKUs as well.

The rear of the chassis has a fairly standard layout. The primary I/O is handled by two USB 3.0 ports. There is also a serial port and a VGA port on the rear of the system. The serial and VGA ports may seem ancient by modern standards, but that is what many KVM carts in data centers are designed to service. There is also a network port outlined in bright green. At STH, we use green network cables in the data centers for management, but not quite this bright a green/yellow color.

One can see the networking is provided by two RJ45 ports. These 10GBase-T ports are serviced by an Intel X710-AT2 NIC that one can find on the proprietary 1U riser. We will discuss the rest of the riser in our internal overview, but the advantage of this setup is that it allows the same motherboard and chassis to be used with different networking options. Since the riser has both the NIC and additional PCIe connectivity, it effectively does not use a slot. Supermicro has different options here as well, but we have the dual 10GBase-T unit.

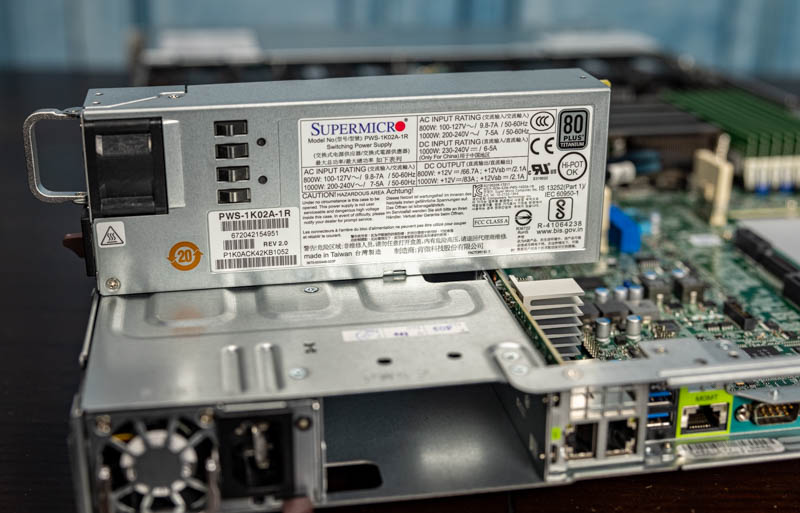

In terms of power, the unit features redundant 1kW power supplies. These are 80Plus Titanium units, whereas many of the servers we review in this class still have 80Plus Platinum power supplies. Titanium units cost a bit more, but the increased efficiency can lower the power consumption of servers by a few percent, freeing up power budget elsewhere.
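To put "a few percent" in rough numbers, here is a minimal sketch of the arithmetic. The 500W load and the 94% / 96% efficiency figures are assumptions for illustration (nominal 50% load points for Platinum and Titanium at 230V), not measurements from this review. The helper name `wall_power_watts` is hypothetical.

```python
def wall_power_watts(dc_load_watts: float, efficiency: float) -> float:
    """Return the AC input power needed to deliver a DC load at a given PSU efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return dc_load_watts / efficiency


# Assumed values for illustration only, not measured on this server.
DC_LOAD_W = 500.0      # hypothetical load, about half of one 1kW supply
PLATINUM_EFF = 0.94    # assumed nominal Platinum efficiency at 50% load
TITANIUM_EFF = 0.96    # assumed nominal Titanium efficiency at 50% load

platinum_w = wall_power_watts(DC_LOAD_W, PLATINUM_EFF)
titanium_w = wall_power_watts(DC_LOAD_W, TITANIUM_EFF)
saved_w = platinum_w - titanium_w
saved_pct = 100 * saved_w / platinum_w

print(f"Platinum wall power: {platinum_w:.1f} W")
print(f"Titanium wall power: {titanium_w:.1f} W")
print(f"Savings: {saved_w:.1f} W ({saved_pct:.1f}%)")
```

Under these assumptions the difference is roughly 11W per server, or about 2%. Real-world savings depend on the actual load point and input voltage.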

The outside of the chassis is great, but let us get inside to see what else the system has to offer since that gets to configuration options.

I wish STH could review every server. We use 2U servers but I learned so much here and now I know why it’s applicable to the 2U ultras and Intel servers.

Hi Patrick, does AMD have a QuickAssist counterpart in any of their SKUs? Is Intel still iterating it with Ice Lake Xeon?

I’m fascinated by these SATA DOMs. I only ever see them mentioned by you here on STH, but every time I look for them the offerings are so sparse that I wonder what’s going on. Since I never see them or hear about them, and Newegg offers only a single-digit number of products (with no recognition of SATA DOM in their search filters), I’m confused about their status. Are they going away? Why doesn’t anyone make them? All I see are Supermicro branded SATA DOMs, where a 32 GB costs $70 and a 64 GB costs $100. Their prices are strange in being so much higher per GB than I’m used to seeing for SSDs, especially for SATA.

@Jon Duarte I feel like the SATA DOM form factor has been superseded by the M.2 form factor. M.2 is much more widely supported, gives you a much wider variety of drive options, is the same low power if you use SATA (or is faster if you use PCIe), and it doesn’t take up much more space on the motherboard.

On another note, can someone explain to me why modern servers have the PCIe cable headers near the rear of the motherboard then run a long cable to the front drive bays instead of just having the header on the front edge of the motherboard and using short cables to reach the front drive bays? When I look at modern servers I see a mess of long PCIe cables stretching across the entire case and wonder why the designers didn’t try to avoid that with a better motherboard layout.

Chris S: It’s easier to get good signal integrity through a cable than through PCB traces, which is more important with PCIe 4, so if you need a cable might as well connect it as close to the socket as possible. Also, lanes are easier to route to their side of the socket, so sometimes connecting the “wrong” lanes might mean having to add more layers to route across the socket.

@Joe Duarte the problem with the SATA DOMs is that they’re expensive, slow because they’re DRAM-less, and made by a single vendor for a single vendor.

Usually if you want to go the embedded storage route you can order a SM machine with an M.2 riser card and 2x drives.

I personally just waste 2x 2.5” slots on boot drives because all the other options require downtime to swap.

@Patrick

Same here on the 2×2.5″ bays. Tried out the Dell BOSS cards a few years ago and downtime servicing is a non-starter for boot drives.

I should maybe add that Windows Server and (enterprise) desktop can be set to verify any executable on load against checksums, which finds a use for full-size SD cards for my shop (and greatly restricts choice of laptops). The caveat is that the write protection of an SD card slot depends on the driver and defaults to physical access equals game over. So we put our checksums on a Sony optical WORM cartridge accessible exclusively over a manually locked down VLAN.

(Probably more admins should get familiar with the object-level security of NT that lets you implement absolutely granular permissions, such as a particular DLL may only talk to services and services to endpoints inside defined group policies. The reason why few go so far is most likely the extreme overhead involved with making any changes to such a system, but this seems like just the right amount of paranoia to me.)