Here is a rumor we have heard a few times this week: Intel has a giant new AI supercluster being deployed. We are not just talking about a few hundred AI accelerators; the number might be many thousands. By “rumor,” we mean that we have an idea of the target node count, location, manufacturer, and so forth, but when we asked Intel, it simply pointed us to its Developer Cloud without confirming the scale.

Rumor on the Street: Intel Gaudi2 Getting an AI Accelerator Supercluster

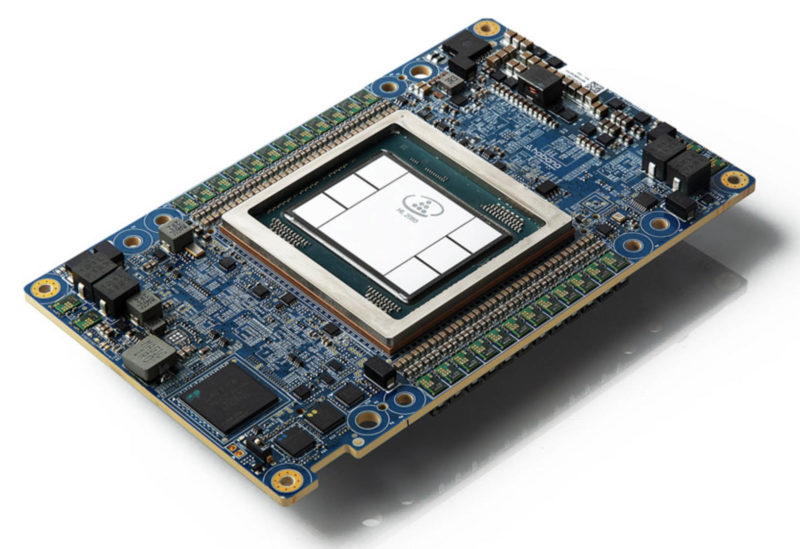

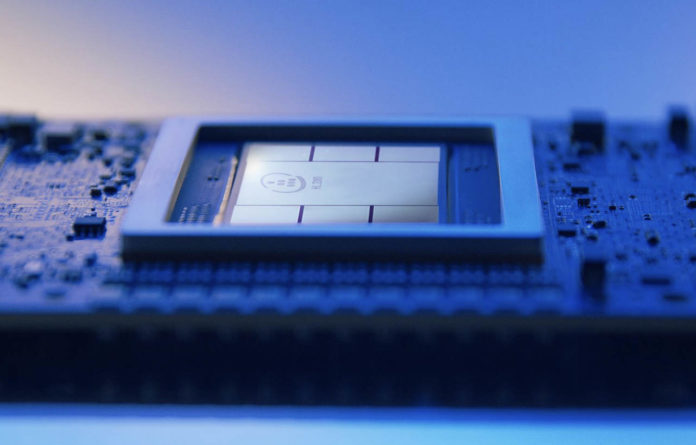

For those who do not know, Intel purchased Habana Labs in 2019 to fuel its AI accelerator ambitions. Habana’s original Gaudi training accelerator was in some ways ahead of its time. It used the Open Compute Project OAM (OCP Accelerator Module) form factor as an alternative to NVIDIA’s proprietary SXM modules. It also used Ethernet to help it integrate into hyperscale networks, instead of more vendor-specific interconnects like NVIDIA’s InfiniBand and NVLink or Intel’s Omni-Path.

After the acquisition, it took until last year for the next generation to arrive, which we covered in Intel Habana Gaudi2 Launched for Lower-Cost AI Training. Performance may not equal an NVIDIA H100 in training, but we are hearing the cost is well under half that of an H100. There is also a practical challenge. At Computex 2023, we kept hearing that the NVIDIA H100 had around a 42-week lead time (that likely varies a bit by vendor), and the A100 was hitting similar supply constraints, making it difficult to get NVIDIA cards in 2023. If you do not already have your 2023 order in with NVIDIA and want an AI cluster this year, then you are likely looking for alternatives.

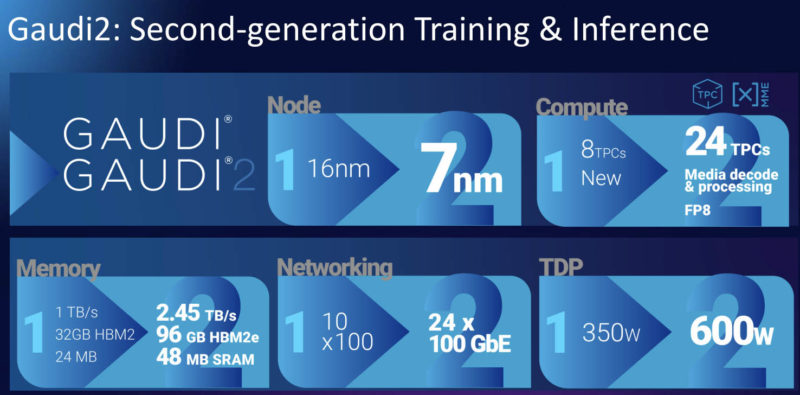

Intel Gaudi2 was a massive increase in both performance and memory over the previous generation, making it a higher-end solution capable of scale-out AI training. Scale-out is where Intel is now doing something unique.

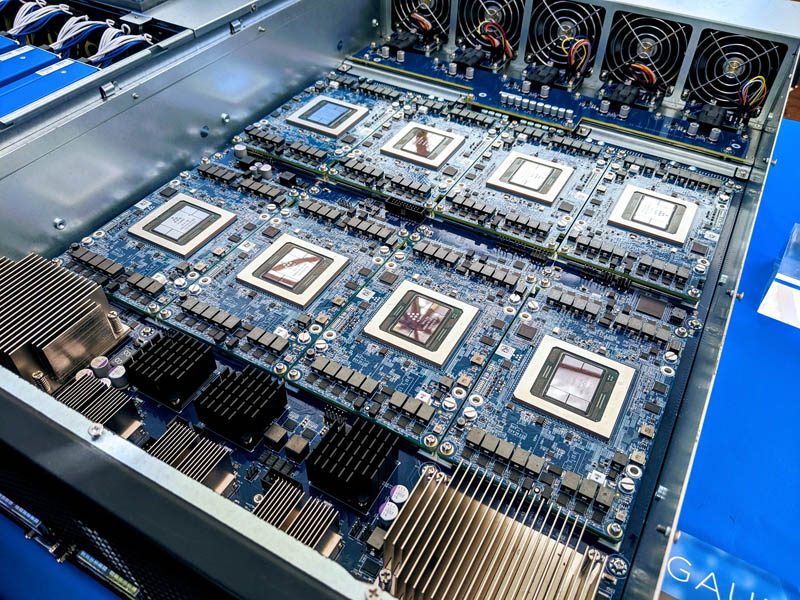

Intel has said that it is making Gaudi2 available in the Intel Developer Cloud. What Intel has not discussed previously is the scale. From what we are hearing, Intel is working toward a cluster of more than 1,000 Gaudi2 nodes. Assuming 8 OAM modules per node, that would be 8,000+ AI accelerators. The word on the street is that the target might be more like 10,000+ accelerators.
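The node-to-accelerator arithmetic above is simple, but it helps to make explicit what the rumored 10,000+ figure implies for node count. The sketch below is a hypothetical Python helper, assuming (as the estimate above does) that every node is fully populated with 8 OAM modules; the function names are illustrative only and do not come from Intel.

```python
# Assumption (from the estimate above): each Gaudi2 node holds 8 OAM modules.
ACCELERATORS_PER_NODE = 8


def accelerators_for_nodes(nodes: int, per_node: int = ACCELERATORS_PER_NODE) -> int:
    """Total accelerators in a cluster of fully populated nodes."""
    return nodes * per_node


def nodes_for_accelerators(accelerators: int, per_node: int = ACCELERATORS_PER_NODE) -> int:
    """Minimum number of nodes needed to host the given accelerator count."""
    # Integer ceiling division: a partially filled node still counts as a node.
    return (accelerators + per_node - 1) // per_node


if __name__ == "__main__":
    print(accelerators_for_nodes(1_000))   # 8000
    print(nodes_for_accelerators(10_000))  # 1250
```

At 8 modules per node, 1,000 nodes gives 8,000 accelerators, while a 10,000-accelerator target would need at least 1,250 nodes.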

Intel is doing this for two reasons. First, customers are looking for NVIDIA alternatives given NVIDIA’s long lead times. Second, Intel needs developers to try its new accelerators. NVIDIA has a lead on the software side; Intel knows this, has been targeting specific domains, and is working to expand its training use cases. With a large cluster, it can target larger customers and specific use cases that consume huge amounts of compute.

For companies selling AI accelerators, the challenge is simple: by default, AI developers will use NVIDIA. To get companies to switch, they need confidence that Intel’s solution can scale to huge numbers of accelerators to train large models. NVIDIA already has that confidence.

Final Words

Intel has a unique opportunity here. Intel is trying to build demand for Gaudi2 by placing it in the Intel Developer Cloud. With NVIDIA’s price increases and supply constraints, Intel has a number of ways to win with this AI supercluster.

If this Developer Cloud solution is built out to thousands of AI accelerators, then Intel has an opportunity to develop not just a hardware sales angle but also an AI-as-a-service offering for companies struggling to get physical NVIDIA GPUs or GPU quotas on major cloud providers. Over the next few quarters, there will be an opportunity for anyone whose AI accelerators are already deployed and accessible, or can be deployed quickly. The big question is whether Intel will use the Gaudi2 cluster purely as a sales tool or monetize it directly through an as-a-service model.

Perhaps the most important question is how Intel will turn this large cluster into demand and revenue for its AI accelerator business over the next few quarters.

They are still doomed in almost all market segments and have been losing market share and mindshare for multiple quarters. That says something. Then there is the negative side: with Arm and RISC-V on the rise, things are going to stay unstable. Still, having lots of flux and competition in the market is good for everyone.

Not really thought through:

Nvidia shortages will go away very soon. Nvidia is basically doubling its AI sales in the current quarter, and it is still increasing supply for the following quarters. Unless demand goes up a lot from here, lead times for H100 will soon drop to 2 quarters or less. Even Supermicro just said during its earnings call that availability is improving a lot and that it is ramping fast.

DGX customers will probably receive their systems even sooner, because NV makes a lot more money on DGX than on bare H100s. Your lead time info from Computex is based on the H100, not on a system like the DGX.

Next to no one wants this second-rate Gaudi chip; a cluster won't change that. Intel's CFO just said that Gaudi2 sales are tripling this quarter, which could be related to this cluster story. But three times zero is still zero (the CFO said "although coming from a very low point bla bla"). Very few will buy a big cluster they don't want just because it's available sooner, and then have to live with it for 3-4 years.

Intel's AI prospects with these Gaudi chips continue to look miserable despite all these rumors they spread.

Ron Klaus:

What flavor was the Kool-Aid? Intel is in fine shape: SPR shipments are reaching the millions, all while “Milan” (which was “launched” before SPR) has yet to show up in a single cloud instance, and cloud providers would be its first customers. AMD is losing market share in desktop GPUs, CPUs, and laptops (where it never had a presence in the first place), and without shipping anything in the needed quantities, it cannot gain market share.

Lasertoe-

“Intel’s AI prospects with these Gaudi chips continue to look miserable despite all these rumors they spread.”

Gaudi is for TRAINING. You forget about AMX and Intel’s XMX cores, which are easily as powerful as Nvidia’s Tensor cores.

AMD has ZERO AI capabilities – none, zero, zilch, nada, bo. They lack AI on their desktop GPUs as well as their museum pieces that will never see the light of day.

I also agree that no one will be looking at Gaudi if H100 is not available.

As far as orders go, I have had my 2 DGX H100s for several months now. That is likely because I have been a customer since the DGX-1 (nothing more than Tesla cards in a commodity rack chassis), through the DGX-2 with Volta and the heavily revised baseboard for Volta Next, through the DGX A100 (2×8), and now the DGX H100 (2×8). I also only needed 2 systems.