Over the last day, I have been shocked. There was a small Easter Egg in the NVIDIA GTC 2022 presentation, that the vast majority of folks missed. It was mentioned twice in the roughly 100-minute keynote, but it was not a major point of focus. Still, NVIDIA has something that is clearly a secret weapon that it showed: a third Grace variant. The NVIDIA 1x Grace-2xHopper made its debut.

NVIDIA’s Most Important 3rd Grace? 1x Grace-2xHopper at GTC 2022

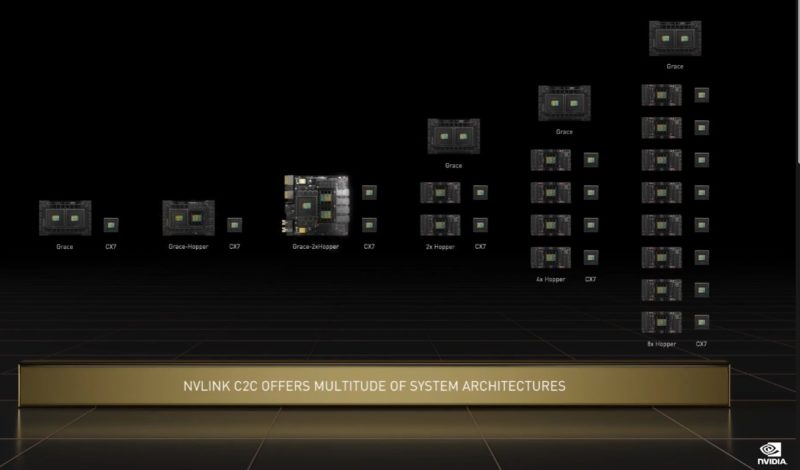

This is the slide taken at the few moments that NVIDIA was discussing how it plans to scale Grace and Hopper in different combinations:

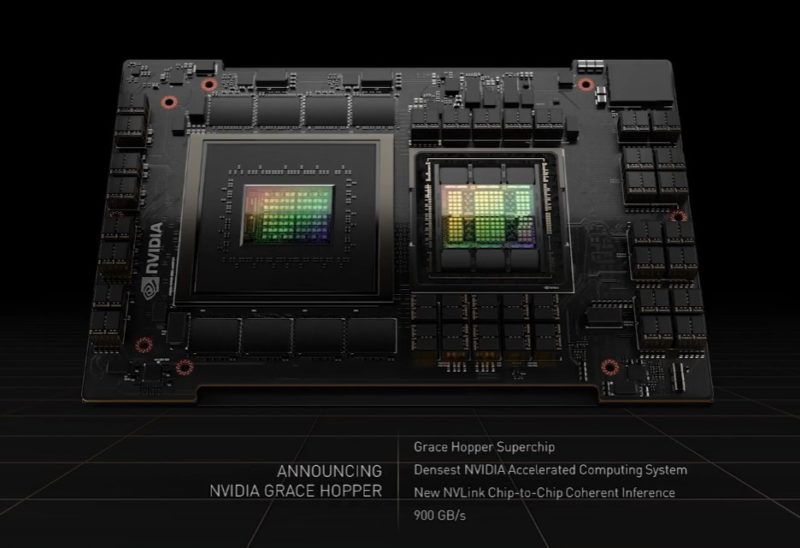

We already saw the Arm-azing Grace at the last GTC, but the new variant was shown front and center at this year’s GTC.

There was also an announcement of the NVIDIA Grace CPU Superchip, a dual Grace Arm CPU module. Just as a quick note here, NVIDIA is clearly doing something else with Grace beyond just providing 144 CPU cores. For some context, the AMD EPYC 7773X Milan-X that we just looked at we are getting over 824 in our SPECrate2017_int_base already, and there is room for improvement. Those two AMD chips have 1.5GB of L3 cache (likely NVIDIA’s 396MB is including more.) While AMD has two 280W TDP parts, and they do not include the 1TB and 1TB/s LPDDR5X memory subsystem, nor NVLink/ PCIe Gen5, they are also available today with up to 160 PCIe Gen4 lanes in a system. As a 2023 500W TDP CPU, if NVIDIA does not have significant acceleration onboard, the Grace CPU Superchip has the Integer compute of 2020 AMD CPUs and the 2021 Ampere Altra Max at 128 cores is already right at that level. The bottom line is, it would be very hard to green-light the Grace CPU Superchip unless it has some kind of heavy acceleration because it is not going to be competitive as a 2023 part versus 2022 parts on its integer performance. Still, there is “over 740” which means that 1400 could be “over 740” but it is a different claim to make.

Our sense is that there much more going on with Grace than NVIDIA is letting on, especially since Jensen said that it would “support RTX” and NVIDIA’s other frameworks.

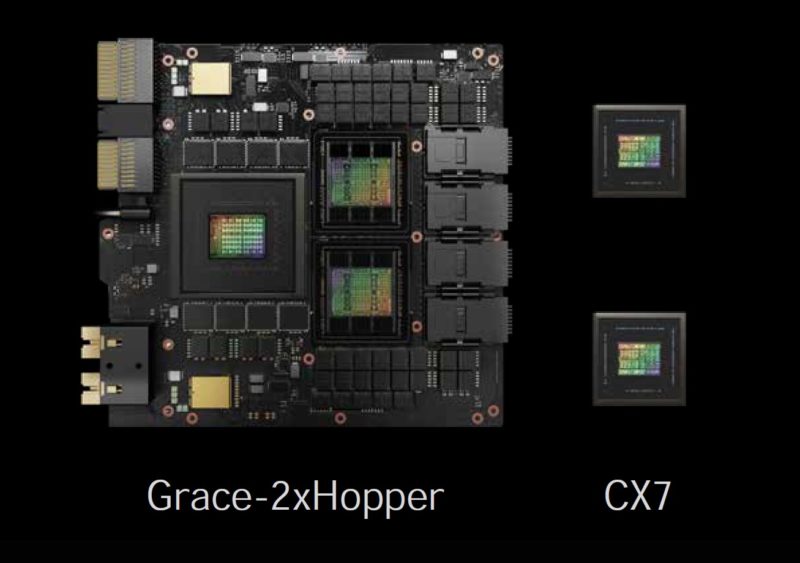

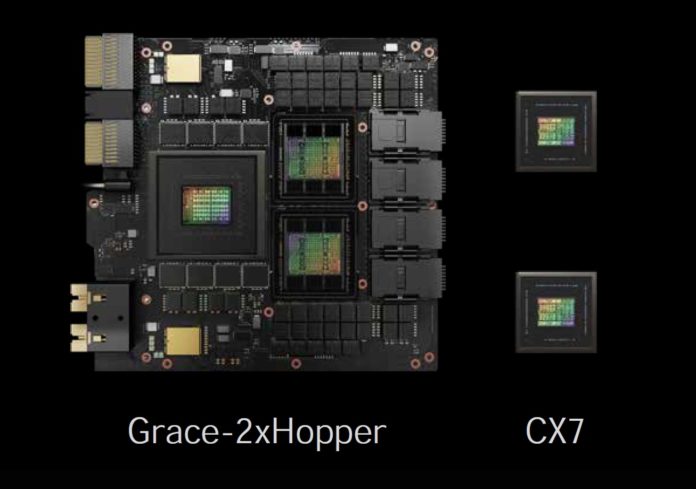

The third variant is small on the slide shown during the keynote, but if we blow it up, look at Grace-2xHopper:

This is one Grace CPU with eight of the LPDDR5X packages visible and there are two Hopper H100’s visible with their six stacks of HBM3 (although likely 5 active.)

What is more, the Grace-2xHopper has a really interesting design already with guide-pins and high-density connectors. This looks a lot like a product designed to fit into a specific chassis given the left edge’s clearly defined roles and lack of symmetry. We can also see the very interesting connectivity on the right-hand side with eight connections in each of the four terminals that are going off into the abyss. Often conceptual products are highly symmetrical and do not have these hanging bits to look better. This may just be a 2023-era supercomputer building block that we are looking at.

Final Words

NVIDIA showed this third variant, and we covered it during our live GTC 2022 piece. Realistically, it was mentioned so briefly that the only reason we covered it was that we thought it might be coming and were specifically looking for it. Figure a few thousand dollars for that CPU complex, plus two GPUs easily well north of $10K each (most likely a lot more) and that is likely a $25-30K module minimum that has connectivity for high-density servers. NVIDIA has something brewing with Grace-2xHopper that is clearly an assembly designed for large-scale systems integration, and that makes it very exciting.

NVIDIA GeForce RTX 3080 Ti Giveaway for GTC

NVIDIA is sponsoring a GPU giveaway on STH for GTC 2022. For this, you likely need to have a free GTC 2022 account. If you do not already have one, you can register on the GTC website. Registration is free this year and there are hundreds of free sessions. Then take a screenshot, uploading it below with a quick caption as to why the session is your favorite.

Here is the simple giveaway form:

STH NVIDIA GeForce RTX 3080 Ti Giveaway

For those who want a few bonus entries, after you submit the photo, you can get a bonus entry by subscribing to the STH Newsletter and/or going to the NVIDIA GTC 2022 page again.

I don’t think you were supposed to cover that. I don’t see it on other sites.

samir – I disagree wholeheartedly. If the CEO has it in the middle of a slide announcing a new family, it is fair game. Future products to not make it to slides without the company giving thought on whether or not to show it, even if it is small.

You got over 824 in your SPECrate2017_int_base for the AMD EPYC 7773X Milan-X using what compiler? In NVIDIA’s blog post on the Grace superchip, they compare the projection of 740 SPECrate2017_int_base for Grace to an estimate of 460 for a dual socket AMD EPYC 7742 system “with the same class of compilers.” The compiler they used for the Epyc system was gcc 10, with the arguments used also given in the footnote. That seems to imply the 740 projection for the Grace superchip is a projection for an unoptimized compiler. If NVIDIA is comparing their chip using an optimized compiler to other chips using an unoptimized compiler then they are just spouting BS.

Also, the 500 W for the Grace superchip is the power draw given for the CPU plus the memory subsystem. “The LPDDR5x memory subsystem offers double the bandwidth of traditional DDR5 designs at 1 terabyte per second while consuming dramatically less power with the entire CPU including the memory consuming just 500 watts.”

there’s only 1 specint and that’s submitted and accepted. if they’re comparing a n/a chip’s specint est to some number that isn’t on the official page, then they’re just making a fantasy number up. that’s it.

@xzbit That’s not true. When you see “estimated” next to specint score that means it’s not submitted and official. It’s quite common. In fact you will see it very commonly on reviews for processors. When NVIDIA use gcc 10 instead of aocc for its Epyc specint scores then they are not comparing its projections to the submitted scores, but rather to scores representing a different context than the official scores are meant to represent. And there are two possible reasons for that: either 1) it’s inappropriate to compare it to the official results because it would not draw a proper comparison with their chip or 2) NVIDIA are being shady and making the competitor’s offerings look much, much worse than they really are.

Anyway, to suggest that it’s a “fantasy number” to use different settings from what was submitted to specint is off the wall, even if there were no such thing as optimized compilers to deal with. Isn’t it true that much of the code running on AMD Epyc CPUs was not compiled with AOCC? Then the AOCC scores mean very little to the performance of that specific code. Then how can it be fantasy to consider Epyc CPUs’ integer performance with compilers and settings that aren’t optimized for the specint test?

There are good reasons for maintaining an official specint score database using best results. And there are also good reasons for considering scores that use compilers and settings other than what give those best results. It’s a little like using wrist straps or not using wrist straps for a deadlift. Even if there were some official repository of deadlifting results that uses wrist straps, whereas wrist straps result in higher weights, it’s still useful to consider deadlifting results without wrist straps as that tests a someone different context of strength. Such results are not “fantasy”.

The “official” SPEC scores are fantasy numbers due to ICC and AOCC doing special tricks that only work on SPEC source code. They don’t indicate realworld performance in any meaningful way. Neither ICC nor AOCC give significant speedups on general code over GCC or LLVM. They really only exist to give good SPEC scores, and that’s it.

Comparing a trick compiler with a standard compiler like GCC is misleading at best. The only fair comparison between different CPUs is to use the same compiler and same options. And that is what NVIDIA seems to have done here. You can find similar SPEC comparisons on AnandTech.

@Patrick: So what is the SPEC score for Milan-X using GCC with the options NVIDIA used? That’s the only useful comparison in this context, not the 840 fantasy number.

Will – The applications that AMD is targeting for Milan-X are ones that do not use GCC. AOCC/ ICC do give meaningful performance gains and that is why commercial vendors often use them. Both numbers compare different things, but the actual SPEC number is what is published on the website. I am not trying to get hired by an Arm CPU vendor so we are a bit more neutral in the opinion on these things.

@Patrick: Saying Grace is already outclassed is not being neutral when you use inflated SPEC scores to get there. AnandTech does fair SPEC comparisons using the same compiler and options, and they show 540 for 2P Altra Max vs 537 for 2P EPYC 7763: https://images.anandtech.com/graphs/graph16979/122609.png

740 SPECINT is a 37% gain over Altra Max/EPYC, so that will definitely be competitive early 2023.

Note also that Grace has 2.5x the memory bandwidth of Milan(-X), and that is what really matters.

Will – SPEC scores, by definition, are what is published on the spec.org website. Anything else is a different test.

And 100% both ICC and AOCC are used, in production, with commercial ISVs, and they yield a performance gain.

GCC is still valid as a least common denominator compiler, but that is then not valuing the optimization on the software side. It is not that GCC is invalid, but if we are looking at maximum performance it is hard to only say GCC is the only one.

And on being unbiased, again, you cited AT, but I am not currently trying to get a job at an Arm CPU vendor.

I’ve used ICC myself and yes it does absolute magic on SPEC (especially once you realise what transformations it actually does). But it is disappointing for anything else, I got about 5% slower code on my application. Phoronix recently tested AOCC: https://www.phoronix.com/scan.php?page=article&item=aocc32-clang-gcc&num=1

The official 2P 7763 SPECINT score is 913 using AOCC, AnandTech got 537 using GCC. So AOCC is clearly 70% faster than GCC, right? So how is it possible that GCC actually won most of the tests and for the tests with the largest spread AOCC was the slowest compiler? AOCC is certainly lacking some optimizations for code that doesn’t look like SPEC sources…

HPC people generally use different compilers and choose the fastest one for their code. However there isn’t a single best compiler, and ICC/AOCC are certainly not the only compilers they use.

As for AnandTech, I’m not sure what you have against them. They are one of the few tech publications that use fair, meaningful and unbiased benchmarks.