To date, no major vendor such as Dell EMC, HPE, Inspur, Lenovo, QCT, Huawei, or Supermicro has announced mainstream support for the Intel Xeon Platinum 9200 series. Other vendors like Wiwynn, Gigabyte, and Tyan have also told us they are not planning to support the Platinum 9200 series. In April 2019, I asked eight of those ten vendors why they do not support the Intel Xeon Platinum 9200 series and got shockingly similar responses. HPE will support the Platinum 9200 when the Cray acquisition closes, but Cray systems are not mainstream ProLiant. Server customers and Intel rely upon a broad partner ecosystem, and that ecosystem has largely rejected the Intel Xeon Platinum 9200 series since its launch. In this article, we are going to talk about why.

Why Intel’s Partners Are Rejecting the Platinum 9200 Series

When we asked vendors why they have rejected the platform to date (Intel may pressure vendors for an announcement later at ISC), two common themes emerged. First, Intel is not selling chips. Second, the platform makes extreme trade-offs for density that many customers cannot use.

Intel Xeon Platinum 9200 Platform Concerns

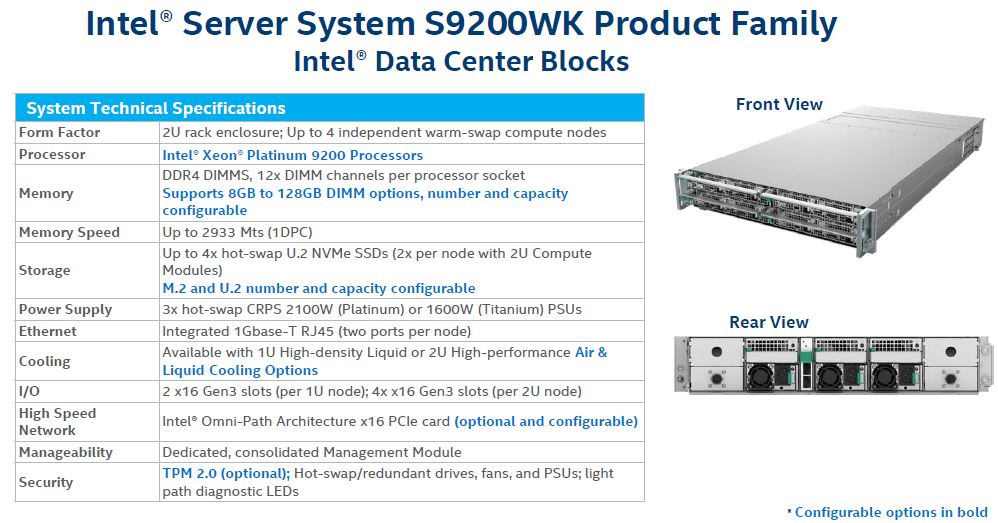

Instead of selling chips to put into vendor-designed PCBs, Intel is selling complete boards with two BGA packages and fixed I/O. Large systems providers also note that this form factor is not appealing because it effectively locks one into the system form factor that Intel is providing. Although they can technically innovate on chassis and cooling, those are the lower-value items since they are easier to copy. Essentially, the Intel Xeon Platinum 9200 series took away the ability of Intel’s major partners to innovate and differentiate in the market.

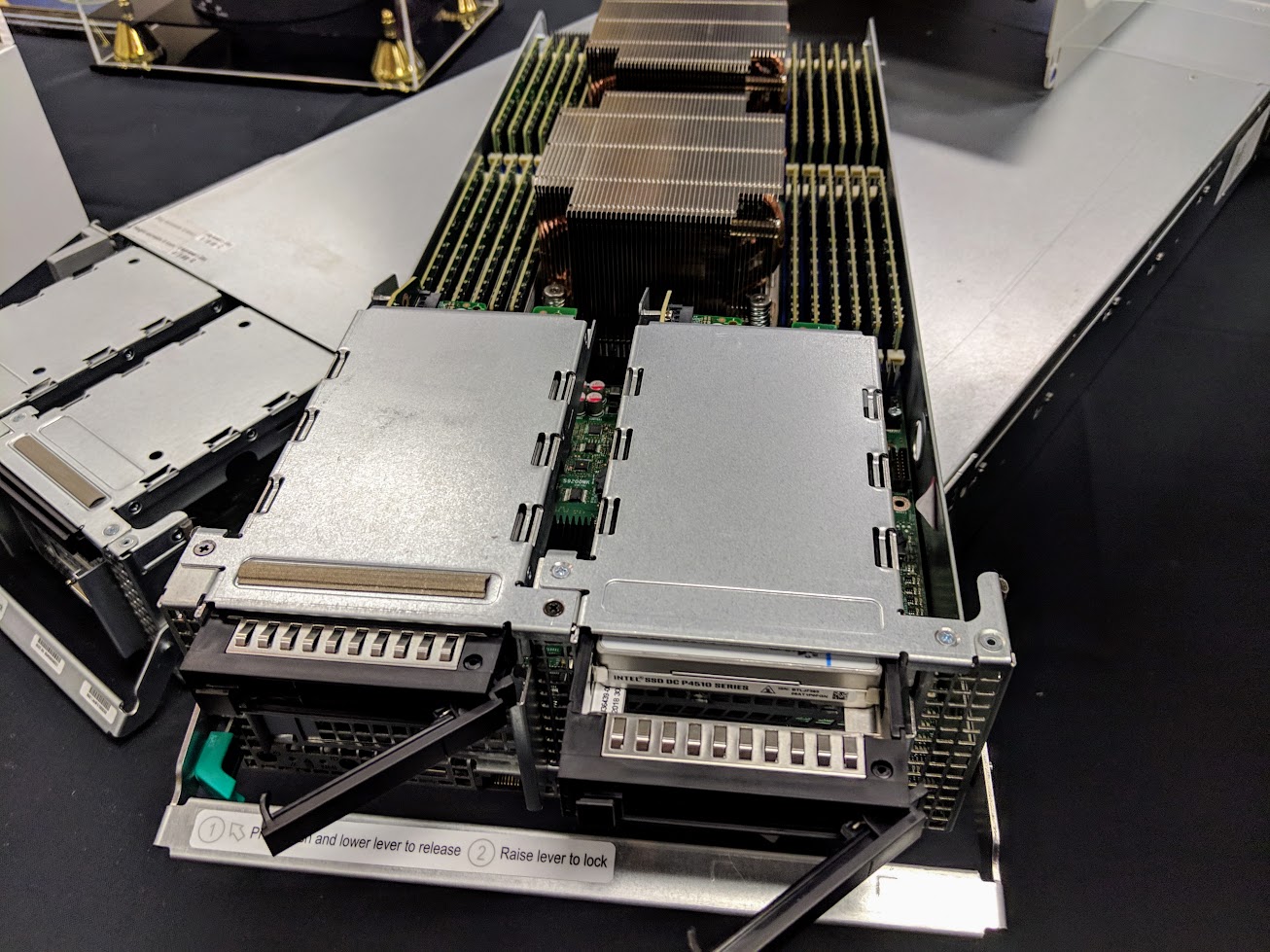

Here is a practical example using one of the Intel Server System 9200WK nodes:

One can see the Aspeed AST2500 BMC on this board. Aspeed is an Intel Capital investment that puts Arm cores into many servers. When these Intel Platinum 9200 servers are sold, an Intel Capital portfolio company is helping to sell Arm cores alongside them. As an aside, the Altera Max 10 is also made by Intel.

The Aspeed AST2500 family is used by companies like QCT, Wiwynn, Inspur, Supermicro, Gigabyte, and Tyan, but it is not universal. Dell EMC PowerEdge, HPE ProLiant, and Lenovo ThinkServer all utilize their own BMCs. Features like iDRAC, iLO, and XClarity that are staples of each company’s offerings are not designed for the Aspeed AST2500. Likewise, an Intel designed PCB has limited room for server OEMs to innovate and differentiate on expansion slots and storage.

Essentially, all that server vendors can innovate on is cooling and perhaps power and form factor. Server vendors are not seeing the business case there for mainstream markets: how does Dell EMC differentiate from Supermicro when they are selling the same Intel machine? The decision to offer fully integrated systems rather than just chips means that OEMs lack differentiation and therefore are not embracing the platform.

To be clear, if one takes the position that the Intel Xeon Platinum 9200 is a mainstream two-socket offering and Intel’s business model going forward, it is tantamount to Intel declaring war on its entire OEM partner ecosystem. This is, of course, not happening because the Intel Platinum 9200 series is not designed to be a mainstream product.

Intel Xeon Platinum 9200 Density Trade-offs

OEMs also cite a lack of customer demand in the mainstream market as a reason they are not launching offerings. With the Intel Xeon Platinum 9200 series platforms, the density offered is hard for many customers to justify. Assuming two 400W BGA packages per sled and two sleds per U, plus DIMMs, boot drives, NICs, local storage, and efficiency loss, these are being designed for racks at 2kW per U or more. Assuming 40U of compute and 2U of switches or more, this works out to 80kW+ per rack. An 80kW+ rack is not unheard of in the data center, but it is also not something that one can go to a commodity colocation provider and get. Instead, one needs a specialized facility. Compared with paying for specialized facilities that have the appropriate cooling capacity, it is often the case that standard four-socket (4P) platforms offer better performance and expandability in lower-cost facilities.
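
The rack power arithmetic above can be sketched in a few lines of Python. This is a rough illustration that uses only the figures quoted in this section (400W packages, two packages per sled, two sleds per U, 40U of compute, and the 2kW per U design point). The function names are hypothetical, and the gap between the CPU-only figure and 2kW per U is simply whatever is left for DIMMs, drives, NICs, and efficiency loss.

```python
# Back-of-the-envelope rack power for a dense Platinum 9200 deployment.
# All inputs come from the figures quoted in the text; names are illustrative.

def cpu_watts_per_u(package_watts=400, packages_per_sled=2, sleds_per_u=2):
    """CPU-only power draw for one rack unit of sleds."""
    return package_watts * packages_per_sled * sleds_per_u


def rack_kw(watts_per_u, compute_u=40):
    """Total compute power for a rack, in kW."""
    return watts_per_u * compute_u / 1000


def usable_compute_u(rack_budget_kw, watts_per_u):
    """Whole rack units of compute that fit within a rack power budget."""
    return int(rack_budget_kw * 1000 // watts_per_u)


cpu_only = cpu_watts_per_u()   # CPUs alone, before anything else in the sled
design_point = 2000            # the 2kW per U design point from the text

print(f"CPU-only: {cpu_only} W per U, {rack_kw(cpu_only):.0f} kW per rack")
print(f"Design point: {design_point} W per U, {rack_kw(design_point):.0f} kW per rack")
print(f"Left for everything else: {design_point - cpu_only} W per U")
print(f"U of 9200 sleds in a 40 kW rack: {usable_compute_u(40, design_point)}")
```

Under these numbers, a rack limited to 40kW could only be half filled with 9200 sleds, which is one way to see why the density is hard to use outside specialized facilities.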

For some perspective here, we generally use no more than 1kW per U for 4U 8x NVIDIA Tesla P100 or Tesla V100 servers with dual CPUs, networking and storage. Many data center racks cannot even handle 40kW worth of GPU nodes let alone twice that amount.

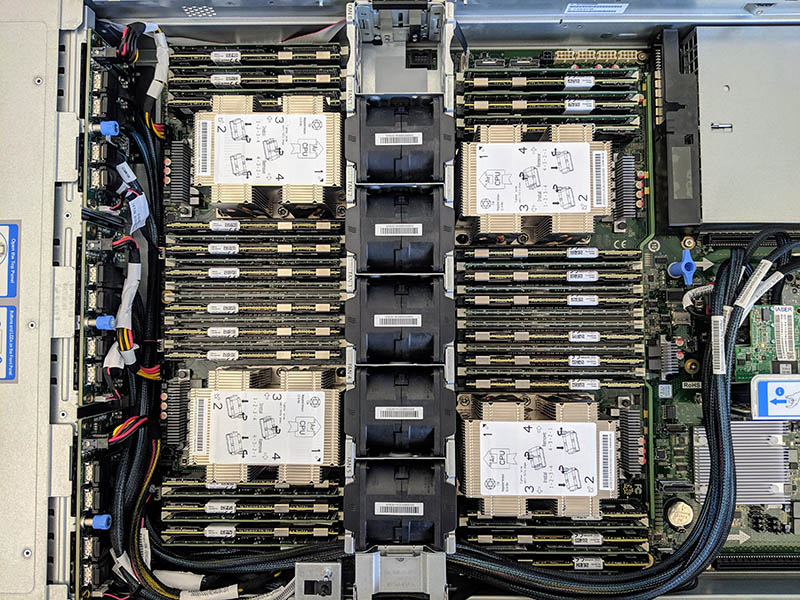

The trade-offs with the Intel Xeon Platinum 9200 series are severe. These 400W chips are constrained in 1U nodes to two PCIe Gen3 slots and four U.2 NVMe SSDs. This is less than we see with 2U4N mainstream dual-socket designs like the Supermicro BigTwin SYS-2029BZ-HNR or the Gigabyte H261-Z61 2U4N AMD EPYC with NVMe. In a four-socket system like the Inspur Systems NF8260M5 or Supermicro SYS-2049U-TR4 with the same core count, one gets significantly more PCIe lanes for NVMe storage, networking, and accelerators.

On one hand, having per-socket licensed software (e.g. VMware vSphere) and a 56 core CPU may seem attractive, pending the Per Socket Licenseageddon, but there is another constraint. Intel’s 9200WK platform for the Intel Xeon Platinum 9200 series only supports 12 DIMMs per socket, and the Intel Xeon Platinum 9200 does not support Optane DCPMM, which Intel is promoting heavily. In essence, for most virtualization workloads, memory capacity is half, or less, of what a four-socket Intel Xeon Scalable node with the same core count offers.
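
To make the memory comparison concrete, here is a small sketch of the DIMM slot math. The 12 DIMMs per socket for the 9200WK comes from the text. The four-socket figure assumes the common layout of 12 DIMM slots per socket (six channels, two DIMMs per channel), and the 64GB DIMM size is purely an illustrative assumption.

```python
# DIMM slot comparison at equal core count (2 x 56 cores vs 4 x 28 cores).
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    sockets: int
    cores_per_socket: int
    dimms_per_socket: int

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def dimm_slots(self) -> int:
        return self.sockets * self.dimms_per_socket

    def capacity_gb(self, dimm_gb: int) -> int:
        return self.dimm_slots * dimm_gb


# 12 DIMMs per socket is from the text.
platinum_9200 = Node("2S Platinum 9200 (9200WK)", 2, 56, 12)
# Assumption: a four-socket node with 12 DIMM slots per socket.
four_socket = Node("4S Xeon Scalable", 4, 28, 12)

DIMM_GB = 64  # illustrative DIMM size, not from the text

for node in (platinum_9200, four_socket):
    print(f"{node.name}: {node.cores} cores, {node.dimm_slots} DIMM slots, "
          f"{node.capacity_gb(DIMM_GB)} GB with {DIMM_GB} GB DIMMs")

ratio = platinum_9200.dimm_slots / four_socket.dimm_slots
print(f"Capacity ratio at equal DIMM size: {ratio:.2f}")
```

With the same DIMMs, the two-socket node ends up at half the capacity. Optane DCPMM on the four-socket side, which the 9200 cannot use, is what pushes the comparison to "or less."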

Further, standard QSFP28 and QSFP-DD networking connectors and cages practically limit switches to under 80 ports per U of rack space. That means one would likely need more switches per rack or more rack space for fabric just to handle the compute density of 80 nodes per rack in realistic deployment scenarios with 100GbE, Omni-Path, or InfiniBand to each node.
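
The fabric problem can be sketched the same way. Two sleds per U across 40U of compute gives 80 nodes in a rack. The 32-port switch size and the number of ports reserved for uplinks are assumptions chosen for illustration, not figures from the text.

```python
import math


def switches_needed(nodes, ports_per_switch, uplink_ports=0):
    """Leaf switches needed to give every node one port."""
    downlinks = ports_per_switch - uplink_ports
    if downlinks <= 0:
        raise ValueError("no ports left for nodes after reserving uplinks")
    return math.ceil(nodes / downlinks)


nodes_per_rack = 2 * 40  # two sleds per U, 40U of compute (from the text)

# Assumption: 1U switches with 32 QSFP28 ports, no uplinks reserved.
print(switches_needed(nodes_per_rack, ports_per_switch=32))
# Assumption: 8 of those 32 ports reserved for uplinks.
print(switches_needed(nodes_per_rack, ports_per_switch=32, uplink_ports=8))
```

Under these assumptions the rack needs three or four 1U switches, which is already more than a 2U switching allowance.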

The bottom line here is that this density can only be appreciated by the few that value density above all else. Density is the key value proposition of the Intel Xeon Platinum 9200 series. It may be important in a few years, but in Q2 2019, this is too dense for most deployments and organizations. Major OEMs are telling us that their customers, outside of some specialized HPC markets, are not demanding Intel Xeon Platinum 9200 over mainstream Xeon Scalable because of the constraints it imposes.

Final Words

Intel would like the market to believe that the Intel Xeon Platinum 9200 series is an upgrade over the Platinum 8200 series and is a real dual-socket competitor. Unless one is operating in the densest deployments where space and memory bandwidth to x86 cores are at an absolute premium, the Intel Xeon Platinum 8200 series in four-socket configurations remains a better choice. The proof is in what we see. We are not seeing announcements at launch from major OEMs, only from Cray, a more boutique player compared to the server giants. We did not see the Intel Xeon Platinum 9200 at Inspur Partner Forum or Dell Technologies World over the past two weeks, events held by two of Intel’s largest worldwide partners.

To be clear, the Intel Xeon Platinum 9200 series has a well-defined niche that it is well suited to serve. For density-optimized HPC applications where AVX-512 will be used heavily in conjunction with high memory bandwidth but not high memory capacity, it makes perfect sense. It is a perfectly appropriate architecture for the HPC space when one does not want to use accelerators. At the same time, it is not a mainstream market architecture appropriate for the same markets that the Intel Xeon Gold, Platinum 8200, and AMD EPYC servers are targeted at. We are going to see more wins for the Intel Xeon Platinum 9200 series, but those are going to be in HPC clusters and supercomputing platforms like the Intel-Atos-HLRN system rather than HPE Azure Stack clusters or VMware Cloud on Dell EMC.

Intel’s anti-partner form factor of the Xeon Platinum 9200 series is not driving a broad ecosystem as we see in the mainstream two and four-socket systems. Intel is banking its two-socket dominance on the Intel Xeon Platinum 9200 series, a platform its largest partners have taken a public pass on for the first two months of its lifecycle.

To most of STH’s readers, the above will make perfect sense somewhere around the “no duh” domain. Intel itself is positioning the Platinum 9200 series as an HPC rather than a mainstream product. The company is, however, using the Platinum 9200 series as a halo benchmark vehicle in segment comparisons outside of the narrowly defined HPC niche. To our readers, when you see Intel try to compare the Platinum 9200 series to its mainstream 2nd generation Xeon Scalable line and AMD EPYC from major OEMs, feel free to send your colleagues this article explaining why it is only an appropriate comparison for density-constrained x86 compute HPC applications.

Incidentally, this article was written before the recent AMD EPYC “Rome” NAMD numbers came out. NAMD is a valid application that could be run on density-optimized x86 compute HPC clusters.

We tried to get a quote from Dell to do that TCO analysis. Our rep said they aren’t carrying the 9200’s.

I’m in total agreement. 9200’s are just a HPC product. Even if you want GPUs you aren’t going to use it. You could have done a better job highlighting that GPU HPC won’t use it. You’re right that it’s not a bad product; the point that it’s just a narrow niche product is valid.

No way Intel should use this to compare to EPYC. I can get a quote for EPYC. I can’t for 9200. That means one is usable, the other may as well be pixie dust.

Our Lenovo rep was here for lunch. She said the same and that nobody’s ever asked her about Plat 9.2k

I also know we’re limited to 30kW/rack so yeah. We could use these but 4p 8276 is better and easier to procure.

You’re missing support. If it doesn’t come from Lenovo who services? Are there spares stocked nearby?

I checked with our HPE rep, they do not have this either right now. Cray isn’t part of HPE yet.

I’ve seen a lot about these Platinum 9200s online without realizing this. Great article STH

We buy ~5k nodes/ year. We aren’t looking at CLX-AP because it’s not useful. You had it in your earlier piece but you’ve got 80 PCIe lanes for 112 cores. That’s silly in 2019. Except, as you showed, in a very narrow segment.

This is a pure marketing device. I don’t even buy the theory that this is a HPC product. You can always replace one 9xxx CPU with two 8xxx (or 4 8xxx for 2 9xxxx). It’s the same or better.

License costs for real HPC stuff don’t come per socket:

Supercomputer level stuff is mostly open-source plus they have their own staff for creating/optimizing libraries.

Smaller HPC projects like Ansys come with per core/process pricing. MATLAB Parallel Server is also priced per core or per worker per hour.

Yeah… When all those articles came up saying Intel shot back with their benchmarks, featuring the 9200, I had to laugh.

Now, don’t get me wrong, it’s definitely a halo product. But it’s nowhere near an apples to apples comparison. Just the fact that it’s not, nor ever will be it seems, shelf available means it shouldn’t even be in mind compared to EPYC.

Also, not missing the irony of Intel’s awesome “glued together” cpus… Anyway…

@Kazaa this article, as I read it, did not say HPC. It said HPC when the system is space constrained. Maybe fewer racks offset buying more tubing lengths and you get shorter fiber runs and other parts that save you something? The higher-density will cost more than that anyway but there’s got to be some reason someone out there wants a denser system.

HPC you’re better off with 1-4S normal systems, but when you need to get more density, this is what you’ll need when you don’t want GPUs

@Fred Yes, you are right I didn’t read that right.

Since this is 2 NUMA nodes anyways a quad socket Platinum 82xx Setup gives you similar or even better performance (easier to cool).

FOUR NUMA NODES not 2.

Four nodes, Sorry, I meant two per socket.