Today we have the launch of the Intel Xeon Platinum 9200 series sporting up to 56 cores and 12 DDR4 DIMM channels per socket. To get here, Intel needed to make some significant packaging advancements and also make some intriguing trade-offs. In this article, we are going to show you around this new series.

Ever since Intel went public with Cascade Lake-AP in November 2018, there has been great confusion over what the new chips are. Perhaps as intended, much of the non-technical and consumer technology press hailed this as the direct competitor to AMD EPYC. It is not.

Let us be clear: the Intel Xeon Platinum 9200 series allows one to achieve higher rack compute density than the Intel Xeon Platinum 8200 series, especially with liquid cooling. It is, however, not designed to be a mainstream product. Instead, it is designed to be an HPC product with a narrow focus.

Intel Xeon Platinum 9200 Overview

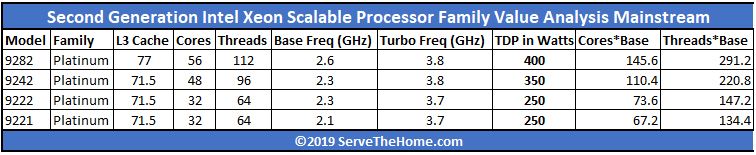

First off, here are the headline specs. There are four processor SKUs, ranging from the 400W Intel Xeon Platinum 9282 with 56 cores and 112 threads to the Intel Xeon Platinum 9221 with 32 cores and 64 threads at only a 250W TDP. This is a very small platform lineup. The last Intel server lineup with four or fewer SKUs that we can recall may have been the original Intel Xeon D-1500 (Broadwell-DE) launch. That launch had two SKUs, the Intel Xeon D-1540 and Xeon D-1520, which were succeeded a few months later by the “1” SKUs after STH was first to confirm the Broadwell-DE Xeon D-1500 series SR-IOV issue that pushed the re-spin.

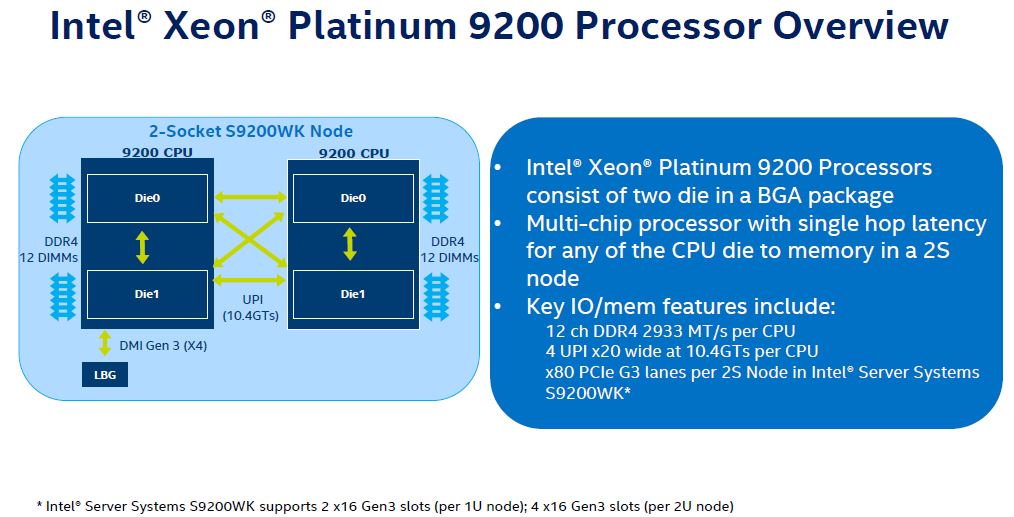

To make the Intel Xeon Platinum 9200, Intel glued together two Xeon Platinum 8200 processors, which would normally sit in separate sockets, into a single socket. UPI then connects the two CPUs within the same socket as well as the two sockets to each other. As we surmised at its announcement, this is the same topology the Intel Xeon Platinum 8100 and 8200 series use in four-socket configurations.

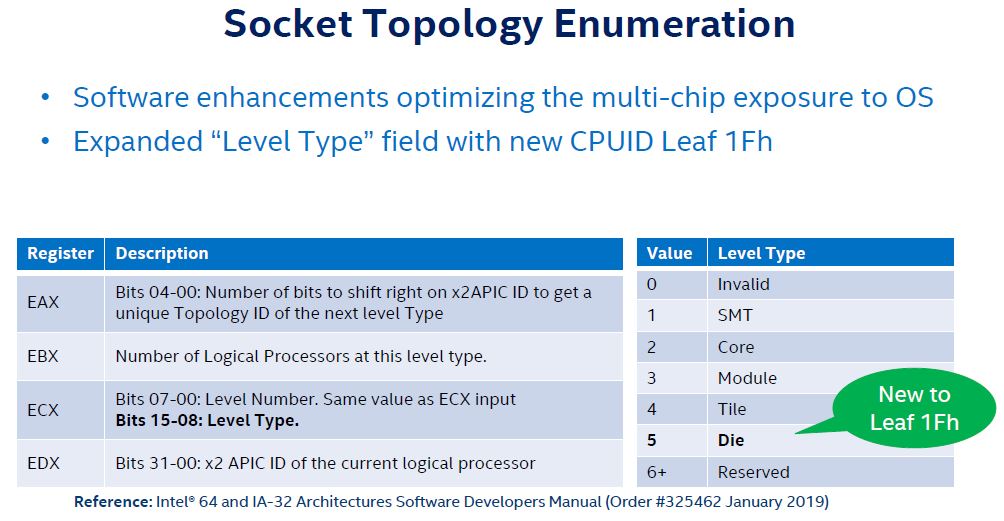

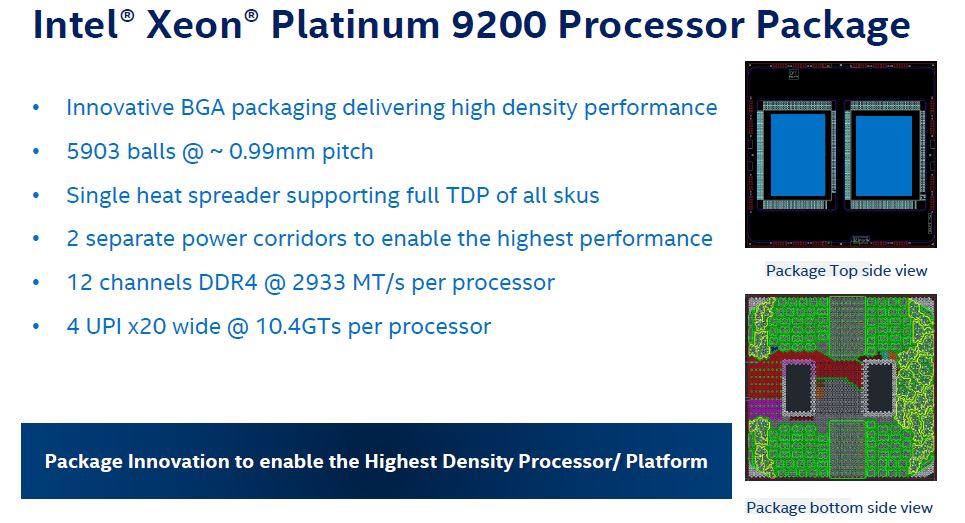

This arrangement means Intel had to abandon the socket altogether. Instead, these are BGA parts affixed to motherboards. BGA helps keep the PCB layer count down, which is important for keeping the platform compact. In fact, Intel had to extend its CPUID topology definitions with a new die level inside the socket just to describe this arrangement.
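To see what "die in socket" means from the operating system's side, here is a minimal sketch that counts packages and dies. It assumes a Linux kernel new enough (roughly 5.3 onward) to expose a `die_id` file next to `physical_package_id` under `/sys/devices/system/cpu/cpuN/topology/`; on older kernels the helper simply treats every CPU as die 0. The function names are our own, hypothetical helpers, not part of any tool.

```python
from pathlib import Path


def summarize_topology(entries):
    """Count packages (sockets) and dies from per-CPU (package_id, die_id) pairs."""
    packages = {pkg for pkg, _ in entries}
    # Die IDs may only be unique within a package, so count (package, die) pairs.
    dies = {(pkg, die) for pkg, die in entries}
    return {
        "packages": len(packages),
        "dies": len(dies),
        "dies_per_package": len(dies) // len(packages) if packages else 0,
    }


def read_sysfs_topology(root="/sys/devices/system/cpu"):
    """Collect (package_id, die_id) for every logical CPU listed under root.

    Assumes the Linux sysfs layout; if die_id is absent (older kernels),
    each CPU is treated as die 0 of its package.
    """
    entries = []
    for topo in Path(root).glob("cpu[0-9]*/topology"):
        pkg = int((topo / "physical_package_id").read_text())
        die_file = topo / "die_id"
        die = int(die_file.read_text()) if die_file.exists() else 0
        entries.append((pkg, die))
    return entries


if __name__ == "__main__":
    print(summarize_topology(read_sysfs_topology()))
```

On a dual-socket Platinum 9200 node, one would expect this to report two packages and four dies, where a dual-socket Platinum 8200 system reports two of each.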

Perhaps one of the biggest implications of this is that, until software vendors adjust for it, an Intel Xeon Platinum 9200 series socket will effectively hold two CPUs. If you license software on a per-socket basis, this can halve your license costs. Before you rush to order these and install VMware vSphere, remember that this platform cannot use DCPMM.
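The per-socket licensing point is simple arithmetic, sketched below under the assumption of a hypothetical license that is counted either per physical socket or, if a vendor becomes die-aware, per die. Both example servers contain four 28-core Cascade Lake dies; no real vendor's terms are modeled here.

```python
def licenses_needed(sockets, dies_per_socket, vendor_counts_dies=False):
    """Hypothetical license count: per physical socket, or per die if the vendor counts dies."""
    return sockets * dies_per_socket if vendor_counts_dies else sockets


# Both configurations hold four 28-core Cascade Lake dies (112 cores in total).
four_socket_8200 = licenses_needed(sockets=4, dies_per_socket=1)
two_socket_9200 = licenses_needed(sockets=2, dies_per_socket=2)
two_socket_9200_die_aware = licenses_needed(sockets=2, dies_per_socket=2, vendor_counts_dies=True)
print(four_socket_8200, two_socket_9200, two_socket_9200_die_aware)  # 4 2 4
```

The halving only holds while licensing keys off the physical socket; once a vendor counts dies, the advantage disappears.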

With this design, each socket gets a full 12 channels of DDR4-2933 memory, the same as two Intel Xeon Platinum 8200 series CPUs. There are four UPI links for die-to-die and socket-to-socket communication. Note that some 2U4N Platinum 8200 series systems have three UPI links directly between their two sockets. This is a clear example of where Intel had to make a trade-off when gluing Xeons together.
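What 12 channels of DDR4-2933 means for memory bandwidth can be worked out directly. The sketch below computes theoretical peak bandwidth as channels × transfers per second × 8 bytes per transfer (a DDR4 channel carries 64 data bits). These are ceiling figures, not measured results; sustained bandwidth in benchmarks will be lower.

```python
def peak_dram_bandwidth_gbs(channels, mega_transfers_per_s, bytes_per_transfer=8):
    """Theoretical peak bandwidth in decimal GB/s (64-bit DDR4 channel = 8 bytes per transfer)."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


per_die = peak_dram_bandwidth_gbs(6, 2933)      # one Platinum 8200-class die, 6 channels
per_socket = peak_dram_bandwidth_gbs(12, 2933)  # one Platinum 9200 package, 12 channels
print(f"{per_die:.1f} GB/s per die, {per_socket:.1f} GB/s per socket")
```

This prints roughly 140.8 GB/s per die and 281.6 GB/s per socket, which is why the 9200 reads as a memory-channel-dense rather than a memory-capacity-dense part.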

Although the chips themselves are interesting, because they are BGA parts you cannot simply go buy them on their own. Instead, one needs to purchase the CPUs from Intel already mounted on an Intel motherboard.

Intel Xeon Platinum 9200 Packaging

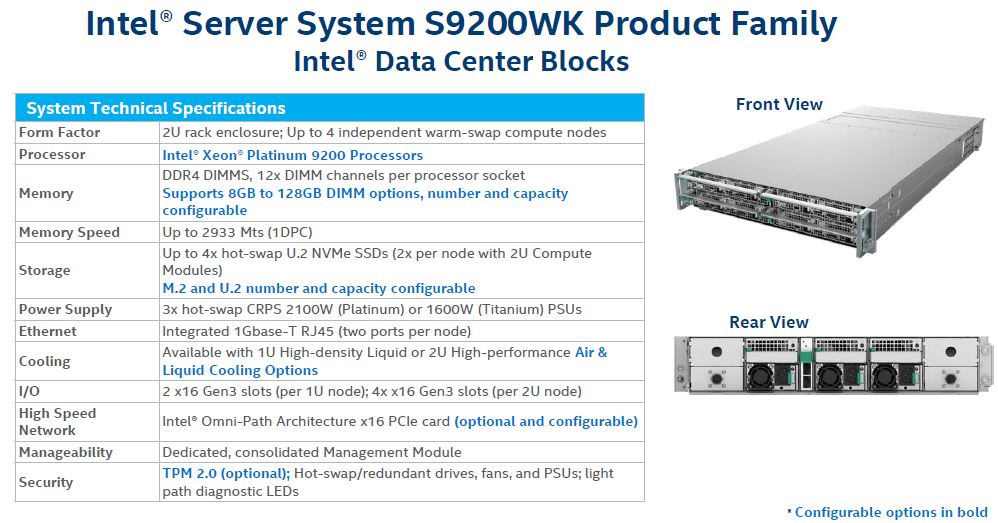

The Intel Server System S9200WK is the family of Intel branded servers that support the Intel Xeon Platinum 9200.

Here the spec table says that the supported DIMMs are up to 128GB DDR4-2933 RDIMMs, but we suspect 256GB DIMMs may work since they work on the Xeon Platinum 8200 series. Of note, although the Xeon Platinum 9200 launched on the same day as Intel Optane DC Persistent Memory (DCPMM), the revolutionary new memory is not listed as supported by the Intel Xeon Platinum 9200 series in any of the documentation. In theory, the Cascade Lake memory controller across the line should be able to support Optane DCPMM, but the Intel Xeon Platinum 9200 cannot. There is a surprising reason for that.

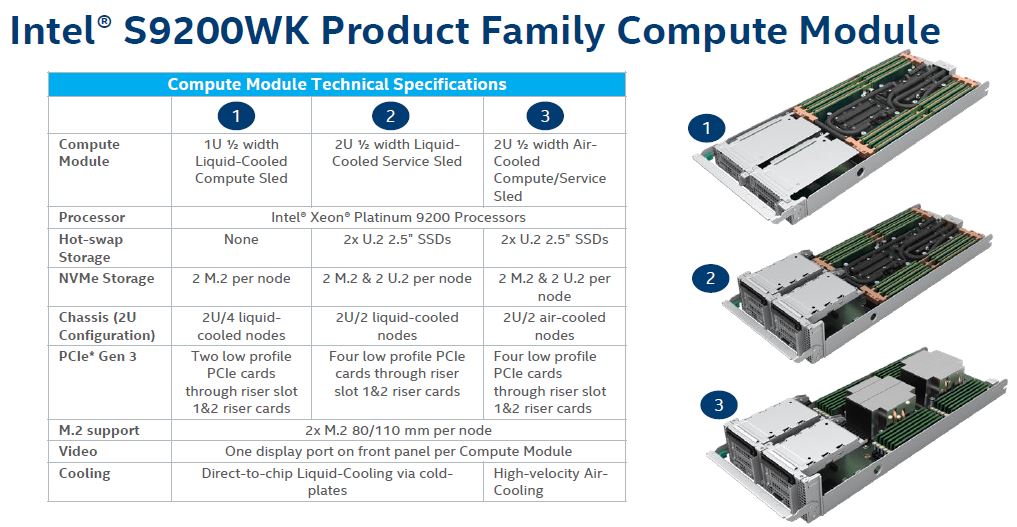

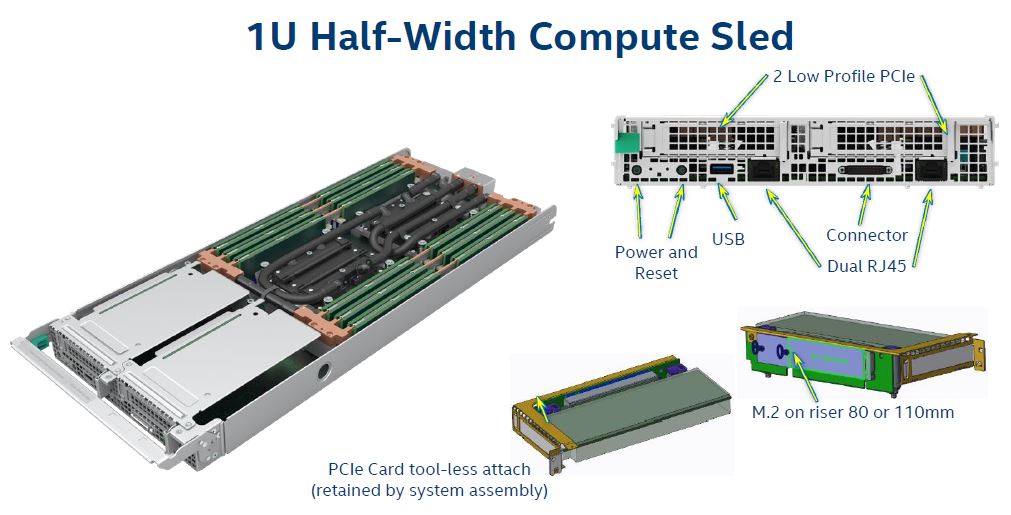

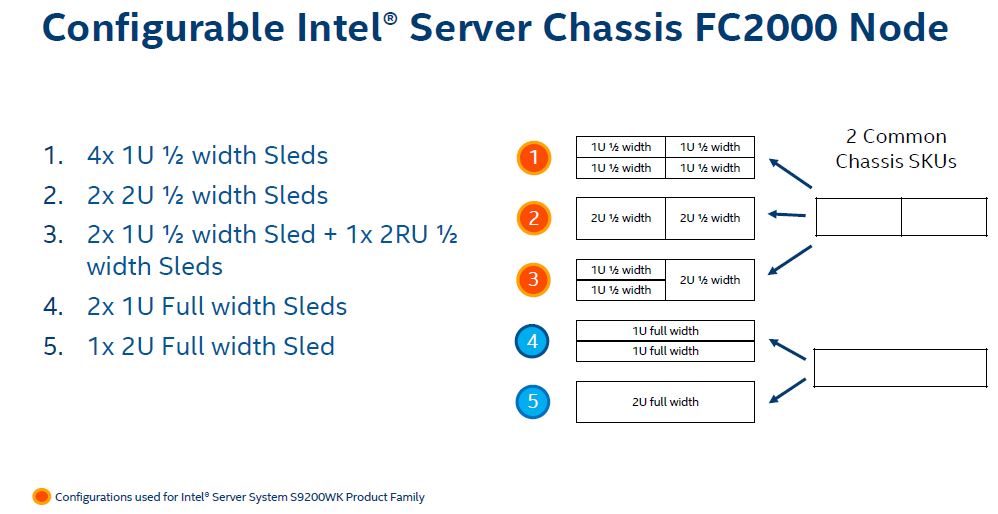

Since Intel is selling the Platinum 9200 series already affixed to a PCB, the company offers three primary S9200WK compute modules: a 1U half-width liquid-cooled sled, along with two 2U modules, one liquid cooled and one air cooled. The entire premise of the Intel Xeon Platinum 9200 series is to take a four-socket server and put it in a half-width form factor, so one can effectively get eight Xeon Platinum 8200 series chips per U of rack space.
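The "eight chips per U" figure follows from the sled layout. As a sketch, assuming a 2U chassis holding four 1U half-width sleds with two sockets each, and 28 cores per die as on the 56-core Platinum 9282, the density works out as follows. The function is our own illustrative helper.

```python
def density_per_u(chassis_u, sleds, sockets_per_sled, dies_per_socket, cores_per_die):
    """Return (Platinum 8200-class dies per U, cores per U) for a multi-node chassis."""
    dies = sleds * sockets_per_sled * dies_per_socket
    return dies / chassis_u, dies * cores_per_die / chassis_u


# Assumed: 2U chassis, four 1U half-width sleds, two Platinum 9282 packages (2 x 28-core dies) each
print(density_per_u(2, 4, 2, 2, 28))  # (8.0, 224.0)
# Conventional 2U4N with two 28-core Platinum 8200 CPUs per sled, for comparison
print(density_per_u(2, 4, 2, 1, 28))  # (4.0, 112.0)
```

Doubling the dies per socket is the whole density gain; the chassis footprint stays the same.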

The half-width compute sleds also have room for network fabric NICs and fairly standard I/O. These designs are denser than a typical 2U4N system, but they give up a lot of expandability.

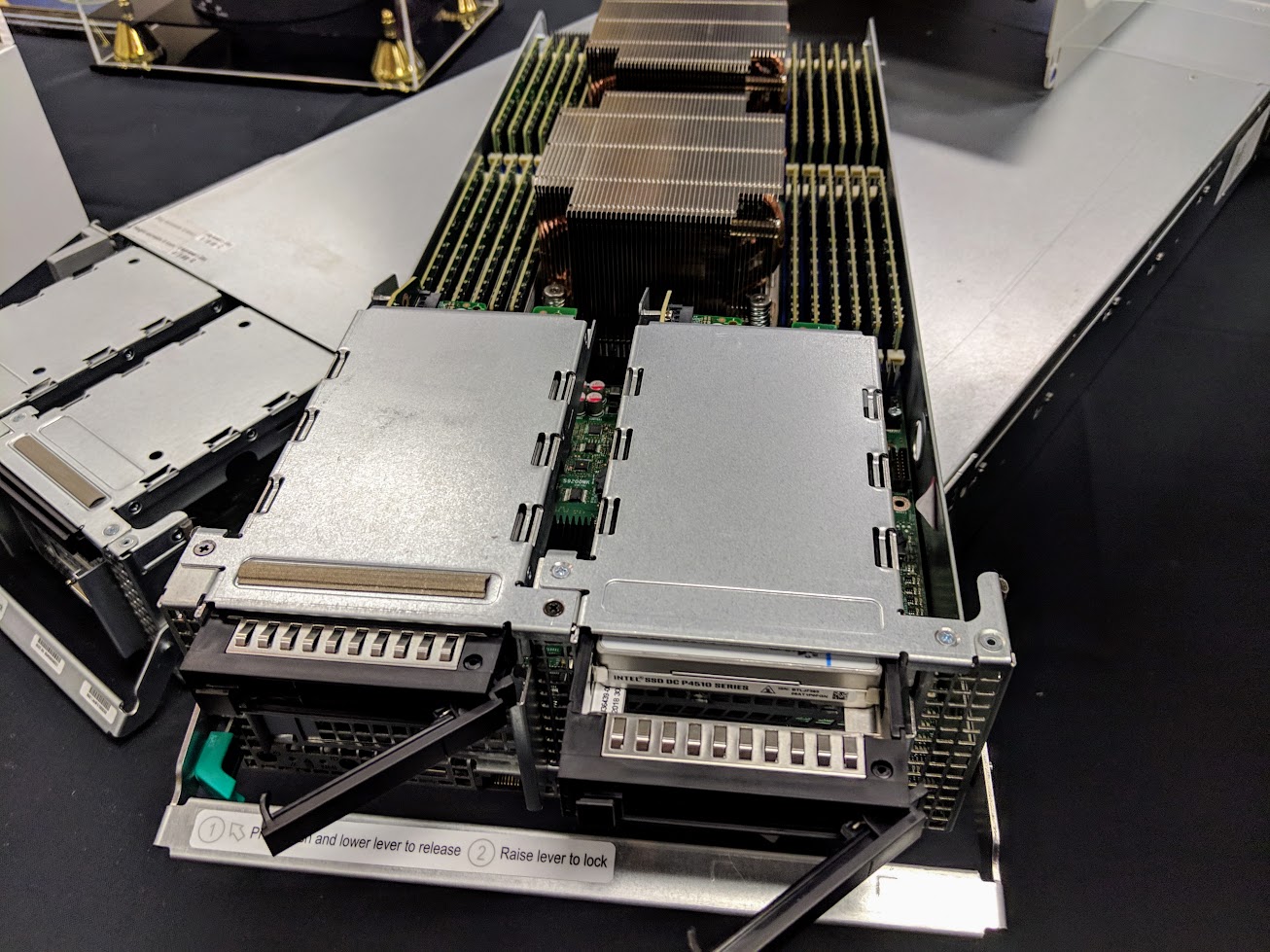

Here is a shot of the 2U air-cooled node. There is a pair of U.2 NVMe drives for hot-swap storage, and one can see the massive coolers and memory array. To be clear, 2U4N systems can have 12 DIMMs per socket in half-width sleds, so this is not exactly a dense memory capacity configuration. It is, however, a dense memory channel configuration.

On the topic of trade-offs, Intel Cascade Lake generations need a standard DDR4 DIMM in the same memory channel as each Optane DCPMM module, so these are low memory capacity nodes. A 2U4N server with two Xeon Platinum 8200 CPUs per half-width sled can handle 24 DIMMs, including up to 12x 512GB DCPMM modules. The Intel Xeon Platinum 9200 series, on the other hand, lacks a second DIMM slot per memory channel and therefore cannot take advantage of Intel’s most innovative new memory technology.
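The capacity trade-off can be sketched as slot arithmetic. Assuming 128GB DDR4 RDIMMs (the spec-table maximum), six channels per Platinum 8200 at two DIMMs per channel, and twelve channels per Platinum 9200 at one DIMM per channel, and applying the rule above that each DCPMM needs a DDR4 DIMM in its channel, the function below estimates per-sled capacity. It ignores any per-SKU addressable-memory limits, which in practice may require a higher-capacity SKU for the DCPMM configuration.

```python
def sled_capacity_gb(sockets, channels, dimms_per_channel, dram_gb, dcpmm_gb=0):
    """Slot-count arithmetic only; per-SKU memory limits are deliberately ignored."""
    slots = sockets * channels * dimms_per_channel
    if not dcpmm_gb:
        return slots * dram_gb
    if dimms_per_channel < 2:
        # DCPMM must share a channel with a DDR4 DIMM, so 1 DIMM per channel rules it out.
        raise ValueError("DCPMM requires a DDR4 DIMM in the same channel")
    dram_slots = sockets * channels  # one DDR4 DIMM per channel
    pmem_slots = slots - dram_slots  # remaining slots take DCPMM modules
    return dram_slots * dram_gb + pmem_slots * dcpmm_gb


print(sled_capacity_gb(2, 6, 2, 128, 512))  # 8200 2U4N sled, DDR4 + DCPMM: 7680
print(sled_capacity_gb(2, 12, 1, 128))      # 9200 sled, DDR4 only: 3072
```

Both sleds have 24 DIMM slots; the difference is that only the two-DIMMs-per-channel layout can mix in Optane.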

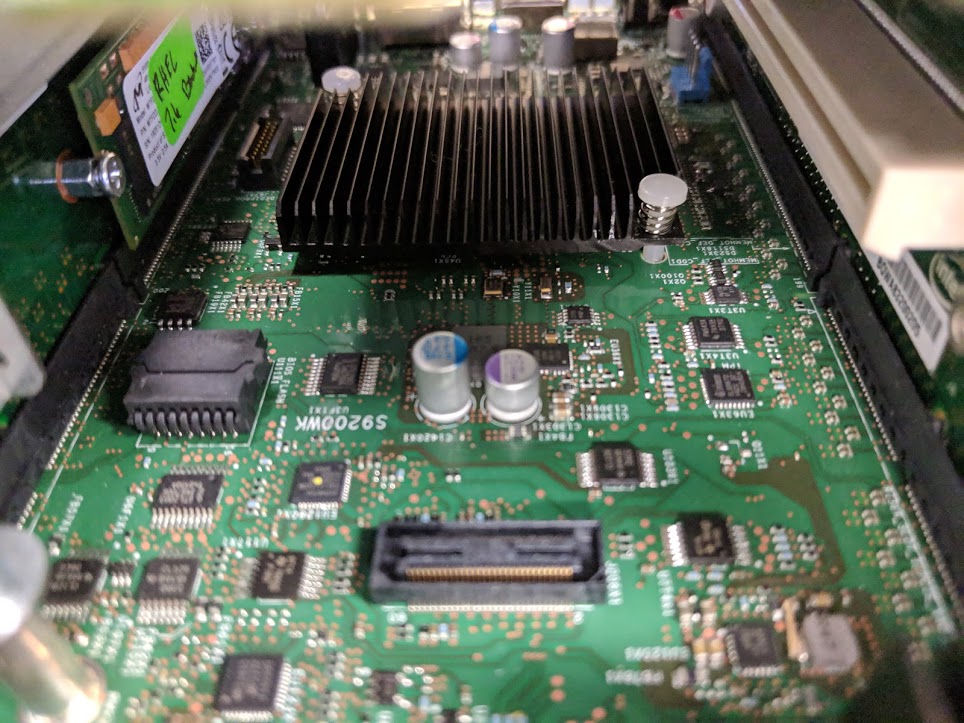

On the motherboard, we can find a familiar Lewisburg PCH handling the basic I/O needs. One can also see PCIe 3.0 x16 risers, along with M.2 NVMe SSD slots that are likewise provided via risers.

Also on the S9200WK platform, one can see an Aspeed BMC and an Altera chip to handle I/O.

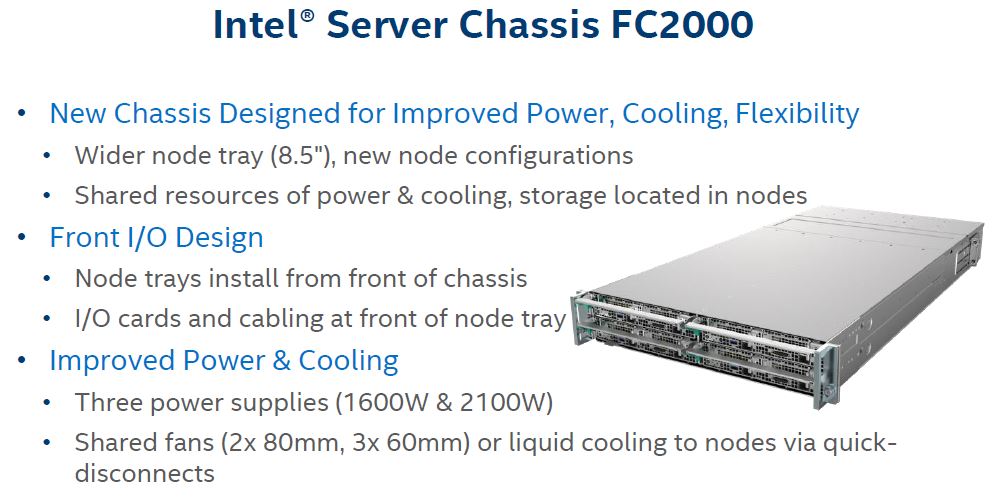

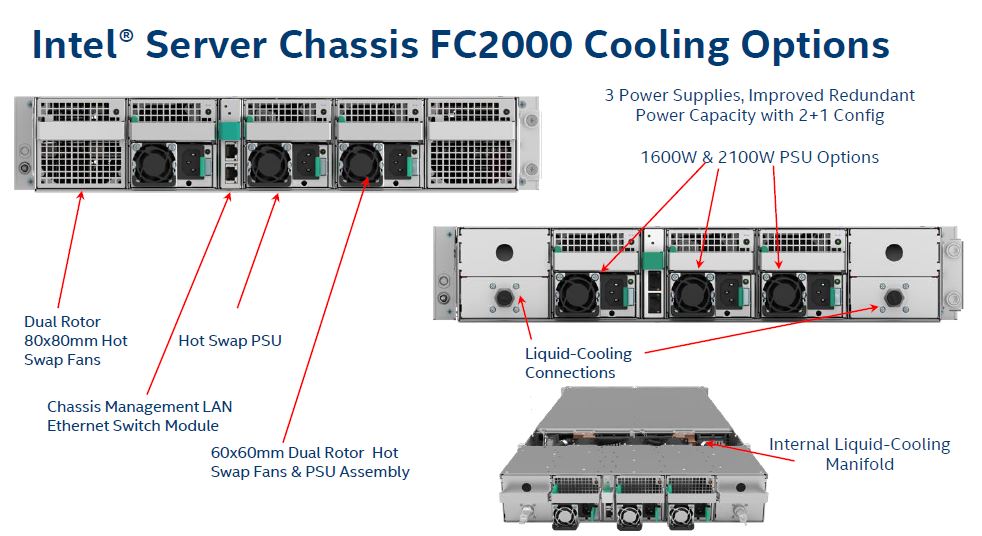

The nodes slide into the Intel Server Chassis FC2000 which is designed to cool these hot CPUs.

There are a total of three power supplies as well as large fans. Typical 2U4N systems have two power supplies mounted between nodes. Here, Intel needs to have the PSUs and fans behind the nodes. That configuration means that the server sleds are shorter than one may expect. Keeping the system compact is good, but it also means that the system is not as expandable as Intel’s 2U4N standard Xeon offerings.

Like most modern 2U4N servers, there is the option to replace two half-width nodes with a single 2U node. A good example of that is the air-cooled node above. Beyond what the Intel Xeon Platinum 9200 series uses, the chassis is designed to support 1U or 2U full-width nodes as well. This is a benefit of moving the power supplies to the rear of the chassis instead of between nodes.

The implications of this arrangement are profound. If an OEM wants to sell the Intel Xeon Platinum 9200 series, it needs to buy an Intel motherboard and platform along with the CPUs. That limits the OEM's options to add value. For example, while the Aspeed AST2500 is ubiquitous on many servers, many of the large OEM platforms, such as Dell EMC’s iDRAC, HPE iLO, and Lenovo XClarity, use other BMCs. That is a challenge if those same OEMs want to support the Intel Xeon Platinum 9200 series.

On the next page, we are going to show Intel’s performance claims, then give our final words.
