Many STH readers will have noticed that we had downtime last evening. It sucks. Almost everything is back up and performing well. While we are still finishing the process, we wanted to give a mini-postmortem to the STH community on what went wrong and also give a quick update on the next-generation infrastructure that we are bringing online soon.

The Bad: Power Outage in the Data Center

This happened. We are in the same data center suite (for now) as Linode, Android Police, and others. Last evening, the power to the suite was cut. We do not have official word on what happened, but it took a few hours to resolve. When this suite goes down, we are not the only ones impacted:

Our host's (@linode) colo in Fremont is down, so both @APKMirror and @AndroidPolice are down until they bring it back up.

— Android Police (@AndroidPolice) June 21, 2018

And another example:

Connectivity Issues – Fremont https://t.co/S2LYPd3TUY

— Linode Status (@linodesys) June 21, 2018

The outage lasted well over an hour, so this is not something that one can realistically fix with in-rack battery backups.

Normally, once power resumes, we are back online within 2-3 minutes while everything boots. We had a 2011 article on how to do this: Keeping Servers On: BIOS Setup for Availability (throwback Thursday!).

This time was different. There were three additional failures that we found after the power came back on.

The Worse: Failures Two through Four

After we got confirmation that power was back on, and that Linode and others who have larger support teams and status pages were online, we realized something was wrong. STH was still down. Once power was restored, we actually had three failures to deal with:

- The primary firewall would not boot. It seems dead due to the AVR54 bug.

- A switch connected to the emergency firewall was not powered on. We did not diagnose this on-site this morning as it will be replaced with a new 10GbE/40GbE switch next week anyway. A potential cause may be a dead PDU port. Update: It was the PDU port failure. You can see how we upgraded power here.

- A dual Intel Xeon E5-2699 V3 server decided it did not want to finish booting due to SATA SSD linkages.
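A reachability sweep along the following lines could help narrow down which devices failed to come back after power is restored. This is only a minimal sketch: the device names, hostnames, and ports are hypothetical placeholders rather than STH's actual inventory, and because a dead in-line firewall hides everything behind it, a sweep like this would presumably need to run from an out-of-band vantage point.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Hypothetical inventory: every name, hostname, and port here is a placeholder.
CHECKS = {
    "primary-firewall": ("fw1.example.internal", 22),
    "emergency-switch": ("sw1.example.internal", 22),
    "dual-e5-2699v3-node": ("node1.example.internal", 22),
}


def sweep(checks: dict) -> dict:
    """Map each device name to whether its management port answered."""
    return {name: is_reachable(host, port) for name, (host, port) in checks.items()}
```

Calling `sweep(CHECKS)` returns a dictionary of device names to booleans. A TCP check only shows that something answers on the port, so a node that hangs partway through boot may still need a look via IPMI.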

It took about 30 minutes to design and build an emergency firewall appliance and get software loaded, then another 2 minutes to make coffee and a 15-minute drive to the data center. We spent a few minutes diagnosing the issue (firewall) to ensure it was indeed the problem before replacing it.

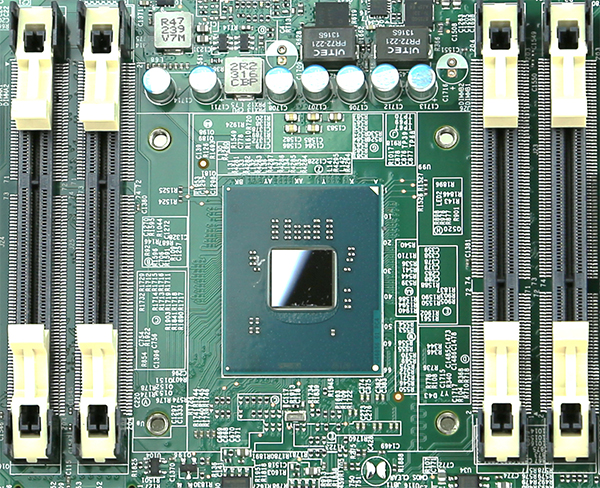

Intel Atom C2000 AVR54 Bug Strikes STH

Readers of STH may remember our pieces on the Intel Atom C2000 Series Bug and Intel Atom C2000 C0 Stepping Fixing the AVR54 Bug. In Intel’s Atom line of that generation, there is a nasty bug that, over time, degrades clock signals to the point that systems will not boot. The Intel Atom C2000 series is the most famous of these, but there are others of this era, as we covered in our piece Another Atom Bomb Intel Atom E3800 Bay Trail VLI89 Bug. We are not going to name the vendor (it was not Supermicro despite the images we are using) because it is a known Intel Atom issue.

At STH, we have an in-line firewall that is designed to cut traffic when it dies. There are other options for this, such as the bypass designs that we covered in our Supermicro A1SRM-LN7F-2758 Review. Given we do not sell products or services on STH, a few minutes of downtime is essentially a rounding error for our application, so the safer option is to endure a few minutes of downtime during maintenance.

This time, we simply swapped the old unit out for a Xeon D-1500 based solution and got everything running. It was faster than bringing everything up in another data center and then migrating everything back to the original infrastructure.

On the Mend: Cleaning-up and the Future

The STH hosting cluster is actually smaller than usual right now in anticipation of an upgrade over the next ten days or so. We normally only visit the hosting racks once every other quarter, usually when we find things, like What is the ZFS ZIL SLOG and what makes a good one, that get us to upgrade immediately. The dual Intel Xeon E5-2699 V3 node was offline while the site was still running, but when it came back online and everything was back to being balanced, STH page load times went from about 2.85s to 1.42s, which is a big improvement.
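To put the page load numbers in perspective, here is a quick back-of-the-envelope calculation using only the two figures quoted above. The variable names are ours, and the result is only as precise as the "about 2.85s" and "1.42s" figures themselves.

```python
# Page load times quoted in the post, in seconds.
before_s = 2.85  # while the dual Xeon E5-2699 V3 node was offline
after_s = 1.42   # after the node returned and load was rebalanced

# Relative reduction in load time and the equivalent speedup factor.
reduction_pct = (before_s - after_s) / before_s * 100
speedup = before_s / after_s

print(f"Load time reduction: {reduction_pct:.1f}%")  # prints 50.2%
print(f"Speedup: {speedup:.2f}x")                    # prints 2.01x
```

In other words, bringing the node back roughly halved page load times.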

What is next for our environment? CPUs and systems are in. We are preparing for an EPYC upgrade next. Stay tuned. The switch that went down was going to be replaced anyway, so that is getting a significant upgrade next time.

Indeed. If you buy a retail AMD EPYC 7000 series processor, there is an enormous case badge in the package that will not fit a rackmount server bezel.

Want to Know More About Hosting?

We keep tabs on our maintenance needs for our Falling From the Sky Part 4 Leaving the Cloud 5 Years Later series, as well as any drive failures for Used enterprise SSDs: Dissecting our production SSD population. Our failures this week are now in our tracking sheets.

There are certainly things that can be designed better in our infrastructure. We have plenty of bandwidth, colo space, and all of the hardware one could want. Part of STH’s early mission was to find the balance between overbuilding (and seeing failures from complexity) and underbuilding (and seeing failures like these). That is still a journey. We should not have left the firewall with the AVR54 bug installed. This was a risk that we took and it bit us.

Do you know if the outage was inside the facility? A properly designed datacenter shouldn’t have that big of an outage hit for a utility line fail. Even then, is there A+B Power?

Better safe than sorry next time: do like OpenBSD and switch off HT/SMT to prevent vulnerability to the upcoming next generation of Spectre/Meltdown.

Do you have extra AMD Epyc badges?? If you do, can I get one??? I’ll pay for postage!!

Hour outage? Doesn’t the site have any backup diesel generators to cover an outage after the UPSs go down? Otherwise I’m sorry you’ve been hit by yet another Intel bug and glad you are back alive again. :-)

*knocking on wood* my 2758F is still running nicely after 3 years 24/7.

Lots of datacenters do not offer any UPS system. The kind of power one needs in a datacenter would require way too many diesel generators or way too many batteries. These days, it is much cheaper to use HA methods than trying to avoid downtime at any cost.

I just lost an older Atom C2758 Supermicro 1U SYS-5018A-FTN4 last week at home. I had a power failure that lasted through the UPS reserves, and when the power was restored the server would not boot. Using the IPMI interface I can control the BMC but the iKVM stays black. 3 years and dead.

Why are you running a hardware firewall? I don’t know your throughput and all, but why not virtualize it in a HA setting?

Thanks for posting this, it’s good to have some real world documentation of these things since typically people just have to deal with them silently behind the scenes and wonder if anyone else is running into the same thing.

Also, a link could be added to the 2017-02-07 article pointing to this one for reference.

@CadilLACi: Network design philosophy and probably the complexity to convert it to a full VM HA setup.

“It took about 30 minutes to design and build an emergency firewall appliance and get software loaded then another 2 minutes to make coffee and a 15-minute drive to the data center. We spent a few minutes diagnosing the issue (firewall) to ensure it was indeed the problem before replacing.”

You guys are quick! Even the coffee-making (unless it was instant).

“Do you know if the outage was inside the facility? A properly designed datacenter shouldn’t have that big of an outage hit for a utility line fail. Even then, is there A+B Power?”

Ugh, you’re right, but this is the Fremont datacenter. I’ve also had a bad experience here with power being down where a generator completely failed to kick in when it should have. This wiped out the entire Linode deployment in May 2015 ( see: https://status.linode.com/incidents/2rm9ty3q8h3x ), and I’ll never forget that very long night of waiting for things to come back online after 4-5 hours of downtime. It was nuts. Definitely one of the worst outages I remember.

Glad you guys were back quick!

@CadilLACi

> Why are you running a hardware firewall?

Because some people, through experience, simply just /know/ it’s a bad idea to virtualize. That doesn’t sound very scientific, I think, but I don’t need to use capacity planning either to tell me that cheating on a partner is always a terrible idea.

> I don’t know your throughput and all

What’s it got to do with throughput? Don’t you think that if they took your advice and virtualized it, handling throughput would be part of the initial design?

> but why not virtualize it in a HA setting?

Your whole argument for virtualizing the main firewall is that it’s a fashionable thing to do.