Perhaps the most interesting news in the GPU computing world this week came from NVIDIA, which made four major announcements at CVPR in Salt Lake City, Utah. For us, the one that stands out is support for heterogeneous GPU clusters in Kubernetes. The company also announced PyTorch Apex, DALI, and the availability of TensorRT 4.

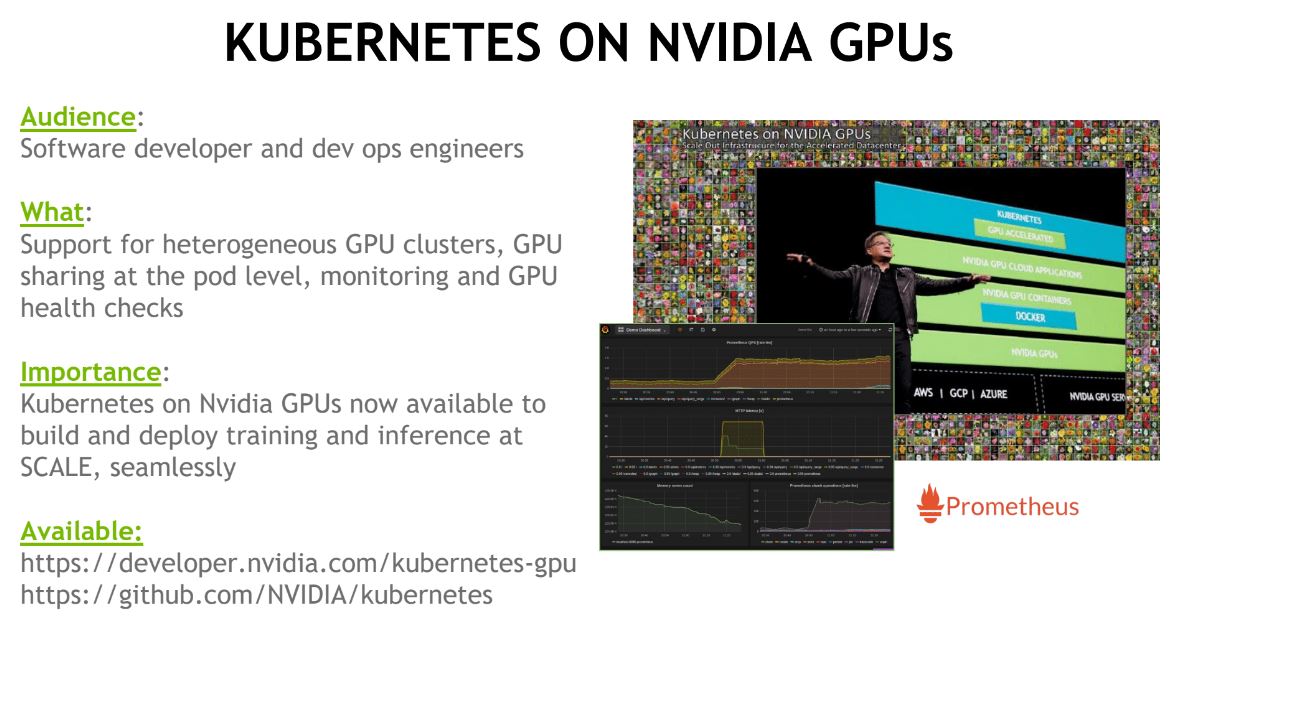

Heterogeneous GPU Kubernetes Clusters

Kubernetes has essentially won the container management and orchestration wars and is how container orchestration on clusters will happen for the foreseeable future. While the vast majority of applications are CPU based, there has been a shift toward running GPU compute in containers as well, especially with cloud providers in the mix. Until this point, using heterogeneous GPU Kubernetes clusters has been more difficult than it needed to be. With a homogeneous GPU cluster, one can simply specify that a container needs a GPU (or GPUs) and that works well. When a cluster has different types of GPUs, Kubernetes needs to tell nodes with one type of GPU apart from nodes with another. That used to take manual tagging. NVIDIA’s latest announcement takes care of that.

Kubernetes with GPU support is now open source, with support spanning Google GCP, Amazon AWS, and Microsoft Azure. Containers can now specify a type of GPU, including K80, Pascal, and Volta, and Kubernetes will schedule according to that hardware requirement. For those who have several generations of GPU compute nodes available, support for heterogeneous GPU Kubernetes clusters is awesome.
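To make the idea of requesting a GPU type concrete, here is a minimal sketch in Python that builds a pod manifest as a plain dictionary. The `nvidia.com/gpu` resource name is the one used by NVIDIA's Kubernetes device plugin. Everything else is an assumption for illustration: the `accelerator` node label key, the label value, the image name, and the `gpu_pod_manifest` helper are hypothetical, and the labels NVIDIA's release actually applies may differ.

```python
import json


def gpu_pod_manifest(name, image, gpu_type, gpu_count=1):
    """Build a pod manifest that asks for `gpu_count` GPUs of one type.

    The "accelerator" label key is an assumed convention for this sketch,
    not a confirmed part of NVIDIA's announcement.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Only schedule onto nodes labeled with the requested GPU type.
            "nodeSelector": {"accelerator": gpu_type},
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPU count is requested as an extended resource limit.
                    "resources": {"limits": {"nvidia.com/gpu": gpu_count}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical job that wants two Volta-class GPUs.
    manifest = gpu_pod_manifest(
        "train-job", "example.com/train:latest", "nvidia-tesla-v100", 2
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and passed to `kubectl apply -f`. With manual tagging, someone has to label each node by hand before a selector like this works; the appeal of the announcement is that the GPU type is something Kubernetes can schedule on without that step.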

PyTorch: APEX

PyTorch: APEX is an optimization engine for PyTorch. With the variety of GPU architectures, accelerators, problem types, and deep learning frameworks, optimizing how computations are performed is important.

NVIDIA PyTorch Apex allows PyTorch users to get the most benefit from tensor cores, such as those found in the NVIDIA Tesla V100, codenamed “Volta.” PyTorch Apex can be implemented in as little as four lines of code in a training script and helps the model converge and train quickly. NVIDIA PyTorch Apex is an open source extension. It helps determine which code is FP16 eligible and which needs to stay in FP32. This is still not a core part of PyTorch, but NVIDIA is now working to get it upstream.
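To see why some values need to stay in FP32, here is a minimal sketch in plain Python. It is not Apex code. It uses the standard library's `struct` half-precision format to mimic FP16 storage, and the function names and sample values are our own illustrative assumptions. It also shows loss scaling, a common mixed precision technique, as one way a small value can survive in FP16; we are assuming that general approach for illustration rather than describing Apex internals.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_roundtrip_with_scale(x: float, scale: float) -> float:
    """Scale x up, store it in FP16, then unscale in higher precision."""
    return to_fp16(x * scale) / scale


# Illustrative gradient, smaller than FP16's smallest subnormal (about 6e-8).
tiny_gradient = 1e-8
# Illustrative power-of-two scale factor.
loss_scale = 1024.0

# Stored directly, the value underflows and the update would be lost.
lost = to_fp16(tiny_gradient)
# Scaled before storage, the value survives and can be unscaled afterwards.
recovered = fp16_roundtrip_with_scale(tiny_gradient, loss_scale)

print(f"stored directly in FP16:  {lost}")
print(f"scaled, stored, unscaled: {recovered:.3e}")
```

Deciding by hand which operations are safe in FP16, and where scaling or FP32 is needed, is tedious and easy to get wrong, which is the kind of bookkeeping a tool like Apex is meant to take off the user's plate.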

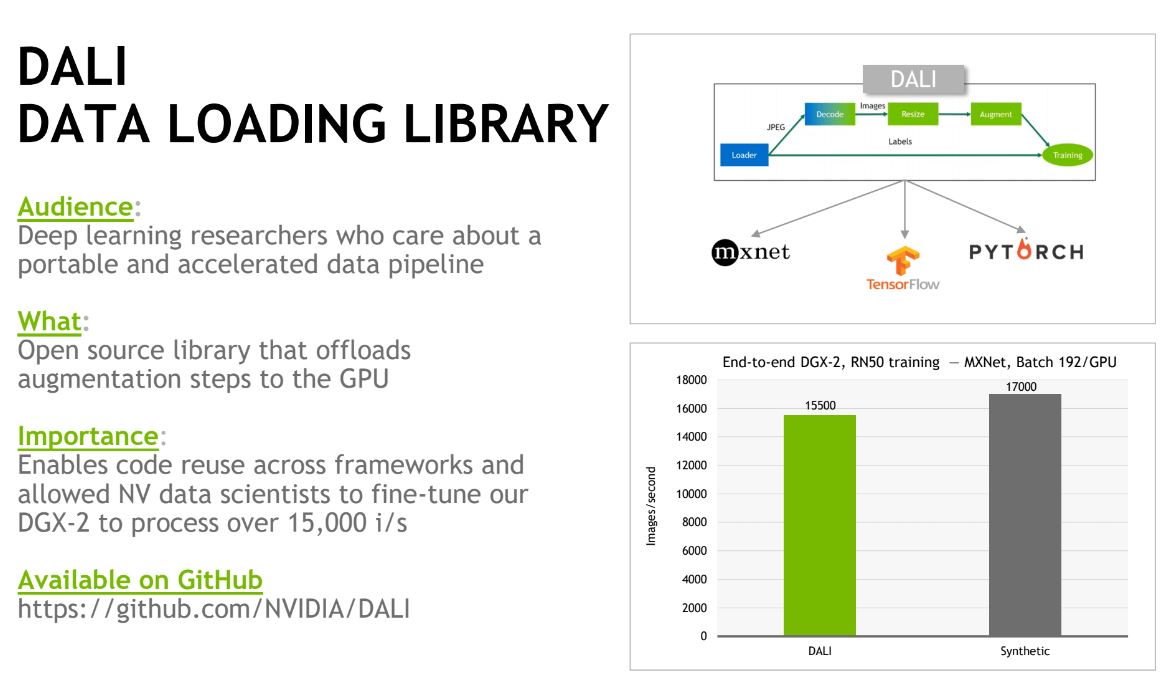

NVIDIA DALI Data Loading Library

NVIDIA DALI is an open source data loading library. DALI offloads data augmentation steps to the GPU to speed up the process.

While a model may be portable, that does not make your data pipeline portable. DALI is open source and can be extended across frameworks. This is what NVIDIA was using to show the DGX-2’s 15,000 images per second in ResNet-50 training.

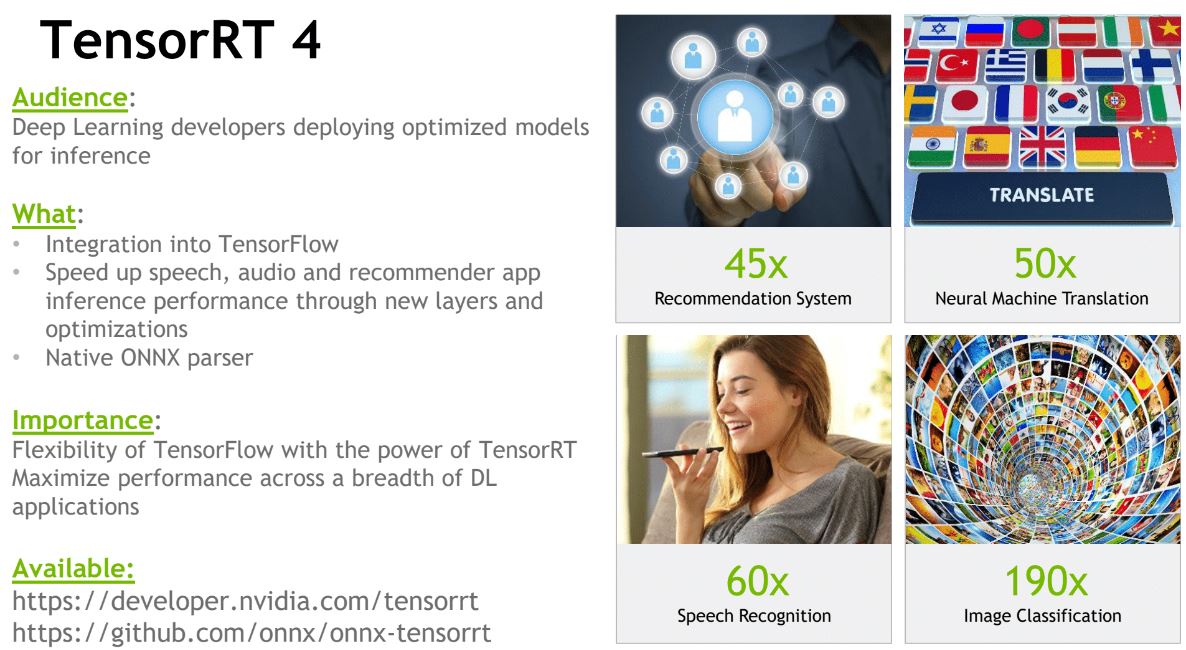

NVIDIA TensorRT 4 Available

NVIDIA TensorRT 4 was announced at GTC 2018, but it has only just become available. TensorRT 4 has a slew of new features such as INT8 and FP16 precision inference. The newest iteration is integrated into TensorFlow. In TensorBoard, you can see the TensorRT-optimized nodes.

Although the “big iron” training systems are impressive, major gains are also being made on the endpoints that will need to run inferencing. We are excited to see what else TensorRT 4 will bring by way of connected, intelligent endpoints.