Dell EMC PowerEdge MX Blades

If any section of this review is going to become obsolete quickly, we expect it to be this one. At present, there are two compute blade options for the PowerEdge MX. The Dell EMC PowerEdge MX740c is the dual-CPU compute sled that was in our test unit. We have also seen the Dell EMC PowerEdge MX840c quad-CPU compute sled in person.

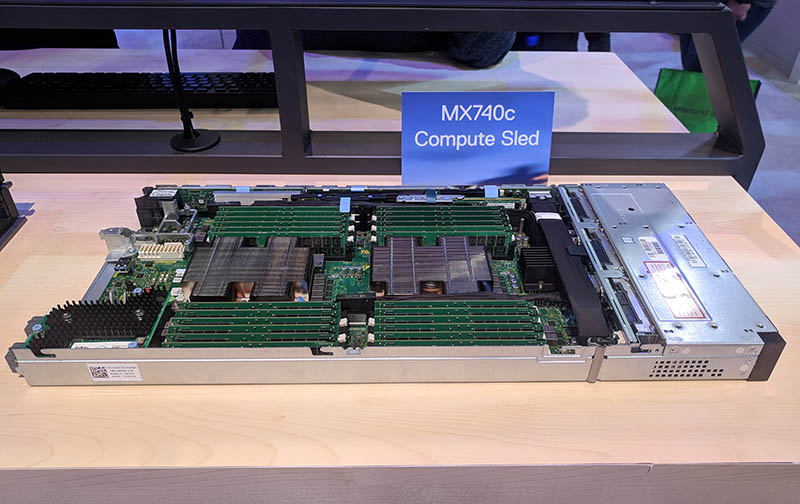

Dell EMC PowerEdge MX740c Compute Sled

The Dell EMC PowerEdge MX740c compute sled is a standard dual Intel Xeon Scalable compute node. Dell fits the maximum of 12 DIMMs per socket, which means the company can support both high-end CPUs and up to 1.5TB of RAM per socket, or 3TB of RAM per MX740c, with the Skylake-SP Intel Xeon generation. The twelve DIMM slots per socket matter today, but with the upcoming Cascade Lake generation and Intel Optane DC Persistent Memory launching in a few months, we expect to see these sleds support up to 6TB of Optane persistent memory in the DRAM channels.
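As a rough check on those capacity figures, here is a back-of-the-envelope sketch. It assumes 128GB LRDIMMs in every slot for the DRAM case (and CPU SKUs that support that much memory), and, for the Optane case, one 512GB module in each of the six memory channels per socket with a DRAM DIMM in the other slot. Those module sizes and that population scheme are our assumptions, not figures from Dell.

```python
# Assumptions (not from Dell): 128GB DRAM DIMMs, 512GB Optane DC PMem modules,
# and one Optane module per channel alongside one DRAM DIMM.
DIMM_SLOTS_PER_SOCKET = 12
CHANNELS_PER_SOCKET = 6      # Skylake-SP / Cascade Lake: 6 channels x 2 DIMMs per channel
SOCKETS_PER_MX740C = 2
DRAM_DIMM_GB = 128
OPTANE_DIMM_GB = 512


def dram_capacity_gb(sockets: int) -> int:
    """Capacity with every slot populated by a DRAM DIMM."""
    return sockets * DIMM_SLOTS_PER_SOCKET * DRAM_DIMM_GB


def optane_capacity_gb(sockets: int) -> int:
    """Optane capacity with one module per channel; the other slot keeps a DRAM DIMM."""
    return sockets * CHANNELS_PER_SOCKET * OPTANE_DIMM_GB


print(dram_capacity_gb(1))                      # 1536 (~1.5TB per socket)
print(dram_capacity_gb(SOCKETS_PER_MX740C))     # 3072 (~3TB per sled)
print(optane_capacity_gb(SOCKETS_PER_MX740C))   # 6144 (~6TB of Optane per sled)
```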

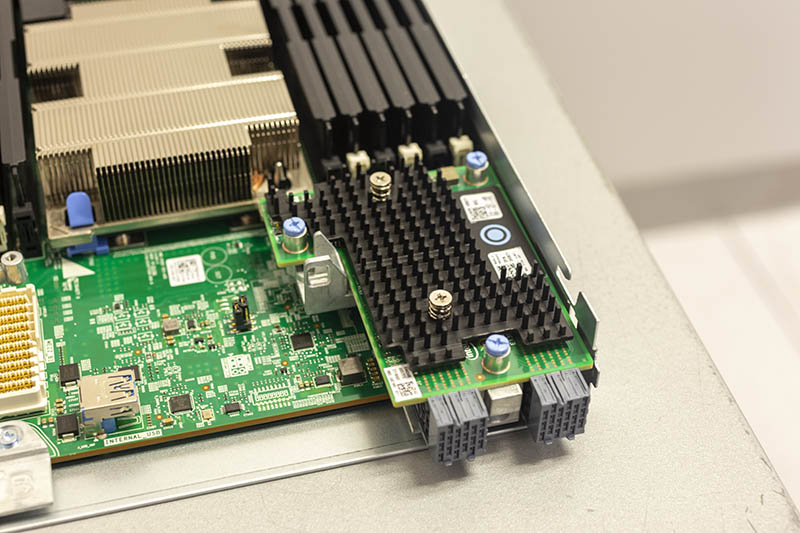

One of the exciting things (for us) is that the Dell EMC PowerEdge MX blades have a lot of familiar features. A good example is the Dell BOSS card. When we opened the node, we quickly spotted this fixture, which is familiar from current-generation PowerEdge servers.

A Dell EMC BOSS card uses two M.2 SATA SSDs to provide a boot solution. One can set the SSDs to mirrored mode to reduce the impact of an SSD failure, then install a boot OS or hypervisor on the redundant volume. For those accustomed to the Dell EMC BOSS in standard PowerEdge rack servers, the same feature is available in the PowerEdge MX, even within the smaller server footprint the form factor allows. The PowerEdge MX also has low-profile PERC cards and the ability to use PCIe cabling for SAS/SATA or NVMe front-panel storage on the compute nodes.
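To illustrate what mirrored mode buys you, here is a toy model of RAID 1 in Python. It is a conceptual sketch only, not how the BOSS controller is actually implemented: every write lands on both SSDs, and reads are served by whichever member is still healthy, so the boot volume survives a single SSD failure. The `Mirror` class and its methods are hypothetical names for illustration.

```python
class Mirror:
    """Toy RAID 1 model: writes go to every healthy member, reads come from any healthy member."""

    def __init__(self) -> None:
        self.members = [{}, {}]        # two SSDs, each mapping block number -> data
        self.failed = [False, False]

    def write(self, block: int, data: bytes) -> None:
        for i, disk in enumerate(self.members):
            if not self.failed[i]:
                disk[block] = data

    def read(self, block: int) -> bytes:
        for i, disk in enumerate(self.members):
            if not self.failed[i]:
                return disk[block]
        raise OSError("both mirror members have failed")

    def fail(self, index: int) -> None:
        """Simulate the loss of one SSD."""
        self.failed[index] = True


boot = Mirror()
boot.write(0, b"hypervisor bootloader")
boot.fail(0)            # first M.2 SSD dies
print(boot.read(0))     # b'hypervisor bootloader'
```

Losing the second SSD as well makes `read` raise, which is the point at which a mirrored boot device can no longer protect you.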

There are other distinctively PowerEdge features afoot. There is a USB 3.0 Type-A connector for those using embedded OSes or USB-based license keys. Dell EMC is even using the same custom LGA3647 cooling solution, with the blue clips, that we see in the PowerEdge rack server line.

On the rear of the sleds, one can see how Dell EMC enables a no-midplane design. Each node uses mezzanine cards to connect to the fabric I/O modules; you can see the 25GbE dual-port mezzanine card above. To circle back to the chassis side, these connectors plug directly into the fabric modules’ connectors.
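One way to picture the no-midplane layout is to enumerate the direct sled-to-module connections. The sketch below is hypothetical: it assumes eight single-width sled slots, fabrics A and B, dual-port 25GbE mezzanine cards, two I/O modules per fabric for redundancy, and that port N of each card lands on I/O module N of its fabric (A1/A2, B1/B2). It then totals the downstream bandwidth each I/O module would see.

```python
from collections import defaultdict

# Hypothetical topology assumptions, for illustration only.
SLED_SLOTS = range(1, 9)     # eight single-width slots
FABRICS = ("A", "B")
PORTS_PER_CARD = 2           # dual-port mezzanine card
PORT_SPEED_GBPS = 25


def links():
    """Yield (slot, fabric, port, io_module) for each direct mezzanine-to-IOM connection."""
    for slot in SLED_SLOTS:
        for fabric in FABRICS:
            for port in range(1, PORTS_PER_CARD + 1):
                yield slot, fabric, port, f"{fabric}{port}"


def bandwidth_per_iom() -> dict:
    """Sum the sled-facing bandwidth terminating on each I/O module."""
    totals = defaultdict(int)
    for _slot, _fabric, _port, iom in links():
        totals[iom] += PORT_SPEED_GBPS
    return dict(totals)


print(len(list(links())))     # 32 point-to-point links
print(bandwidth_per_iom())    # {'A1': 200, 'A2': 200, 'B1': 200, 'B2': 200}
```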

In-sled storage is configurable, and Dell EMC has a number of customization options for the MX740c.

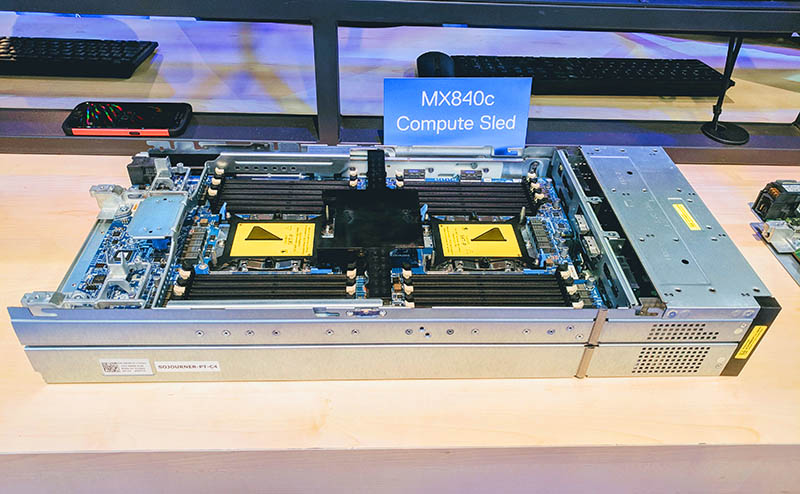

Dell EMC PowerEdge MX840c Compute Sled

The Dell EMC PowerEdge MX we reviewed had two MX740c nodes, but the company has another compute option. The Dell EMC PowerEdge MX840c compute sled is double-width, so only four fit in an MX7000 chassis. In exchange, each node supports twice as many processors and DIMMs: four processors and 48 DIMMs in total. We saw one of these sleds and wanted to mention it.
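Since the MX840c takes two slots, it can help to count what a given chassis mix adds up to. This sketch assumes eight single-width slots per MX7000 and uses the per-sled figures from this section (2 CPUs and 24 DIMMs for the MX740c, 4 CPUs and 48 DIMMs for the MX840c). It ignores storage sleds, which would also consume slots.

```python
CHASSIS_SLOTS = 8  # assumed single-width slots in one MX7000

SLEDS = {
    "MX740c": {"slots": 1, "cpus": 2, "dimms": 24},
    "MX840c": {"slots": 2, "cpus": 4, "dimms": 48},
}


def chassis_totals(config: dict) -> dict:
    """Sum slots, CPUs and DIMMs for a {model: count} mix; reject mixes that do not fit."""
    totals = {"slots": 0, "cpus": 0, "dimms": 0}
    for model, count in config.items():
        for key in totals:
            totals[key] += SLEDS[model][key] * count
    if totals["slots"] > CHASSIS_SLOTS:
        raise ValueError(f"mix needs {totals['slots']} slots; chassis has {CHASSIS_SLOTS}")
    return totals


print(chassis_totals({"MX740c": 8}))               # {'slots': 8, 'cpus': 16, 'dimms': 192}
print(chassis_totals({"MX840c": 4}))               # {'slots': 8, 'cpus': 16, 'dimms': 192}
print(chassis_totals({"MX740c": 2, "MX840c": 3}))  # {'slots': 8, 'cpus': 16, 'dimms': 192}
```

Under these assumptions, a full chassis comes to 16 CPUs and 192 DIMMs whichever sled you choose; the MX840c trades node count for larger individual nodes.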

You may be looking at the picture above and the sign and thinking, “Good sir, I see two CPU sockets. You are going crazy.” In fact, the black mechanism between the two visible sockets covers a connection through the PCB (for Intel UPI and such) that mates the visible top motherboard with a second dual-socket motherboard below.

This design is great for keeping the system compact, although it adds some time when servicing DIMMs and CPUs. With four quad-socket servers in 7U, this is actually a high-density play. Most quad-socket Intel Xeon Scalable servers are at least 2U in height, which means four of them would take up 8U, or 1U more than the PowerEdge MX7000. On top of that, the PowerEdge MX adds fabric directly into that footprint, increasing density further.
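The density comparison is simple arithmetic; this sketch just makes it explicit. It assumes the 2U height given above for a typical quad-socket rack server and does not count the switching that the MX7000 also integrates into its 7U.

```python
def rack_units(servers: int, height_u: int) -> int:
    """Total rack units for a group of identical servers."""
    return servers * height_u


def sockets_per_u(sockets: int, height_u: int) -> float:
    """CPU sockets per rack unit."""
    return sockets / height_u


SERVERS = 4
SOCKETS = SERVERS * 4                 # four quad-socket servers
MX7000_U = 7                          # one MX7000 holding four MX840c sleds
RACK_4S_U = rack_units(SERVERS, 2)    # four 2U quad-socket rack servers

print(RACK_4S_U - MX7000_U)                          # 1 (U saved by the MX7000)
print(round(sockets_per_u(SOCKETS, MX7000_U), 2))    # 2.29
print(sockets_per_u(SOCKETS, RACK_4S_U))             # 2.0
```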

Why This Will Expand to More Blade Types

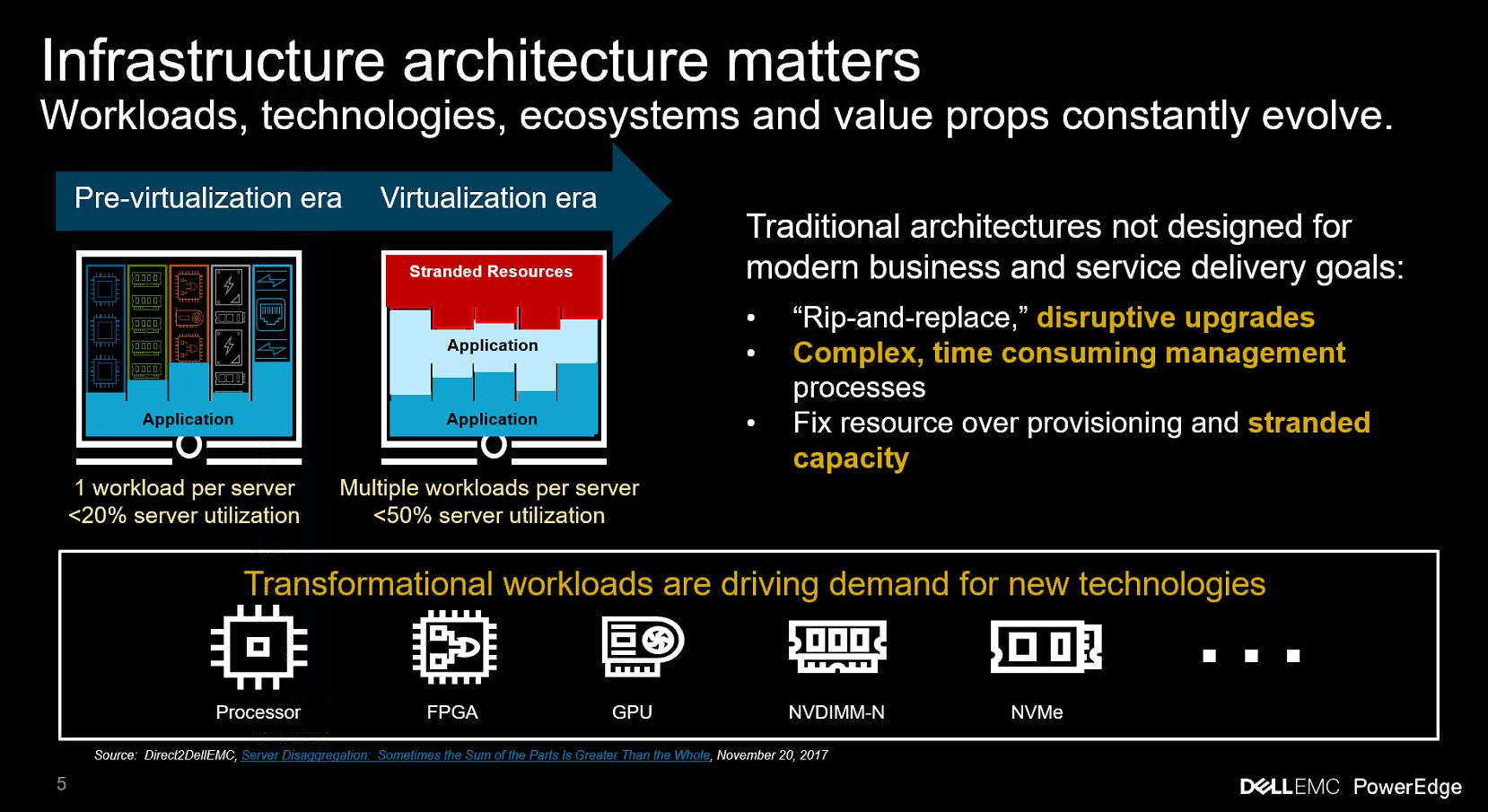

While today there are two compute nodes, the design of the Dell EMC PowerEdge MX is one that begs for additional types. At the PowerEdge MX launch, here is a slide that the company showed.

One can clearly see the GPU and FPGA solutions called out and on Dell EMC’s radar. The no-midplane design lets the company add different types of blades easily; there is absolutely no reason the company could not build FPGA blades and GPU compute blades today. Further out, if one believes Gen-Z will be a next-generation fabric, the PowerEdge MX is designed to accommodate a memory-centric compute model.

Next, we are going to look at the PowerEdge MX fabric as that will enable Dell EMC’s flexibility in utilizing differentiated types of blades.

Ya’ll are on some next level reviews. I was expecting a paragraph or two and instead got 7 pages and a zillion pictures — ok I didn’t count.

I think Dell needs to release more modules. AMD EPYC support? I’m sure they can. Are they going to have Cascade Lake-AP? I’m sure Ice Lake right?

“We now call the PowerEdge MX the ‘WowerEdge.’”

Please don’t.

Can you guys do a piece on Gen-Z? I’d like to know more about that.

It’s funny. I’d seen the PowerEdge MX design, but I hadn’t seen how it works. The connector system has another profound impact you’re overlooking. There’s nothing stopping Dell from introducing a new edge connector in that footprint that can carry more data. It can also design motherboards with high-density x16 connectors and build in a PCIe 4.0 fabric next year.

Really thorough review @STH

I’d like to see a roadmap of at least 2019 fabric options. InfiniBand? They’ll need that for parity with HPE. It’s early in the cycle and I’d want to see this review in a year.

Of course STH finds fabric modules that aren’t on the product page… I thought y’all were crazy, but then you have a picture of them. Only here.

Give me EPYC blades, Dell!

Fabric B cannot be FC; it has to be fabric C. A and B are networking (FCoE) only.

“Each fabric can be different, for example, one can have fabric A be 25GbE while fabric B is fibre channel. Since the fabric cards are dual port, each fabric can have two I/O modules for redundancy (four in total.)”

What a GREAT REVIEW as usual, Patrick. Of course, ONLY someone who has seen/used all the cool HW that STH/pk has over the years would give this system a 9.6! (and not a 10!) ha. Still, my favorite STH review of all time is the Dell 740xd.

btw, i think you may be surprised how many views/likes you would get on that raw 37min screen capture you made, posted to your sth youtube channel.

I know I, for one, would watch all 37 minutes of it! It’s a screen capture/video that many of us only dream of seeing/working on. Thanks again pk, 1st class as always.

You didn’t mention that this design does not provide nearly the density of the M1000e: going from 16 blades in 10 RU to 8 blades in 7 RU… to get 16 blades I would now be using 14 RU. Not to mention that 40Gb links have been basically standard on the M1000e for what, 8 years? And this is 25 as the default? Come ON!

Hey Salty you misunderstood that. The external uplinks have 4x25Gb / 100Gb at least for each connector. Fabric links have even 200Gb for each connector (most of them are these) on the switches. ATM this is the fastest shit out there.

How are the drives “hot-swappable” if the storage sleds have to be pulled out? Am I missing something?

Higher-end gear has internal cable management that allows one to pull out sleds and still maintain drive connectivity. On compute nodes, there are also traditional front hot-swap bays.

400Gbps / 25Gbps = 8 is a mistake. When NICs with dual ports are used, only one uplink from the MX7116n fabric extender is connected: 8x25G = 200G. The second 2x100G is used only for quad-port NICs.

Good review, Patrick! I was just wondering if that Fabric C, the SAS sled that you have on the test unit, can be used to connect external devices such as a JBOD via SAS cables? It’s not clear on the datasheets and other information provided by Dell if that’s possible, and the Dell reps don’t seem to be very knowledgeable on this either. Thanks!

I don’t have more money. Can I use 1 switch MX508n and 1 switch MXG610s?