Dell EMC PowerEdge MX7000 Chassis

The base of the entire system is the Dell EMC PowerEdge MX7000 chassis. This is a 7U chassis, which is smaller than some other units on the market. The chassis weighs around 105 lbs empty and can almost quadruple that when filled with gear.

Dell EMC PowerEdge MX7000 Overview

We first wanted to show the front and rear of the chassis to give some sense of what our readers are looking at. After working with these systems for a few minutes, the major functions become obvious, but if you are new to the PowerEdge MX, you may not immediately recognize the components.

You can see a layout with up to eight single-width or four dual-width blades running vertically. There are four fans running through the middle vertically which help cool the rear I/O. The six hot-swap power supplies sit at the bottom of the chassis.
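As a rough illustration of the slot layout described above, here is a minimal sketch of a fit check. It assumes a dual-width blade simply consumes two single-width slots and that single- and dual-width blades can be mixed. The function name and the mixing rule are our own assumptions for illustration, not something Dell EMC documents here, and the sketch ignores any slot-alignment rules.

```python
# Hypothetical helper: checks whether a blade mix fits the front of the chassis.
# Assumption: a dual-width blade occupies exactly two single-width slots.
SINGLE_WIDTH_SLOTS = 8  # "up to eight single-width or four dual-width blades"


def fits_in_chassis(single_width: int, dual_width: int) -> bool:
    """Return True if the requested blade mix fits in the eight front slots."""
    if single_width < 0 or dual_width < 0:
        raise ValueError("blade counts must be non-negative")
    return single_width + 2 * dual_width <= SINGLE_WIDTH_SLOTS


if __name__ == "__main__":
    print(fits_in_chassis(8, 0))  # eight single-width blades
    print(fits_in_chassis(0, 4))  # four dual-width blades
    print(fits_in_chassis(6, 2))  # would need ten slots
```

Under these assumptions, the two configurations named in the text both fit, while six single-width plus two dual-width blades would not.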

Here is the rear of a PowerEdge MX out of a rack.

For a basic overview, the top two horizontal rows are networking and interconnect fabric, as is the row below the fans. The horizontal fan row is what cools the blades while they are in operation. The next two slots are for storage fabric. Finally, the bottom row has the power connectors on the outside with up to two chassis management modules.

Dell EMC PowerEdge MX7000 No Midplane

Perhaps the most touted feature of the PowerEdge MX is the lack of a midplane. Midplanes in blade servers are features that nobody thinks about when they work. When a midplane fails, it usually takes down your entire blade chassis, or a good portion thereof. Midplanes are made of PCB and have signaling constraints based on how they are designed, which means that over generations, you may need to replace a midplane just to use faster networking or storage. With the PowerEdge MX, Dell EMC did away with the midplane in an extraordinarily forward-looking design direction.

As it turns out, the absence of a midplane in the middle of a large chassis is very difficult to photograph. We are going to show some of the direct connections in later sections, but we wanted to give some visual representation of this here.

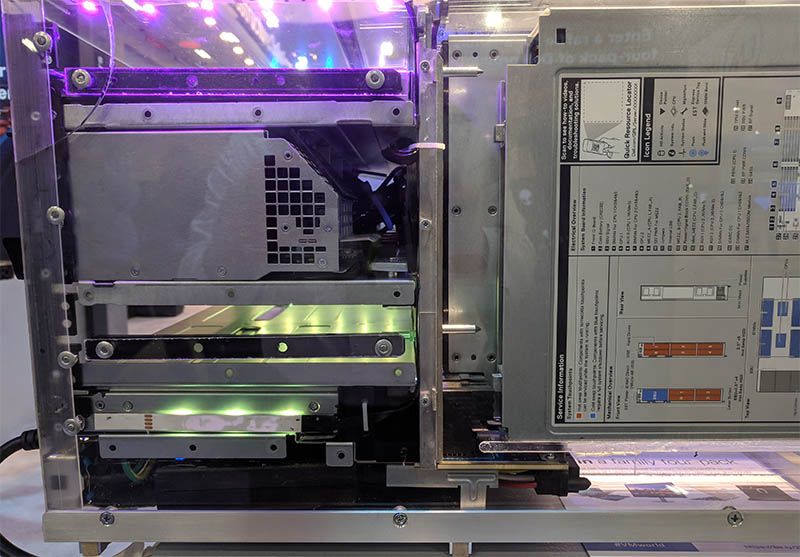

These first two photos are of a running acrylic PowerEdge MX model that Dell EMC displays at shows like VMworld. The benefit of using this depiction is that it is much easier to see than the middle of a chassis in a dimly lit cold aisle. As you can see, there are guide pins and grooves for components to align, but the components do not plug into a big green PCB midplane. Instead, they have direct connections. For example, blades directly connect to fabric.

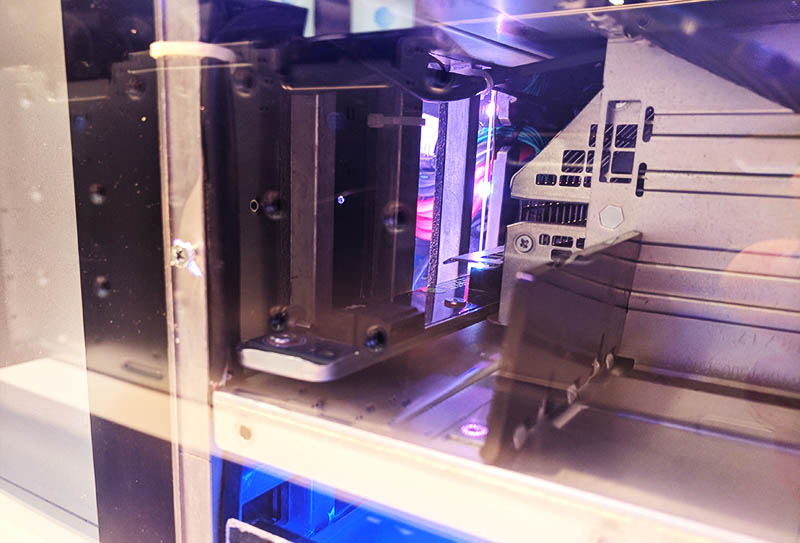

Here is a shot looking down a blade slot. At the rear, you can see the two connectors near the top which directly attach to the switch fabric modules at the rear of the chassis. There is no PCB midplane here. One can then see the fan spinning. This is followed by connector spaces for additional fabric modules and storage at the bottom.

In a traditional blade server, instead of just seeing connectors that are parts of fabric modules, you would instead see a shared PCB at the rear.

We are going to talk more about why this is important later in our review. The short answer is that there are two main benefits. First, it isolates potential failures, increasing the reliability of the system. Second, it allows storage and networking fabric to be upgraded without taking the chassis down for midplane replacement. Dell EMC plans to leverage both of these features.

Dell EMC PowerEdge MX7000 Power and Cooling

If you are looking at starting a green IT initiative, chassis servers like the Dell EMC PowerEdge MX make a lot of sense. Compute options are likely to change on a quarterly basis, storage will change perhaps less quickly, and networking will continue its march toward faster Ethernet, InfiniBand, and next-gen interconnects like Gen-Z. Other items change far less often. Rack rails, sheet metal, fans, and power supplies all change at a much slower pace.

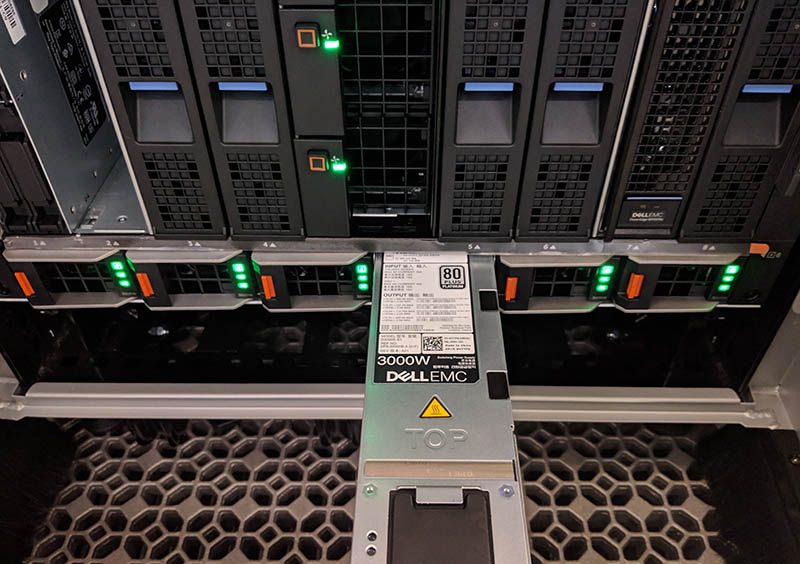

During our time with the Dell EMC PowerEdge MX7000 chassis, we indeed pulled power supplies and fans to validate it all worked as expected. The six power supplies are 80Plus Platinum rated 3kW units, which means that the chassis has 18kW worth of high-efficiency power supplies in its current form. The six units mean that the chassis can survive an A or B side power failure and maintain operation.
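To make the power arithmetic concrete, here is a minimal sketch of the capacity math. The six 3kW supplies come from the text. The even three-and-three split between the A and B feeds is our assumption for illustration, as is the helper name.

```python
# Capacity math for the chassis power supplies.
# From the text: six PSUs at 3 kW each.
# Assumption (not stated in the review): PSUs are split evenly across A and B feeds.
PSU_COUNT = 6
PSU_WATTS = 3000


def available_watts(psu_count: int, psu_watts: int, failed: int = 0) -> int:
    """Return nameplate capacity in watts with `failed` supplies offline."""
    if not 0 <= failed <= psu_count:
        raise ValueError("failed must be between 0 and psu_count")
    return (psu_count - failed) * psu_watts


total_watts = available_watts(PSU_COUNT, PSU_WATTS)
# Losing one feed takes out half of the supplies under the even-split assumption.
one_feed_lost_watts = available_watts(PSU_COUNT, PSU_WATTS, failed=PSU_COUNT // 2)

if __name__ == "__main__":
    print(f"All supplies online: {total_watts / 1000:.0f} kW")
    print(f"One feed lost:       {one_feed_lost_watts / 1000:.0f} kW")
```

Under that assumption, the chassis would have 18kW of nameplate capacity with everything online and 9kW remaining after losing one feed. These are nameplate figures, not measured draw.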

We took our pulling of random components to a new level, removing front fans and power supplies simultaneously.

The fans may have a small front profile, but they are deep. After the simple press of a button, a long, gentle pull removes them from the chassis.

We even removed the rear fan as well just to see what would happen.

As one would expect, the Dell EMC PowerEdge MX handled this without a hiccup. Fan speeds rose. You certainly want to wear ear protection when working in racks of these and swapping components, even for short periods of time.

Dell EMC tells us they overdesigned the PowerEdge MX power and cooling systems to be able to handle the foreseeable future of server architecture. For a green IT initiative, the ability to only cycle the bare necessities with each generation means that less waste will be created. We like this direction.

Still A Modern PowerEdge Chassis

The Dell EMC PowerEdge MX7000 is still a thoroughly modern PowerEdge chassis. The PowerEdge MX7000 gets an LCD touch screen that has a few major hands-on management functions like alerts and information.

If you are using a server like the Dell EMC PowerEdge R640 or PowerEdge R740xd, you may have used Dell EMC’s Quick Sync 2 module, which allows pairing a chassis to a mobile device directly. On the PowerEdge MX7000, the feature works just like it does on a standard PowerEdge rack server. This is an optional feature, but it is one that some data centers will adopt. As we are going to see in the management section, Dell EMC has gone to great lengths to ensure that the PowerEdge MX management and features will be familiar to existing users.

Ya’ll are on some next level reviews. I was expecting a paragraph or two and instead got 7 pages and a zillion pictures — ok I didn’t count.

I think Dell needs to release more modules. AMD EPYC support? I’m sure they can. Are they going to have Cascade Lake-AP? I’m sure Ice Lake right?

“we now call the PowerEdge MX the “WowerEdge.”

Please don’t.

Can you guys do a piece on Gen-Z? I’d like to know more about that.

It’s funny. I’d seen the PowerEdge MX design, but I hadn’t seen how it works. The connector system has another profound impact you’re overlooking. There’s nothing stopping Dell from introducing a new edge connector in that footprint that can carry more data. It can also design motherboards with high-density x16 connectors and build-in a PCIe 4.0 fabric next year.

Really thorough review @STH

I’d like to see a roadmap of at least 2019 fabric options. Infiniband? They’ll need that for parity with HPE. It’s early in the cycle and I’d want to see this review in a year.

Of course STH finds fabric modules that aren’t on the product page… I thought ya’ll were crazy, but then you have a picture of them. Only here

Give me EPYC blades, Dell!

Fabric B cannot be FC, it has to be fabric C, A and B are networking (FCOE) only.

“Each fabric can be different, for example, one can have fabric A be 25GbE while fabric B is fibre channel. Since the fabric cards are dual port, each fabric can have two I/O modules for redundancy (four in total.)”

what a GREAT REVIEW as usual patrick. Ofcourse, ONLY someone who has seen/used the cool HW that STH/pk over the years would give this system a 9.6! (and not a 10!) ha. Still my favorite STH review of all time is the dell 740xd.

btw, i think you may be surprised how many views/likes you would get on that raw 37min screen capture you made, posted to your sth youtube channel.

I know i for one would watch all 37min of it! Its a screen capture/video that many of us only dream of seeing/working on. Thanks again pk, 1st class as always.

you didnt mention that this design does not provide nearly the density of the m1000e. going from 16 blades in 10 ru to 8 blades in 7ru… to get 16 blades I would now be using 14 RU. Not to mention that 40GB links have been basically standard on the m1000e for what 8 years? and this is 25 as the default? Come ON!

Hey Salty you misunderstood that. The external uplinks have 4x25Gb / 100Gb at least for each connector. Fabric links have even 200Gb for each connector (most of them are these) on the switches. ATM this is the fastest shit out there.

How are the drives “how-swappable” if the storage sleds have to be pulled out? Am I missing something?

Higher-end gear has internal cable management that allows one to pull out sleds and still maintain drive connectivity. On compute nodes, there are also traditional front hot-swap bays.

400Gbps/ 25Gbps = 8 is a mistake. When NIC with dual ports are used, only one uplink from MX7116n fabric extender is connected 8x25G = 200G. The second 2x100G is used only for Quad port NIC.

Good review, Patrick! I was just wondering if that Fabric C, the SAS sled that you have on the test unit, can be used to connect external devices such as a JBOD via SAS cables? It’s not clear on the datasheets and other information provided by Dell if that’s possible, and the Dell reps don’t seem to be very knowledgeable on this either. Thanks!

I don’t have more money. Can I use 1 switch MX508n and 1 switch MXG610s?