Dell EMC PowerEdge MX Management

Conceptually, the Dell EMC PowerEdge MX is managed at two primary levels, at a minimum. The first is the chassis level, where one manages the overall health of the PowerEdge MX’s major components. The second is the individual component level.

The PowerEdge MX we reviewed had two chassis management modules that act as a redundant gateway into the system. These modules allow one to manage the PowerEdge MX as a whole, but also to delve into individual components.

We recorded video of everything we wanted to share while trying out the PowerEdge MX management interfaces, with the thought that we could take screenshots and show off the functionality. We ended up with over 37 minutes of screen capture and a feeling that we were still only scratching the surface. Instead of a management overview the size of Tolstoy’s War and Peace, we are going to look at key features and examples from both chassis-level management and individual component-level management.

Dell EMC PowerEdge MX Chassis Management Example

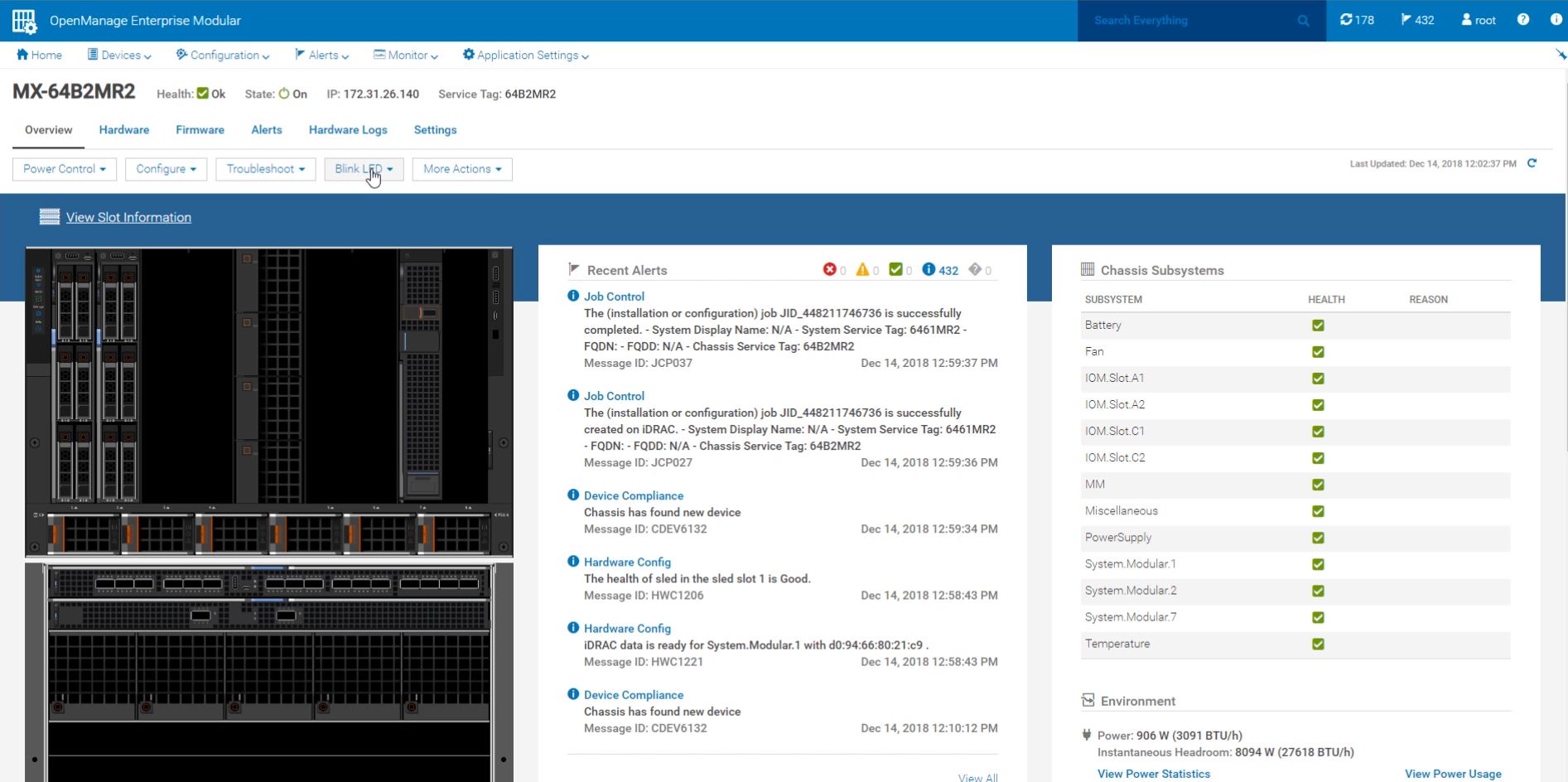

At the top level, we have the overall Dell EMC PowerEdge MX chassis management. From the dashboard, we can see what components are installed. We can see their health. We can see management IPs, service tags, alerts. From here, one can click on relevant components for a drill down and to complete specific tasks.

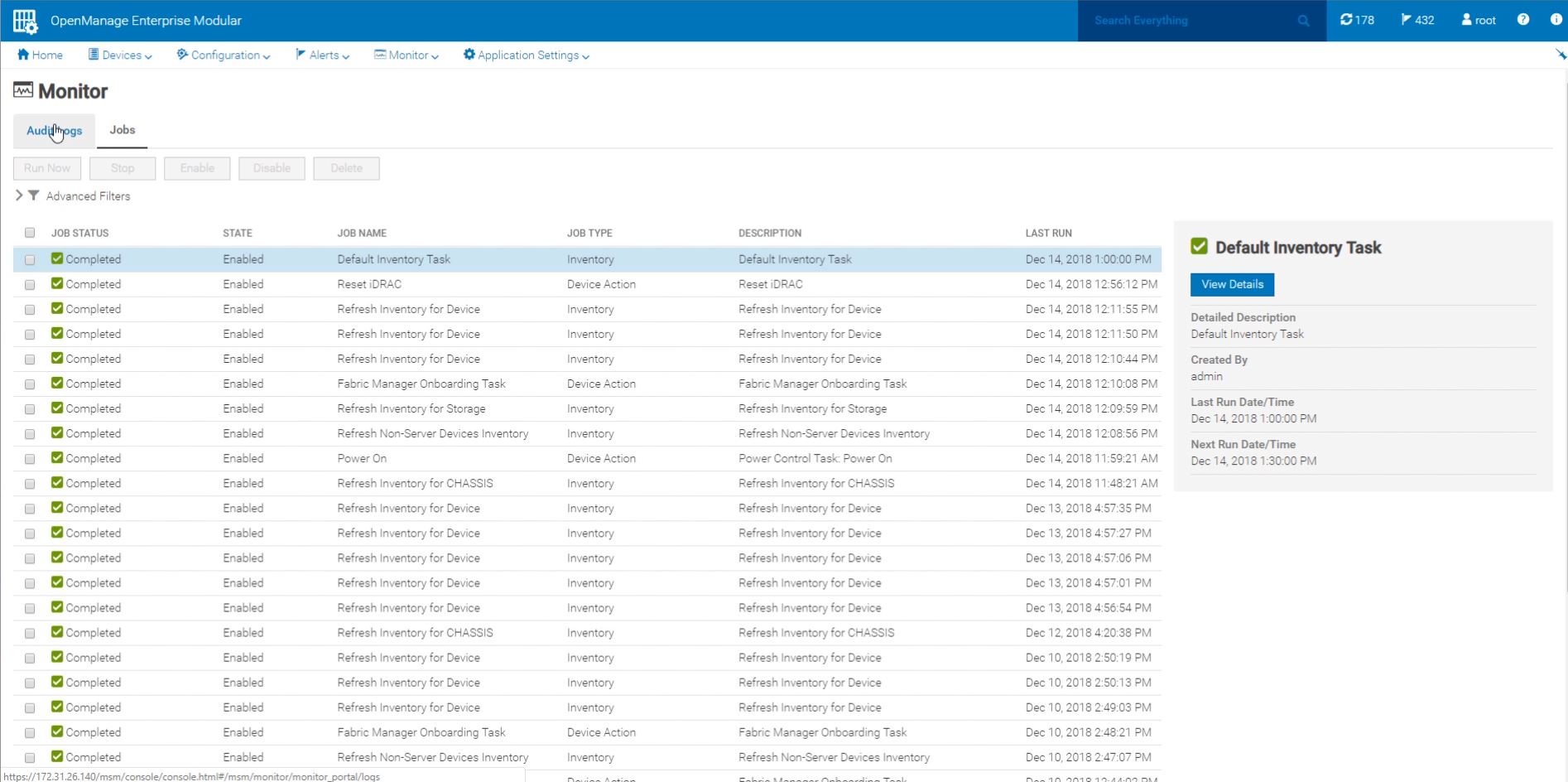

One example is that one may want to check system logs to see what jobs are running and their status. This can be done at the chassis level from either chassis management module.

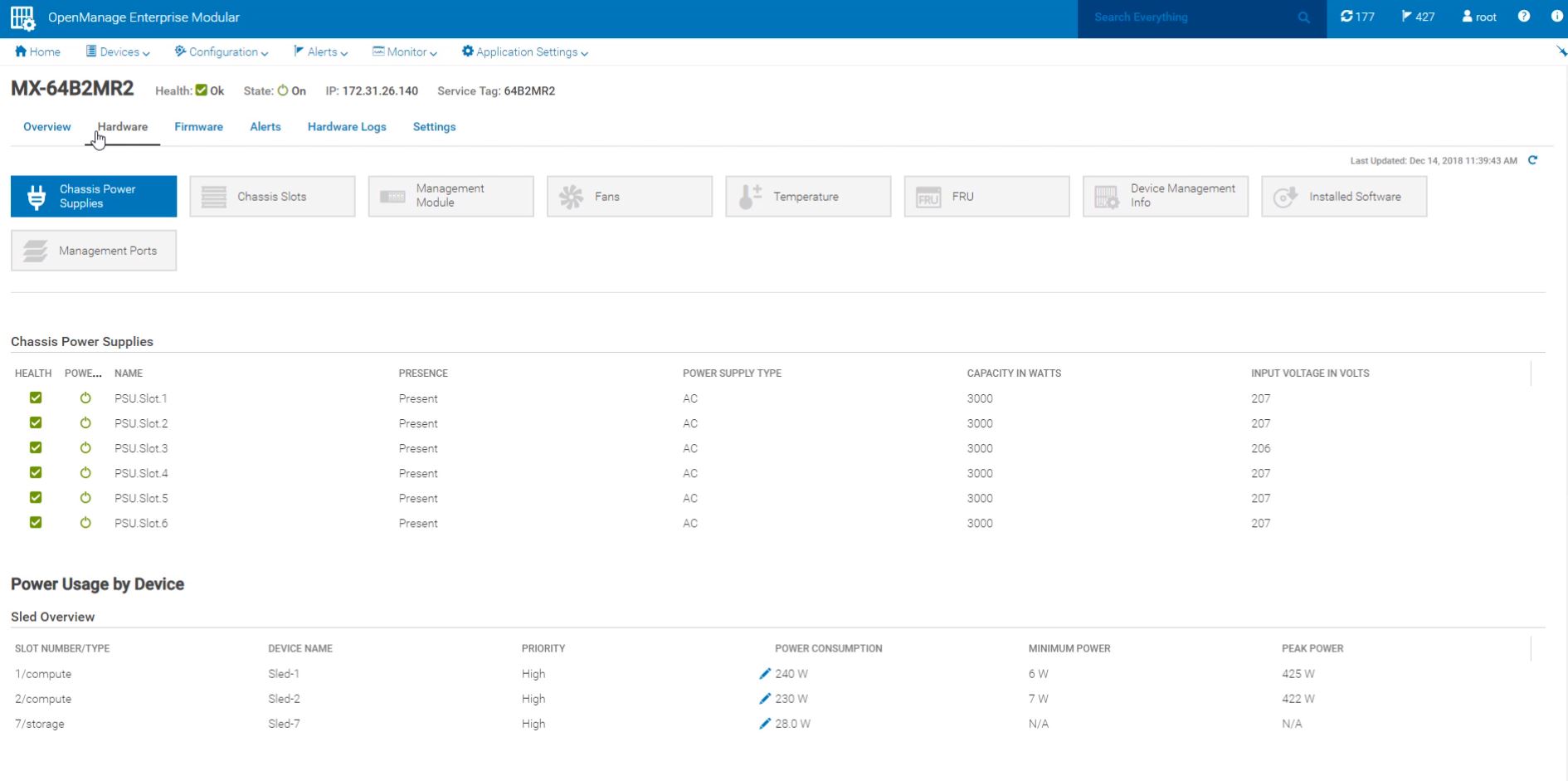

One can drill down into individual components or subsystems. For example, one may want to see the status of the power supplies and see peak power for planning purposes. The PowerEdge MX allows one to drill down into individual subsystems like this. Should a failure occur, one can diagnose directly from the chassis dashboard.
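To make the planning use case concrete, here is a minimal sketch of the headroom arithmetic one might do with a peak power reading taken from the power supply view. It is not part of the Dell EMC tooling. The `PowerSupply` class, the function names, the 3000 W capacity, the 4200 W peak, and the number of supplies held in reserve are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class PowerSupply:
    slot: str            # hypothetical label, e.g. "PSU1"
    capacity_watts: int  # assumed nameplate rating
    healthy: bool = True


def usable_capacity(psus, redundant_spares):
    """Watts available if `redundant_spares` healthy supplies are held in reserve."""
    healthy = sorted((p.capacity_watts for p in psus if p.healthy), reverse=True)
    # Reserve the largest units first so the estimate stays conservative.
    return sum(healthy[redundant_spares:])


def headroom_watts(psus, peak_watts, redundant_spares=1):
    """Remaining budget after subtracting the observed peak draw."""
    return usable_capacity(psus, redundant_spares) - peak_watts


# Illustrative numbers only: six equal supplies, three held in reserve.
psus = [PowerSupply(f"PSU{i}", 3000) for i in range(1, 7)]
print(headroom_watts(psus, peak_watts=4200, redundant_spares=3))
```

A negative result would mean the observed peak no longer fits within the redundancy policy assumed here.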

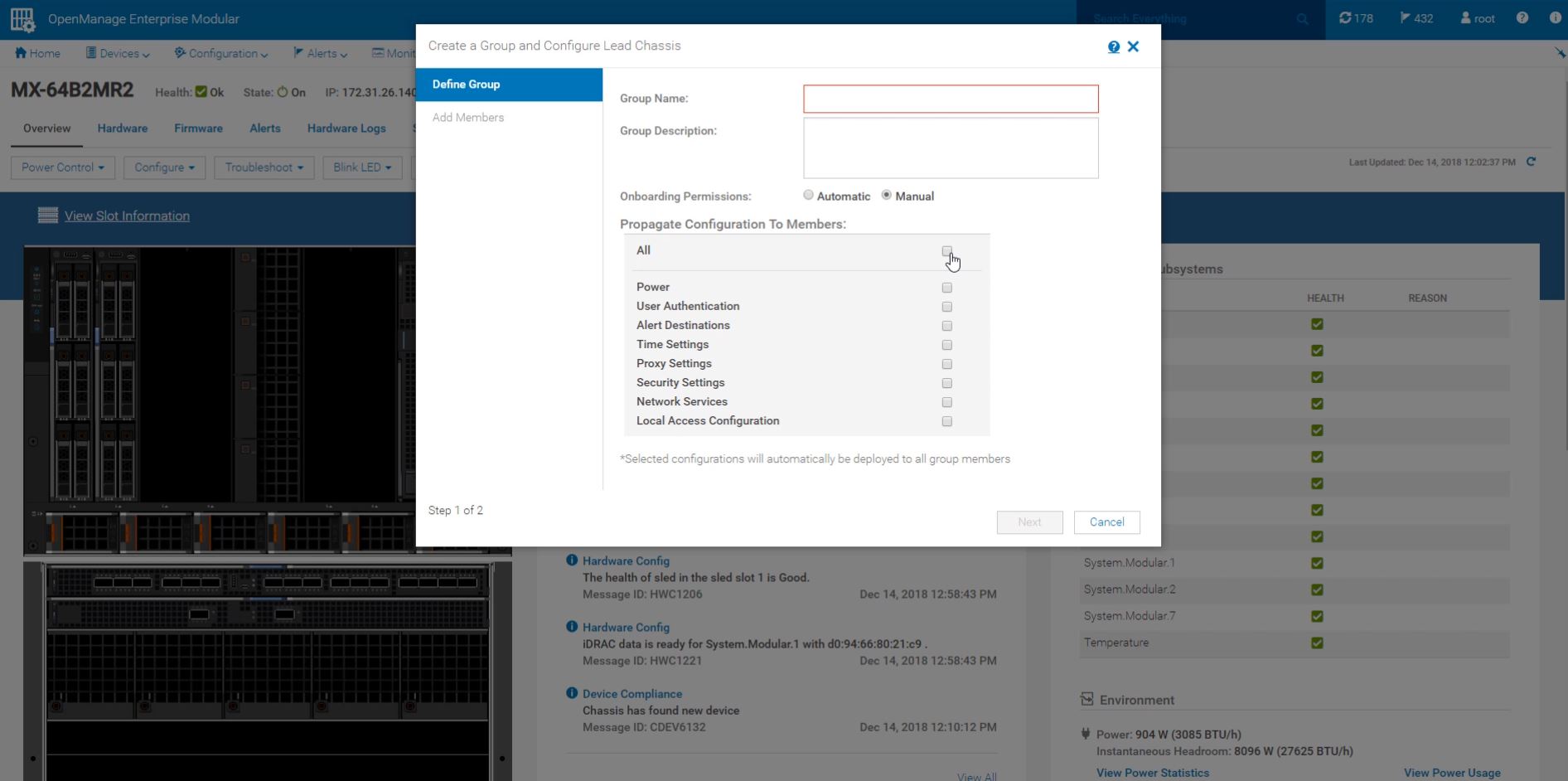

While the PowerEdge MX is a great standalone system, the power of the solution is in clusters of MX7000 chassis. One can create groups of PowerEdge MX chassis so that one can manage them at an aggregated level.

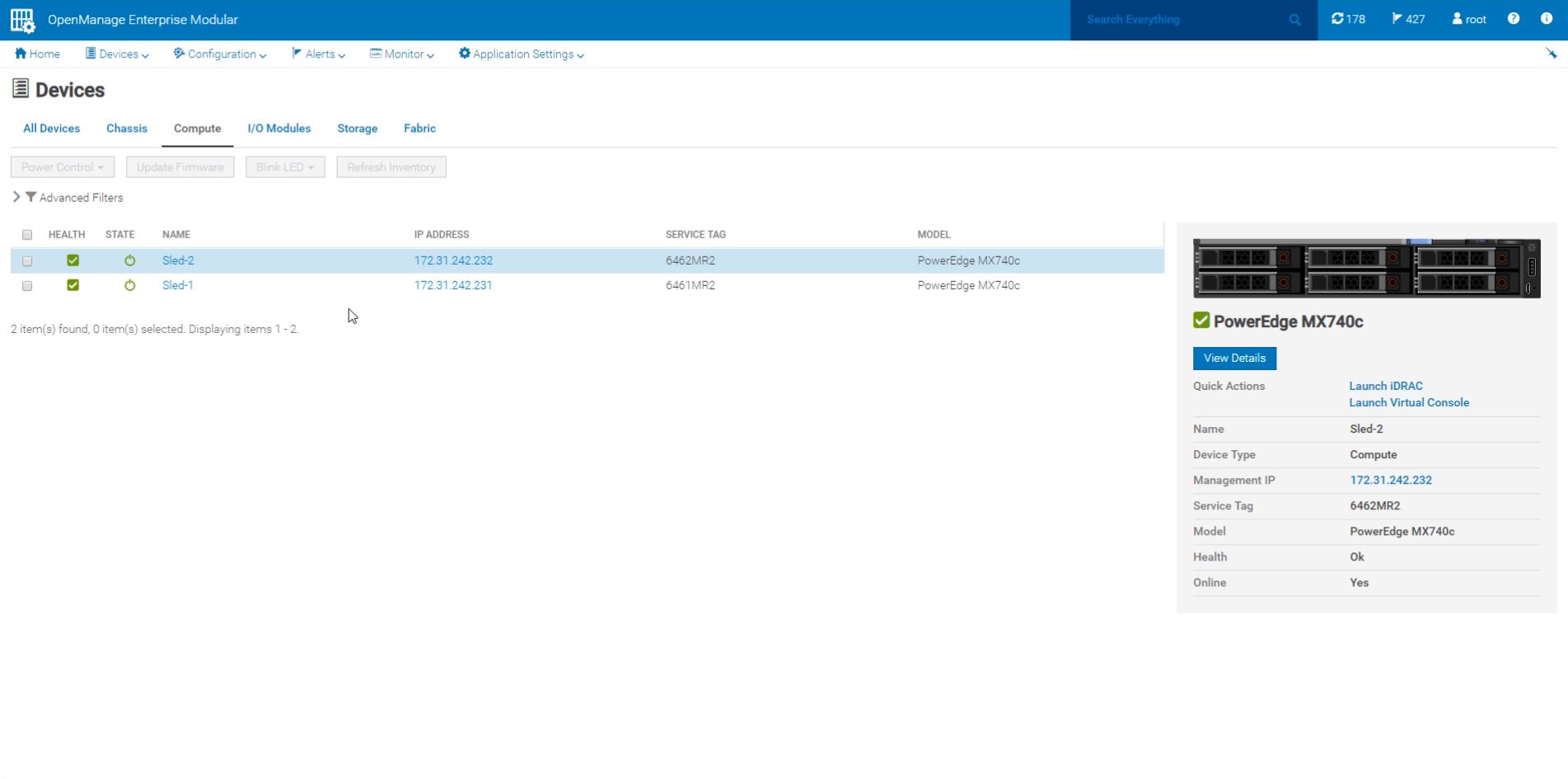

As you can see, we are using Dell EMC OpenManage Enterprise Modular here. This is a management solution for the PowerEdge MX that has features designed for multi-node modular chassis. There is a ton of functionality here. We suggest requesting a demo if you want to see it in action.

Beyond the high-level view, one can drill down into components like compute blades, fabric, and storage. We are going to give an example of each of these in turn.

Dell EMC PowerEdge MX Compute Blade Management Example

From the chassis level, one can inventory the blades that are installed into each blade slot. There is basic information that flows up from the blade to the chassis management interface. This is handy if you just want to inventory a chassis and see what is in it years after the nodes are deployed.

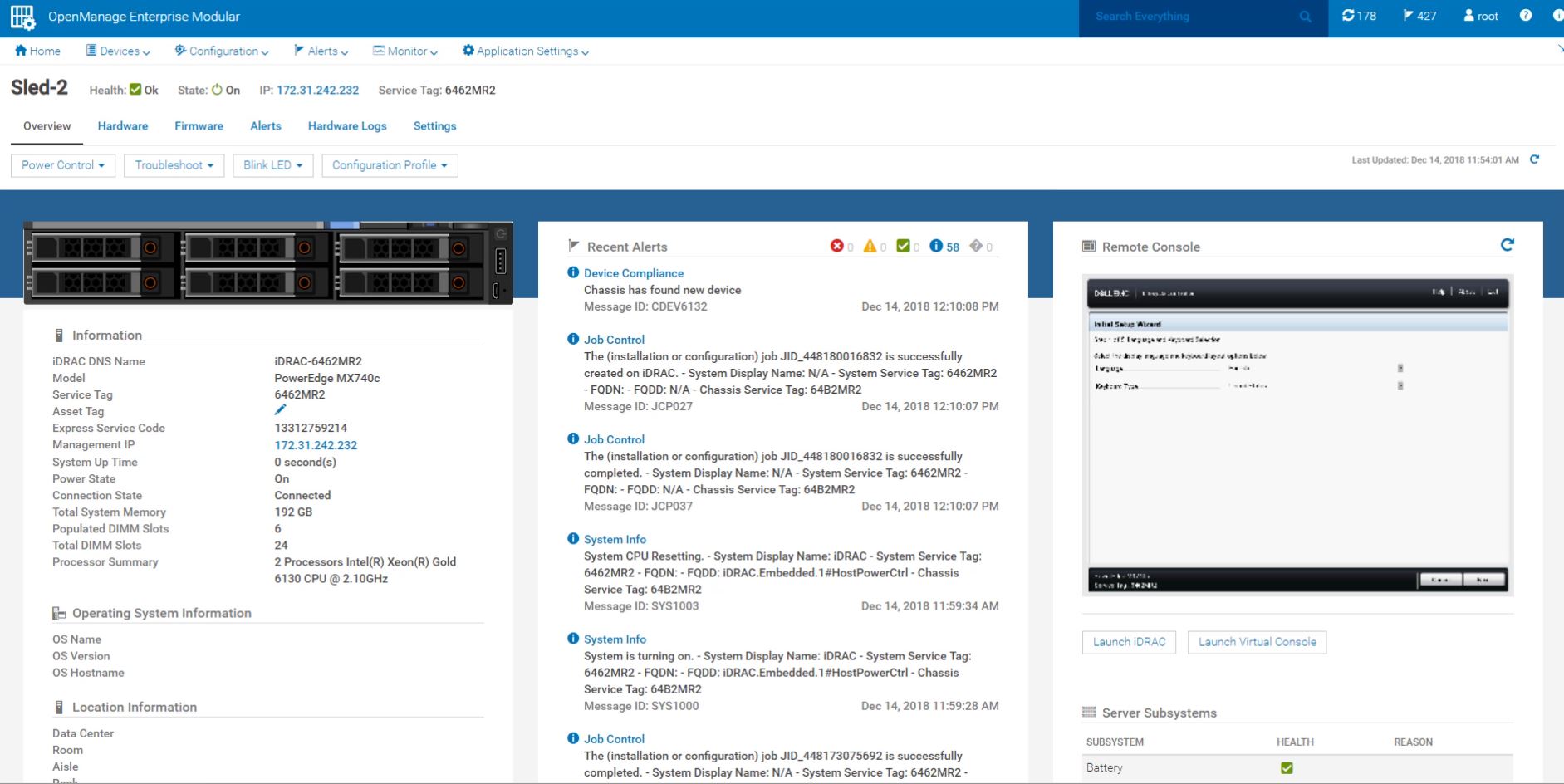

Drilling down into our Dell EMC PowerEdge MX740c compute node, we can see a dashboard with status, configuration information, alerts, logs, and settings. One can even launch a remote console directly from the OpenManage Enterprise Modular chassis-level management solution.

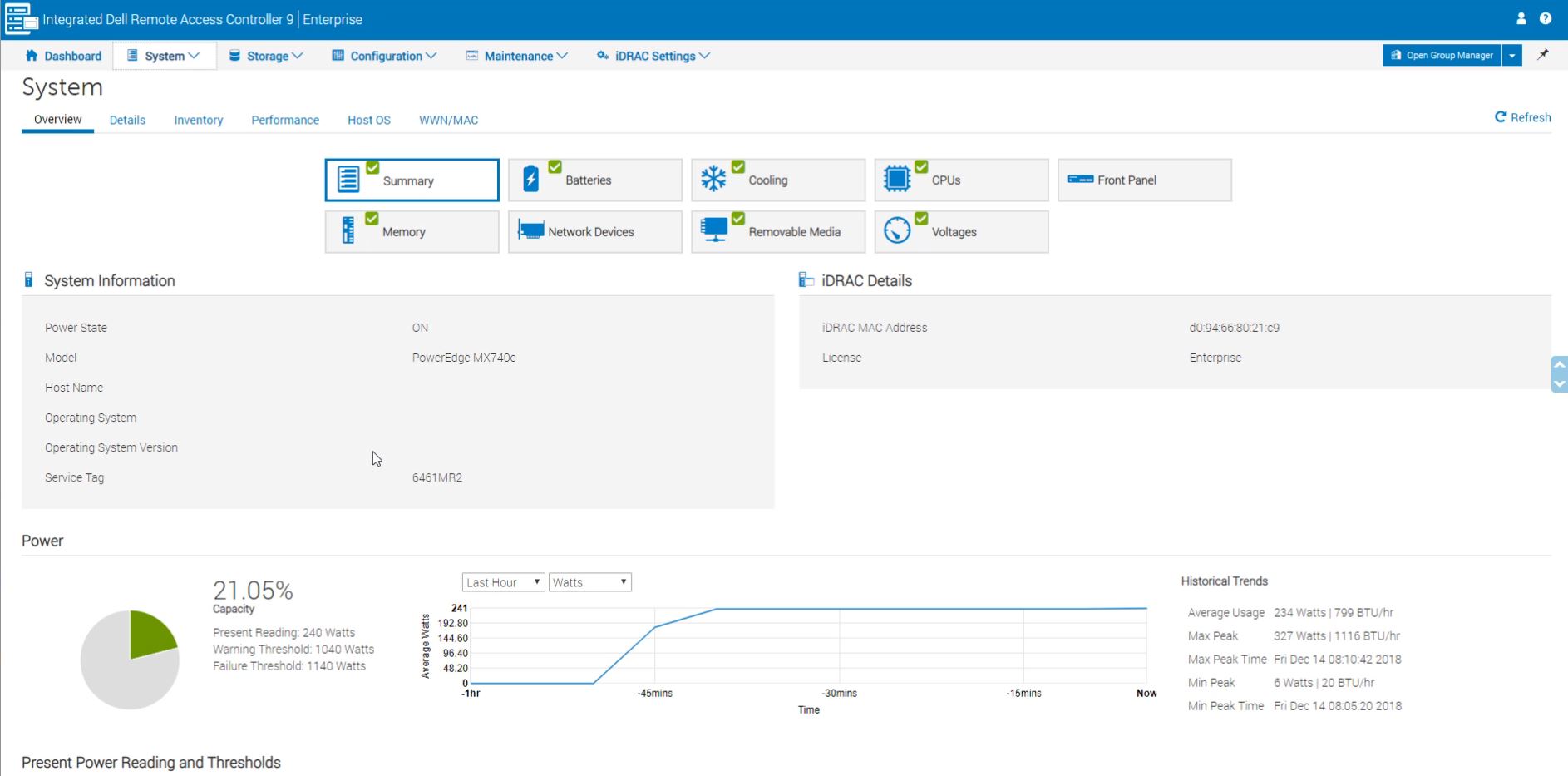

There is a link to the management IP of the node, and one can click that link and go to a familiar Dell EMC iDRAC 9 management interface. Here one can do things like change BIOS settings, update the firmware, or do just about anything else that one can do using iDRAC 9 on a standard PowerEdge.

For a PowerEdge administrator, this will be extremely familiar, as you can see in our Dell EMC PowerEdge R7415 review.

Unlike a standard PowerEdge server, the PowerEdge MX also has a fabric component which we are going to look at next.

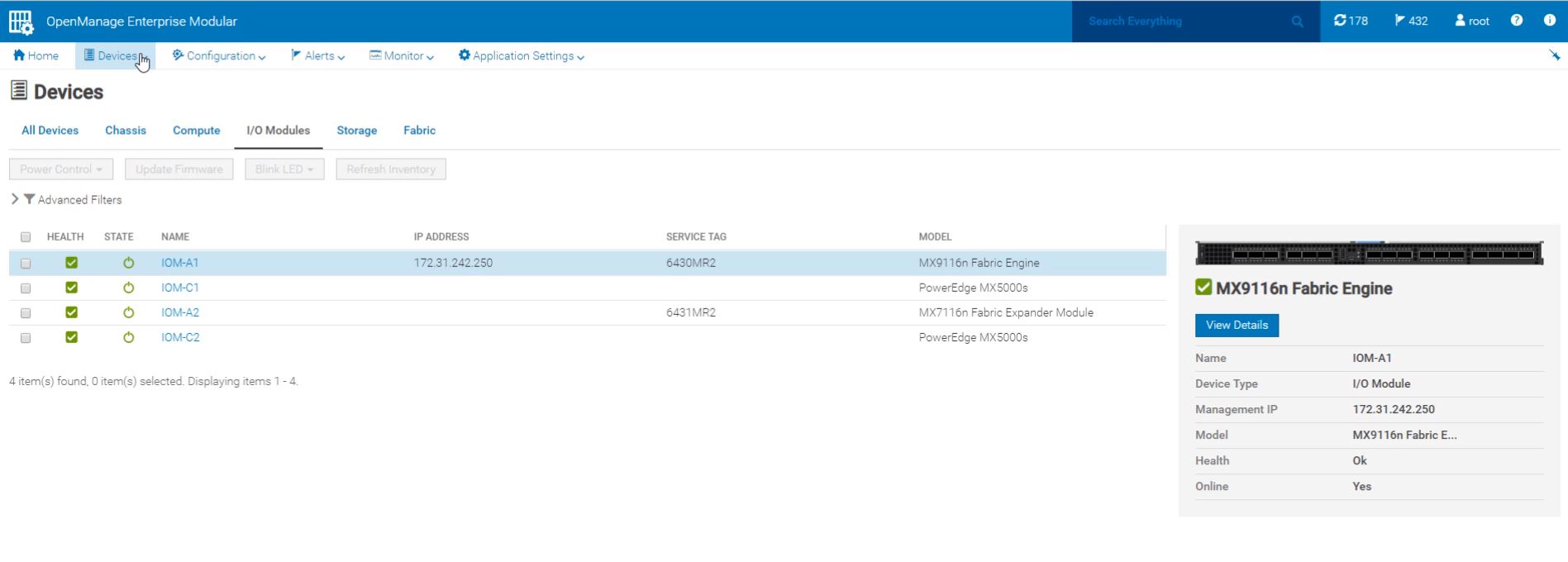

Dell EMC PowerEdge MX Fabric Management Example

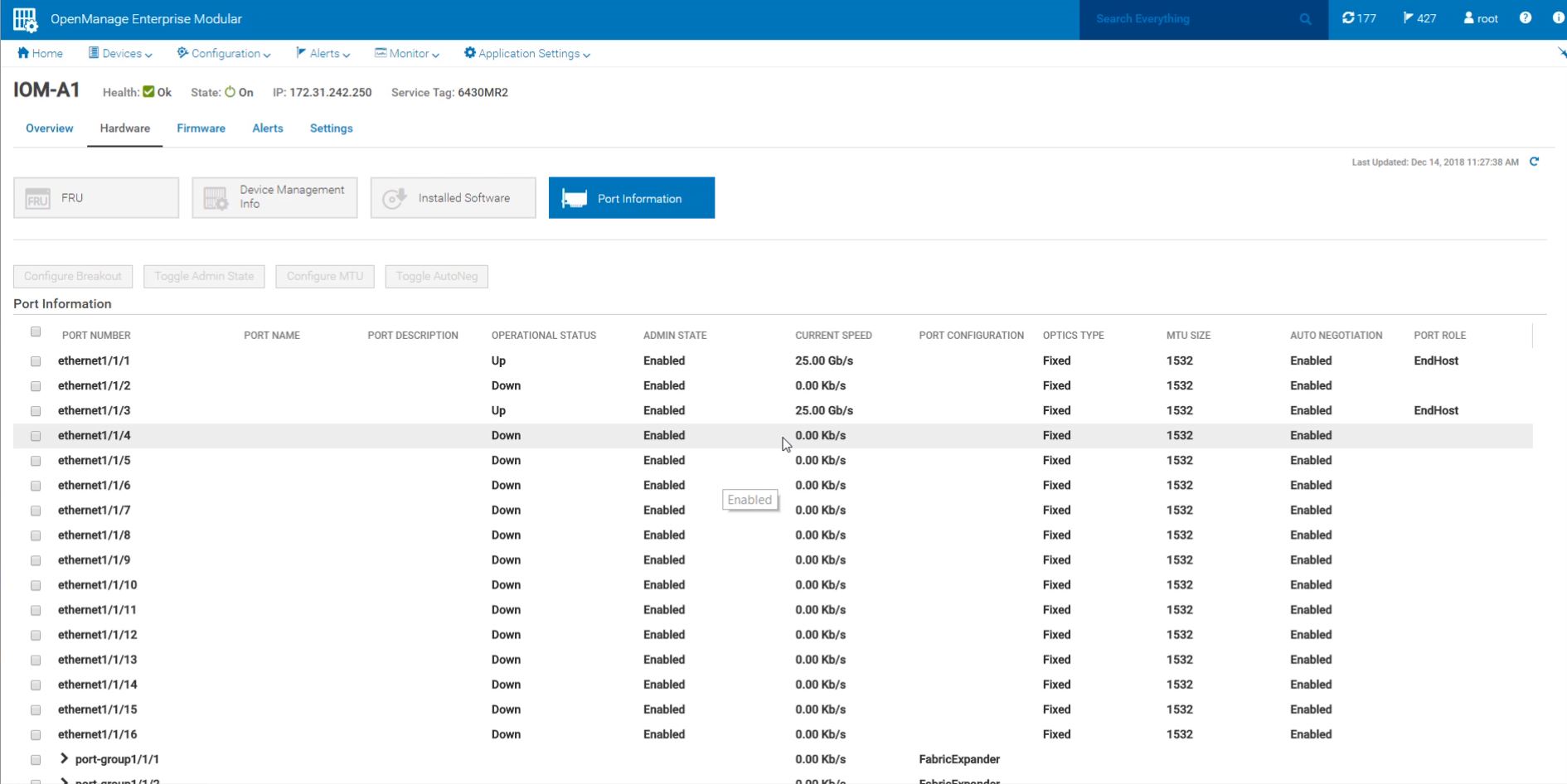

Fabric is a major component of the Dell EMC PowerEdge MX solution. In its launch form, this fabric is focused on Ethernet. As such, we can see the 25GbE network switches and fabric extender I/O modules directly from the chassis management page.

There are a lot of basic networking features that are available to administrators. These are similar to the company’s standard networking offerings, except they have a slick integrated Web GUI.

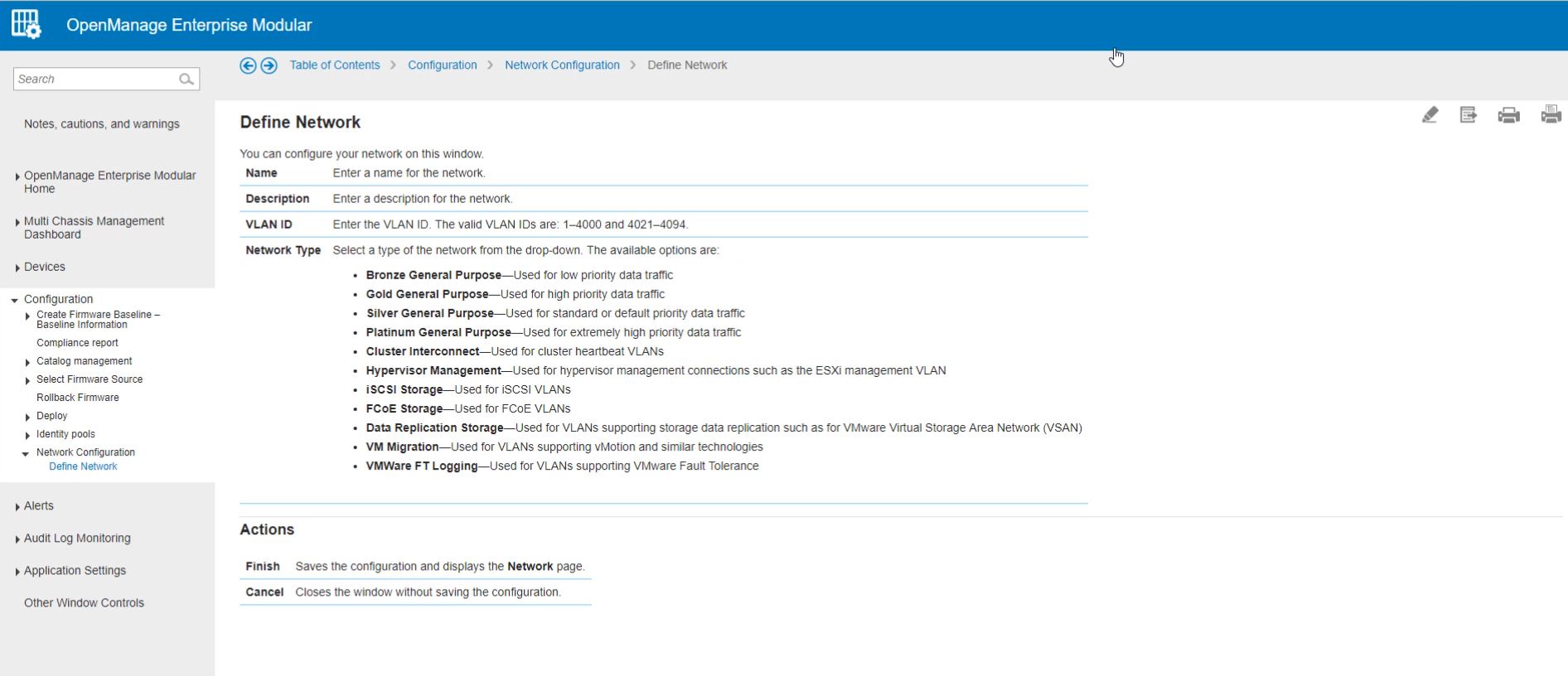

There are a few other features that are really nice in the PowerEdge MX. The chassis has the ability to define networks and VLANs across blades and chassis. At scale, this becomes important if you have Blade B06 in Chassis C01, perform an upgrade on B06, and then plug it into Chassis C02. Having a flexible network management system is important to scale the PowerEdge MX architecture. OpenManage Enterprise Modular has a number of wizards to help with that process.
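The blade move described above can be sketched as a small data model in which network definitions belong to the blade rather than to a chassis slot. This is only an illustration of the idea, not how OpenManage Enterprise Modular stores its configuration. The `Network`, `Blade`, and `Chassis` classes, the service tag, and the VLAN IDs are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Network:
    name: str
    vlan_id: int
    network_type: str  # free-form label in this sketch


@dataclass
class Blade:
    service_tag: str  # placeholder value, not a real tag
    networks: set = field(default_factory=set)


@dataclass
class Chassis:
    name: str
    slots: dict = field(default_factory=dict)  # slot number -> Blade

    def insert(self, slot, blade):
        if slot in self.slots:
            raise ValueError(f"{self.name} slot {slot} is already occupied")
        self.slots[slot] = blade

    def remove(self, slot):
        return self.slots.pop(slot)

    def vlans_for_slot(self, slot):
        return sorted(n.vlan_id for n in self.slots[slot].networks)


c01, c02 = Chassis("C01"), Chassis("C02")
b06 = Blade("TAG0001", {Network("prod", 100, "general"),
                        Network("storage", 200, "storage")})

c01.insert(6, b06)
moved = c01.remove(6)  # pull the blade for its upgrade
c02.insert(6, moved)   # plug it into the second chassis
print(c02.vlans_for_slot(6))
```

Because the networks are defined once and travel with the blade in this model, nothing has to be re-entered by hand after the move.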

Here we were defining a network and wanted to know what the “Network Type” meant. Dell EMC has in-context help that defines just about everything. If you get stuck, there is no need to look things up in PDF manuals. Instead, documentation is in the tool itself. This is a feature that Dell EMC has and some of the white box blade vendors completely lack. It is much easier to use in-context help than to search PDF manuals spanning hundreds or thousands of pages.

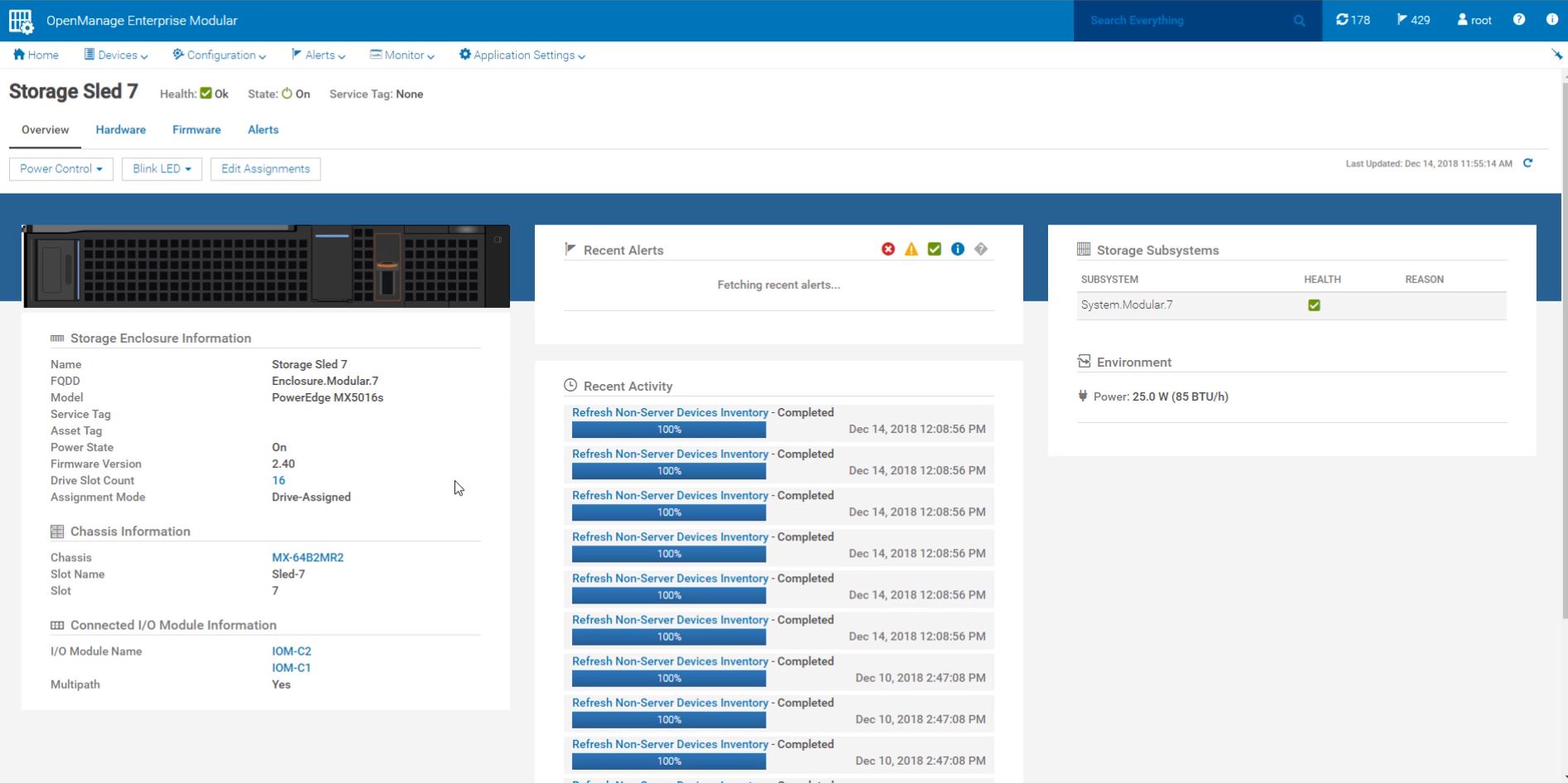

Dell EMC PowerEdge MX Storage Management Example

The storage side follows a similar pattern. One can see diagnostic information on the storage components from the drive sleds to the SAS I/O fabric modules to the individual drives. One can also manage firmware and the Dell EMC solution will upgrade in a non-disruptive manner.

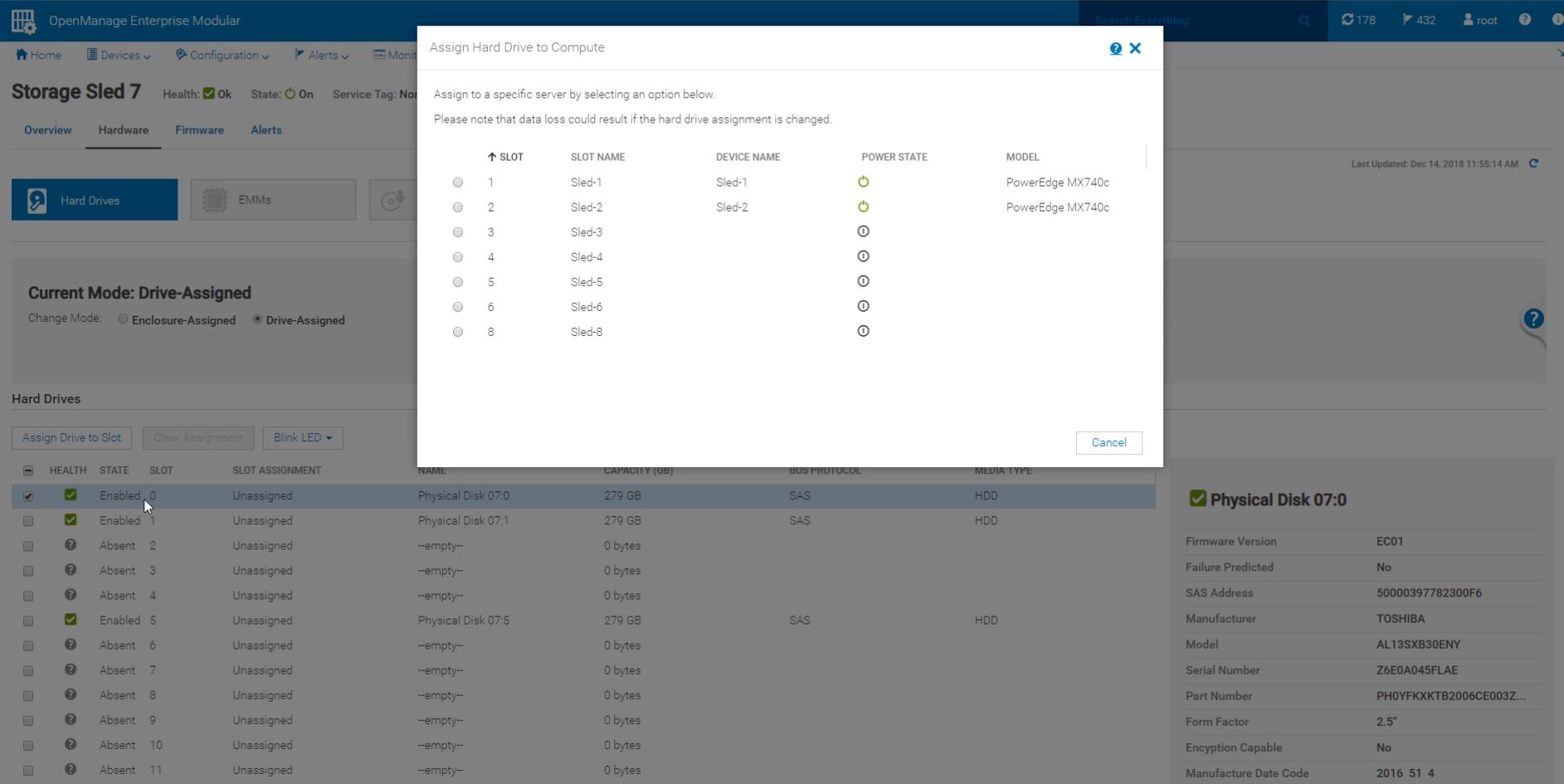

Like the networking fabric side, Dell EMC has a number of storage-related features at the chassis level to make administrators’ jobs easier. An example is the ability to assign drives to blades directly from the web GUI (or the underlying API).
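As a rough mental model of drive-to-blade assignment, the sketch below tracks which blade slot owns each drive bay. It does not call the real OpenManage Enterprise Modular API. The `DriveAssignments` class is hypothetical, and the rule that a bay belongs to at most one blade at a time is an assumption made for this example.

```python
class DriveAssignments:
    """Tracks which blade slot owns each drive bay in a storage sled."""

    def __init__(self, drive_bays):
        # None means the bay is not assigned to any blade.
        self._owner = {bay: None for bay in drive_bays}

    def assign(self, bay, blade_slot):
        if bay not in self._owner:
            raise KeyError(f"unknown drive bay {bay}")
        current = self._owner[bay]
        if current is not None and current != blade_slot:
            # Assumed rule: release a bay before giving it to another blade.
            raise ValueError(f"bay {bay} is already assigned to slot {current}")
        self._owner[bay] = blade_slot

    def unassign(self, bay):
        self._owner[bay] = None

    def drives_for(self, blade_slot):
        return sorted(b for b, owner in self._owner.items() if owner == blade_slot)


sled = DriveAssignments(range(16))  # bay count chosen for illustration
for bay in (0, 1, 2, 3):
    sled.assign(bay, blade_slot=1)
sled.assign(4, blade_slot=2)
print(sled.drives_for(1))
```

Rejecting a conflicting assignment instead of silently moving the drive keeps a typo in a script from pulling storage out from under a running blade.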

Overall, the Dell EMC PowerEdge MX software platform is very impressive. The product just launched, but the software side is several levels of maturity higher than a white box vendor’s management software that has been in the market for years. That is an impressive feat.

Next, we are going to look at the Dell EMC PowerEdge MX hardware, starting with the compute blades.

Ya’ll are on some next level reviews. I was expecting a paragraph or two and instead got 7 pages and a zillion pictures — ok I didn’t count.

I think Dell needs to release more modules. AMD EPYC support? I’m sure they can. Are they going to have Cascade Lake-AP? I’m sure Ice Lake right?

“we now call the PowerEdge MX the “WowerEdge.”

Please don’t.

Can you guys do a piece on Gen-Z? I’d like to know more about that.

It’s funny. I’d seen the PowerEdge MX design, but I hadn’t seen how it works. The connector system has another profound impact you’re overlooking. There’s nothing stopping Dell from introducing a new edge connector in that footprint that can carry more data. It can also design motherboards with high-density x16 connectors and build-in a PCIe 4.0 fabric next year.

Really thorough review @STH

I’d like to see a roadmap of at least 2019 fabric options. Infiniband? They’ll need that for parity with HPE. It’s early in the cycle and I’d want to see this review in a year.

Of course STH finds fabric modules that aren’t on the product page… I thought ya’ll were crazy, but then you have a picture of them. Only here

Give me EPYC blades, Dell!

Fabric B cannot be FC, it has to be fabric C, A and B are networking (FCOE) only.

“Each fabric can be different, for example, one can have fabric A be 25GbE while fabric B is fibre channel. Since the fabric cards are dual port, each fabric can have two I/O modules for redundancy (four in total.)”

what a GREAT REVIEW as usual patrick. Ofcourse, ONLY someone who has seen/used the cool HW that STH/pk over the years would give this system a 9.6! (and not a 10!) ha. Still my favorite STH review of all time is the dell 740xd.

btw, i think you may be surprised how many views/likes you would get on that raw 37min screen capture you made, posted to your sth youtube channel.

I know i for one would watch all 37min of it! Its a screen capture/video that many of us only dream of seeing/working on. Thanks again pk, 1st class as always.

you didnt mention that this design does not provide nearly the density of the m1000e. going from 16 blades in 10 ru to 8 blades in 7ru… to get 16 blades I would now be using 14 RU. Not to mention that 40GB links have been basically standard on the m1000e for what 8 years? and this is 25 as the default? Come ON!

Hey Salty you misunderstood that. The external uplinks have 4x25Gb / 100Gb at least for each connector. Fabric links have even 200Gb for each connector (most of them are these) on the switches. ATM this is the fastest shit out there.

How are the drives “hot-swappable” if the storage sleds have to be pulled out? Am I missing something?

Higher-end gear has internal cable management that allows one to pull out sleds and still maintain drive connectivity. On compute nodes, there are also traditional front hot-swap bays.

400Gbps/ 25Gbps = 8 is a mistake. When NIC with dual ports are used, only one uplink from MX7116n fabric extender is connected 8x25G = 200G. The second 2x100G is used only for Quad port NIC.

Good review, Patrick! I was just wondering if that Fabric C, the SAS sled that you have on the test unit, can be used to connect external devices such as a JBOD via SAS cables? It’s not clear on the datasheets and other information provided by Dell if that’s possible, and the Dell reps don’t seem to be very knowledgeable on this either. Thanks!

I don’t have more money. Can I use 1 switch MX508n and 1 switch MXG610s?