Today, we get to show the first hands-on with the 4th Generation Intel Xeon Scalable, codenamed “Sapphire Rapids.” We are not going to get to show you everything. Intel has specifically only allowed us to show some of the acceleration performance of the new chips. Since it is going to be a few months until these officially launch, we have some significant guardrails on what we can publish. Still, at some point, we wanted to show off these new capabilities, so we took the opportunity.

The reason for this exercise is simple: Intel knows that it is not going to have the highest core counts in the next generation of servers. Still, only a portion of customers buys the highest core count machines. As such, Intel is trying to show that in the core count segments it will compete in, it can significantly increase the performance per core over using other chips in the market. Indeed, you will see that many of the results that we are showing align with what you have seen on STH previously. We have looked at previous-generation accelerators, like Intel QAT. Before we get into the numbers, this is also the first opportunity we have to show the chips and a live system, so we figured why not do that first.

Hands-on with Intel Sapphire Rapids Xeon The System

When we say “hands-on” what we can show you generally is the system that we are using. We can tell you these are 60-core Sapphire Rapids chips, but we cannot tell you the frequency. Part of that is that these are not retail chips, so the specs for the model may change.

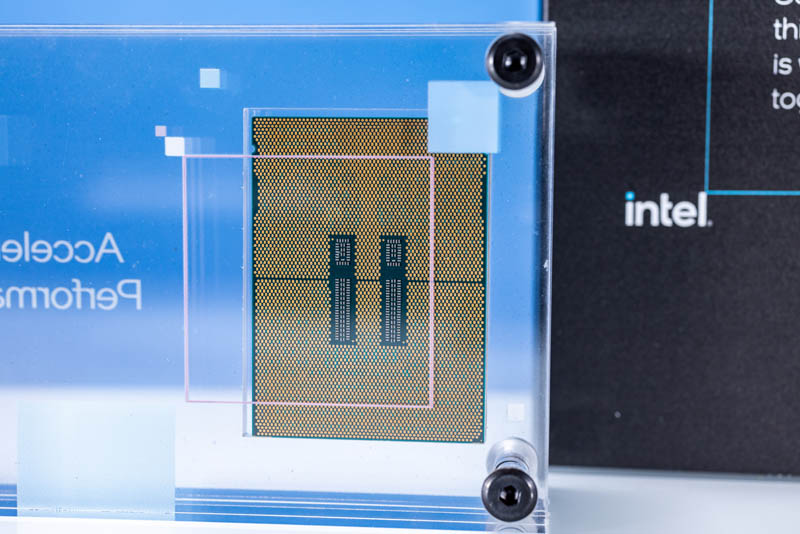

We are allowed to take one heatsink off in the system, but since we already have chips in the display case, here are both sides of a Sapphire Rapids chip, although we expect that the top markings will be more traditional.

In terms of what we can tell you about the server we are using, this is an Intel Software Development Platform (SDP) that is manufactured by QCT. When we can show you the AMD EPYC Genoa development platform, our sense is many readers will come back to this piece for a second look.

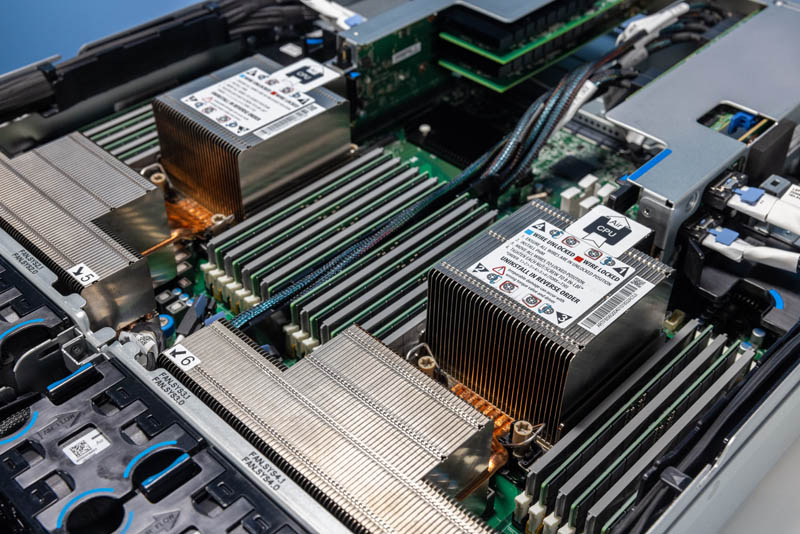

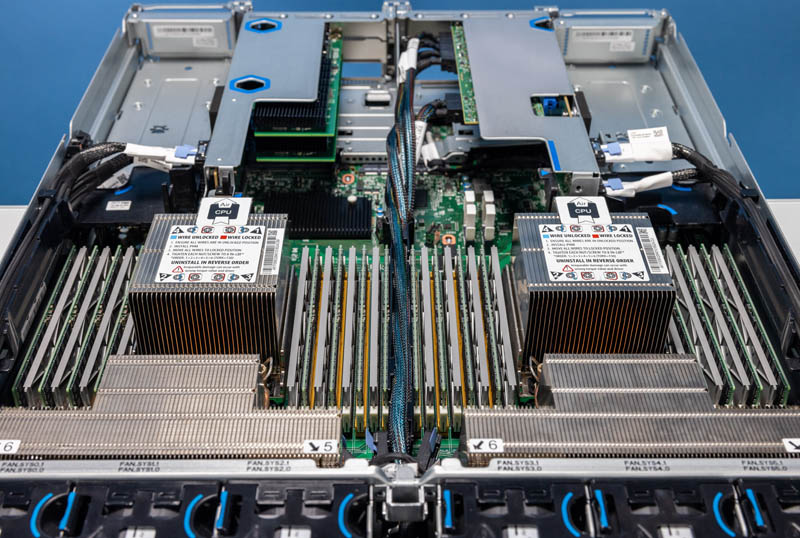

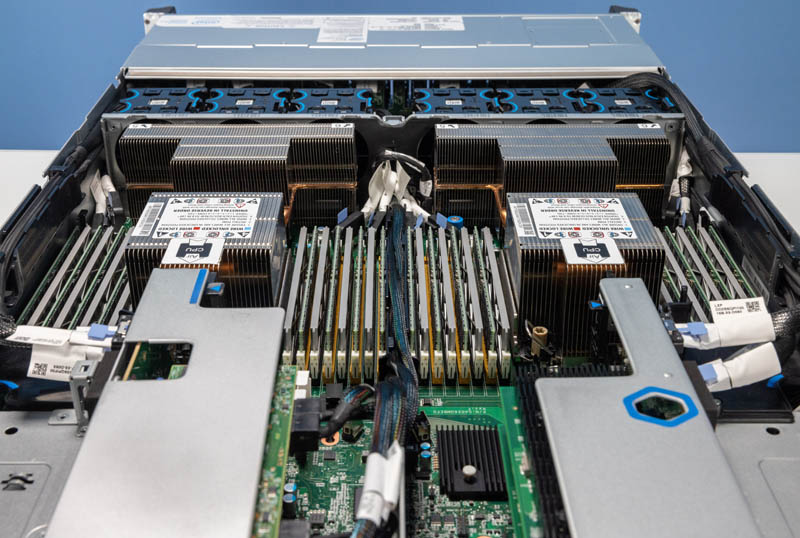

Here is the test setup. As you can see, this is a fairly standard-looking 2U QCT machine. In the front, we have 24x 2.5″ bays. In the middle, we have a fan partition. Perhaps the big one here, though, is really the CPUs.

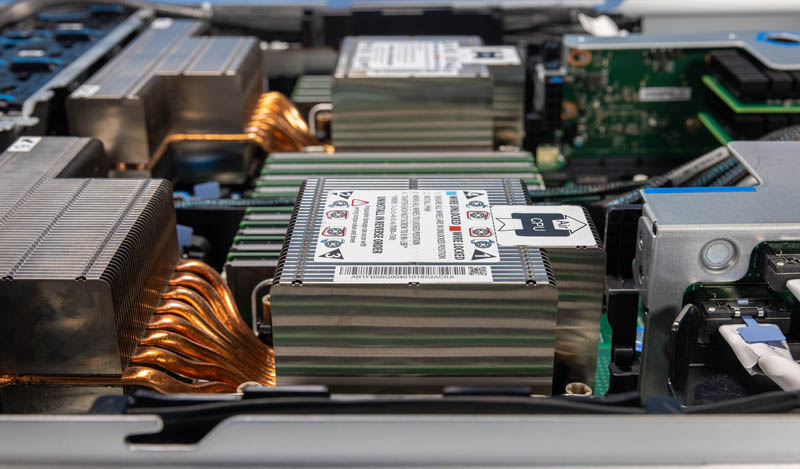

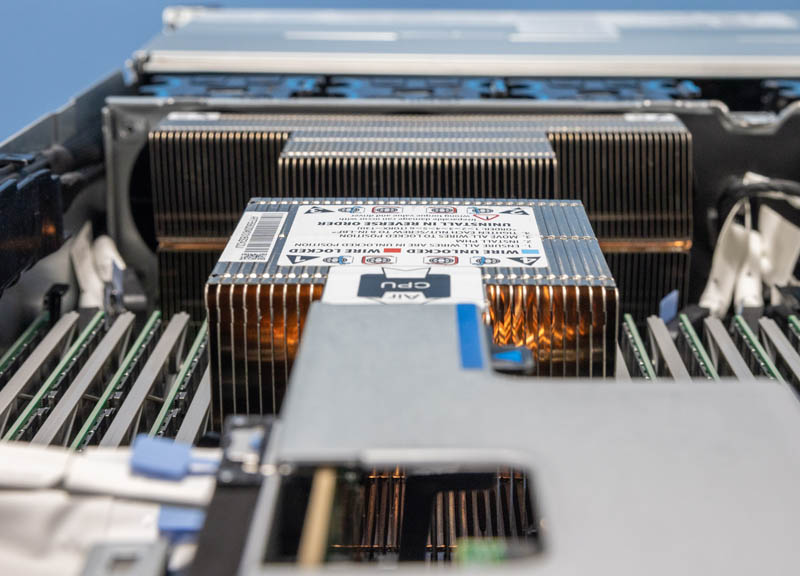

The reason this is particularly interesting is because the CPUs have giant heatsinks on them. There are four captive nuts around the heatsink, much as we saw in the Cooper Lake and Ice Lake Installing a 3rd Generation Intel Xeon Scalable LGA4189 CPU and Cooler. The process of installing chips in the new platform is very similar. The biggest difference is that the CPUs have heat pipes with extra heatsink area attached that have extra screws.

This is a design we are going to see both for Intel Xeon “Sapphire Rapids” as well as Genoa-based platforms. Even the Microsoft Genoa platform we saw recently has a similar setup, albeit in a 1U form factor. The trend is clear, for top-bin parts, the area above heatsinks is often not going to be sufficient for passive heatsink cooling in 2U. We do know one top 3 vendor that has designs that do not use these large heat pipe setups at present for next-gen 2U servers, but they may change that design as well.

Here is a look at the right CPU from the rear.

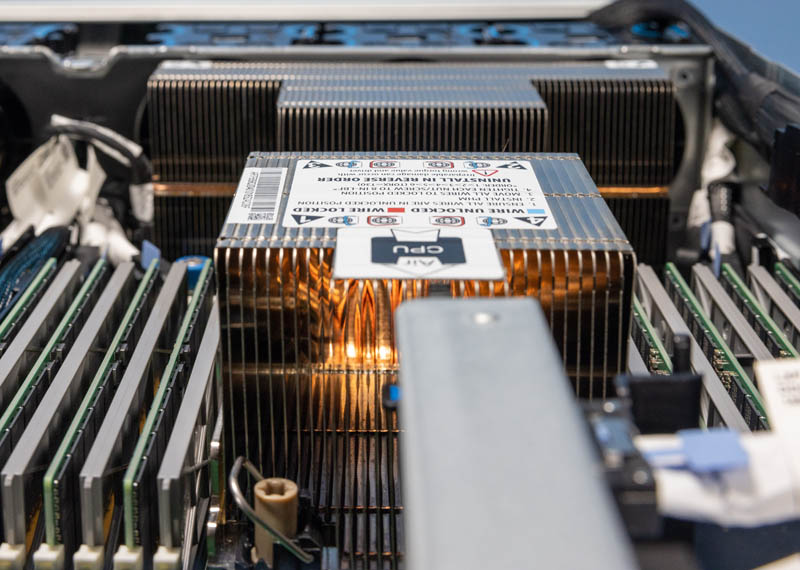

Here is the left CPU. The next generation of processors is going to be using so much power that this heatsink design will be a major change.

Here is another look, including the risers:

Key to our testing is actually this view. The only active riser slots are in the center of the chassis. We needed two Intel E810-C NICs because we are going to be pushing data over the network.

A fun aside here, during testing, we found that we were using an inexpensive 100GbE switch that will be reviewed soon, and we saw significantly lower performance. The key here is really that we are focusing on acceleration. That acceleration is to drive high-speed I/O in many cases. As such, it takes a lot going right to be able to do this kind of testing.

Next, let us get to some accelerators and their performance.

Genoa is gonna rock Intel. Thanks for not pretending like they’re not getting AVX512 and AVXVNNI.

Incredibly insightful article STH.

Read Hot Hardware’s version: “We don’t know what any of this means really, but here’s the scripts Intel gave us, and here’s what Intel told us to run. We also don’t have AMD EPYC so who cares about competition.”

Read STH: “Here’s an in-depth look at a few storylines we have been showing you since last year, and here’s what and why you can expect the market to change.”

It’s a world of difference out there.

One thing left unmentioned is the physical impacts of acceleration. For instance, what is the die space budget for something like QAT and AVX? How are thermals impacted running QAT or (especially AVX) in mixed or mostly-accelerator workloads? And on the software side, (which was briefly touched on in the earlier QAT piece), what software enablement/dev work is needed to get these accelerators to work?

In a future piece with production silicon I’d be eager to get some thoughts on the above.

I don’t think QAT uses AVX. It’s like its own accelerator. Can you show the PCIE or other connection to the QAT accelerator?

Thanks for the balanced view. It’s good you talked AMD and history.

Now can you do that MikroTik switch review you mentioned in this article???

Woot! Woot! Awesome article Patrick/STH!

Why acceleration? It seems like Intel is either not able to or do not want to take the direction on multiple chips on package direction. That leads to packaging specialized chips (accelerators) into the same package with the CPU.

Intel was the company that brought the generic purpose CPU that can be tuned for multiple usages. It seems like AMD is heavily betting on that while Intel is taking the sideway with custom chips for individual workloads, like mainframes did.

It almost seems like Intel is playing into his strength of being able to deliver custom chips leveraging its army of engineers. Would this work? Really hard to say.

Server workloads are getting pushed into more and more to Cloud. So hyperscalers will make the decision but AMD’s strategy sounds better to me. Software is always more malleable over hardware and making the cores/cpus cheaper and abundant was the winning strategy of Intel. I expect it would work again for AMD.

What would be the picture with a QAT card + AMD processor?

There are enough PCIe lines for that.

Looks like it would be the best of both worlds: highest general purpose compute, QAT accelerator if useful.

I remember reading AMX is even worse regarding CPU clock down than (early) AVX512, when they added it to Linux they made it very difficult for workloads to run with AMX (the admin has to explicitly allow it for an application).

All of this needs software support, which only seems to be widely available for QAT. A repo on github probably isn’t enough for most people who don’t want to spend their operations budget on recompiling large parts of their software stack. The only way this accelerator strategy is going to work is if you can replace an AMD machine with an Intel machine, install a few packages through your distribution and it magically runs a lot faster/more efficient.

Also the big question what part of this is available in a virtualized environment. If AMX slows down adjacent workloads that might be cause enough to disable it for VMs in shared environments. I don’t know if you can pass down QAT to a VM.

> I don’t know if you can pass down QAT to a VM.

THIS is really the point in todays cloud world.

Can QAT and other accelerating technologies be easily used in VMs and in Containers (kubernetes/docker).

If they can be used:

– what do one have to do to make it work (effort)?

– whats the loss of efficiency, and with it

– how does a bare metal deployment compare to a deployment in Kubernetes/docker e.g. on AWS EC2 ?

I can’t say I’m on board with the STH opinion that these accelerators are a have changer in there market. From the trend I see is that every buyer but especially hyperscalers don’t want these vendor specific accelerators but they want general purpose accelerators.

Even Intel QAT support is pretty scarce and harder than needed to use and for network functions seemingly overtaken by DPU/TPU hardware. I don’t really see a space for the other Intel specific extensions, and am not sure why STH is such a subscriber to this idea of encouraging vendor specific extensions.

David, sorry but that’s crazy. NVIDIA has a huge vendor specific accelerator market. If hyperscalers didn’t want QAT Intel wouldn’t be putting it into its chips. I don’t think any features go into chips without big customers supporting it. TPU’s are Google only. DPUs outside of hyperscale how many orgs are going to deploy them before Sapphire servers? Even if you’ve got a DPU, you then have a vendor’s accelerator on it.

How much of a die area hit does the QAT on the new Xeon take?

“Imagine there is an application where you are doing various other work but then need to do one or a handful of AI inference tasks.”

1. Servers are not Desktop PCs where you do a lil bit of this, then a lil bit of that.

2. If it’s really just a handful of tasks you can do it on CPU fast enough without VNNI/AMX

CPU extensions like VNNI and AMX have been designed many years before the CPUs came to market. Today it is clear that they are useless as they can’t compete with GPUs/real accelerators.

Both Intel and AMD are stepping away from VNNI and moving to dedicated AI accelerators on CPU, just like smartphones SOCs. These are much faster and much more efficient than these silly VNNI/AMX gimmicks:

Intel will start Meteor Lake embedding their “VPU”.

AMD will integrate their “AIE” first in their Phoenix Point APU next year. They have also shown AIE is on their Epyc roadmap. I seriously doubt that we will ever see AMX on AMD chips.

These accelerators will usually not be programmed directly.They will be called through an abstraction layer (WinML for windows), just like on smartphones.

VNNI and AMX are both basically dead.

“AMD’s strategy is to allow Intel to be the first with features like VNNI and AMX. Intel does the heavy lift on the software side, then AMD brings those features into its chips and takes advantage of the more mature software ecosystem.”

Please stop making things up here: Intel is doing stupid things like VNNI and AMD has to follow for compatibility. Next to no one is using VNNI and there is almost no software ecosystem. They only did make VNNI accessible for standard libraries like Tensorflow you are using and also to WinML.

I am really surprised that you are still pushing Intel’s narrative from few years ago, as even Intel has stepped away from VNNI/AMX and is embedding dedicated inference accelerator units (VPU) in their CPUs.

Even the Arm makers are embedding AI inference extensions in their next DC procs so I’m not sure why there’s an idea that they’re dead. FP16 matrix multiply is useful itself.

@Viktor

“FP16 matrix multiply is useful itself.”

Yes, but why do it in your CPU core with all inefficiencies that come along. Instead these small datatype matrix multiplication will be executed on dedicated units that have better power efficiency, better performance and they don’t stop your CPU from doing anything else while executing.

Effective matrix multiplication is exactly what these VPU, AIE, NPU (Quallcomm), APU (AI processing unit / Mediatek) are doing.

AMX and VNNI are zombies.

Which qat acceleration pcie card was paired with Xeon Gold 6338N? Was it 8950,8960, or 8970?