Going Faster with AMD Pensando DPUs and VMware ESXi 8

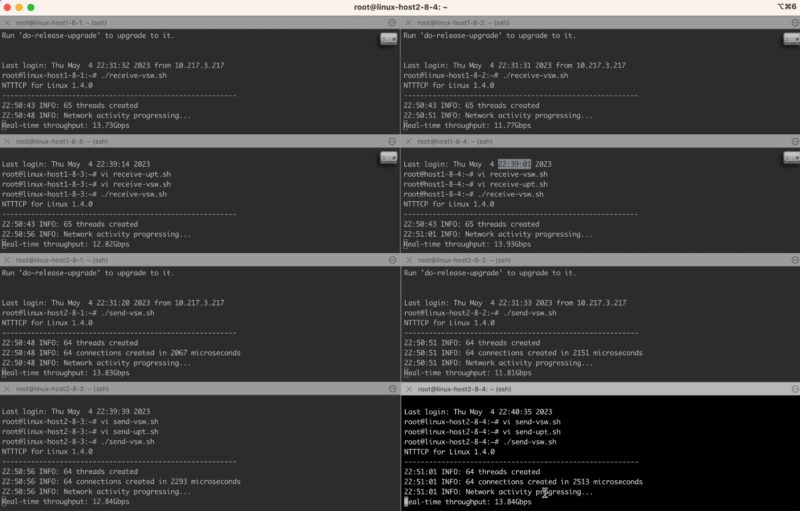

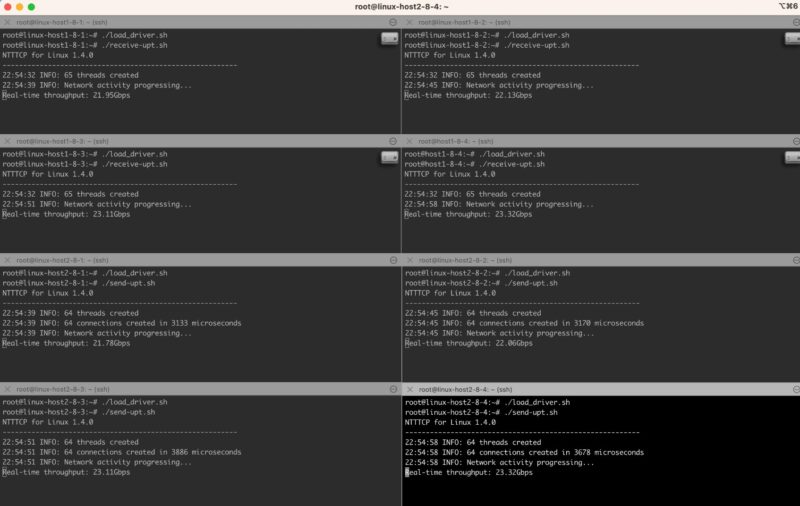

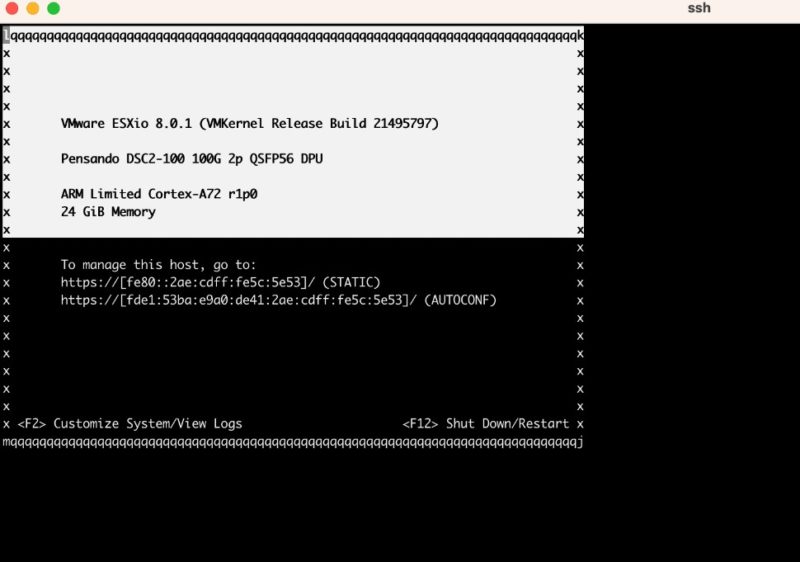

Earlier in this article, we showed how the DPUs run ESXio and work with VMware. Here is an example of using the DSC2-100G cards with standard vmxnet3 networking.

We can then turn on UPT and get roughly double the bandwidth across four VMs.

This is a big difference between this DPU and the NVIDIA BlueField-2 DPU and VMware vSphere lab we showed before. We expect NVIDIA BlueField-2 DPUs will have a certified 100GbE model by the end of the summer of 2023, but for now, if you want 100GbE, then you need AMD Pensando DPUs.

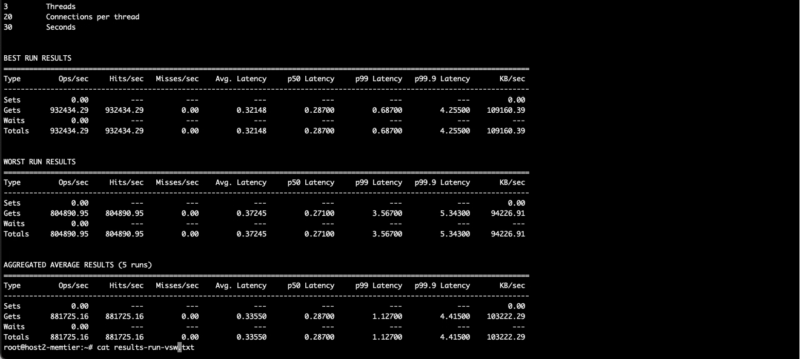

As for latency, the impact of UPT is easy to see. In non-UPT mode, the average 99.9th percentile latency jumps to 4.415, and our throughput is also not as good.

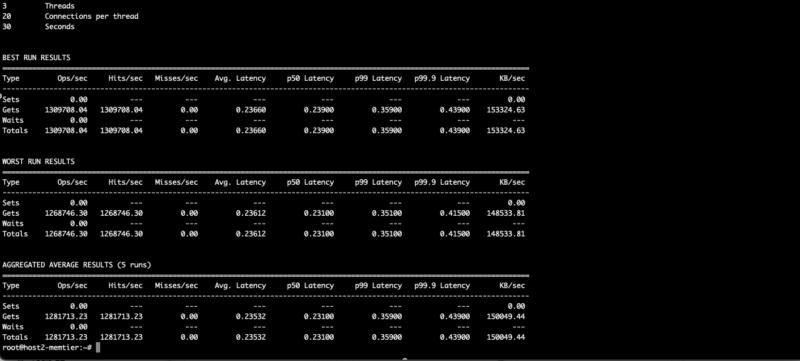

For example, see the 99.9th percentile latency, averaged over five Redis runs, of 0.439.
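To make the comparison concrete, here is a minimal sketch of how a tail-latency figure like this can be averaged across runs and how the two reported numbers compare. The article does not say how the benchmark tool computed its percentile, so the nearest-rank method below is an assumption, and the function names `percentile` and `average_tail_latency` are hypothetical. Only the two averages (0.439 and 4.415) come from the results above; the text does not state their units, so they are left unitless here.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile (assumed method, not confirmed by the article).

    Returns the smallest sample with at least pct percent of samples at or below it.
    """
    ordered = sorted(samples)
    # The small epsilon guards against floating-point error pushing the rank up by one.
    rank = max(1, math.ceil(pct * len(ordered) / 100 - 1e-9))
    return ordered[rank - 1]


def average_tail_latency(runs, pct=99.9):
    """Average the per-run percentile across several benchmark runs."""
    return mean(percentile(run, pct) for run in runs)


# Averages reported in the article (units not stated in the text).
upt_p999 = 0.439
non_upt_p999 = 4.415

ratio = non_upt_p999 / upt_p999
print(f"Non-UPT 99.9th percentile latency is about {ratio:.1f}x the UPT figure")
```

With the reported averages, the non-UPT tail latency works out to roughly ten times the UPT figure.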

This performance feels like “of course, it is better” at this point. On the other hand, we have now used both BlueField-2 and AMD Pensando DSC2 DPUs with VMware so we have seen the power of VMware NSX and UPT using DPUs. The best part, of course, is that we get performance more similar to using SR-IOV but with the manageability of being able to live-migrate VMs since we are using UPT.

Final Words

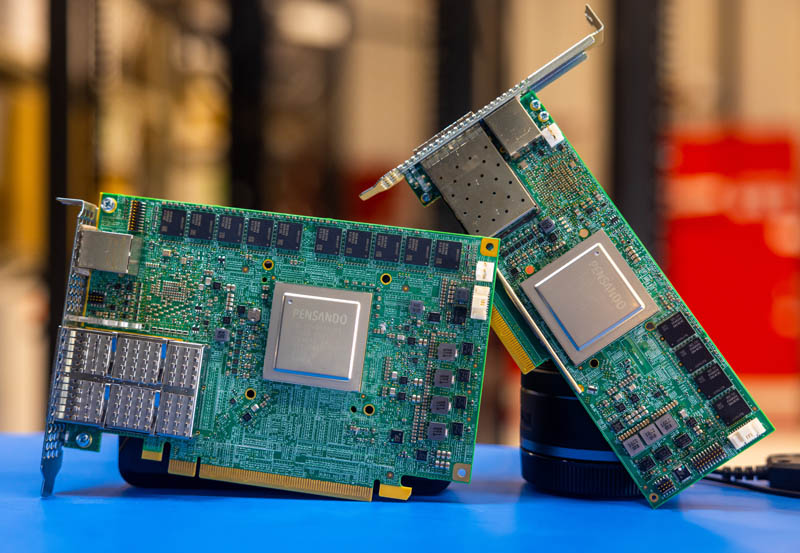

Taking a step back, requiring a P4 programmable packet processing engine, a complex of Arm cores, onboard memory, NAND, and VMware ESXio running on the NICs is a lot just to enable UPT performance. Looking at the cards, one can see how much is needed to accomplish that task. On the other hand, this is really the start.

Allegedly this shot with the internal management interface cable to the Dell iDRAC BMC is controversial. This is new technology and so there is a cable to provide management capabilities via the server. Hopefully, future generations can get rid of this cable but this is really minor compared to the act of putting a dual 100GbE DPU into a server that is running its own OS with its own Arm processors, memory, and storage.

After using both BlueField-2 and Pensando in VMware, and also seeing how one logs into the cards and what can be done on there, I have a fairly strong opinion about both. If you just want something that is easy to log into and play around with, NVIDIA BlueField-2 is the hands-down winner. We have shown this many times, but the experience is like logging into a fancy Raspberry Pi with a 100GbE NIC attached over a PCIe switch. AMD Pensando, on the other hand, is the DPU that has roughly 4x the performance as we were writing this piece since it has 100GbE networking for VMware versus 25GbE for BlueField-2. NVIDIA has BlueField-3 now, although it is not yet supported for VMware, so this will change over time. Perhaps the most intriguing part is that while there is a huge gap between a non-P4 developer’s experience logging into the cards and running things, VMware hides all of that. VMware is doing all of the low-level programming to the point where vastly different architectures can be used with the only difference being selecting one drop-down menu item for either solution.

The vision of adding DPUs to VMware is simple. Order servers with DPUs today, and you can use them as standard NICs. As you are ready, you can bring a cluster’s capabilities online with NSX and UPT to increase the manageability and performance of your VM networking. VMware is going to keep building functionality in the future and has talked about things like vSAN/storage, bare metal management, and other capabilities coming to DPUs. VMware is building those capabilities so you do not need to have DPU or P4 programming experts on staff. If you are a hyperscaler, that is not an issue, but for most enterprises, it is easier to wait for VMware to deliver these capabilities.

The value proposition on this one is simple. In 3-5 years, you are going to want DPUs in your environment as VMware adds each incremental feature set. If you are installing servers without supported DPUs today, then your cluster will not get those same features.

Hopefully, you enjoyed this look at the AMD Pensando DSC2. We will have more DPU coverage on STH in the near future.

STH is the SpaceX of DPU coverage. Everyone else is Boeing on their best day.

Killer hands on! We’ll look at those for sure.

This is unparalleled work in the DC industry. We’re so lucky STH is doing DPUs otherwise nobody would know what they are. Vendor marketing says they’re pixie dust. Talking heads online clearly have never used them and are imbued with the marketing pixie dust for a few paragraphs. Then there’s this style of taking the solution apart and probing.

It’ll be interesting to see if AMD indeed also ships more Xilinx FPGA smartnic or no?

They released their Nanotubes ebpf->fpga compiler pretty recently to help exactly this. And with fpga being embedded in more chips, it’d be an obvious path forward. Somewhat expecting to see it make an appearance in tomorrow’s MI300 release.

You had me at they’ve got 100Gb and nVidia’s at 25Gb

Does this work with Open Source virtualization as well?

Why does it say QSFP56? That’d be a 200G card, or 100G on only 2 lanes.

@Nils there’s no cards out in the wild and no open source virtualization project that currently has support built in for P4 or similar. It’s a matter of time until either or both become available but until then it’s all proprietary.

I also don’t expect hyperscalers to open up their in-house stuff like AWS’ Nitro or Google’s FPGA accelerated KVM