The Gigabyte R183-Z95 is a really cool 1U server. Inside there are two AMD EPYC 9004 series processors, but that is only the beginning. There is room for four different types of storage to be plugged directly into the system without using any HBAs or RAID controllers. In this review, we are going to see what is different, especially with the E1.S EDSFF SSD bays. Let us get to it!

Video Version

After publishing this article, we got a few requests to see more angles. We put it in a video:

As always, we suggest watching in its own window, tab, or app for the best viewing experience.

Gigabyte R183-Z95 External Overview

The system itself is a 1U server that is 815mm or just over 32 inches deep. From the front of the server, you can see there is something different about this one.

The left side, but not the left rack ear, has the USB 3 Type-A port as well as the status LEDs and power buttons. We do not see this often these days because front panel space is usually at a premium.

Next to that is an array of eight 2.5″ drive bays. Usually, the peak density is twelve 2.5″ front drive bays. The downside to that configuration is that cooling becomes a challenge for the entire system and the drive trays are usually not as robust. Here, Gigabyte is giving us a lot of storage, while using larger trays and more space for better airflow.

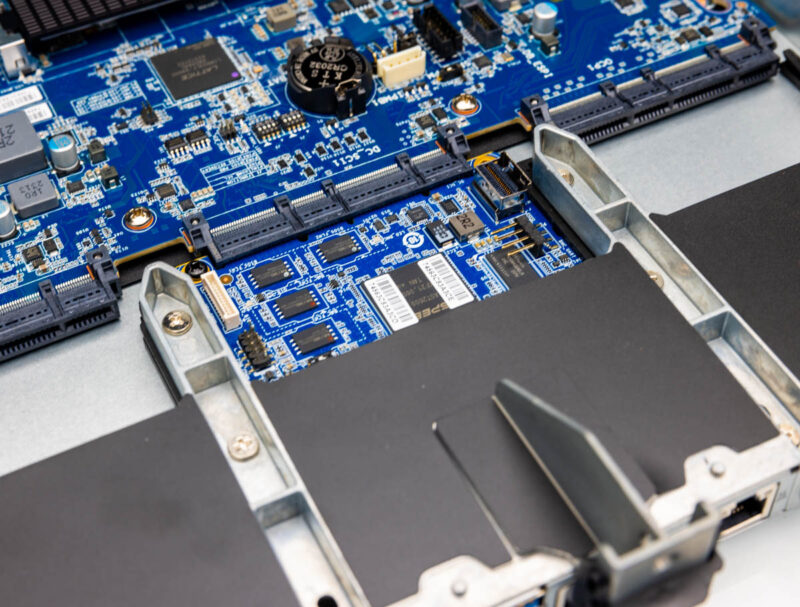

The trick to getting 14x front panel drives is using EDSFF, or more specifically six E1.S drive bays.

You may have seen our EDSFF overview.

That was done in the early days of EDSFF. Now, many drive manufacturers and systems support the various form factors. We had two systems that needed the drives, so when I was in California for the recent “Inside the Secret Data Center at the Heart of the San Francisco 49ers Levi's Stadium” piece, I stopped in at Kioxia and borrowed a few drives to use here.

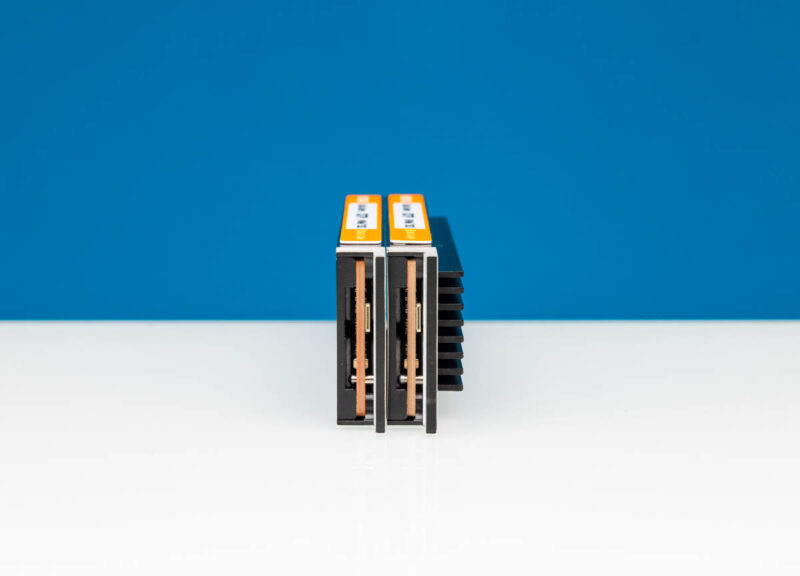

We have both Kioxia XD6 and Kioxia XD7P drives here.

Something that is fun with EDSFF is that the server makers, or allegedly some well-known OEMs, decided that instead of making the latching mechanism a part of the spec, they wanted their own drive carriers, as they have with 2.5″ drives. So each server needs to come with its own little latching carrier, and that is what we have here. It is very small and is secured by two screws. This is one of the silliest things in servers today.

Gigabyte provides blanks, so it is pretty easy to install the latches onto drives.

Something else that matters is the size. This server specifically takes E1.S drives that are 9.5mm thick or thinner. If you have a larger 15mm E1.S drive, with a heatsink for thermal management, then at minimum you will not be able to use all six drive bays here.

Still, using 9.5mm drives, we can add six E1.S drives plus the eight 2.5″ NVMe SSDs for a total of fourteen on the front of the chassis.
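To make the bay math concrete, here is a minimal Python sketch of a front-bay planning check. The bay counts and the 9.5mm limit come from the text above. The `E1sDrive` class, the `plan_front_bays` helper, and the drive labels are hypothetical names used only for illustration. The sketch also makes a simplifying assumption that a drive thicker than 9.5mm is not usable at all in this chassis, which is stricter than "not getting to use a full six drive bays."

```python
from dataclasses import dataclass

# Values taken from the review text.
E1S_BAYS = 6                 # front E1.S bays
U2_BAYS = 8                  # front 2.5" NVMe bays
MAX_E1S_THICKNESS_MM = 9.5   # thickest E1.S drive the bays accept


@dataclass(frozen=True)
class E1sDrive:
    """Hypothetical inventory record for one E1.S drive."""
    name: str
    thickness_mm: float


def fits_e1s_bay(drive, max_thickness_mm=MAX_E1S_THICKNESS_MM):
    """Return True if the drive is thin enough for one of the E1.S bays."""
    return drive.thickness_mm <= max_thickness_mm


def plan_front_bays(e1s_drives, u2_count):
    """Count how many front bays a given set of drives can populate.

    Simplifying assumption: drives that are too thick are rejected
    outright instead of modelling blocked neighbouring bays.
    """
    usable = [d for d in e1s_drives if fits_e1s_bay(d)]
    rejected = [d.name for d in e1s_drives if not fits_e1s_bay(d)]
    e1s_used = min(len(usable), E1S_BAYS)
    u2_used = min(u2_count, U2_BAYS)
    return {
        "e1s_used": e1s_used,
        "u2_used": u2_used,
        "total": e1s_used + u2_used,
        "rejected": rejected,
    }


if __name__ == "__main__":
    # Six 9.5mm drives plus eight 2.5" drives fill all fourteen front bays.
    thin = [E1sDrive(f"9.5mm drive {i}", 9.5) for i in range(6)]
    print(plan_front_bays(thin, 8))

    # A 15mm drive is flagged instead of being counted.
    mixed = thin[:5] + [E1sDrive("15mm drive", 15.0)]
    print(plan_front_bays(mixed, 8))
```

A check like this is only useful before ordering drives. Once the hardware is in hand, the carrier and the bay opening settle the question.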

Here is a quick look at the XD7P in 9.5mm and 15mm so you can tell the difference easily.

Moving to the rear of the server, we have a fairly standard layout for folks that want to run A+B sides of racks for both power and networking.

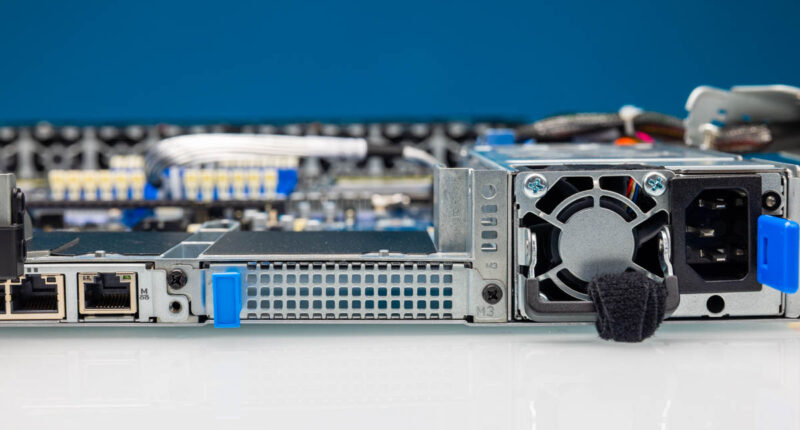

The power supplies at either side are LiteOn 1.6kW units. Having power supplies at either side allows a PDU to be connected on either side for A+B redundant power.

Next are the risers on either side of the rear. These are PCIe Gen5 x16 full-height risers and can be removed with a single thumbscrew. They are easy to work on since they are cabled.

Underneath these risers, we have OCP NIC 3.0 slots.

These are for A+B networking on each side of the chassis. Gigabyte is using the SFF with pull tab design, which is the easiest to service.

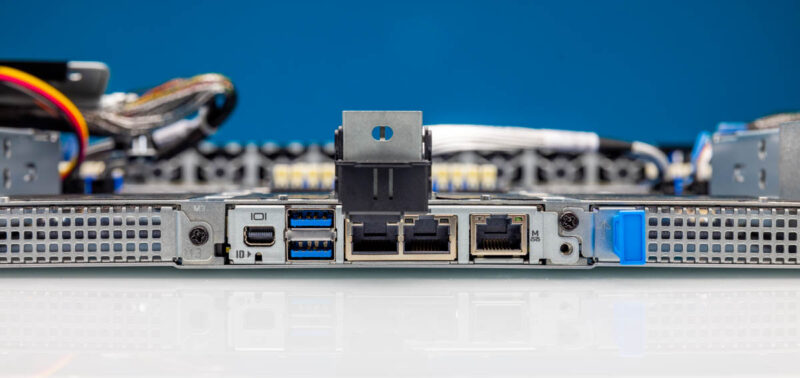

In the rear, we get something different. We get a mini DisplayPort video output instead of VGA. Then we get two standard USB 3 Type-A ports. Finally, we get three network ports, two of which are served by an Intel i350-AM2. That is a higher-quality 1GbE NIC than the i210, which is a nice touch.

We also get the out-of-band management port. The NIC and the ASPEED BMC are both on an OCP NIC 3.0 form factor card in the middle slot.

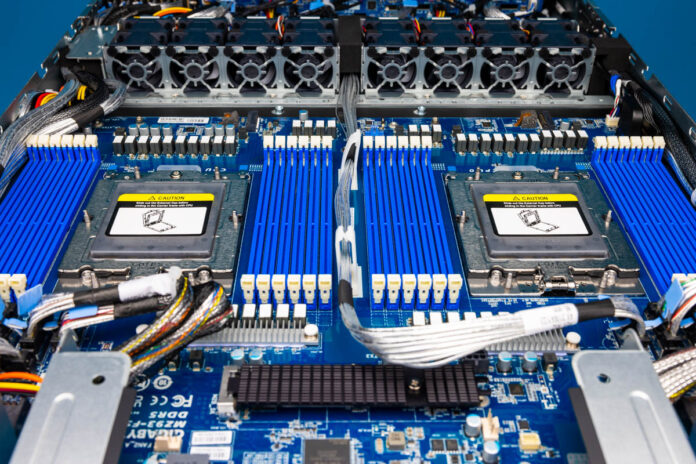

Next, let us get inside the system to see how it works.

Server power usage uptick over time: “The power supplies at either side are LiteOn 1.6kW units”… OK, some very old (circa 2015 ± 1 year) 2U/4-node dual-socket Xeon servers (16 DIMM slots, 12 total 3.5″ drive slots) that I have as cannon fodder for my home lab have two 1,620 Watt power supplies.

Not dissing this very cool new 1U beastie, and it’s a core monster compared to those old Xeons, but that’s about 2X Watts per 1U over ~8 years. So yeah, way ahead with the new beast on lower Watts-per-core, but only filling that same rack at 50% of the slots… Interesting calculus.

Yes, the most recent chips are certainly changing the density of data centers dramatically. Considering a single rack usually maxes out somewhere around 20kW, being able to hit that in just over 12U is pretty wild.
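The numbers in this exchange are easy to check. Below is a rough Python sketch of the arithmetic, using only figures quoted above: a roughly 20kW rack, a 1.6kW PSU in this 1U server, and 1,620W PSUs in the older 2U chassis. It assumes the PSUs are redundant, so a single PSU's rating is treated as the ceiling for a chassis, and that each chassis actually draws its full rating, which real systems rarely do. The function and variable names are illustrative only.

```python
import math

# Figures quoted in the article and comments.
RACK_BUDGET_W = 20_000       # "a single rack usually maxes out somewhere around 20kW"
NEW_SERVER_PSU_W = 1_600     # LiteOn 1.6kW PSU in this 1U server
OLD_CHASSIS_PSU_W = 1_620    # PSU rating of the older 2U/4-node chassis
NEW_SERVER_HEIGHT_U = 1
OLD_CHASSIS_HEIGHT_U = 2


def watts_per_u(psu_w, height_u):
    """Worst-case power per rack unit, assuming one PSU rating per chassis."""
    return psu_w / height_u


def rack_units_to_hit_budget(rack_budget_w, psu_w, height_u):
    """Rack units needed before worst-case draw reaches the rack budget."""
    return rack_budget_w / watts_per_u(psu_w, height_u)


new_w_per_u = watts_per_u(NEW_SERVER_PSU_W, NEW_SERVER_HEIGHT_U)
old_w_per_u = watts_per_u(OLD_CHASSIS_PSU_W, OLD_CHASSIS_HEIGHT_U)
density_ratio = new_w_per_u / old_w_per_u

units_needed = rack_units_to_hit_budget(
    RACK_BUDGET_W, NEW_SERVER_PSU_W, NEW_SERVER_HEIGHT_U
)
whole_servers = math.floor(units_needed)

print(f"New: {new_w_per_u:.0f} W/U, old: {old_w_per_u:.0f} W/U, "
      f"ratio: {density_ratio:.2f}x")
print(f"Rack units to reach {RACK_BUDGET_W / 1000:.0f} kW: {units_needed}")
print(f"Whole 1U servers that fit under the budget: {whole_servers}")
```

Under those assumptions, the budget is reached at 12.5U, which matches "just over 12U," and the per-U figure is a little under twice that of the older chassis.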

But we are now talking about EPYC 96-core monsters with 384MB of L3 cache, two of which are 25% faster than eight E5-2699 v4s from 2016.

I think we will see some big changes in how datacenters run things with this type of density, and I, for one, am all for it.