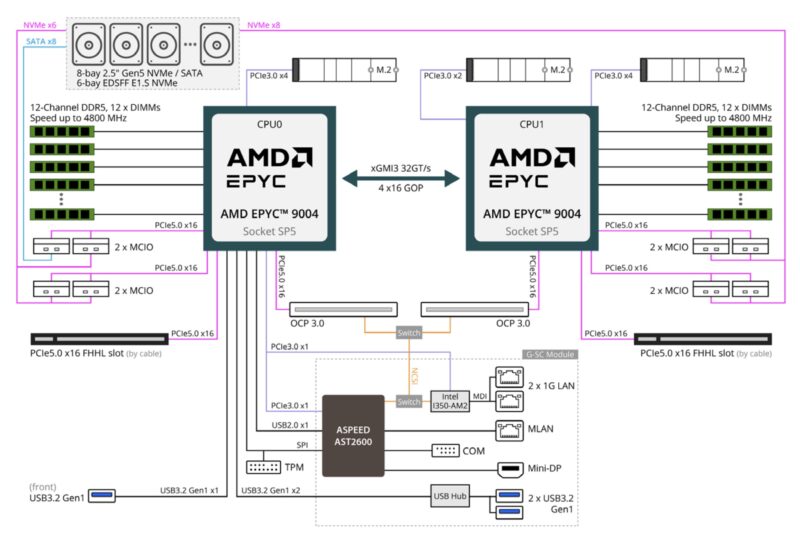

Gigabyte R183-Z95 Block Diagram

Here is the system block diagram for the server.

While most of this is symmetric, Gigabyte is using the PCIe Gen3 lanes in an interesting fashion. Each CPU gets an M.2 SSD in a PCIe Gen3 x4 slot. CPU1 gets an additional PCIe Gen3 x2 M.2 slot. On CPU0, the equivalent two PCIe Gen3 lanes are instead split between the Intel i350-AM2 1GbE NIC and the ASPEED AST2600 BMC. This is very common, since if one did run the system with only a single socket populated, one would want the BMC and lower-speed NICs to remain active. Still, if you want a single-socket server, you are probably better off buying a platform designed for a single socket.

Another feature worth noting is that Gigabyte is using a full 4×16 Infinity Fabric link between the sockets. Some servers use only a 3×16 link, which frees up 32 extra PCIe Gen5 lanes. In a 1U form factor, those extra lanes are unlikely to be used due to space constraints, so Gigabyte's choice here is the higher-performance configuration.
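For readers who want to see where the 32-lane figure comes from, here is a minimal sketch of the lane arithmetic. It assumes each socket exposes 128 high-speed lanes that can serve either as PCIe Gen5 or as socket-to-socket Infinity Fabric links, with each link 16 lanes wide; the function and constant names are ours for illustration.

```python
# Assumption: each EPYC 9004 socket has 128 high-speed lanes that are shared
# between PCIe Gen5 and socket-to-socket Infinity Fabric links (x16 each).
LANES_PER_SOCKET = 128
LANES_PER_LINK = 16
SOCKETS = 2


def pcie_lanes_available(fabric_links: int) -> int:
    """Total PCIe Gen5 lanes left across both sockets for a given link count."""
    used_per_socket = fabric_links * LANES_PER_LINK
    return SOCKETS * (LANES_PER_SOCKET - used_per_socket)


four_link = pcie_lanes_available(4)
three_link = pcie_lanes_available(3)
print(f"4x16 links: {four_link} PCIe Gen5 lanes")
print(f"3x16 links: {three_link} PCIe Gen5 lanes")
print(f"Difference: {three_link - four_link} lanes")
```

Under those assumptions, dropping from four links to three trades inter-socket bandwidth for 32 more PCIe lanes, which only helps if the chassis has room for devices to use them.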

Next, let us get to management.

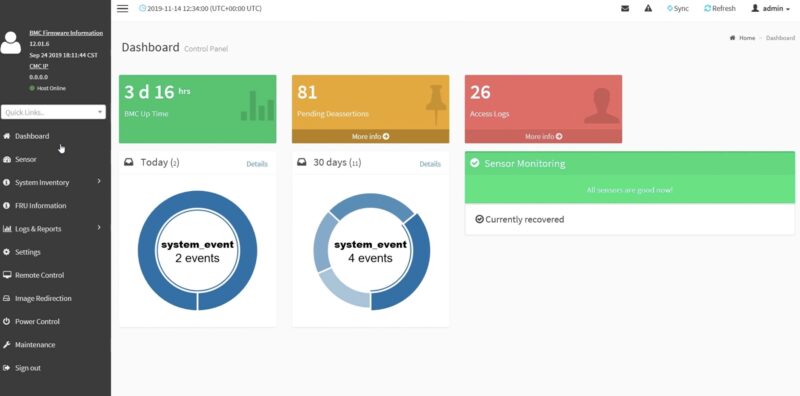

Gigabyte R183-Z95 Management

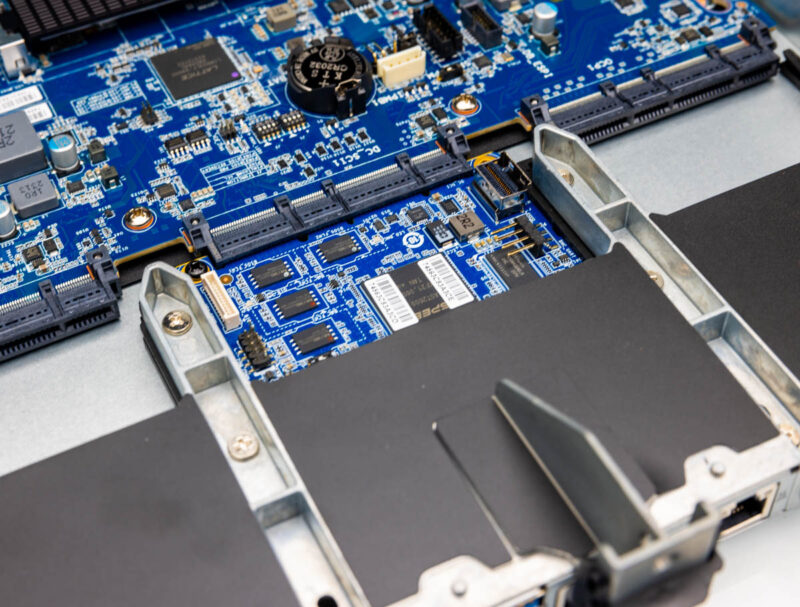

On the management side, we get something very standard for Gigabyte: an ASPEED AST2600 BMC. It sits on its own card, which is becoming more common than putting the BMC on the motherboard. Having the BMC on a card means that one can easily swap it for different customers or regions.

Gigabyte is running standard MegaRAC SP-X on its servers, which is a good thing for IPMI, web management, and Redfish.
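As a rough illustration of what Redfish support means in practice, the sketch below builds a request for the standard Redfish `Systems` collection using only the Python standard library. The hostname and credentials are placeholders, the helper name is hypothetical, and we are assuming HTTP Basic authentication is enabled on the BMC; this is not taken from Gigabyte or AMI documentation.

```python
import base64
import urllib.request


def build_systems_request(bmc_host: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for the Redfish Systems collection."""
    # /redfish/v1/Systems is the standard Redfish collection of computer systems.
    url = f"https://{bmc_host}/redfish/v1/Systems"
    # Assumption: the BMC accepts HTTP Basic authentication.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


# Placeholder host and credentials, for illustration only.
req = build_systems_request("bmc.example.net", "admin", "unique-password")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it. BMCs often ship with self-signed certificates, so certificate handling would need attention before that works against a real system.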

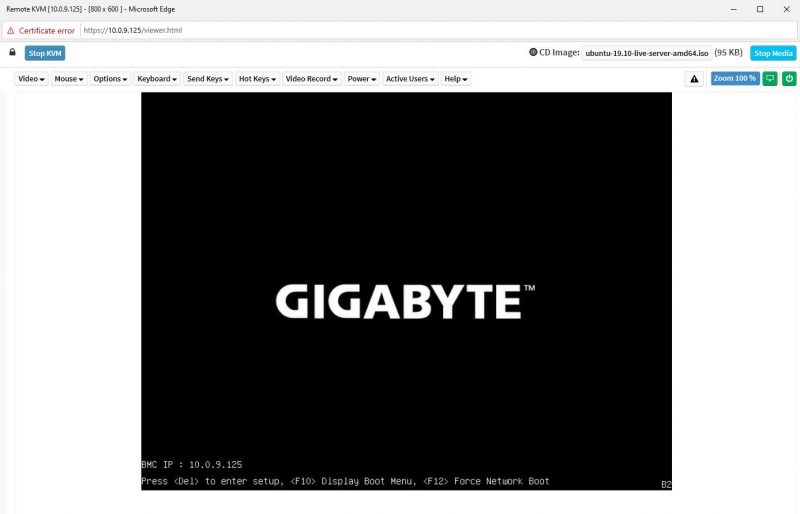

Gigabyte also includes an HTML5 iKVM.

Something that may look a bit different is that this server has a unique BMC password. For those who have not purchased servers in years, this is now common. We covered the reasons a few years ago in Why Your Favorite Default Passwords Are Changing.

Next, let us do a quick performance check.

Gigabyte R183-Z95 Performance

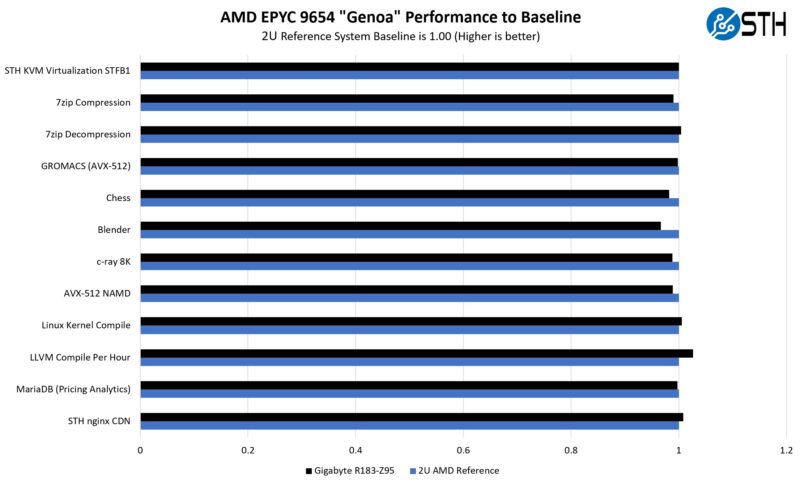

For this, we were most interested to see how the system could handle top-end CPUs in the 1U form factor. What counts as “top-end” in AMD EPYC these days is debatable, so we just picked pairs of the top 3 SKUs we had for Genoa, Genoa-X, and Bergamo and ran them against our reference AMD 2U platform. Here are the Genoa results:

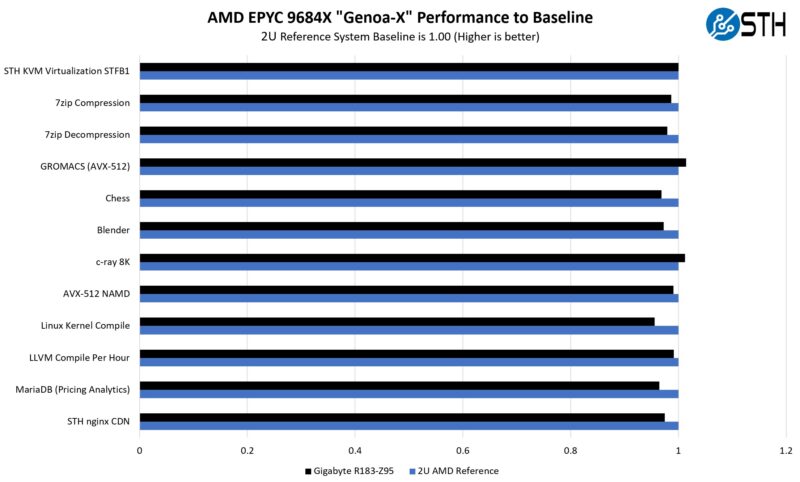

Here are the Genoa-X results:

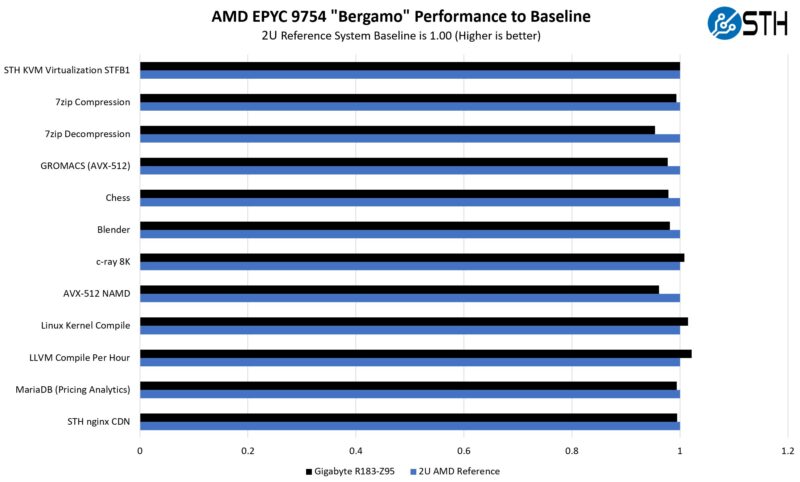

Here are the Bergamo results:

Overall, these results are all within +/- 5%, which can easily be considered margin of error. At the same time, the Genoa-X numbers were a bit lower on average. With our 24.2C rack inlet temperatures, we are still well below the 30C temperature limit for 400W CPUs in this system. With all of this being said, our reference 2U system is twice the size of the Gigabyte, and yet the performance is pretty close. Even if the 1U server were 1-2% lower on average, getting twice the density would likely outweigh that difference.
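To put the density trade-off into numbers, here is a small sketch comparing throughput per rack unit. The 0.98 figure is a hypothetical stand-in for a server that is 2% slower than the 2U reference, not a measured result from our charts.

```python
def throughput_per_rack_unit(relative_perf: float, height_u: int) -> float:
    """Relative performance delivered per rack unit of height."""
    return relative_perf / height_u


# Reference 2U platform, normalized to 1.00.
ref_2u = throughput_per_rack_unit(1.00, 2)
# Hypothetical 1U result, assumed 2% slower than the reference.
gigabyte_1u = throughput_per_rack_unit(0.98, 1)

ratio = gigabyte_1u / ref_2u
print(f"1U server delivers {ratio:.2f}x the performance per rack unit")
```

Even with that assumed 2% deficit, the 1U system delivers close to twice the performance per U, as long as the rack can supply the power and cooling.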

Next, let us get to power consumption before finishing off our review.

Server power usage uptick over time: “The power supplies at either side are LiteOn 1.6kW units”… OK, some very old (circa 2015, +/- 1 year) 2U/4-node dual-socket Xeon servers (16 DIMM slots, 12 total 3.5” drive slots) that I have as cannon fodder for my home lab have two 1,620 Watt power supplies.

Not dissing this very cool new 1U beastie, and it’s a core monster compared to those old Xeons, but that’s about 2X Watts per 1U over ~8 years. So yeah, way ahead with the new beast on lower Watts-per-core, but only filling that same rack at 50% of the slots… Interesting calculus.

Yes, the most recent chips are certainly changing the density of data centers dramatically. Considering a single rack usually maxes out somewhere around 20kW, being able to hit that in just over 12U is pretty wild.

But we are now talking about EPYC 96-core monsters with 384MB of L3 cache, 2 of which are 25% faster than 8 E5-2699 v4’s from 2016.

I think we will see some big changes in how datacenters run things with this type of density, and I, for one, am all for it.