Gigabyte R183-Z95 Internal Overview

Here is a quick view from the front of the system to help orient you as we go through this section.

First, there is the main large top cover that is secured via a nice latch. Then there is this additional metal piece.

Removing that piece allows us to get to the storage backplanes. We can see the EDSFF backplane is deeper than the 2.5″ one.

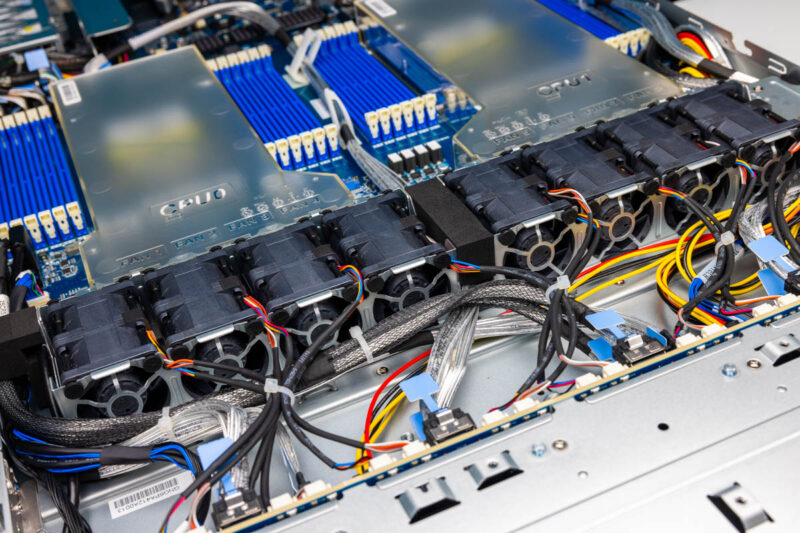

While we see the PCIe Gen5 cables to the front, we also see that the fan modules attach to the 2.5″ storage backplane. That is a bit of a bummer. If a fan fails, one will most likely need to remove this front top metal cover to reach the fan connection on the backplane. We wish Gigabyte had made this front metal cover a tool-less design, as that would help a lot on the service side.

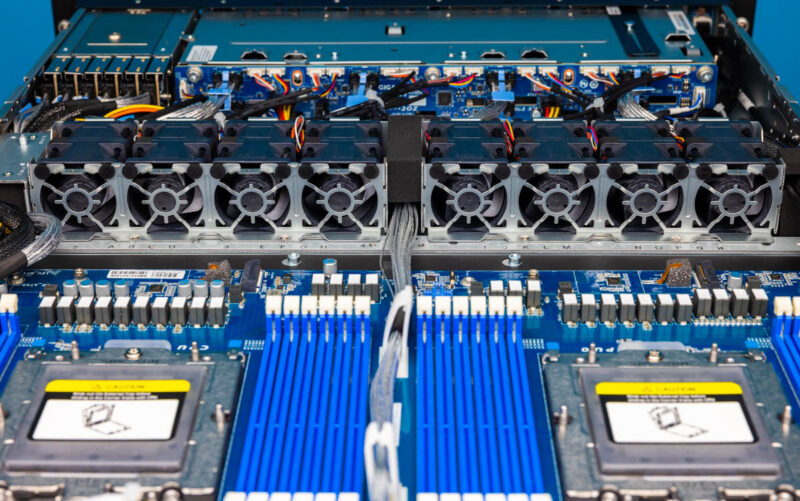

There are a total of eight dual fan modules. One advantage of moving the fan cables to the front is that it cleans up the exhaust side of the fan modules considerably.

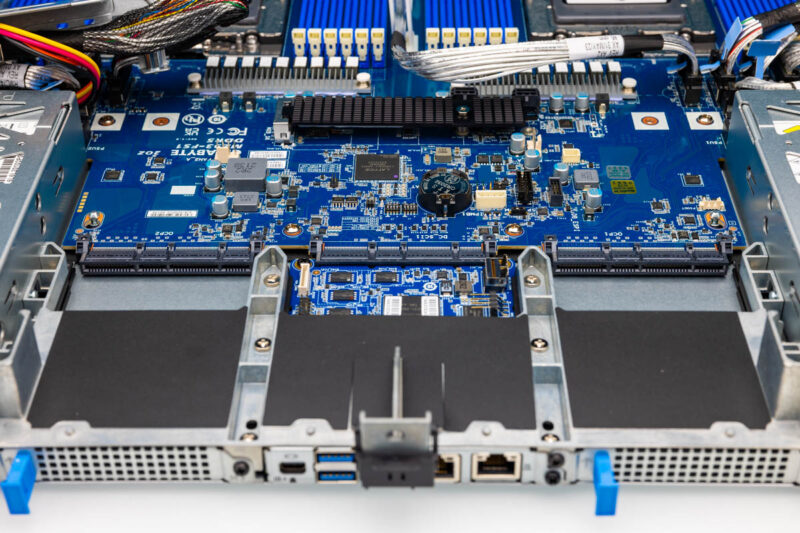

Before we get to the dual AMD SP5 sockets, there are two other features that eagle-eyed readers may have spotted.

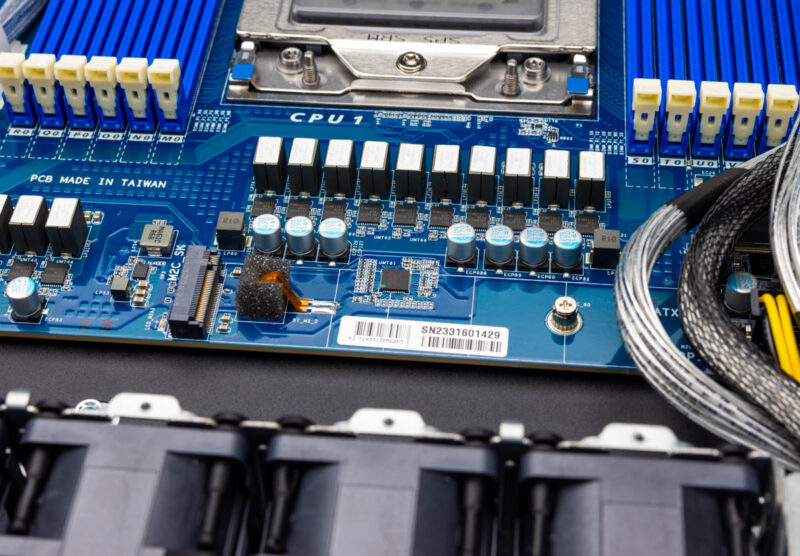

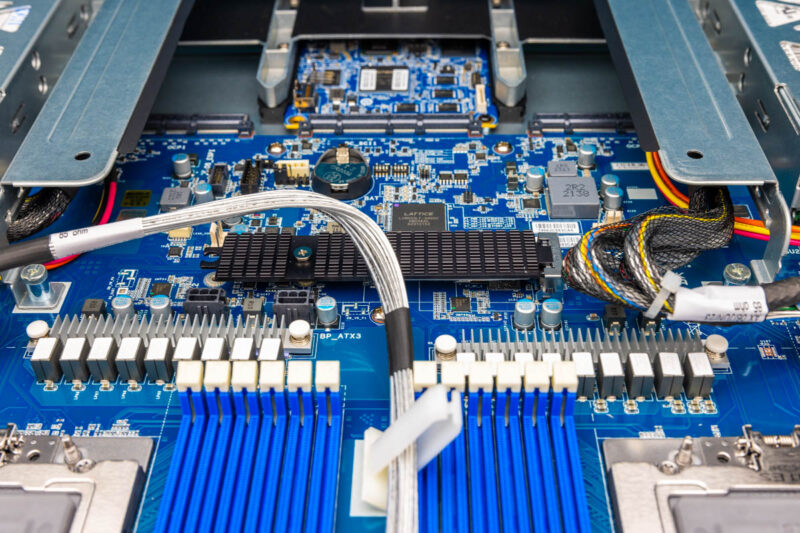

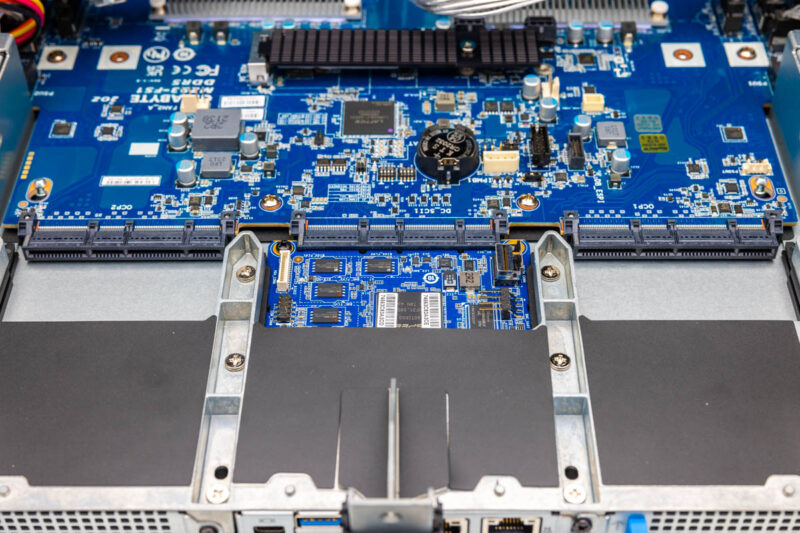

Between the fans and the CPU, we have an M.2 SSD slot.

In fact, there are two, one in front of each CPU.

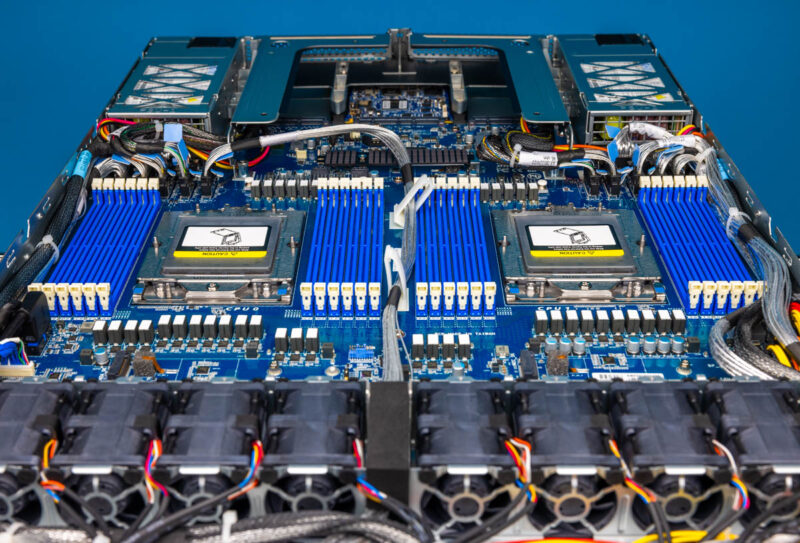

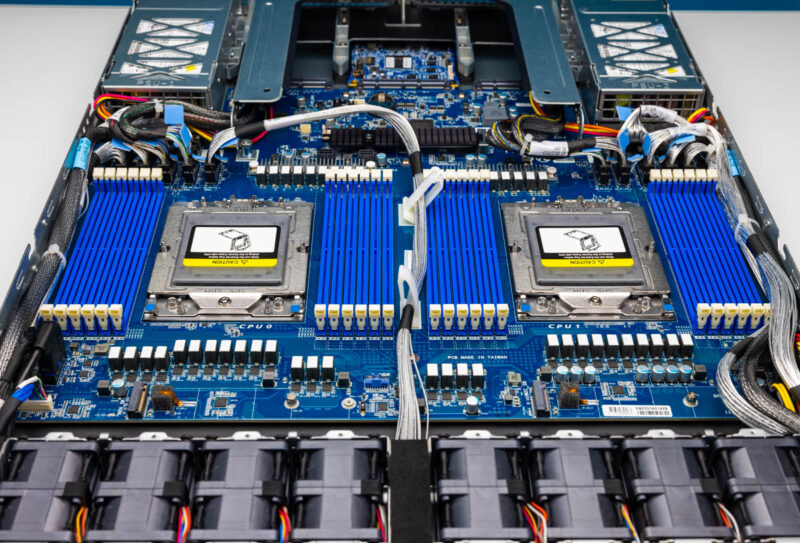

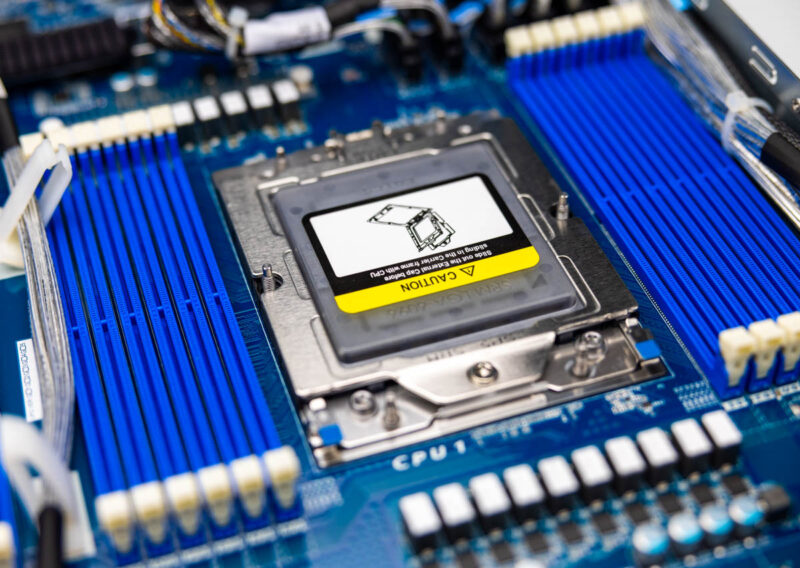

Once we get past our fourth type of SSD connectivity (2.5″, E1.S, add-in card, and now M.2) we get to the CPU sockets. These sockets currently support AMD EPYC 9004 Genoa, Genoa-X, and Bergamo processors. We expect this server to support AMD EPYC Turin later in 2024 when it is released.

Each CPU socket gets 12x DDR5 DIMM slots in a 1DPC configuration.

Something to keep in mind here is that TDPs are not unconstrained in this system. It is rated for 300W TDP at 35C ambient and 400W at 30C ambient, and factors such as which cards are installed matter if you want to get to 400W. Most data centers run under 30C, but some operators run at higher temperatures to save on cooling power.
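To make the rating concrete, here is a minimal Python sketch that maps an ambient temperature to the rated CPU TDP. It uses only the two points quoted above (400W at 30C, 300W at 35C) and assumes a simple step function between them. The names `RATED_POINTS` and `rated_tdp_watts` are ours for illustration, and the sketch ignores the installed-card caveat, so treat it as a rough planning aid rather than Gigabyte's support matrix.

```python
from typing import Optional

# (maximum ambient in C, rated CPU TDP in W), sorted by ambient temperature.
# Only the two points quoted in the review; anything else is an assumption.
RATED_POINTS = [(30.0, 400), (35.0, 300)]


def rated_tdp_watts(ambient_c: float) -> Optional[int]:
    """Return the highest rated TDP for the given ambient temperature.

    Returns None when the ambient temperature is above every rated point.
    """
    for max_ambient_c, tdp_w in RATED_POINTS:
        if ambient_c <= max_ambient_c:
            return tdp_w
    return None


if __name__ == "__main__":
    for ambient in (25, 30, 32, 35, 40):
        print(ambient, rated_tdp_watts(ambient))
```

A return value of `None` simply means the review does not quote a rating for that temperature.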

Behind the CPUs, there is a third M.2 slot, bringing the total to 17x SSD spots without having to use the PCIe risers.

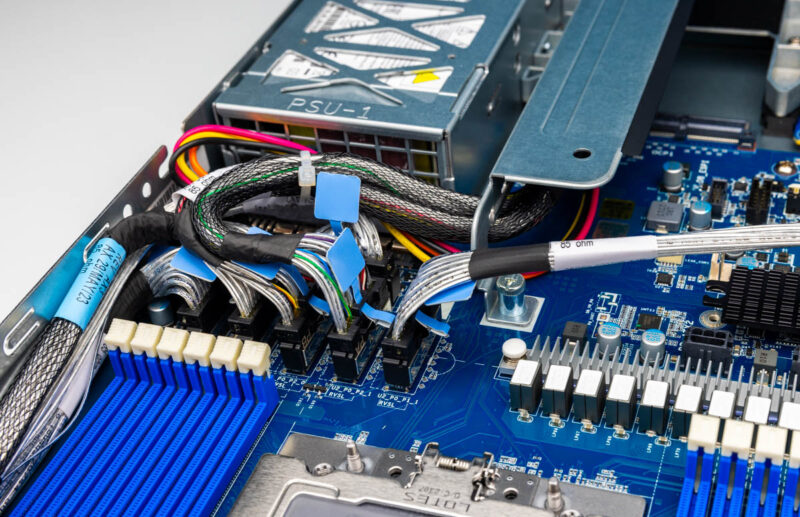

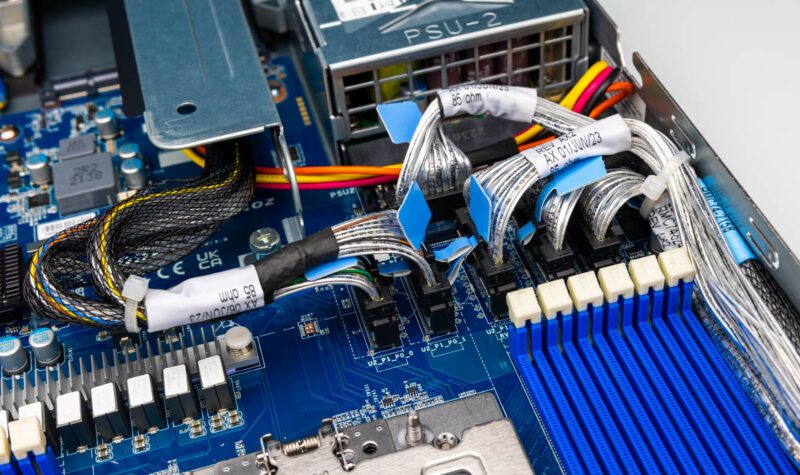

On the topic of risers, one can see the MCIO connectors between the PSU and the CPU socket.

These MCIO cable points keep PCIe traces on the motherboard to a minimum, helping to reduce the need for retimers. That lowers the cost by over $20 for each PCIe Gen5 x16 run where retimers are not used. They also add some flexibility to the system.

Looking at the rear with the risers removed, we can see the two OCP NIC 3.0 slots on either side.

In the center is our ASPEED AST2600 management BMC and low-speed NIC.

The motherboard stopping well short of the rear of the system is very common these days and will become more common as PCIe Gen5/CXL becomes more widely adopted and as we move into the PCIe Gen6 era.

Next, let us get to the block diagram.

Server power usage uptick over time

“The power supplies at either side are LiteOn 1.6kW units”… OK, some very old (circa 2015 ± 1 year) 2U/4Node dual-socket Xeon servers with 16 DIMM slots and 12 total 3.5″ drive slots that I have as cannon fodder for my home lab have two 1,620 Watt power supplies.

Not dissing this very cool new 1U beastie, and it’s a core monster compared to those old Xeons, but that’s about 2X Watts per 1U over ~8 years. So yeah, way ahead with the new beast on lower Watts-per-core, but only filling that same rack at 50% of the slots… Interesting calculus.

Yes, the most recent chips are certainly changing the density of data centers dramatically. Considering a single rack usually maxes out somewhere around 20kW, being able to hit that in just over 12U is pretty wild.

But we are now talking about EPYC 96-core monsters with 384MB of L3 cache, two of which are 25% faster than eight E5-2699 v4s from 2016.

I think we will see some big changes in how datacenters run things with this type of density, and I, for one, am all for it.
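The power-density figures in the comments above can be checked with a little arithmetic. This Python sketch assumes, as a worst case, that each chassis draws the full rating of one of its redundant power supplies (1.6kW for this 1U server, 1,620W for the older 2U/4Node chassis) and that the rack budget is the 20kW mentioned above. The function names are ours for illustration, and real draw will depend on configuration and load.

```python
RACK_BUDGET_W = 20_000      # "a single rack usually maxes out somewhere around 20kW"
NEW_PSU_W = 1_600           # LiteOn 1.6kW unit in the 1U R183-Z95
NEW_CHASSIS_U = 1
OLD_PSU_W = 1_620           # PSU rating quoted for the older 2U/4Node chassis
OLD_CHASSIS_U = 2


def watts_per_u(psu_w: float, chassis_u: int) -> float:
    """Worst-case power per rack unit, assuming one PSU rating per chassis."""
    return psu_w / chassis_u


def rack_units_at_budget(budget_w: float, w_per_u: float) -> float:
    """Rack units that can be filled before the power budget is exhausted."""
    return budget_w / w_per_u


new_w_per_u = watts_per_u(NEW_PSU_W, NEW_CHASSIS_U)
old_w_per_u = watts_per_u(OLD_PSU_W, OLD_CHASSIS_U)

if __name__ == "__main__":
    print(f"New: {new_w_per_u:.0f} W/U, old: {old_w_per_u:.0f} W/U")
    print(f"Ratio: {new_w_per_u / old_w_per_u:.2f}x")
    print(f"Rack units at 20kW: {rack_units_at_budget(RACK_BUDGET_W, new_w_per_u):.1f}U")
```

Under these assumptions the ratio comes out just under 2x per rack unit, and the 20kW budget is reached at 12.5U, which lines up with the "about 2X" and "just over 12U" figures quoted by the commenters.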