The Gigabyte H262-Z62 is thoroughly modern. This 2U server packs an absolute ton of functionality. For those ready to make the jump to AMD EPYC with the newest 7002 series CPUs, codenamed “Rome”, you will be rewarded with this platform. Not only will you get more cores than with Intel Xeon in a similar form factor, but you will also get more memory capacity (with standard SKUs), more memory bandwidth, and more PCIe bandwidth than you see being used in most 1U servers. Beyond just the raw features, these systems also include a new management feature to help manage all four nodes in the chassis. In our review, we are going to show what makes this system special.

Gigabyte H262-Z62 Overview

If you saw our Gigabyte H261-Z60 and Gigabyte H261-Z61 reviews, much of this review is going to look familiar. It is still a 2U 4-node platform with many of the same chassis features; one simply gets more of everything with this platform. One can see a great example of this just by looking at the front of the chassis:

You can see the 24-bay 2.5″ U.2 NVMe SSD front bay solution. Each node gets 6x NVMe drives. These are still PCIe Gen3, and that will likely remain the case until more Gen4 drives come out next year and Gen4 U.3 backplanes hit the market. Still, these six bays are not the only NVMe storage these systems have. Just for comparison, here is the system in our rack:

Below the Gigabyte H262-Z62 is the H261-Z61 we reviewed previously as an AMD EPYC 7001 series platform. That platform only has two NVMe bays per node as well as four SAS/SATA bays. Now, all six front bays can be NVMe and there is plenty of additional PCIe bandwidth beyond that. You can see that same 4+2 configuration on the Intel Xeon E5-2600 V4 2U4N system above these two. That system is a 2U 4-node Intel Xeon E5-2630 V4 system with 2x 2TB NVMe drives per node and a 50GbE adapter in each node. With the H262-Z62 we can now effectively consolidate those Xeon E5 V4 platforms by a 3:1 or 4:1 ratio into each H262-Z62 system, and that is comparing against what was state-of-the-art only about 30 months earlier. More on that later in this review.

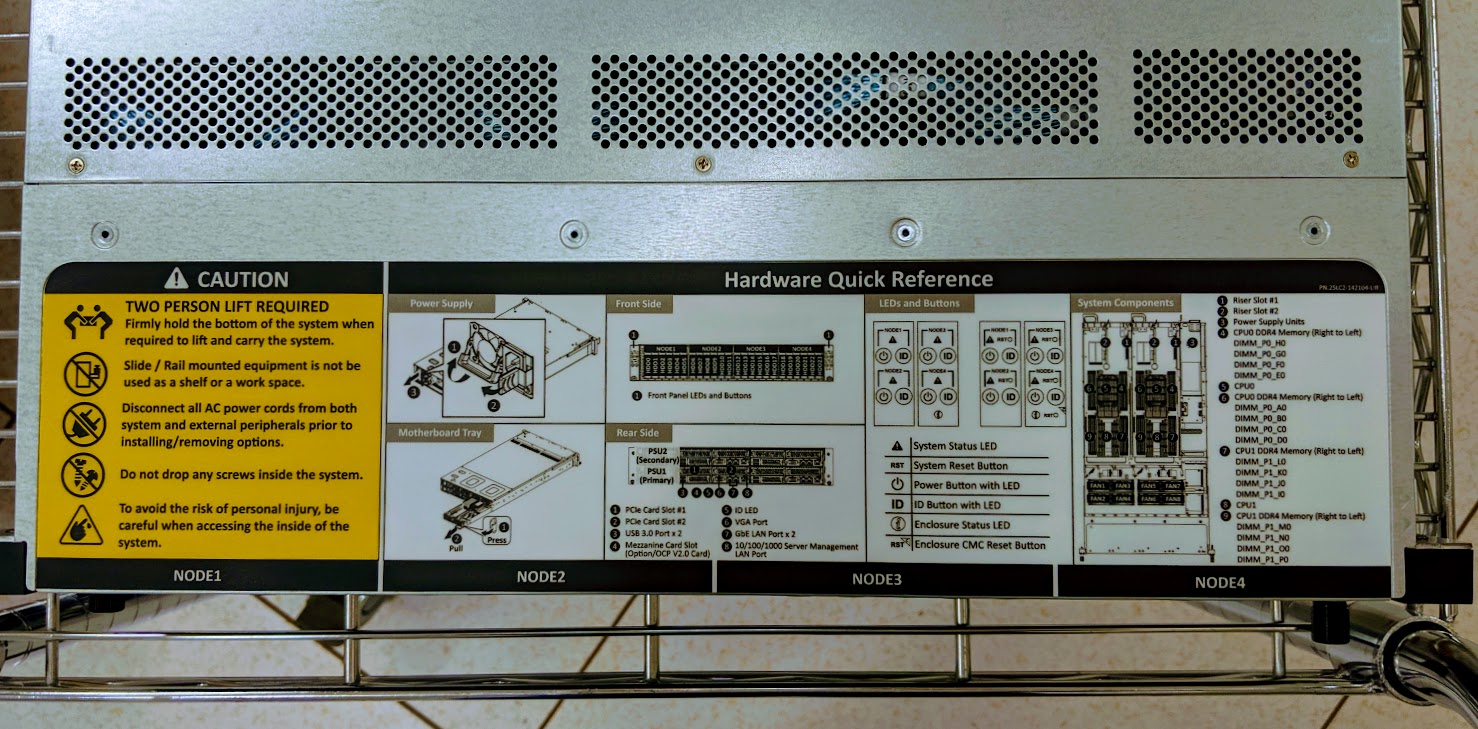

Installing the system into a rack, the server comes with a hardware quick reference guide. This is not the most extensive we have ever seen for a server, but it is adequate. Some vendors do not include this type of diagram. It shows how Gigabyte has been increasing its quality over the past few years which includes little details like this. Gigabyte’s servers are rebranded by some other larger traditional server players, and you can see how those OEM contracts are helping the design of these servers.

That increased attention to detail also extends to the shipping packaging, which uses high-density foam and is really a step up from what we have seen previously.

Other simple features such as the tool-less rails and now tool-less drive trays make working on the systems much easier.

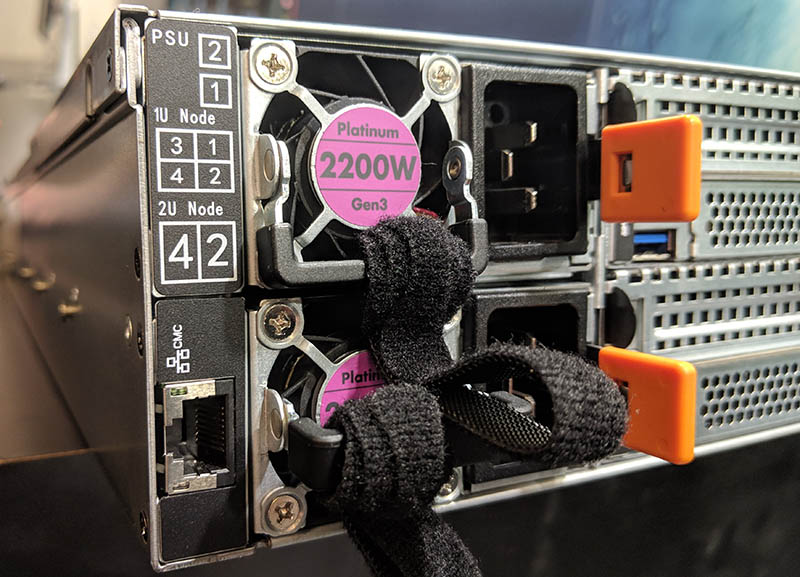

Moving to the rear of the chassis, we see dual 2.2kW 80Plus Platinum power supplies. We are just going to note here that you can put enough components in the chassis to exceed 2.2kW. We would like to see Gigabyte offer larger PSUs in the future for this platform. At the same time, if you are running the average virtualization cluster, you will not hit this number. Even with an HPC cluster, you will not see 2.2kW here. It requires loading the entire box with components and then putting everything under heavy load to get there.

We wanted to note here that one gets two USB 3.0 ports, a VGA port, two 1GbE ports, and a management port in each node. That is more than we saw with the Cisco UCS C4200 as an example. One also gets an OCP port and two low profile expansion ports. More on those when we get to the node overview.

Turning to the CMC, we wanted to highlight this chassis management controller port. Gigabyte offers not only per-node onboard IPMI, but also the ability to use a single port for management. We are going to discuss those changes later in this review.

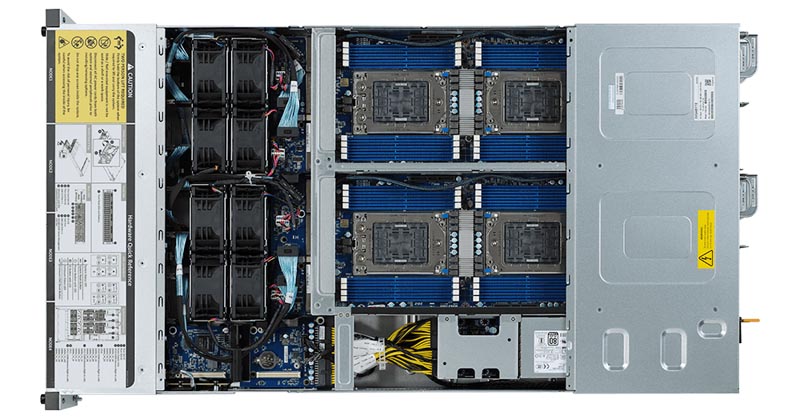

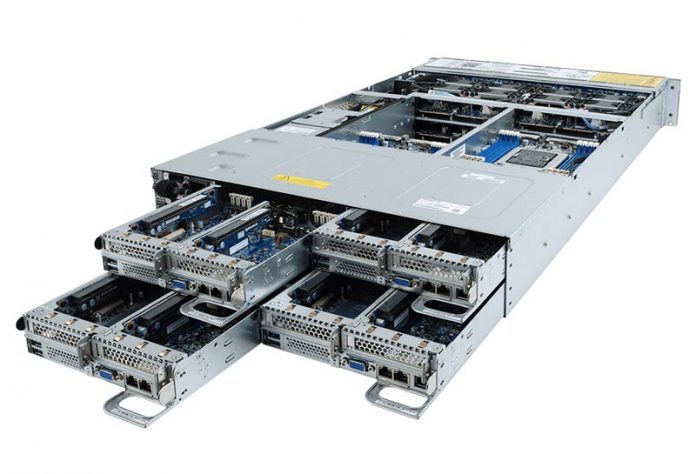

Here is the inside of the chassis. One can see 8x 80mm fans to provide immense and redundant cooling in the chassis. Next to these fans is the CMC logic. One can see two option slots and the CMC BMC just below the fans. This is actually something new compared with the H261 generation that we will go into in the management section later.

Overall, this is a fairly typical 2U 4-node setup, but Gigabyte has made generational improvements such as the 24x U.2 NVMe SSD bays and tool-less carriers.

Gigabyte H262-Z62 Node Overview

In this section, we are going to do something a little different. We brought an unconfigured and a configured node into the studio to show off both versions.

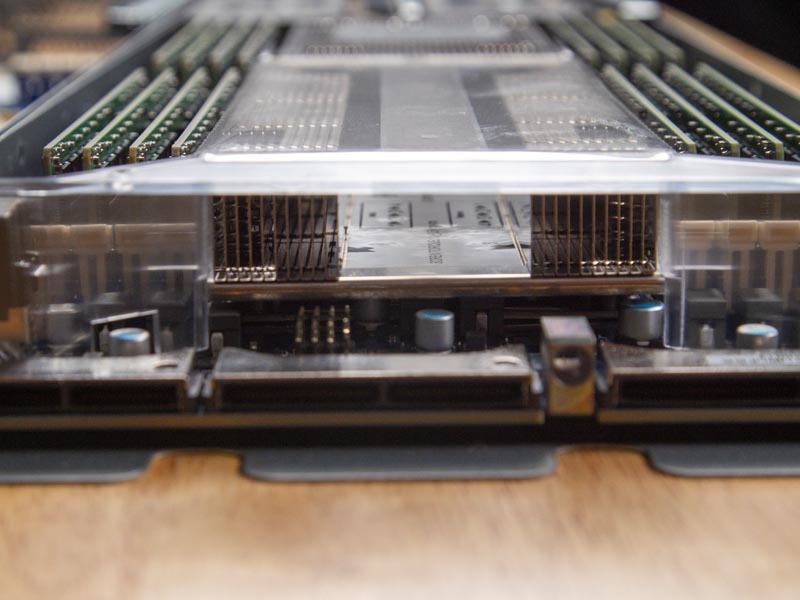

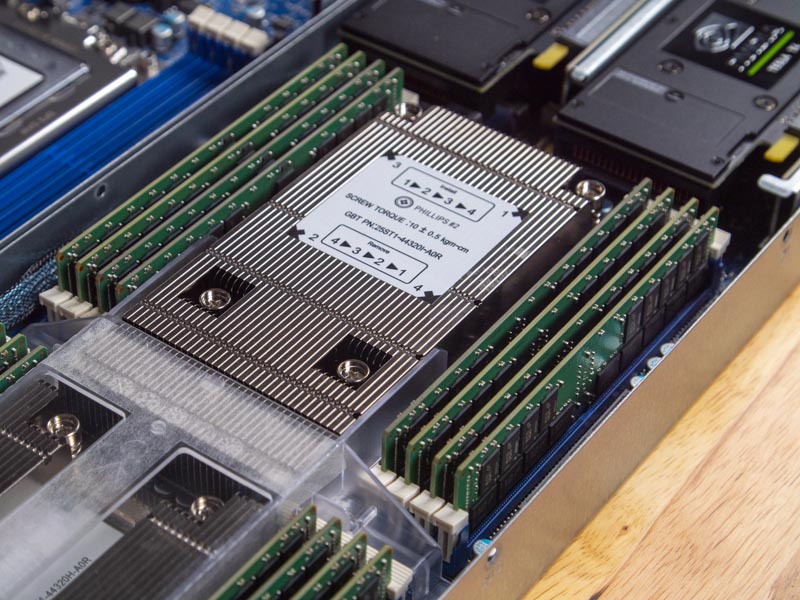

First, looking at the front of the node, one can see high-density connectors for mating the node with the chassis. These carry signals to the chassis management controller (CMC), front NVMe drive bays, and power supplies. One can see the air shroud that covers the first heatsink and the eight DIMMs per CPU. Those eight DIMM slots support up to 128GB modules, meaning one can fit up to 1TB of memory per CPU or 2TB of memory per node.

That large air channel in the front heatsink and the air shroud help to direct air to the second larger heatsink. The Gigabyte H262-Z62 supports up to 200W TDP for chips like the EPYC 7702. There are options from Gigabyte for supporting up to 240W cTDP chips in this system such as the AMD EPYC 7642 and EPYC 7742. One can run these chips at their full potential, although Intel is not willing to do so for their benchmark comparisons.

Taking a second to marvel at these heatsinks, here they are fresh and still in their boxes. If you see the large overhangs past the screws, this is very different from the dimensions we see on typical 1U and 2U coolers. They are much larger for the Gigabyte H262. It may not seem like a big step, but they unlock the ability to trade higher power consumption for a bin or two of higher CPU performance with AMD’s cTDP feature on some chips.

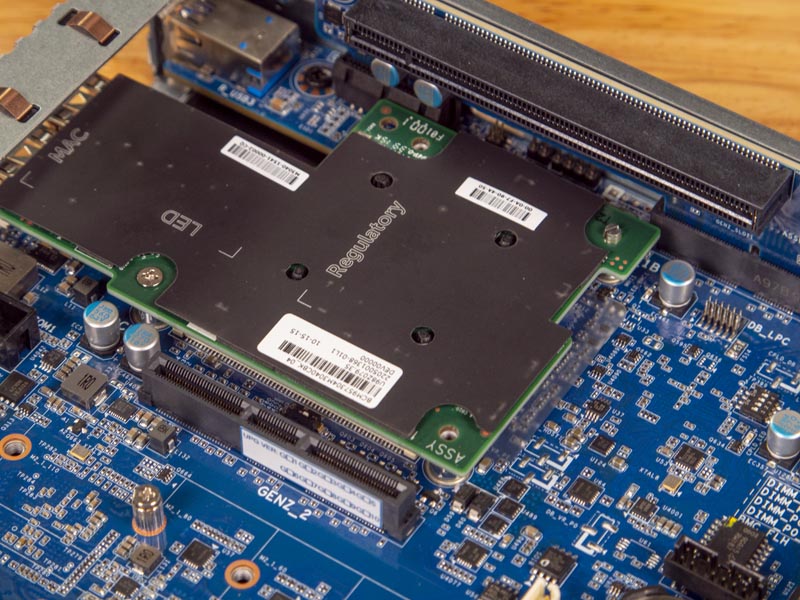

While the CPU sockets can hold up to a total of 128 cores/ 256 threads per node, the expansion capabilities are truly impressive. Here is a good example. One can see the PCIe riser, interestingly labeled “GENZ_2” along with two M.2 NVMe slots. Gigabyte is able to support full M.2 22110 NVMe SSDs here. Combined with the six front panel SSDs, one can use a total of eight PCIe Gen3 x4 NVMe SSDs in each node.

On the other side (we removed that riser to make it easier to see) one can see that there is an OCP 2.0 slot that has a PCIe 3.0 x16 connection. We expect future Gigabyte product generations to support OCP 3.0 and PCIe Gen4, but those NICs will not be available in quantity until next year. For now, this is a great solution. We have a dual-port 25GbE Broadcom NIC installed here, but technically there is bandwidth for dual 50GbE networking or 100GbE networking here.

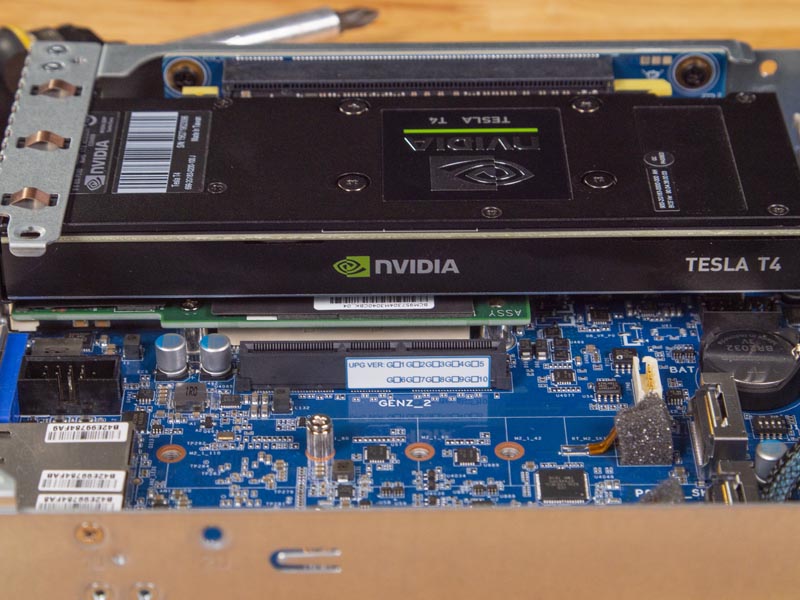

Above that, we get another GEN_Z1 riser. Both of the risers are PCIe 4.0 x16 slots, so one can, in theory, use 200GbE / 200Gbps InfiniBand cards or fully saturate dual 100GbE / 100Gbps InfiniBand cards in a node. In our case, we used NVIDIA Tesla T4s. These are NVIDIA’s versatile inferencing cards. You can read our NVIDIA Tesla T4 AI Inferencing GPU Benchmarks and Review for more about the cards. Although they are not officially supported, we were able to run them in lower-TDP CPU nodes without issue.

Yes, that is my favorite screwdriver that made a cameo in that photo.
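The networking headroom claims for the OCP slot and the two risers can be sanity-checked with a little arithmetic. The sketch below is a rough estimate only: it uses the standard PCIe per-lane signaling rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b encoding, and it ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function names are our own for illustration.

```python
# Approximate one-direction PCIe slot bandwidth after 128b/130b encoding.
# Protocol overhead (TLP headers, flow control) is ignored, so these are
# upper bounds rather than measured throughput.
GT_PER_LANE = {"gen3": 8.0, "gen4": 16.0}  # gigatransfers per second per lane
ENCODING = 128 / 130                        # 128b/130b line coding efficiency


def slot_gbps(gen: str, lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe slot in Gbps."""
    return GT_PER_LANE[gen] * lanes * ENCODING


def fits(gen: str, lanes: int, ports: int, port_gbps: float) -> bool:
    """True if the slot can carry every NIC port at line rate."""
    return ports * port_gbps <= slot_gbps(gen, lanes)


# OCP 2.0 slot: PCIe Gen3 x16
print("Gen3 x16 Gbps:", round(slot_gbps("gen3", 16), 1))
print("dual 50GbE fits:", fits("gen3", 16, 2, 50))
print("single 100GbE fits:", fits("gen3", 16, 1, 100))
print("dual 100GbE fits:", fits("gen3", 16, 2, 100))

# Riser slots: PCIe Gen4 x16
print("Gen4 x16 Gbps:", round(slot_gbps("gen4", 16), 1))
print("dual 100GbE fits:", fits("gen4", 16, 2, 100))
print("single 200GbE fits:", fits("gen4", 16, 1, 200))
```

Under these assumptions, a Gen3 x16 link has room for a single 100GbE port or two 50GbE ports but not two 100GbE ports at line rate, which is why the Gen4 risers matter for 200Gbps-class adapters.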

Here is a node put together. If the idea of 128 cores/ 256 threads, dual NVIDIA Tesla T4 GPUs, 8x NVMe SSDs, and 2TB of memory does not get you excited these days, then there is not much we can show our readers that will.

Thinking about this from a chassis level, in the Gigabyte H262-Z62, one can put up some eye-popping totals.

- CPU: Up to 512 cores/ 1024 threads in only 8 CPUs

- Memory: 8TB of memory capacity

- PCIe Expansion: 8x PCIe Gen4 x16 cards

- Networking Expansion: 4x OCP 2.0 PCIe x16 networking cards

- M.2 Storage: 8x M.2 22110 NVMe SSDs (PCIe Gen3)

- U.2 Storage: 24x U.2 NVMe SSDs (PCIe Gen3)

- Onboard networking: 8x Intel i350 1GbE ports

The chassis itself is only 840mm or just over 33 inches deep and serviceable from both sides which means it fits in just about every rack. For comparison, we tested the Supermicro BigTwin SYS-2029BZ-HNR with Intel Xeon Scalable CPUs a few months ago and had a maximum of 224 cores. The Gigabyte system is about 2.29x as dense and the PCIe Gen4 slots give more available PCIe bandwidth for peripherals as well.
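The chassis totals and the core-density comparison follow directly from the per-node maximums described in the node overview. As a minimal sketch, the per-node figures below are taken from this review, with 64 cores per CPU inferred from the 128 cores per node across two sockets; the `NodeConfig` class and `chassis_totals` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

NODES_PER_CHASSIS = 4  # 2U 4-node chassis


@dataclass(frozen=True)
class NodeConfig:
    """Maximum per-node configuration as described in the review."""
    cpus: int = 2
    cores_per_cpu: int = 64      # inferred: 128 cores per node, two sockets
    threads_per_core: int = 2    # SMT
    dimms_per_cpu: int = 8
    dimm_gb: int = 128
    gen4_x16_slots: int = 2
    ocp_slots: int = 1
    m2_ssds: int = 2
    u2_ssds: int = 6
    gbe_ports: int = 2


def chassis_totals(node: NodeConfig, nodes: int = NODES_PER_CHASSIS) -> dict:
    """Scale the per-node maximums up to a full chassis."""
    cores = node.cpus * node.cores_per_cpu * nodes
    memory_gb = node.cpus * node.dimms_per_cpu * node.dimm_gb * nodes
    return {
        "cpus": node.cpus * nodes,
        "cores": cores,
        "threads": cores * node.threads_per_core,
        "memory_tb": memory_gb // 1024,
        "gen4_x16_cards": node.gen4_x16_slots * nodes,
        "ocp_cards": node.ocp_slots * nodes,
        "m2_ssds": node.m2_ssds * nodes,
        "u2_ssds": node.u2_ssds * nodes,
        "gbe_ports": node.gbe_ports * nodes,
    }


totals = chassis_totals(NodeConfig())

# Core count quoted in the review for the Supermicro BigTwin SYS-2029BZ-HNR
BIGTWIN_MAX_CORES = 224
density_ratio = totals["cores"] / BIGTWIN_MAX_CORES

for name, value in totals.items():
    print(f"{name}: {value}")
print(f"core density vs BigTwin: {density_ratio:.2f}x")
```

Keeping the per-node figures in one place makes it easy to rerun the comparison for a lower core count SKU or smaller DIMMs by overriding individual fields, for example `NodeConfig(cores_per_cpu=32, dimm_gb=64)`.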

Next, we are going to take a look at the node topology, before moving to the rest of our review.

I think…I’m in love.

Gigabyte are really becoming a competitive name on features and design with their server hardware. Genuinely thinking of getting a Gigabyte node into our generally Supermicro rack and making decisions!

Do those PCIe x16 slots support 4×4 bifurcation? Then one could have 10x M.2 per node plus the OCP networking and the U.2 bays. This would make it a high-speed storage king.

Gigabyte has those dual PCB bifurcation cards, that would just fit perfectly in the chassis…

I just wish Gigabyte did a better job with the buggy SP-X. They’ve now got good hardware but we’ve seen the BMC die when doing iKVM several times on another EPYC model.

“We also wish that the NVMe drives were PCIe Gen4 capable.”

Well, sure, but what PCIe 4.0 enterprise NVMe card are they going to run? Lots of stuff announced but nothing I can see actually on the market until next year. A shame, really.