Gigabyte H262-Z62 Node Topology

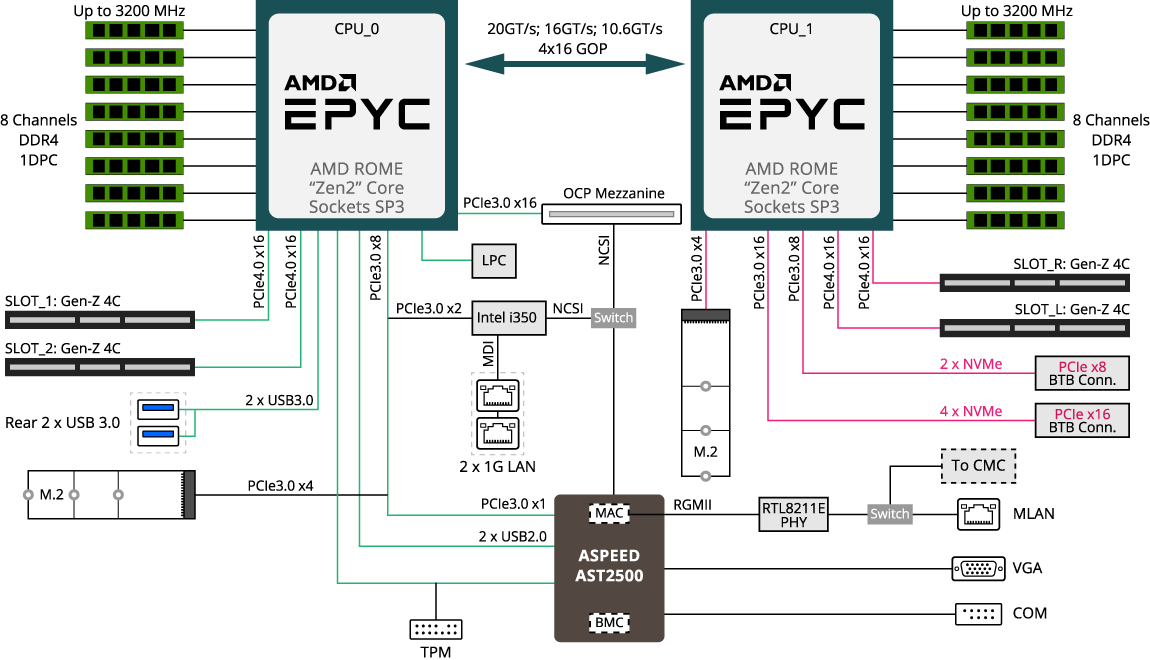

One of the really interesting aspects of the Gigabyte H262-Z62 is the system topology. Here is Gigabyte’s block diagram to frame our discussion:

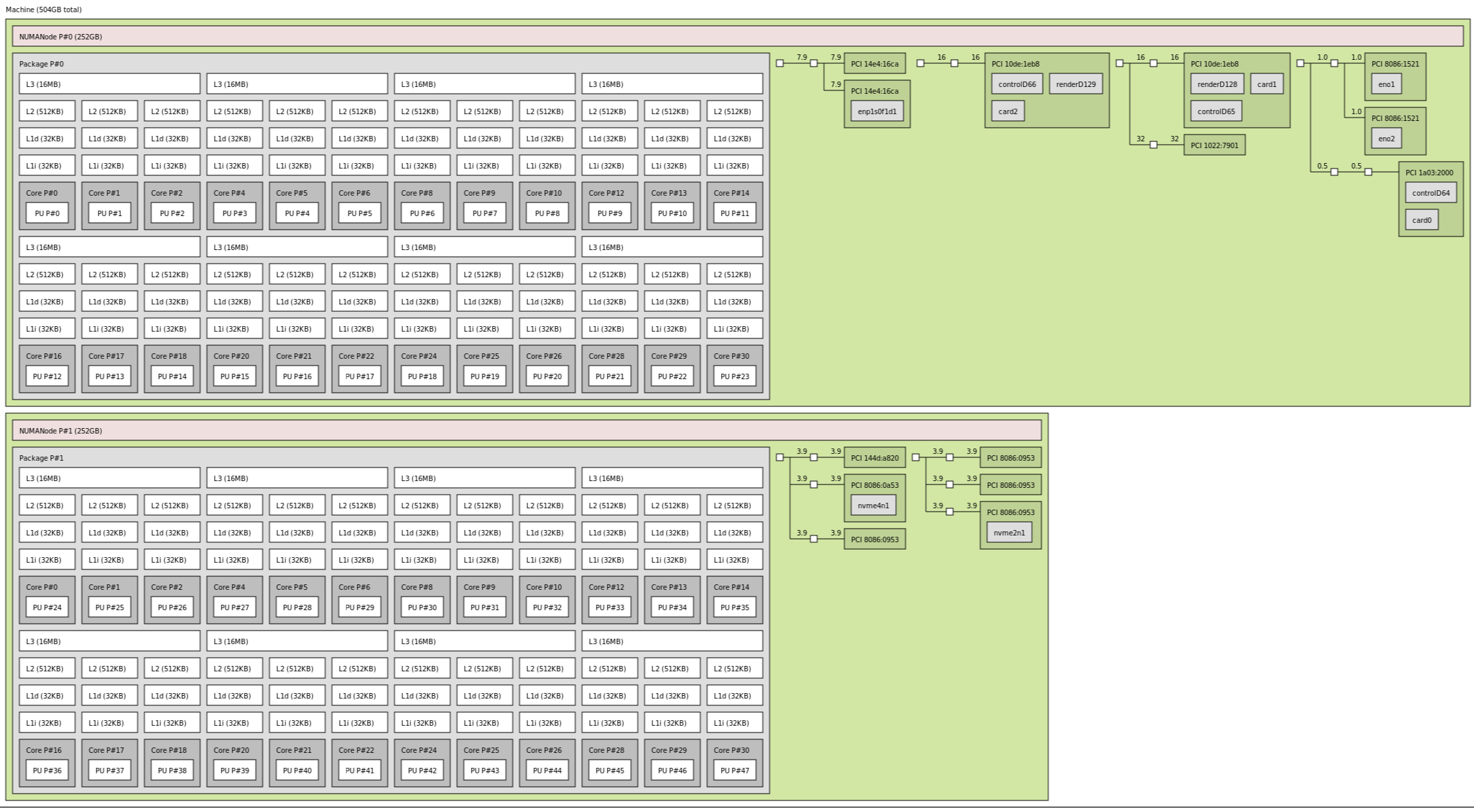

This is what a test node looks like with several NVMe SSDs, two NVIDIA Tesla T4 GPUs, and an OCP NIC:

In both the block diagram and the topology output, you can see two NUMA nodes. That is a big deal. When we tested the Gigabyte H261-Z61, we had eight NUMA nodes per system. With the new AMD EPYC 7002 series, AMD has one NUMA node per socket, like Intel Xeon, but with more than twice the number of cores. You can read more about the series here: AMD EPYC 7002 Series Rome Delivers a Knockout.

We actually had to step down to the 24-core AMD EPYC 7402 CPUs for that topology shot because, with so many peripherals, the dual 64-core CPU shots started to look unwieldy.

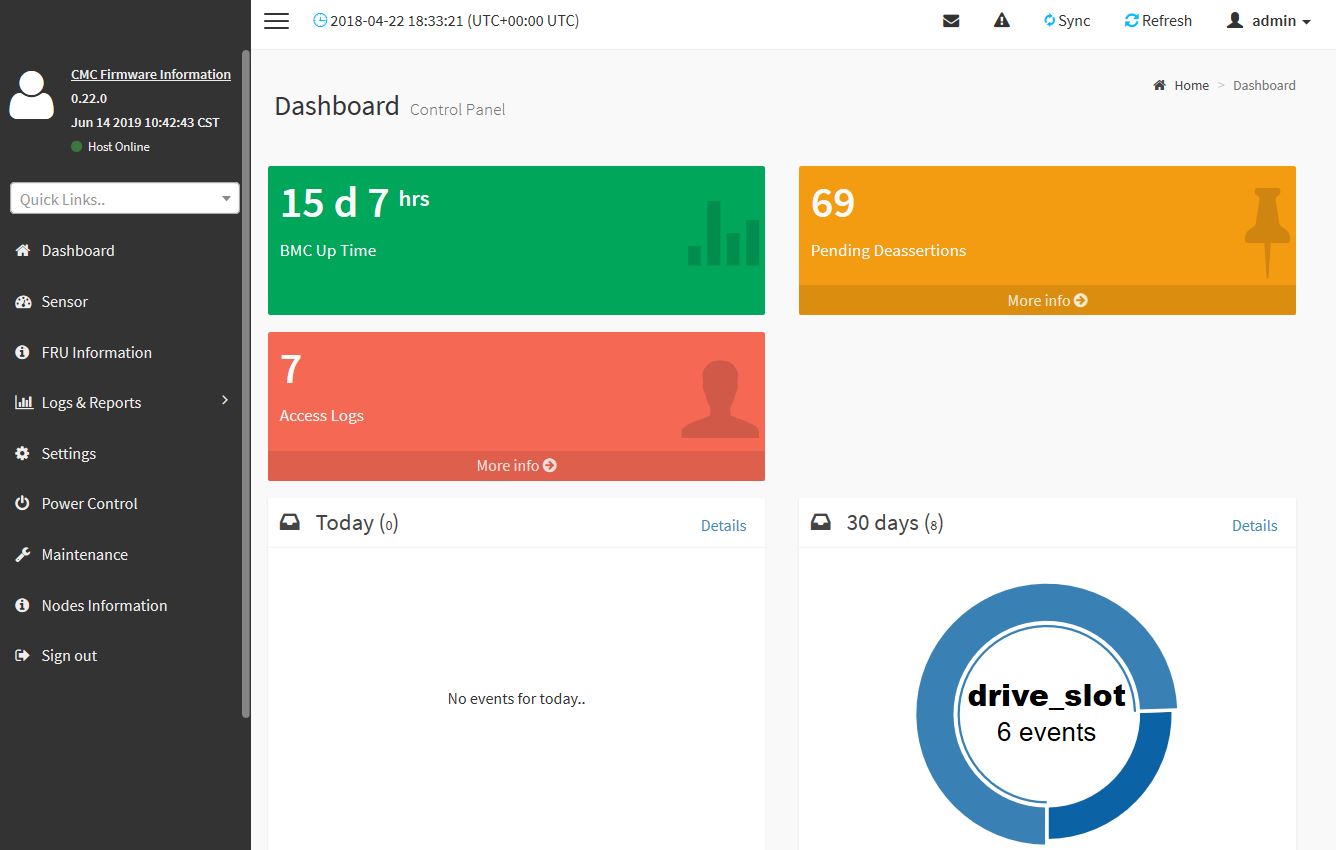
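For readers who want to check the NUMA node count on their own Linux node without generating a full topology image, here is a minimal sketch. It assumes the standard Linux sysfs layout under `/sys/devices/system/node`, and the function names `parse_cpulist` and `numa_nodes` are our own illustrative helpers, not part of any Gigabyte or AMD tooling.

```python
from pathlib import Path


def parse_cpulist(text):
    """Expand a Linux cpulist string such as '0-23,48-71' into a list of CPU ids."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            cpus.extend(range(int(low), int(high) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_nodes(base="/sys/devices/system/node"):
    """Return {node_id: [cpu ids]} read from sysfs, or {} if the path is missing."""
    nodes = {}
    root = Path(base)
    if not root.is_dir():
        return nodes
    for entry in root.glob("node[0-9]*"):
        cpulist = entry / "cpulist"
        if cpulist.is_file():
            # Directory names look like 'node0', 'node1', ...
            nodes[int(entry.name[4:])] = parse_cpulist(cpulist.read_text())
    return nodes


if __name__ == "__main__":
    for node_id, cpus in sorted(numa_nodes().items()):
        print(f"NUMA node {node_id}: {len(cpus)} logical CPUs")
```

On a dual-socket system that exposes one NUMA node per socket, we would expect this to list two nodes, each holding that socket's logical CPUs.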

Next, we are going to start looking at management features starting with Gigabyte’s CMC.

Gigabyte H262-Z62 CMC A Big Feature

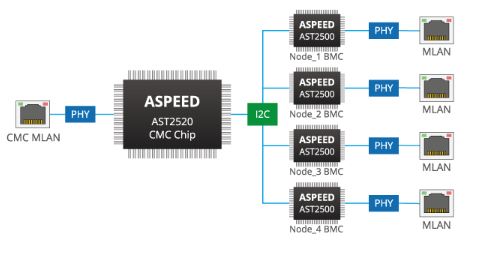

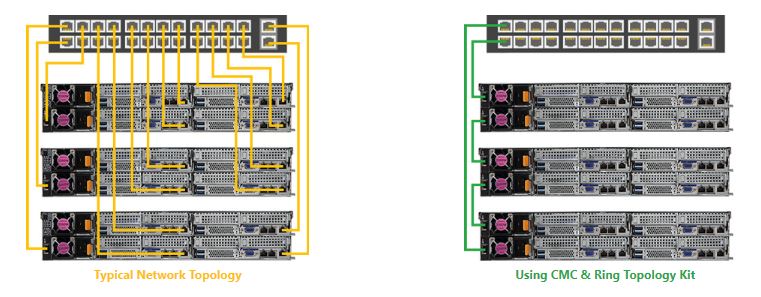

One of the newer features in the Gigabyte H262-Z62 is the CMC. CMC stands for Central Management Controller. This serves two purposes. First, it allows for some chassis-level features. Second, it allows a single RJ-45 cable to access all four IPMI management ports in the chassis, which greatly reduces cabling. With 2U 4-node chassis, cabling becomes a big deal. Removing cabling means better cooling efficiency and less spent on cabling, installation, and switch ports. The CMC is an option we wish Gigabyte would make standard on 2U4N designs going forward.
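To put the cabling point in numbers, here is a small back-of-the-envelope sketch. It assumes one management cable per node without the CMC and one per chassis with it, as described above. The function name `management_cables` and the ten-chassis rack are hypothetical illustrations, not figures from our test setup.

```python
def management_cables(chassis, nodes_per_chassis=4, use_cmc=False):
    """Count management cables (and switch ports) for a group of multi-node chassis.

    Assumption: without a CMC each node BMC needs its own cable; with a CMC
    a single RJ-45 cable serves the whole chassis.
    """
    if chassis < 0 or nodes_per_chassis < 0:
        raise ValueError("counts must be non-negative")
    return chassis if use_cmc else chassis * nodes_per_chassis


if __name__ == "__main__":
    rack = 10  # hypothetical number of 2U4N chassis in a rack
    print("Without CMC:", management_cables(rack))
    print("With CMC:   ", management_cables(rack, use_cmc=True))
```

For the hypothetical ten-chassis rack, that is 40 management cables and switch ports without the CMC versus 10 with it.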

The CMC is run by an ASPEED AST2520 BMC. Whereas we saw a legacy Avocent firmware on the H261 units, the new Gigabyte H262 utilizes a more modern MegaRAC SP-X HTML5 interface.

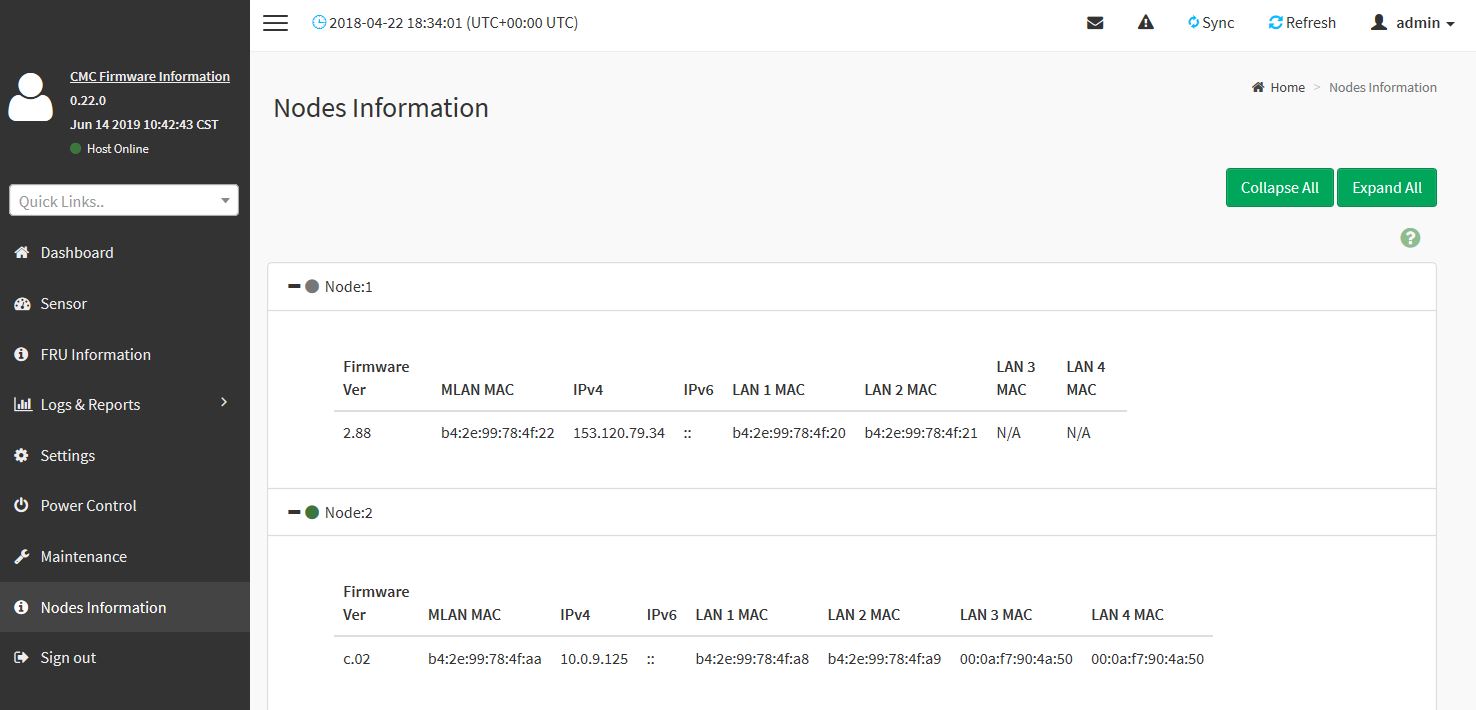

The “Node MAC Address” page shows information on each node that is physically plugged in. Using the four test cases shown above, we can see that if the node is powered on, we can get the LAN MAC addresses as well as the BMC address and IPv4/IPv6 addresses. In a cluster of 2U4N machines, this is important when you need to troubleshoot and find a node.

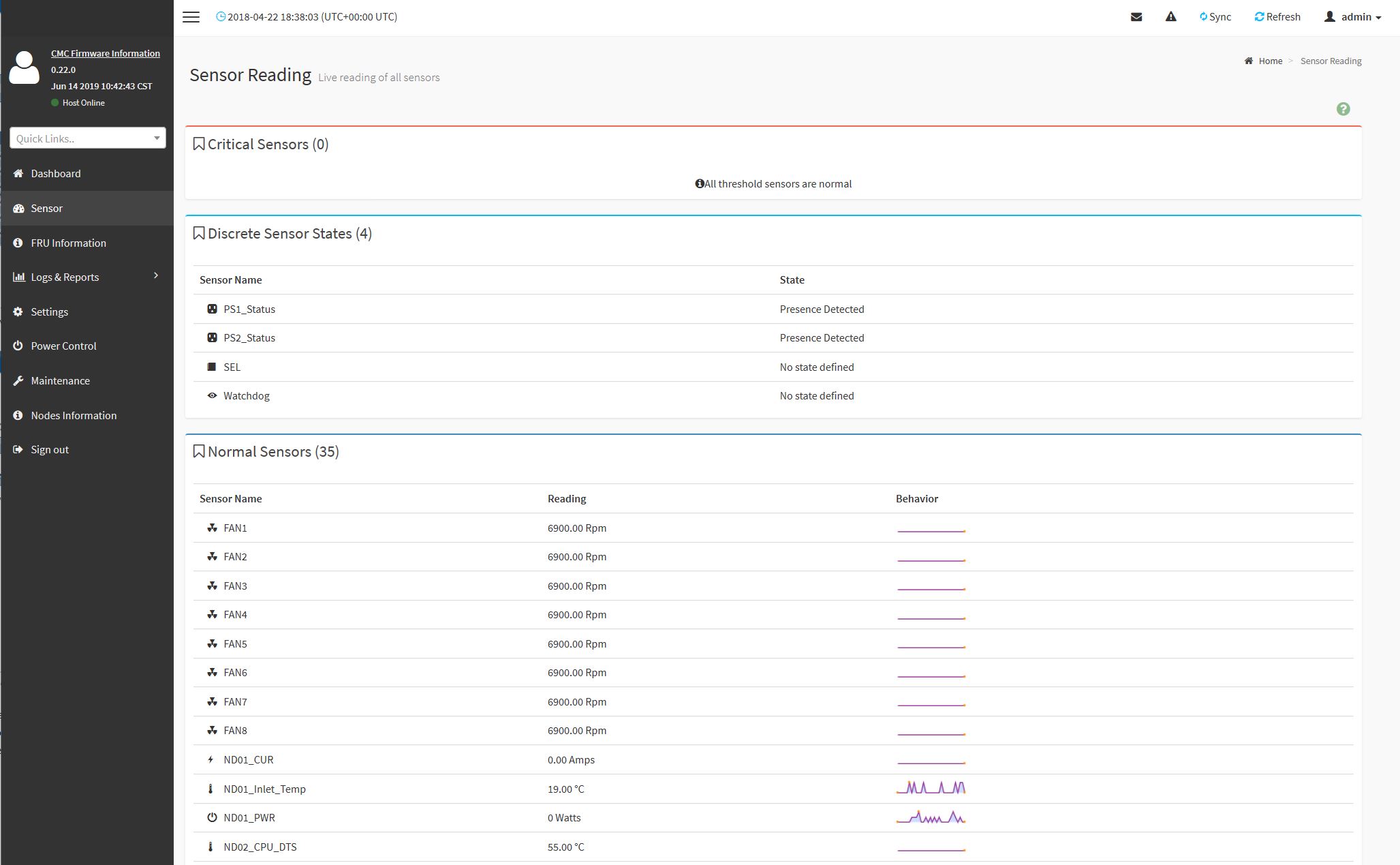

There are some aggregated sensor data points shared with the CMC for remote monitoring.

You will have to use separate firmware updates for the CMC and the nodes. That also means there is another BMC to secure and keep updated, and the functionality is a bit different between the nodes and the CMC.

Some features are not available through the CMC alone, though. If you want to access each BMC directly with fewer cables, you need the CMC and Ring Topology Kit. This essentially puts all of the individual BMCs on an in-chassis Ethernet hub, and one can then chain multiple chassis together to use significantly fewer cables and management switch ports. By doing so, one gets direct BMC access, and instead of just IPMI functionality, one also gets features like iKVM.

We like the chassis power figures, the ability to turn the entire chassis off, and the assistance for tying nodes to chassis for inventory purposes. Realistically, the “killer” feature of the CMC is using fewer cables to wire everything. Going from four management cables to one helps mitigate the 2U4N cabling challenge, and we would love to use a system with the Ring Topology Kit. Our test system did not have it.

Next, we are going to look at managing the nodes without the CMC.

I think…I’m in love.

Gigabyte are really becoming a competitive name on features and design with their server hardware. Genuinely thinking of getting a Gigabyte node into our generally Supermicro rack and making decisions!

Do those PCIe x16 slots support 4×4 bifurcation? Then one could have 10x M.2/node plus the OCP networking and the U.2 bays. This would make it a high-speed storage king.

Gigabyte has those dual PCB bifurcation cards, that would just fit perfectly in the chassis…

I just wish Gigabyte did a better job with the buggy SP-X. They’ve now got good hardware but we’ve seen the BMC die when doing iKVM several times on another EPYC model.

“We also wish that the NVMe drives were PCIe Gen4 capable.”

Well, sure, but what PCIe 4.0 enterprise NVMe card are they going to run? Lots of stuff announced but nothing I can see actually on the market until next year. A shame, really.