Gigabyte H262-Z62 Power Consumption to Baseline

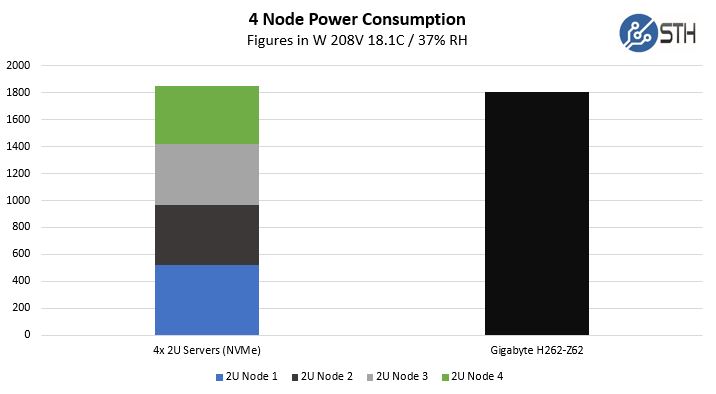

One of the sometimes overlooked benefits of the 2U4N form factor is power consumption savings. We ran our standard STH workload at 80% CPU utilization, a common figure for a well-utilized virtualization server, in the “STH Sandwich” we described on the previous page.

Here we only saw about a 1% decrease in power consumption compared with four 2U servers, once we equipped each with six NVMe SSDs. While this is too close to consider a clear win, it shows that the solution is about as power-efficient as individual servers while taking up significantly less rack space. Since we did not have 4x 1U dual AMD EPYC 7002 series servers (only 7001 series), we used 2U servers for the baseline. That means we are comparing 8U of individual servers to 2U of the Gigabyte H262-Z62.
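To put the comparison above into numbers, here is a minimal sketch that normalizes the baseline power to 1.00 and treats "about a 1% decrease" as exactly 0.99. The function names are ours for illustration, and since no absolute wattage is used, the results are ratios only.

```python
# Normalized comparison: four standalone 2U servers vs. one 2U4N chassis.
BASELINE_RACK_UNITS = 4 * 2   # four 2U servers (8U total), from the text
CHASSIS_RACK_UNITS = 2        # one Gigabyte H262-Z62
RELATIVE_POWER = 0.99         # assumption: "about a 1% decrease", baseline = 1.00


def rack_space_saved(baseline_u: int, chassis_u: int) -> float:
    """Fraction of rack space freed by moving to the denser chassis."""
    return 1 - chassis_u / baseline_u


def relative_power_per_u(relative_power: float, baseline_u: int, chassis_u: int) -> float:
    """Power drawn per rack unit, relative to the baseline's power per rack unit."""
    return (relative_power / chassis_u) / (1.0 / baseline_u)


if __name__ == "__main__":
    saved = rack_space_saved(BASELINE_RACK_UNITS, CHASSIS_RACK_UNITS)
    density = relative_power_per_u(RELATIVE_POWER, BASELINE_RACK_UNITS, CHASSIS_RACK_UNITS)
    print(f"Rack space saved: {saved:.0%}")
    print(f"Power per rack unit vs. baseline: {density:.2f}x")
```

Under these assumptions the chassis uses 75% less rack space, while drawing roughly four times the power per rack unit. That second figure is worth keeping in mind when planning rack power and cooling.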

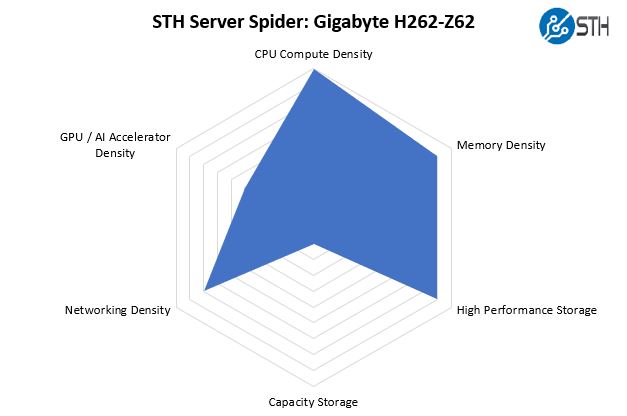

STH Server Spider: Gigabyte H262-Z62

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

Adding eight NVMe devices per node means that the Gigabyte H262-Z62 has significantly more storage performance than the H261-Z60. Combine that with eight AMD EPYC 7002 series CPUs and 64 DIMM slots in 2U and one gets an extremely dense configuration. Although it was not a supported configuration, we did test lower-TDP CPUs with NVIDIA Tesla T4 AI inferencing GPUs, which makes the accelerator story quite interesting. If you want to deploy T4s or other accelerators outside of our lab, we suggest you consult with technical support first, as that changes the power/cooling profile considerably and you want this to be a supported configuration. Also, having the OCP slot along with dual PCIe Gen4 x16 slots means one gets considerably more networking bandwidth than is possible with current Intel Xeon Scalable 2U4N solutions.

Final Words

We found the Gigabyte H262-Z62 to be plain cool. It has just about everything one could hope for in 2U. One gets up to 512 cores, 1024 threads, 32 NVMe SSDs (24x U.2, 8x M.2), 4x OCP NICs, 8x PCIe Gen4 x16 devices, and up to 8TB of memory in 2U. In this current generation, that is about as dense as it gets for everything except having massive numbers of GPUs and 3.5″ hard drives.
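The headline density figures above can be reproduced with a short sketch. The node, CPU, DIMM slot, and drive counts come from this review. The 64 cores per CPU, two threads per core, and 128GB per DIMM are our assumptions about the top-end configuration, and the per-node drive counts are simply the chassis totals divided by four.

```python
# Density arithmetic for one 2U4N chassis.
NODES = 4
SOCKETS_PER_NODE = 2    # eight CPUs across four nodes
CORES_PER_CPU = 64      # assumption: top-bin AMD EPYC 7002 series part
THREADS_PER_CORE = 2    # assumption: SMT enabled
DIMM_SLOTS = 64         # total across the chassis, from the review
DIMM_SIZE_GB = 128      # assumption: largest DIMM implied by the 8TB figure
U2_PER_NODE = 6         # 24x U.2 divided across four nodes
M2_PER_NODE = 2         # 8x M.2 divided across four nodes


def chassis_totals() -> dict:
    """Return the aggregate resources for the whole chassis."""
    cpus = NODES * SOCKETS_PER_NODE
    cores = cpus * CORES_PER_CPU
    return {
        "cpus": cpus,
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        "memory_tb": DIMM_SLOTS * DIMM_SIZE_GB // 1024,
        "nvme_ssds": NODES * (U2_PER_NODE + M2_PER_NODE),
    }


if __name__ == "__main__":
    for name, value in chassis_totals().items():
        print(f"{name}: {value}")
```

Swapping in a lower core count or smaller DIMMs shows how quickly the totals change for more typical configurations.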

Now that the Gigabyte Central Management Controller is on HTML5-based SP-X, we hope that it continues to gain features, along with each of the individual BMC implementations. There is still some room for improvement. We wish that Gigabyte had gone slightly bolder and made the ring topology standard, reducing cable counts even more. We also wish that the NVMe drives were PCIe Gen4 capable. Perhaps in a future product version, we will see more PCIe Gen4 for storage and OCP 3.0 NICs.

In today’s market, the Gigabyte H262-Z62 is just about as much hardware as you can fit into a 33-inch/840mm deep chassis. Gigabyte’s quality and pace of innovation in its solutions have accelerated in recent years. If you are looking to deploy dense AMD EPYC 7002 series clusters for hyper-converged infrastructure, or just dense compute, you should take a look at the Gigabyte H262-Z62.

Where to Buy

We have gotten a lot of questions asking where one can buy these servers. That is pretty common since some of the gear is harder to find online. ThinkMate has these servers on their configurator, so we are going to point folks there:

Let us know if you find this helpful and we can include it in future reviews as well.

I think…I’m in love.

Gigabyte are really becoming a competitive name on features and design with their server hardware. Genuinely thinking of getting a Gigabyte node into our generally Supermicro rack and making decisions!

Do those PCIe x16 slots support 4×4 bifurcation? Then one could have 10x M.2/node plus the OCP networking and the U.2 bays. This would make it a high-speed storage king.

Gigabyte has those dual PCB bifurcation cards, that would just fit perfectly in the chassis...

I just wish Gigabyte did a better job with the buggy SP-X. They’ve now got good hardware but we’ve seen the BMC die when doing iKVM several times on another EPYC model.

“We also wish that the NVMe drives were PCIe Gen4 capable.”

Well, sure, but what PCIe 4.0 enterprise NVMe card are they going to run? Lots of stuff announced but nothing I can see actually on the market until next year. A shame, really.