Gigabyte H262-Z62 Storage Performance

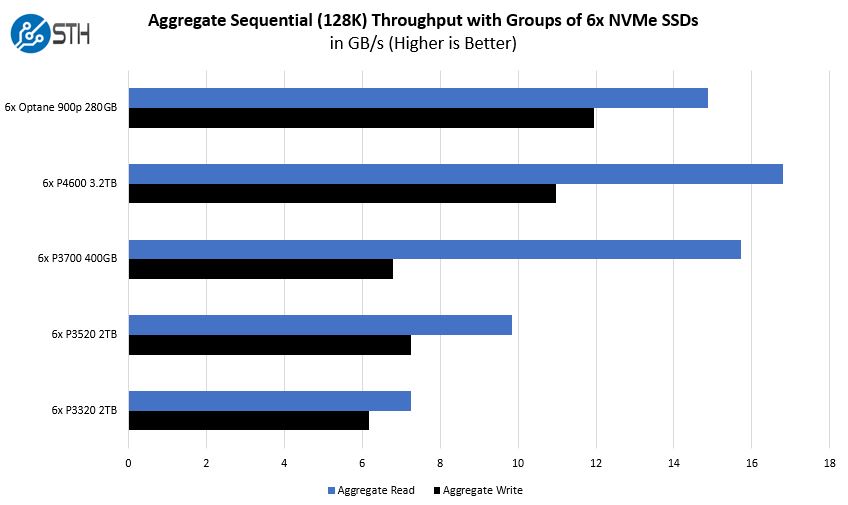

Storage in the Gigabyte H262-Z62 is differentiated by the six NVMe bays in each chassis. We used sets of NVMe SSDs we had on hand to get some relative performance numbers from the solution.

Compared to previous generations that often used SATA SSDs, one can see a massive performance improvement. Six SATA SSDs usually run, at most, around 3GB/s, but we can get much higher performance here. We even tested Intel Optane SSDs for those who want to pair top-end storage with the top-end compute provided by the AMD EPYC CPUs.
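To put the SATA versus NVMe gap in rough numbers, here is a minimal sketch of the interface ceilings for six drives. It assumes SATA III (6Gb/s with 8b/10b encoding) and PCIe Gen3 x4 per NVMe drive (8GT/s per lane with 128b/130b encoding). The function names are ours for illustration, and these are theoretical link limits, not our measured results.

```python
# Theoretical interface ceilings only; real drives land below these figures.
SATA3_LINE_RATE_GBPS = 6.0      # SATA III line rate in gigabits per second
SATA3_ENCODING = 8 / 10         # 8b/10b encoding efficiency
PCIE3_GTPS_PER_LANE = 8.0       # PCIe Gen3 transfer rate per lane
PCIE3_ENCODING = 128 / 130      # 128b/130b encoding efficiency


def sata3_ceiling_gbs(drives: int) -> float:
    """Aggregate SATA III payload ceiling in GB/s for `drives` SSDs."""
    per_drive = SATA3_LINE_RATE_GBPS * SATA3_ENCODING / 8
    return drives * per_drive


def pcie3_ceiling_gbs(drives: int, lanes_per_drive: int = 4) -> float:
    """Aggregate PCIe Gen3 ceiling in GB/s (x4 per drive is an assumption)."""
    per_lane = PCIE3_GTPS_PER_LANE * PCIE3_ENCODING / 8
    return drives * lanes_per_drive * per_lane


if __name__ == "__main__":
    print(f"6x SATA III ceiling:    {sata3_ceiling_gbs(6):.1f} GB/s")
    print(f"6x PCIe Gen3 x4 ceiling: {pcie3_ceiling_gbs(6):.1f} GB/s")
```

Under those assumptions, six SATA drives top out near 3.6GB/s at the link level, while six Gen3 x4 NVMe drives have a ceiling of roughly 23.6GB/s, which is why the bays make such a difference.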

Compute Performance and Power Baselines

One of the biggest areas where manufacturers can differentiate their 2U4N offerings is cooling capacity. As modern processors heat up, they lower clock speeds, decreasing performance. Fans spin faster to compensate, which increases power consumption and affects power supply efficiency.

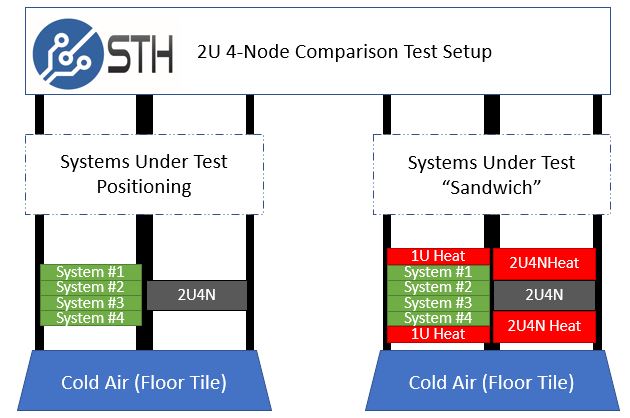

STH goes to extraordinary lengths to test 2U4N servers in a real-world scenario. You can see our methodology here: How We Test 2U 4-Node System Power Consumption.

Since this was our second AMD EPYC test, we used four 2U servers from different vendors to compare power consumption and performance. The STH “sandwich” ensures that each system is heated on the top and bottom, as it would be in a dense deployment.

Here is a view of the “STH Sandwich” we used for the test:

This type of configuration has an enormous impact on some systems. All 2U4N systems must be tested in a similar manner or else performance and power consumption results are borderline useless.

Compute Performance to Baseline

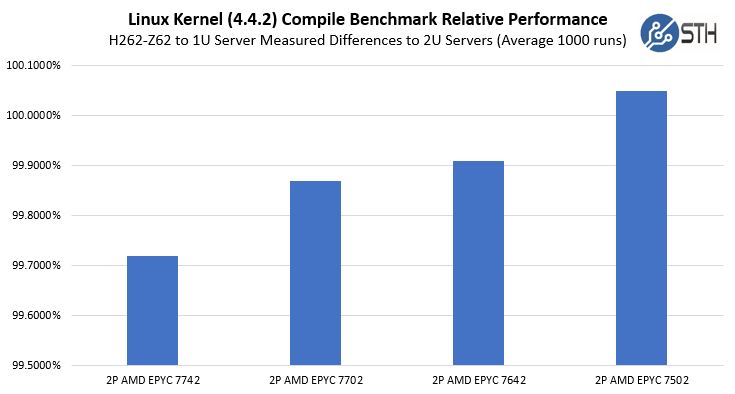

We loaded the Gigabyte H262-Z62 nodes with eight AMD EPYC CPUs. Each node also had a 25GbE OCP NIC and a 100GbE PCIe x16 NIC. We then ran one of our favorite workloads on all four nodes simultaneously for 1400 runs. We threw out the first 100 runs' worth of data and considered the 101st run to be sufficiently heat soaked. The remaining runs keep the machines warm until all systems have completed their runs. We also used the same CPUs in both sets of test systems to remove silicon differences from the comparison.

Note: The chart's Y-axis does not start at 0. If we used a 0-101% range, you would not be able to see the deltas because they are too small.
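For readers who want to reproduce this kind of comparison, the heat-soak handling and percent-of-baseline figure described above can be sketched roughly as follows. This is not our actual tooling: the function names are hypothetical, it assumes a higher score is better, and it assumes the post-warm-up runs are averaged, which is only one reasonable way to score them.

```python
from statistics import mean

WARMUP_RUNS = 100  # runs discarded before a system counts as heat soaked


def heat_soaked(results, warmup=WARMUP_RUNS):
    """Return only the results recorded after the warm-up runs."""
    if len(results) <= warmup:
        raise ValueError("need more runs than the warm-up count")
    return results[warmup:]


def percent_of_baseline(node_results, baseline_results, warmup=WARMUP_RUNS):
    """Mean heat-soaked node score as a percentage of the 2U baseline.

    Assumes higher scores are better and that averaging the post-warm-up
    runs is an acceptable summary (an assumption, not the review's method).
    """
    node = mean(heat_soaked(node_results, warmup))
    base = mean(heat_soaked(baseline_results, warmup))
    return 100.0 * node / base


if __name__ == "__main__":
    # Synthetic placeholder scores for illustration, not measured data.
    node_runs = [50.0] * 100 + [99.0] * 10
    baseline_runs = [50.0] * 100 + [100.0] * 10
    print(f"{percent_of_baseline(node_runs, baseline_runs):.1f}% of baseline")
```

Dropping the warm-up runs on both the 2U4N node and the 2U baseline keeps the comparison fair, since both sides are scored only after reaching steady-state temperatures.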

We found the Gigabyte H262-Z62 nodes are able to cool CPUs essentially on par with their 2U counterparts. There was a slight drop-off on the higher-power CPUs, but this is within our tolerances. This is a great result.

Next, we are going to look at power consumption before closing this review out.

I think…I’m in love.

Gigabyte are really becoming a competitive name on features and design with their server hardware. Genuinely thinking of getting a Gigabyte node into our generally Supermicro rack and making decisions!

Do those PCIe x16 slots support 4×4 bifurcation? Then one could have 10x M.2/node plus the OCP networking and the U.2 bays. This would make it a high-speed storage king.

Gigabyte has those dual PCB bifurcation cards, that would just fit perfectly in the chassis…

I just wish Gigabyte did a better job with the buggy SP-X. They’ve now got good hardware but we’ve seen the BMC die when doing iKVM several times on another EPYC model.

“We also wish that the NVMe drives were PCIe Gen4 capable.”

Well, sure, but what PCIe 4.0 enterprise NVMe card are they going to run? Lots of stuff announced but nothing I can see actually on the market until next year. A shame, really.