Today, we are going to talk about server power consumption. Specifically, there are a few easy steps that can cut power by a fairly significant amount. As we move to 2022-era servers and beyond, the TDP and power consumption of chips are going up as chips and systems get denser. Plus, many companies have sustainability goals, so saving power means both saving money and hitting those goals sooner. Given this backdrop, there are a few relatively easy ways to lower power consumption in the data center without having to be a hyperscale architect with complete control over the environment. We are going to cover a few of those today.

We want to quickly note that Intel is sponsoring this piece and provided the Xeon Platinum CPUs. For part of this, we are also using the workloads we ran in our Stop Leaving Performance on the Table with AWS EC2 M6i Instances piece. Inspur provided the Inspur NF5280M6 we reviewed along with the NF5180M6 that we will review in March. We already had the HPE power supplies in the lab. This type of testing requires a lot of equipment, so we needed help here. As always, this piece is editorially independent, and no company reviews these pieces before they go live.

1U v. 2U Servers

One area that we have looked at before is the conventional wisdom of 1U v. 2U server efficiency. When we looked at this in 2018, in Testing Conventional Wisdom in 1U v 2U Power Consumption, we used a 1U Dell EMC PowerEdge R640 and a 2U R740xd. The test platforms were not identical, so we actually saw lower power consumption on the R640. The point was that configurations matter, so getting identical platforms was going to be key.

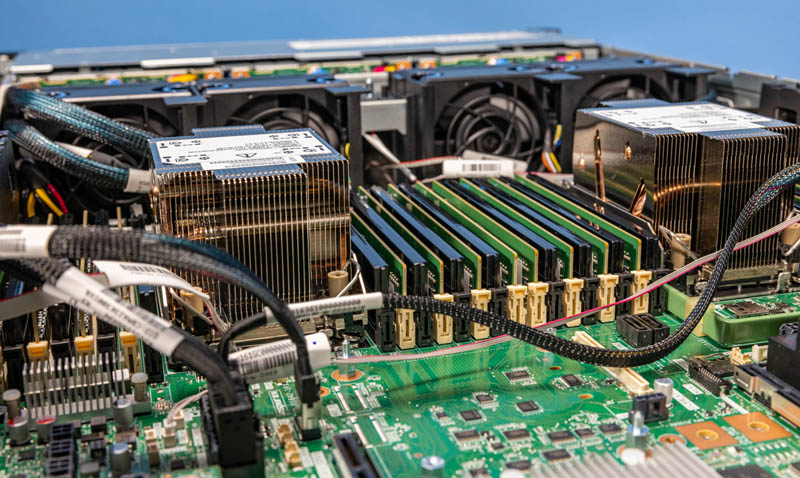

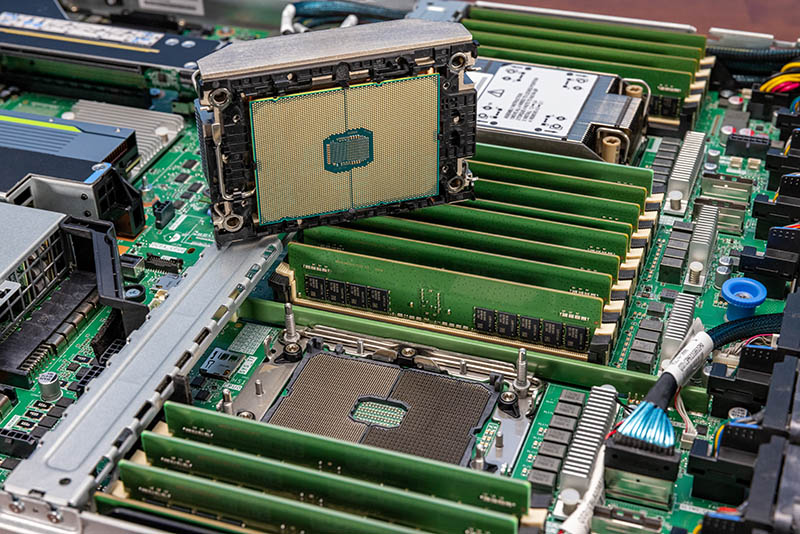

This time, we are re-doing the test using the 2U Inspur NF5280M6 along with the 1U NF5180M6. We equipped both with identical processors, Intel Xeon Platinum 8362 CPUs. These are 3rd Generation Intel Xeon Scalable "Ice Lake" processors with 32 cores each. We asked Intel for these SKUs because they fit nicely into current VMware and Microsoft licensing. The benefit of the two Inspur systems is that the motherboards are virtually identical. Each has a few different headers populated, but we de-populated them to get more reliable figures.

We then ran our acceleration workloads, including AVX-512 HPC workloads, TensorFlow, and our crypto web acceleration WordPress workload, and noted the differences between the two platforms. We also found a gem: the Inspur platforms have a fan power consumption sensor, so we could track power consumption at the PDU, at the power supplies, and at the fans. (There are CPU and memory sensors too, but those readings were similar between the two platforms.)
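With readings at the PDU, the power supplies, and the fans, a few simple ratios separate PSU conversion losses from cooling overhead. The sketch below is a minimal Python illustration under stated assumptions: the `PowerReadings` and `breakdown` names are hypothetical, the sample numbers are made up rather than taken from our runs, and it assumes the PSU sensor reports DC output (some BMCs report PSU input instead).

```python
from dataclasses import dataclass


@dataclass
class PowerReadings:
    """One snapshot of power readings, in watts.

    Assumption: psu_output_w is the DC output reported by the PSUs; some
    platforms report PSU input instead, so check what your BMC exposes.
    """
    pdu_w: float         # measured at the PDU outlet (AC input to the server)
    psu_output_w: float  # DC output reported by the power supplies
    fan_w: float         # fan power sensor reading (DC side)


def breakdown(r: PowerReadings) -> dict:
    """Split wall power into PSU conversion loss, fan share, and other DC load."""
    return {
        "psu_efficiency": r.psu_output_w / r.pdu_w,
        "conversion_loss_w": r.pdu_w - r.psu_output_w,
        "fan_share_of_wall": r.fan_w / r.pdu_w,
        "other_dc_w": r.psu_output_w - r.fan_w,
    }


# Hypothetical readings for illustration only, not measured data.
sample = PowerReadings(pdu_w=500.0, psu_output_w=470.0, fan_w=25.0)
print(breakdown(sample))
```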

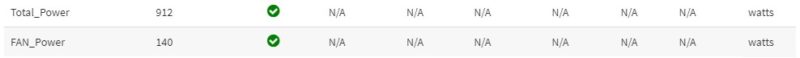

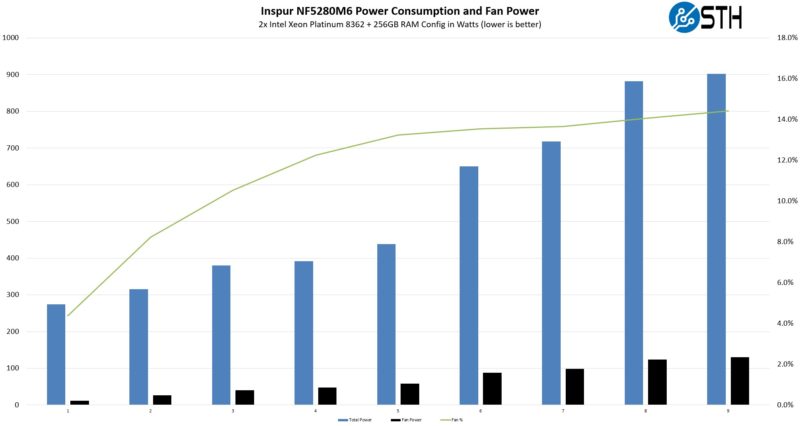

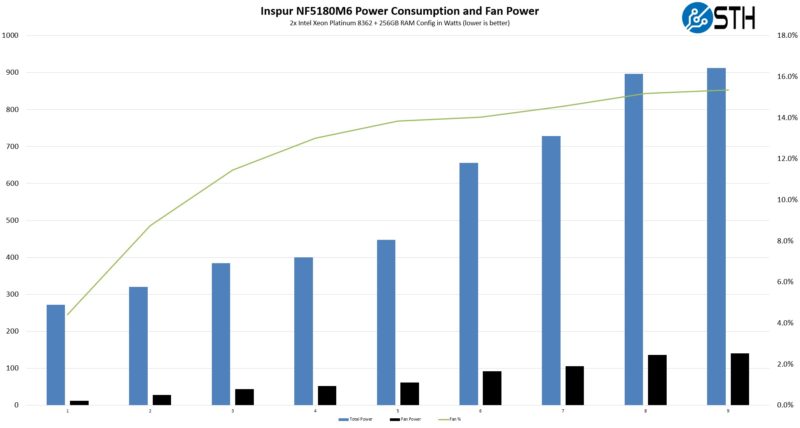

The workloads are similar to the ones we ran when showing the Ice Lake built-in accelerators, plus workload 1, which is installing TensorFlow. We will get into the workloads more in the next section, but here is what they looked like in the NF5280M6 we reviewed:

Here is the same set in the NF5180M6, which we will formally review in March. Performance was nearly identical between the systems, as we would expect, with no significant variance across our sets of runs. Power consumption, however, was different.

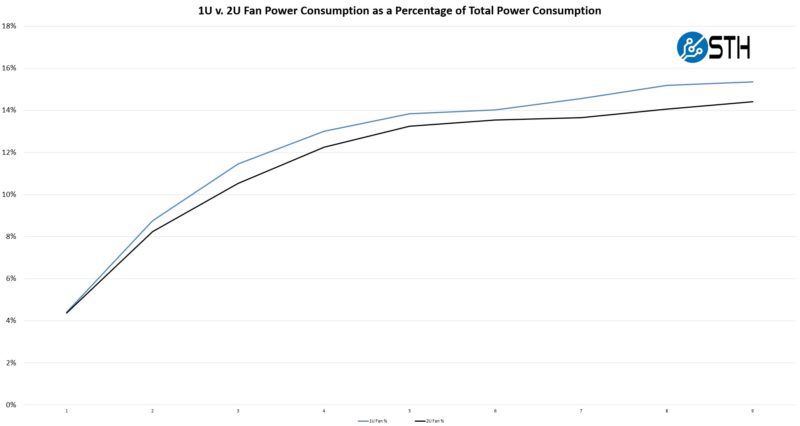

The important bit here, and perhaps the most interesting, is the difference in fan power consumption between the two servers. The 1U server is in blue and the 2U is in black.

What we found this time around was that the 2U platform used anywhere from 0.1-1.1% less total system power than the 1U platform, solely due to the fans. We swapped components between the systems so that we were using the same CPUs, RAM, SSDs, NICs, and power supplies. The motherboards were the same models save for some unpopulated headers. Because of how Inspur, and many other vendors, build servers these days, we were effectively able to isolate the change to just the cooling delta.
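To make the comparison concrete, the 1U v. 2U delta can be expressed as a percentage of the 1U platform's wall power and then checked against the fan sensor delta. This is a hypothetical Python sketch; the function names and sample values are illustrative, not our data. Because the fan sensors read DC-side power while the totals come from the PDU, it optionally divides the fan delta by an assumed PSU efficiency.

```python
def percent_savings(baseline_w: float, candidate_w: float) -> float:
    """Percent less wall power the candidate draws than the baseline."""
    return 100.0 * (baseline_w - candidate_w) / baseline_w


def fan_attribution(total_1u_w: float, total_2u_w: float,
                    fan_1u_w: float, fan_2u_w: float,
                    psu_efficiency: float = 1.0) -> tuple[float, float]:
    """Return (2U savings in %, fraction of the wall-power delta explained by fans).

    Fan readings are DC-side, so the fan delta is divided by an assumed PSU
    efficiency to estimate its size at the wall (1.0 ignores PSU losses).
    """
    total_delta_w = total_1u_w - total_2u_w
    fan_delta_at_wall_w = (fan_1u_w - fan_2u_w) / psu_efficiency
    share = fan_delta_at_wall_w / total_delta_w if total_delta_w else float("nan")
    return percent_savings(total_1u_w, total_2u_w), share


# Hypothetical paired readings in watts for a single workload run.
savings_pct, fan_share = fan_attribution(total_1u_w=600.0, total_2u_w=594.0,
                                         fan_1u_w=30.0, fan_2u_w=24.0)
print(f"2U saves {savings_pct:.2f}%, fans explain {fan_share:.0%} of the delta")
```

A fan share near 1.0 is what isolating the cooling delta looks like; a share well below 1.0 would point to some other difference between the platforms.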

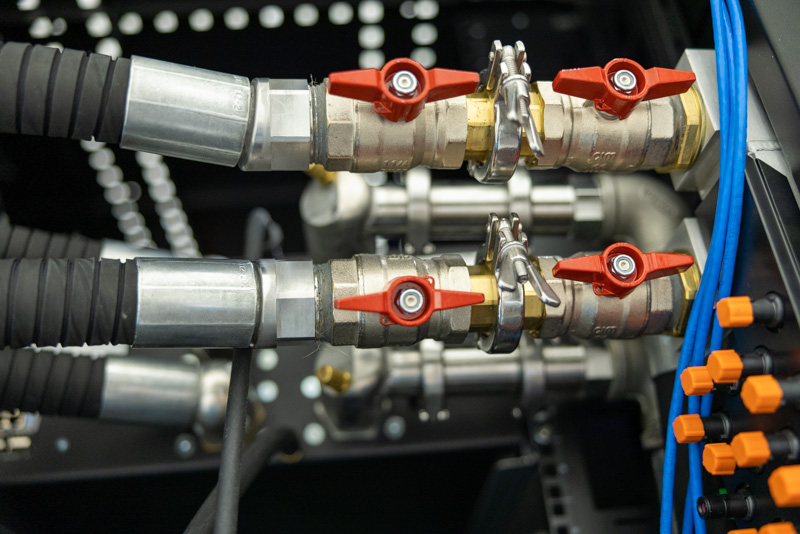

The key takeaway is that we did see a notable power consumption delta, especially at higher power consumption. This will vary by server model and configuration, but it makes a lot of sense. If you are looking for efficiency, moving to lower-density 2U systems may be worthwhile. This will become more important later in 2022 and beyond as chip TDPs rise. Many organizations will be able to move to less dense 2U chassis to take advantage of these efficiencies, since their rack-level power budgets may not be able to handle top-bin 1U configurations. This is also one of the reasons we have been putting a much larger focus on liquid cooling at STH. On the 8x GPU systems we test, the percentage of power dedicated to cooling continues to rise, to the point that we are seeing 20% of system power going to cooling.
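The rack-budget point can be illustrated with a small calculation: when a rack runs out of power before it runs out of space, 1U density does not buy extra servers. This is a hypothetical Python sketch; the 15 kW budget, 42U of space, and per-server draws are assumed values, with the 2U figure set about 1% lower to echo the fan savings above.

```python
import math


def servers_per_rack(rack_budget_w: float, server_w: float,
                     rack_units: int, server_u: int) -> tuple[int, int]:
    """Return (servers that fit, rack units they occupy).

    The count is limited by whichever runs out first: power budget or space.
    """
    by_power = math.floor(rack_budget_w / server_w)
    by_space = rack_units // server_u
    count = min(by_power, by_space)
    return count, count * server_u


# Hypothetical inputs: 15 kW per rack, 42U usable, 1.2 kW per 1U server,
# and the same configuration in 2U drawing about 1% less.
print("1U:", servers_per_rack(15_000, 1_200, 42, 1))
print("2U:", servers_per_rack(15_000, 1_188, 42, 2))
```

With these assumed numbers, both racks hold 12 servers; the 2U build simply fills space that the power budget would have left empty.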

Next, let us take a look at power supplies and why the power side is important as well.

Efficient Power Supplies

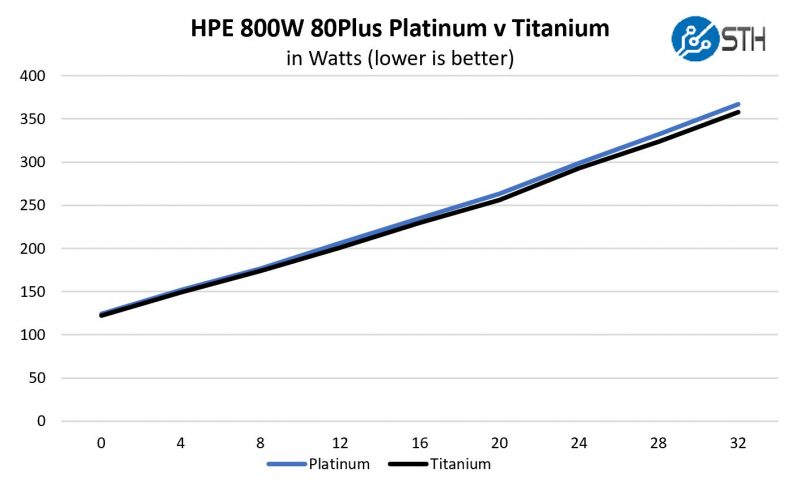

Back in 2019, we ran a piece that simply added VMs to see if we could get better power efficiency using 80Plus Platinum versus Titanium PSUs. We tested HPE ProLiant 800W 80Plus Platinum and Titanium PSUs and basically just added the same VMs to the server to generate a small incremental load on each.

At idle, there was no appreciable difference between the two. As we approached half of the power supplies' rated output, we saw roughly a 1.5-2% delta in overall power consumption at the PDU.
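The size of this delta follows from how efficiency sets wall power. For a fixed DC load $P_{\text{out}}$, wall power is $P_{\text{in}} = P_{\text{out}}/\eta$, so replacing a supply with efficiency $\eta_P$ by one with efficiency $\eta_T$ at the same load point gives the relative saving below. The efficiency values plugged in are assumed for illustration, not measured on the HPE units.

```latex
\frac{P_{\text{in},P} - P_{\text{in},T}}{P_{\text{in},P}}
  = \frac{P_{\text{out}}/\eta_P - P_{\text{out}}/\eta_T}{P_{\text{out}}/\eta_P}
  = 1 - \frac{\eta_P}{\eta_T},
\qquad
\eta_P = 0.945,\ \eta_T = 0.96
\;\Rightarrow\; 1 - \frac{0.945}{0.96} = 1 - 0.984375 \approx 1.6\%
```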

This is not huge, but it is easy. Another implication is that one should size power supplies to the expected workloads. Using 2kW power supplies for a server that draws 100-500W does not put one in the efficiency bands of many modern power supplies. In the two test servers we are using here, we saw about 0.3% lower power consumption using the 1.3kW power supplies versus the 1.6kW Inspur power supplies. These are both Platinum-level PSUs, so the difference comes from where the load falls relative to each unit's rated capacity.
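Why PSU sizing matters comes down to where the load lands on the efficiency curve. The following Python sketch interpolates an assumed efficiency curve (the `CURVE` points are hypothetical, shaped like a typical Platinum-class unit that peaks near 50% load, and are not taken from the Inspur or HPE datasheets) and compares wall power for a 500W DC load on two 1.3kW versus two 1.6kW supplies. It also assumes the two supplies share the load equally, which depends on the platform's redundancy mode.

```python
import bisect

# Hypothetical efficiency curve: (fraction of rated output, efficiency).
# Assumed shape for a Platinum-class PSU; replace with datasheet values.
CURVE = [(0.10, 0.88), (0.20, 0.92), (0.50, 0.94), (1.00, 0.91)]


def efficiency(load_fraction: float) -> float:
    """Linearly interpolate CURVE, clamping outside its load range."""
    xs = [x for x, _ in CURVE]
    if load_fraction <= xs[0]:
        return CURVE[0][1]
    if load_fraction >= xs[-1]:
        return CURVE[-1][1]
    i = bisect.bisect_left(xs, load_fraction)
    (x0, y0), (x1, y1) = CURVE[i - 1], CURVE[i]
    return y0 + (y1 - y0) * (load_fraction - x0) / (x1 - x0)


def wall_power(dc_load_w: float, psu_rating_w: float, num_psus: int = 2) -> float:
    """Wall power when num_psus identical supplies share dc_load_w equally."""
    per_psu_fraction = dc_load_w / (num_psus * psu_rating_w)
    return dc_load_w / efficiency(per_psu_fraction)


dc_load_w = 500.0  # hypothetical server DC load
for rating_w in (1300.0, 1600.0):
    print(f"2x {rating_w:.0f}W: {wall_power(dc_load_w, rating_w):.1f} W at the wall")
```

The gap this prints depends entirely on the assumed curve and is not a prediction of the roughly 0.3% measured above; substituting the vendor's published curve is the useful part.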

As a quick note here, one can also move from 120V to 208V or higher to gain power efficiency. We tested this in 2015 and saw a roughly 2% power efficiency advantage by moving to 208V, and moving up to 220-240V usually adds another 0.25% or so.
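Part of the high-voltage gain is simple physics: for the same power, current falls as $I = P/V$, and resistive loss in the wiring falls as $I^2R$. The Python sketch below (function names are hypothetical, unity power factor assumed) shows only that wiring effect; the measured gain at the PDU also reflects server PSUs typically being somewhat more efficient on high-line input.

```python
def feed_current_a(power_w: float, volts: float) -> float:
    """Current for a given load at a given voltage, assuming unity power factor."""
    return power_w / volts


def relative_wiring_loss(volts: float, reference_volts: float = 120.0) -> float:
    """I^2 R loss at `volts` relative to `reference_volts`, for the same power
    and the same conductor resistance. Since I = P / V, loss scales as 1 / V^2."""
    return (reference_volts / volts) ** 2


load_w = 500.0  # hypothetical server load
for volts in (120.0, 208.0, 240.0):
    print(f"{volts:.0f}V: {feed_current_a(load_w, volts):.2f} A, "
          f"wiring loss {relative_wiring_loss(volts):.2f}x of 120V")
```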

The key here is that moving to higher-voltage racks and higher-efficiency power supplies can yield up to 3-4% in power consumption savings.
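These savings compound rather than add, since each one applies to the power left after the other. Treating the two as independent (an assumption) and using the figures above, roughly 2% from the voltage change ($s_V$) and 1.5-2% from the PSUs ($s_{\text{PSU}}$):

```latex
s_{\text{total}} = 1 - (1 - s_V)(1 - s_{\text{PSU}}),
\qquad
1 - (0.98)(0.985) = 0.0347,
\qquad
1 - (0.98)(0.98) = 0.0396
```

That lands at roughly 3.5-4%, in line with the 3-4% figure.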

Still, let us get away from the small items and look to the future: how accelerators change the game, and why some current comparisons are actually suboptimal.

I had no idea fans used that much power. I’d say this is the best power article I’ve seen in a long time.

Wow, that’s amazing. That’s the whole “if it completes faster, then it’s more efficient” argument, but it’s cool. Do those accelerators work for SPECint and SPECfp?

I’ll be sending this out.

Nice analysis

It’s surprising that the market hasn’t moved to a combined UPS+PSU per-rack format that basically connects individual servers with just a 12V connector. Efficiency-wise, that would be a far bigger delta than the bump in 80Plus rating on a single PSU, as well as creating more space in a chassis and reducing cooling needs in a single unit.

You need a pretty damn big amount of copper: 4 AWG for an average server, and if your rack has 10+ nodes…

This is physics; I’m 99% sure it has already been calculated.

On the amount of copper, I doubt this has a big impact on a full rack in terms of cost compared to 10+ PSUs (if anything, it’ll be cheaper and reduce maintenance as well). As you’re at low voltage, you can just use bus bars with the PSU+UPS in the middle. To my knowledge, AWS Outposts already have this design.

We saw about the same as you. We did the change-out to 80+ Ti PSUs and 2U, and we got around 3% lower monthly power for the new racks.

I know that’s not your exact number but it’s in your range so I’d say what you found is bingo.

Going to 12 VDC would definitely introduce a lot of extra cost (significantly more metal), lost space due to all the thicker cables/bus bars, higher current circuit breakers, etc. and to top it all off you still get a drop in efficiency that comes any time you lower the voltage regardless of AC or DC. Then you still need a power supply anyway to convert the 12 V into the other voltages used inside each machine, and what’s the efficiency of that? This is why power to our homes is delivered at 240 V (even in the US) and power distribution begins at 11 kV and goes up from there. The higher the voltage the more efficient, and conversely the lower the voltage the more loss, for both AC and DC.

But DC is more efficient at the same voltage as AC, and this is why there is a move towards powering equipment off 400 VDC. You can find a lot of material online about data centers running off 400 VDC, and new IEC connectors have been designed that can cope with the electrical arc you get when disconnecting DC power under load. For example, normal switch-mode power supplies found in computers and servers will happily run off at least 110 to 250 VDC without modification, but if you disconnect the power cable under load, the arc that forms will start melting the metal contacts in the power socket and cable. I believe the usual IEC power plugs can only handle around 10 disconnects at these DC voltages before the contacts are damaged to the point they no longer work. Hence the need to design DC-compatible replacements that can last much longer.

The advantage of using mains voltage DC also means the cable size remains the same, so you don’t need to allocate more space to busbars or larger sized cables. You do need to use DC-rated switchgear, which does add some expense. But your UPS suddenly just becomes a large number of batteries, no inverter needed, so I suspect there is a point at which a 400 V DC data center becomes more economical than an AC one.