The AIC SB407-TU is a really interesting 60-bay storage offering. Even using 18TB hard drives (the only model we had 60 of on hand), we were able to hit 1.08PB of raw capacity. In this review, we will see what makes this server different in the market.
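The 1.08PB figure is simply drive count times per-drive capacity. Here is a small sketch of that arithmetic. The PiB conversion is our own addition, showing why an operating system may report a smaller number. It ignores RAID/parity and filesystem overhead, so treat it as a raw upper bound, not usable space.

```python
# Raw-capacity arithmetic for the 60x 18TB configuration described above.
DRIVE_COUNT = 60
DRIVE_TB = 18  # vendor (decimal) terabytes: 1 TB = 10**12 bytes


def raw_capacity_pb(drives: int, tb_per_drive: int) -> float:
    """Raw capacity in decimal petabytes (before RAID/parity or filesystem overhead)."""
    return drives * tb_per_drive / 1000


def raw_capacity_pib(drives: int, tb_per_drive: int) -> float:
    """The same raw capacity in binary pebibytes (2**50 bytes), as many OS tools report it."""
    return drives * tb_per_drive * 10**12 / 2**50


if __name__ == "__main__":
    print(f"{raw_capacity_pb(DRIVE_COUNT, DRIVE_TB):.2f} PB")
    print(f"{raw_capacity_pib(DRIVE_COUNT, DRIVE_TB):.3f} PiB")
```

Run as-is, this prints 1.08 PB and roughly 0.96 PiB. That difference is purely decimal-versus-binary units, not lost capacity.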

As with many of our recent reviews, we also have a video version for those who want to listen along or see the drive trays being removed:

Video makes it a bit easier to show some of the moving parts than the web format does, so feel free to check it out. We always suggest opening the video in its own browser window, tab, or app for a better viewing experience.

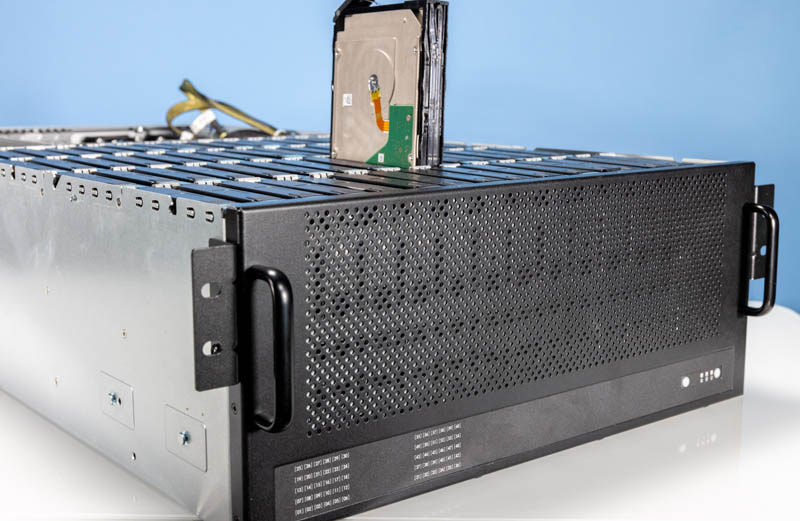

AIC SB407-TU External Hardware Overview

The front of the system is fairly simple. Unlike many servers we review, this is a top-loading storage server. That means most of the front is designed for airflow, with over 3U of the 4U dedicated to cooling the hard drives. The entire chassis is only 35″ deep, or 853mm. Because the depth is kept reasonable, it will fit in most racks. One thing to keep in mind, though, is weight, since 60 hard drives add a lot of mass to a system.
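To put the weight point in perspective, here is a back-of-the-envelope sketch of the drive payload alone. The per-drive mass is an assumption, not a figure from this review: roughly 0.67 kg is typical for a 3.5″ 18TB helium drive, but check your drive's datasheet. Trays, chassis, motherboard, and PSUs all come on top of this.

```python
# Rough estimate of the mass the hard drives alone add to a 60-bay chassis.
# ASSUMPTION: ~0.67 kg per 3.5" 18TB drive; substitute your drive's datasheet value.
ASSUMED_DRIVE_KG = 0.67
LB_PER_KG = 2.20462


def drive_payload_kg(drive_count: int, kg_per_drive: float = ASSUMED_DRIVE_KG) -> float:
    """Total mass of the installed drives, excluding trays, chassis, and PSUs."""
    return drive_count * kg_per_drive


if __name__ == "__main__":
    payload = drive_payload_kg(60)
    print(f"~{payload:.1f} kg (~{payload * LB_PER_KG:.0f} lb) of drives alone")
```

Even under this assumption, the drives by themselves come to around 40 kg. That is why it helps to plan rack placement and rail support before populating all 60 bays.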

On one side, we have 60 bays' worth of status lights, so one can see the status of every drive without opening the chassis. On the other, we have the standard power buttons and LEDs.

We will get to the drives in a bit, but the rear of the server is quite interesting, with three main areas.

Most of the rear is dedicated to a ~3U storage server. That means we get an OCP NIC 3.0 rear slot, a COM port, two USB ports, and a management port. The VGA connection is fun since it sits in the rightmost I/O expansion slot. One can also see that there is plenty of room to add other cards here.

To the right rear, we have an 8x 2.5″ array. These are NVMe SSD bays designed to house higher-speed read and write cache drives as well as logging devices.

Power in our unit is supplied by 4x 80 PLUS Platinum 800W PSUs, set up as 2+2 redundant. There are also 1.2kW and 1.6kW options in 1+1 redundant configurations.
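The "N+M" notation is easy to misread, so here is a simplified sketch comparing the three PSU options. It assumes an idealized model in which the usable redundant budget is just N times the per-PSU rating. In practice, vendors' load-sharing rules and possible derating at lower input voltages can change the real number, so treat this as an approximation only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PsuConfig:
    """Idealized N+M power-supply configuration (simplified model, not vendor data)."""

    watts_per_psu: int
    active: int     # N: PSUs needed to carry the load
    redundant: int  # M: spare PSUs that may fail without dropping the load

    @property
    def installed(self) -> int:
        return self.active + self.redundant

    @property
    def redundant_budget_w(self) -> int:
        """Power still available after losing `redundant` PSUs."""
        return self.watts_per_psu * self.active


CONFIGS = {
    "4x 800W, 2+2": PsuConfig(800, 2, 2),
    "2x 1.2kW, 1+1": PsuConfig(1200, 1, 1),
    "2x 1.6kW, 1+1": PsuConfig(1600, 1, 1),
}

if __name__ == "__main__":
    for name, cfg in CONFIGS.items():
        print(f"{name}: {cfg.installed} PSUs, {cfg.redundant_budget_w} W redundant budget")
```

Under this model, the 2+2 800W setup provides 1600W while tolerating two PSU failures. The 1+1 options provide 1200W or 1600W while tolerating one.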

Let us get to the big features by looking inside the system.

Very cool, but if we’re going to talk about “cost optimizations” and being “much less expensive”, it would be nice to have a ballpark as to what the chassis cost. I didn’t see that anywhere (unless I missed it).

In …we were able to hit 1.08TB of raw capacity… you mean 1.08PB.

In …On the other hand, CPU0 has a Gen4 x16 slot and two x8 slots… you mean CPU1

domih – Yup, they didn’t even make it 1 paragraph without a typo. ;)

Fixed. 12 flights in 22 days. I am pretty beat at this point.

It’s fun to see the Backblaze legacy still thriving. Bulk storage will always be needed. I’m more curious when chip density will eventually overtake spinning rust in TB/$. It seems every time the gap gets close, the mad scientists create a better spinner. Who knows, maybe we’ll get rewritable crystal storage before that happens.

There has been a tendency to take liberties with the “unregulated 3rd dimension” of 19″ rackable storage gear over the past few years.

I used many Sun “Thumpers” (X4500, 4U 48-drive, 2x CPUs) in a previous life…A bit of a challenge to rack (per Sun, place said beast in the bottom of a rack).

With the more recent Western Digital UltraStar102 (4U JBOD, 102 drives, 42″ depth iirc and 260 lbs loaded with drives) things got a bit dicey/scary with that much weight & depth hanging out of the rack when swapping a drive from the top of the chassis.

I know SSDs don’t yet have the capacity of hard disks, but would converting this thing to SAS SSDs make the energy consumption and total system weight figures a little more tenable? Also, could one plug a DPU or GPU into any of those PCIe x16 slots? Y’know, to have more compute options, CUDA, etc.?

Also, why don’t they do one of these up for 2.5″ drives? I’m assuming that either that’s been done before or nobody’s actually thought to do that, which in that case, why not? Smaller drives = more drives = more storage = more better, right?

Maybe more smaller drives != more storage

Where’s the required airflow? I worked with a similar unit once; seeing our drive access pattern with a thermal camera was fun, but scary…

Stephen if you mean 2.5″ HDDs, I think they only topped out at 4TB. I’m not positive though.

2.5″ HDDs here top out at 5TB…

They are SMR (shingled magnetic recording) disks; the tracks overlap a bit. The controllers do a good job of mitigating write amplification to some degree.

For my home lab, 2.5″ HDD was probably a mistake; better performance, reliability, density with 3.5″ disks. On the other hand, some applications prefer more small drives, I suppose for read bandwidth. Latency on my 5400 RPM 2.5″ drives isn’t going to beat 3.5″ enterprise drives.