AIC SB407-TU Power Consumption

Regarding base power consumption, we saw a maximum of a bit over 500W with the Intel Xeon Gold 6330s and over 600W with the Platinum 8368 CPUs. That is a fairly large base load.

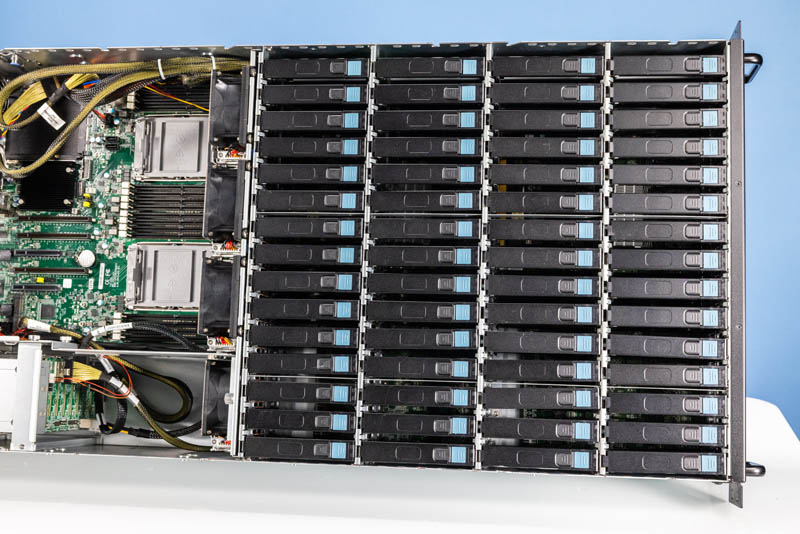

With 60x HDDs, the critical aspect is being able to power and cool the hard drives. At 5W each, the drives add 300W, plus another ~10-15% of cooling overhead. The 2+2 redundant 800W power supplies were sufficient for what we deployed and, frankly, are more than sufficient for most loads.
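The drive arithmetic above can be turned into a rough power budget. This is a minimal sketch under our own assumptions, not a measurement: the 500W and 600W base figures are treated as excluding the drives, every drive draws a steady 5W, the cooling overhead applies to drive power only, and a 2+2 configuration means two 800W supplies (1600W) carry the load while two are spares. Drive spin-up is not modeled.

```python
# Rough power-budget sketch for a 60-bay chassis using the figures quoted above.
# Assumptions (not measured): base figures exclude drive power, each drive draws
# a steady 5 W, and cooling overhead is applied to drive power only.

DRIVE_COUNT = 60
WATTS_PER_DRIVE = 5.0
PSU_WATTS = 800
ACTIVE_PSUS = 2  # 2+2 redundancy: two supplies carry the load, two are spares


def drive_power(count: int, watts_each: float, cooling_overhead: float) -> float:
    """Drive power plus the extra fan power needed to cool the drives."""
    return count * watts_each * (1.0 + cooling_overhead)


def budget_table(base_watts: float) -> list[tuple[float, float, float]]:
    """Return (overhead, total watts, share of usable PSU capacity) rows."""
    usable = PSU_WATTS * ACTIVE_PSUS
    rows = []
    for overhead in (0.10, 0.15):
        total = base_watts + drive_power(DRIVE_COUNT, WATTS_PER_DRIVE, overhead)
        rows.append((overhead, total, total / usable))
    return rows


if __name__ == "__main__":
    for label, base in (("Gold 6330", 500.0), ("Platinum 8368", 600.0)):
        for overhead, total, share in budget_table(base):
            print(f"{label}: +{overhead:.0%} cooling -> {total:.0f} W "
                  f"({share:.0%} of {PSU_WATTS * ACTIVE_PSUS} W usable)")
```

Under these assumptions, the heaviest case (Platinum 8368 with 15% cooling overhead) lands around 945W, well under the 1600W that two active supplies can deliver.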

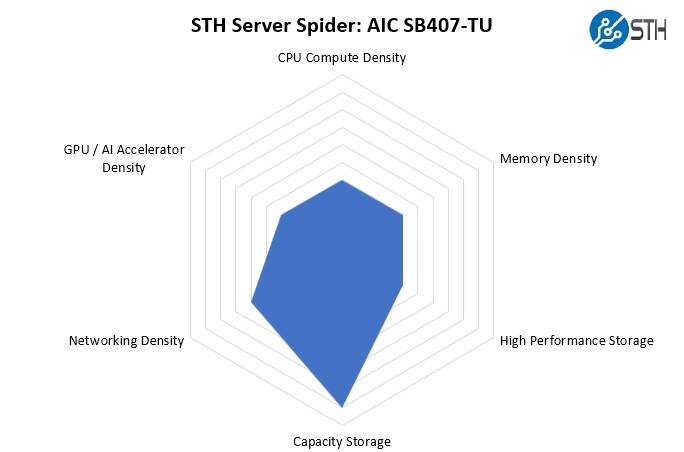

STH Server Spider AIC SB407-TU

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system's aptitude lies. Our goal is to give a quick visual depiction of the types of parameters a server is targeted at.

This system is designed to be a high-density 4U storage platform for 60x hard drives. In a 35″-deep chassis, that is a lot of storage. AIC does a good job of balancing this system so that one can add features like high-speed networking, GPUs, and NVMe storage to round out configurations. That mirrors the applications these systems are deployed for today.

Final Words

Top-loading storage servers are very cool. They provide a lot of storage density in a form factor that is fairly easy to work with.

Overall, this system was easy to work on. It felt very much like a mid-range high-density storage server. It was very apparent that this is a well-thought-out design beyond what lower-end systems offer. It is also apparent that this is designed with some cost optimizations in mind. That makes it much less expensive than some other systems we have reviewed, which is an important trait for low-cost scale-out storage. We would have liked to have seen Broadcom SAS 9500-8i or -16i controller(s) built into this platform to make the setup and supply chain easier.

In the end, this was a fun, albeit very large, top-loading storage server, and it is always enjoyable to build a 1PB+ solution from barebones in less than 90 minutes.
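For a sense of where the 1PB+ figure comes from, here is a minimal raw-capacity sketch. It assumes 60 identical 18TB drives, which is the size implied by 1.08PB across 60 bays; the drive model is not restated in this section. It also shows the same number in binary units, since operating systems often report PiB rather than the decimal PB used on drive labels.

```python
# Raw-capacity sketch for a fully populated 60-bay chassis.
# Assumption: 60 identical 18 TB drives, in decimal units as vendors quote them.

TB = 10**12   # decimal terabyte, used on drive labels
PIB = 2**50   # binary pebibyte, often what operating systems report


def raw_capacity_bytes(bays: int, drive_tb: int) -> int:
    """Raw (pre-RAID, pre-filesystem) capacity in bytes."""
    return bays * drive_tb * TB


if __name__ == "__main__":
    raw = raw_capacity_bytes(60, 18)
    print(f"{raw / 10**15:.2f} PB decimal")  # 1.08 PB
    print(f"{raw / PIB:.3f} PiB binary")
```

Usable capacity will be lower once RAID or erasure coding and filesystem overhead are applied.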

Very cool, but if we're going to talk about "cost optimizations" and being "much less expensive", it would be nice to have a ballpark for what the chassis costs. I didn't see that anywhere (unless I missed it).

In "…we were able to hit 1.08TB of raw capacity…", you mean 1.08PB.

In "…On the other hand, CPU0 has a Gen4 x16 slot and two x8 slots…", you mean CPU1.

domih – Yup, they didn’t even make it 1 paragraph without a typo. ;)

Fixed. 12 flights in 22 days. I am pretty beat at this point.

It's fun to see the Backblaze legacy still thriving. Bulk storage will always be needed. I'm more curious about when flash density will eventually overtake spinning rust in TB/$. It seems every time the gap gets close, the mad scientists create a better spinner. Who knows, maybe we'll get rewritable crystal storage before that happens.

There has been a tendency to take liberties with the “unregulated 3rd dimension” of 19″ rackable storage gear over the past few years.

I used many Sun "Thumpers" (X4500, 4U 48-drive, 2x CPUs) in a previous life… A bit of a challenge to rack (per Sun, place said beast in the bottom of a rack).

With the more recent Western Digital Ultrastar Data102 (4U JBOD, 102 drives, 42″ depth IIRC, and 260 lbs loaded with drives), things got a bit dicey/scary with that much weight and depth hanging out of the rack when swapping a drive from the top of the chassis.

I know SSDs don’t yet have the capacity of hard disks, but would converting this thing to SAS SSDs make the energy consumption and total system weight figures a little more tenable? Also, could one plug a DPU or GPU into any of those PCIe x16 slots? Y’know, to have more compute options, CUDA, etc.?

Also, why don't they do one of these for 2.5″ drives? I'm assuming that either that's been done before or nobody's actually thought to do it, in which case, why not? Smaller drives = more drives = more storage = more better, right?

Maybe more smaller drives != more storage

Where's the required airflow? I worked with a similar unit once; seeing our drive access pattern with a thermal camera was fun, but scary…

Stephen, if you mean 2.5″ HDDs, I think they only topped out at 4TB. I'm not positive, though.

2.5″ HDDs here top out at 5TB…

They are SMR (shingled magnetic recording) disks; the tracks overlap a bit. The controllers mitigate the resulting write amplification to some degree.

For my home lab, 2.5″ HDDs were probably a mistake; 3.5″ disks offer better performance, reliability, and density. On the other hand, some applications prefer more, smaller drives, I suppose for read bandwidth. Latency on my 5400 RPM 2.5″ drives isn't going to beat 3.5″ enterprise drives.
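To put the latency point in numbers, a minimal sketch of average rotational latency, which is the time for half a platter revolution. The 7200 RPM comparison point is an assumption (a common spindle speed for 3.5″ enterprise drives; no speed is stated above), and seek time and caching are ignored, so this is only part of the latency picture.

```python
# Average rotational latency: on average the platter must turn half a revolution
# before the target sector passes under the head. Seek time is not included.


def avg_rotational_latency_ms(rpm: int) -> float:
    seconds_per_rev = 60.0 / rpm
    return 0.5 * seconds_per_rev * 1000.0


if __name__ == "__main__":
    for rpm in (5400, 7200):  # 7200 RPM assumed for the 3.5" enterprise drives
        print(f"{rpm} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

At 5400 RPM this works out to roughly 5.6 ms versus roughly 4.2 ms at 7200 RPM, before any seek time is added.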