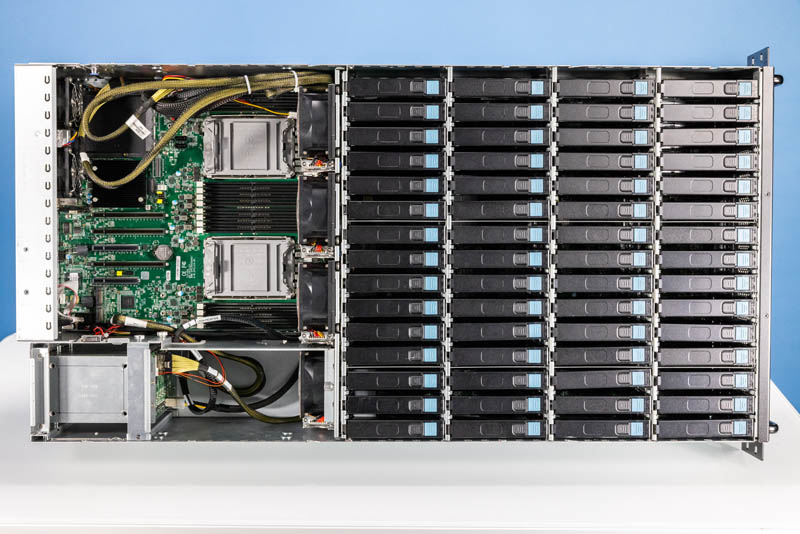

AIC SB407-TU Internal Hardware Overview

Inside the system, we have 60x 3.5″ storage bays on the front and then the main storage server on the rear of the system.

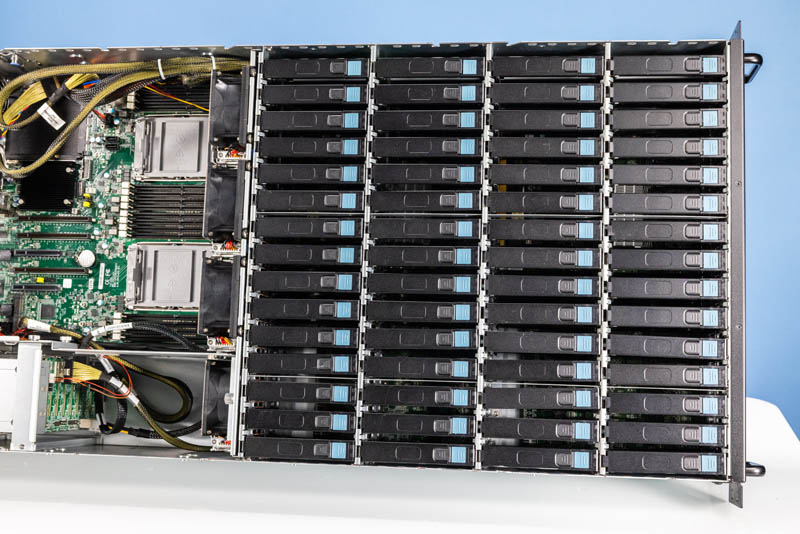

The 60x bays are logically split into two segments. There are the front 30 drives and then the rear 30 drives. Those rear 30 drives are really in the middle of the system.

Each drive is placed in a tool-less hard drive carrier. This carrier is then slotted into the system.

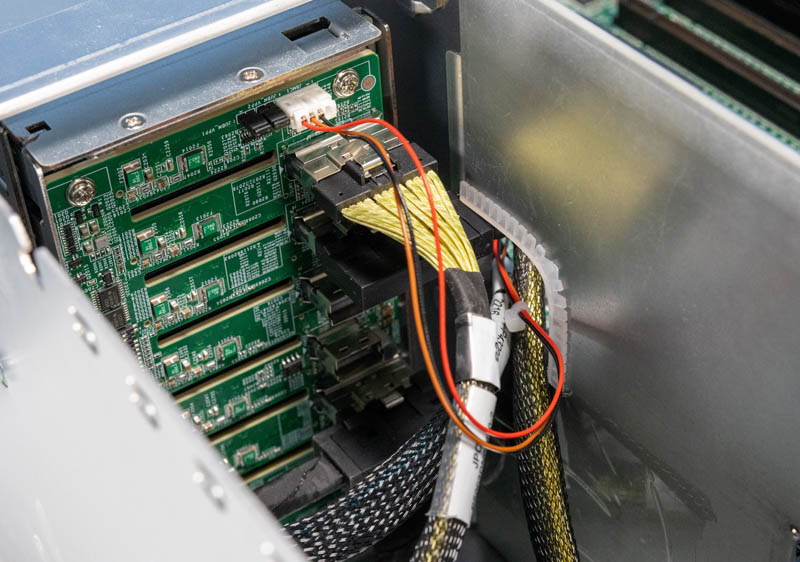

The front and rear sets of 30 drives are significant because they also match the logical layout of the system. Removing the first 30 drive trays, we can see the SAS expander board with 30x SAS data and power connections.

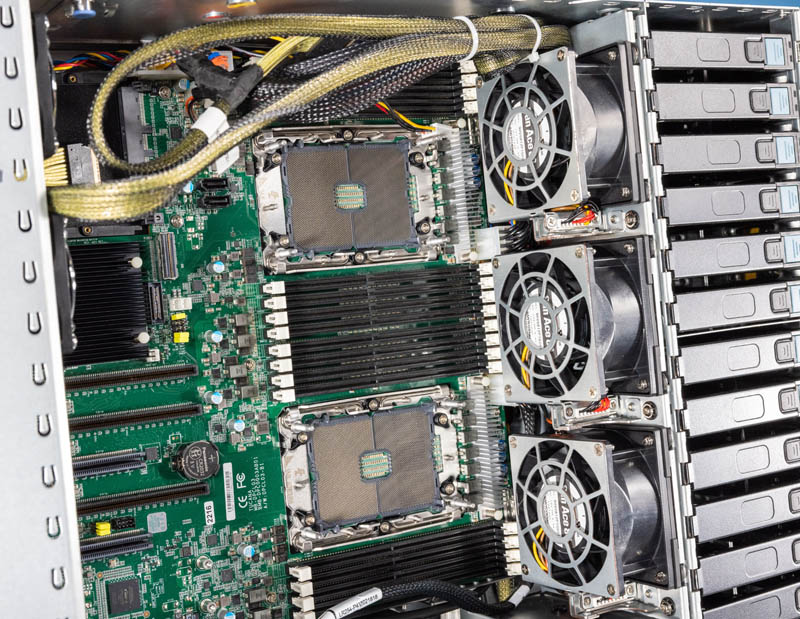

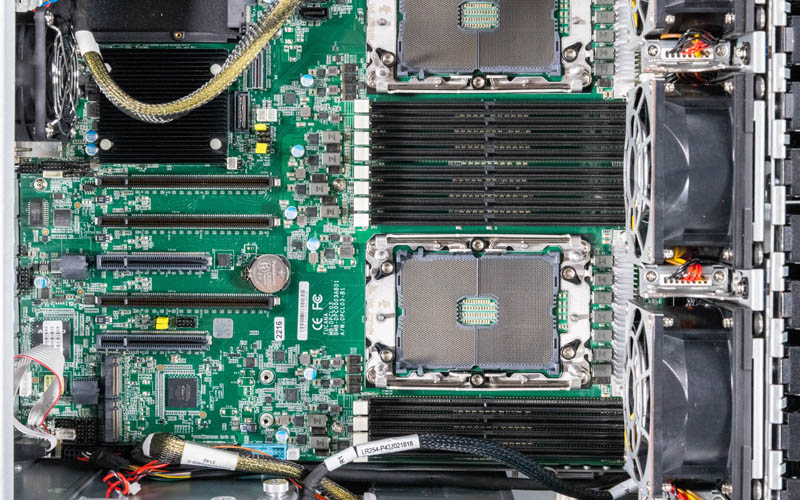

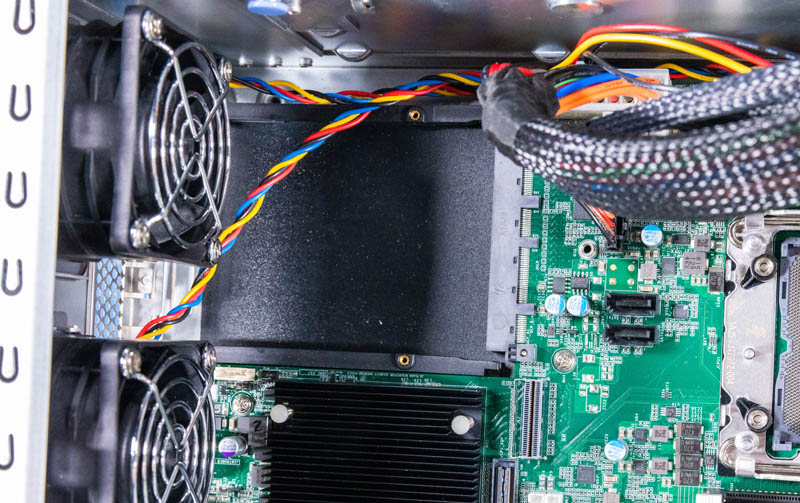

Cooling the system, we have a fan partition. This fan partition is mostly closed to the front drives, except where the fans are, to maximize the effectiveness of the fans. The fans then blow directly over the dual 3rd Generation Intel Xeon Scalable (Ice Lake) CPUs.

Another fan in this partition is walled off from the rest so that it also cools the NVMe SSDs on the rear.

Our system came with the rear dual fan option as well.

Each 3rd Gen Intel Xeon Scalable CPU has eight DDR4-3200 DIMM slots. One can also utilize Intel Optane persistent memory (PMem) modules in the server if desired.
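As a rough sketch of what eight DDR4-3200 slots per socket can mean for memory bandwidth, the Python below computes the theoretical peak. It assumes one DIMM per channel across the eight memory channels of each Ice Lake CPU, with every DIMM running at 3200 MT/s. The function and variable names are ours for illustration, and real-world throughput will be lower than this ceiling.

```python
def ddr4_peak_gbs(mt_per_s: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) for a DDR4 setup.

    Each DDR4 channel has a 64-bit (8-byte) data bus, so peak bandwidth is
    transfers per second * bytes per transfer * number of channels.
    """
    return mt_per_s * 1_000_000 * bus_bytes * channels / 1e9


# Assumption: 8 populated channels per socket at DDR4-3200, two sockets.
per_socket_gbs = ddr4_peak_gbs(3200, channels=8)
system_gbs = 2 * per_socket_gbs

print(f"Per socket: {per_socket_gbs:.1f} GB/s")
print(f"Dual socket: {system_gbs:.1f} GB/s")
```

Under those assumptions, each socket tops out at 204.8 GB/s, or 409.6 GB/s across both CPUs.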

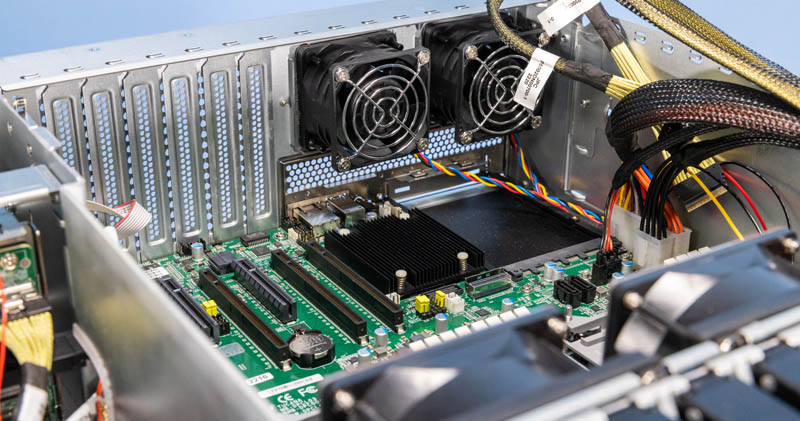

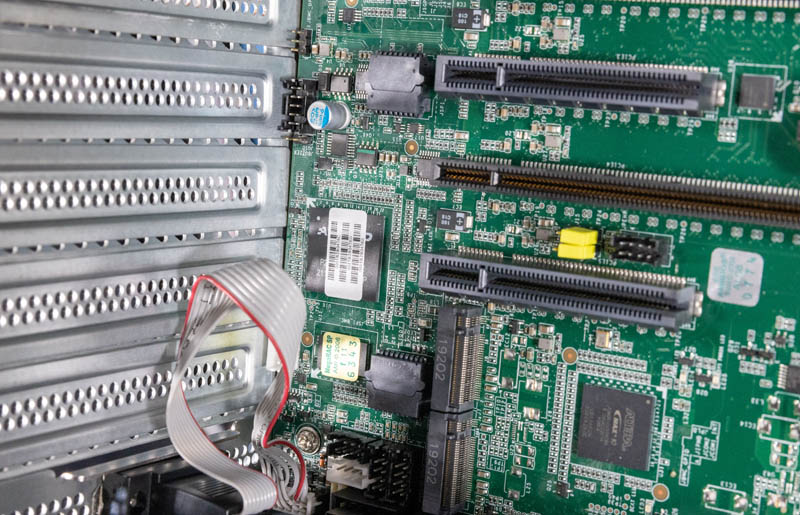

In terms of PCIe slots, we have 3x PCIe Gen4 x16 and 2x PCIe Gen4 x8 slots.
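To put that slot count in perspective, here is a minimal sketch that totals the lanes and estimates the theoretical per-direction bandwidth. It uses the standard PCIe Gen4 figures of 16 GT/s per lane with 128b/130b encoding. The names are ours, and protocol overhead means real transfers will land below these numbers.

```python
GEN4_GT_PER_S = 16.0           # raw signaling rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie_gen4_gbs(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a Gen4 link."""
    return GEN4_GT_PER_S * ENCODING_EFFICIENCY * lanes / 8


# Slot mix from the text: 3x Gen4 x16 and 2x Gen4 x8.
slots = {16: 3, 8: 2}  # link width -> number of slots
total_lanes = sum(width * count for width, count in slots.items())

print(f"Total slot lanes: {total_lanes}")
print(f"x16 slot: {pcie_gen4_gbs(16):.1f} GB/s per direction")
print(f"x8 slot:  {pcie_gen4_gbs(8):.1f} GB/s per direction")
print(f"All slots: {pcie_gen4_gbs(total_lanes):.1f} GB/s per direction")
```

That works out to 64 lanes across the five slots, with roughly 31.5 GB/s per direction for each x16 slot.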

At the top rear, we have the OCP NIC 3.0 slot.

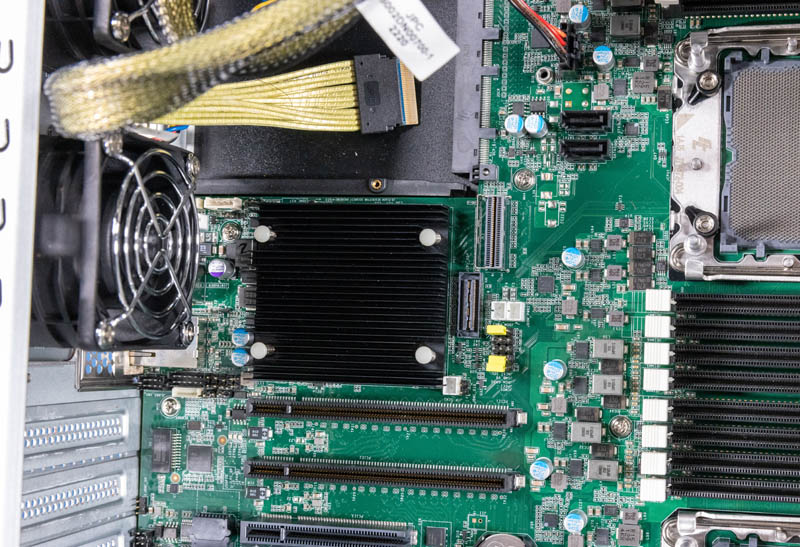

Below that, we have the Lewisburg PCH.

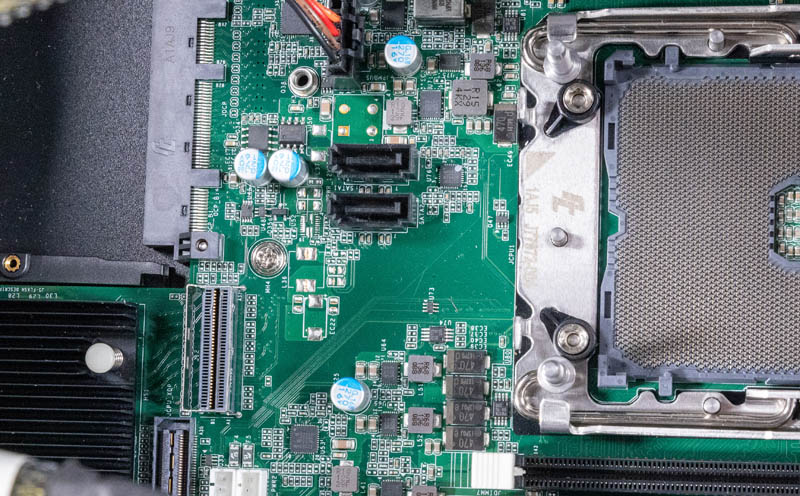

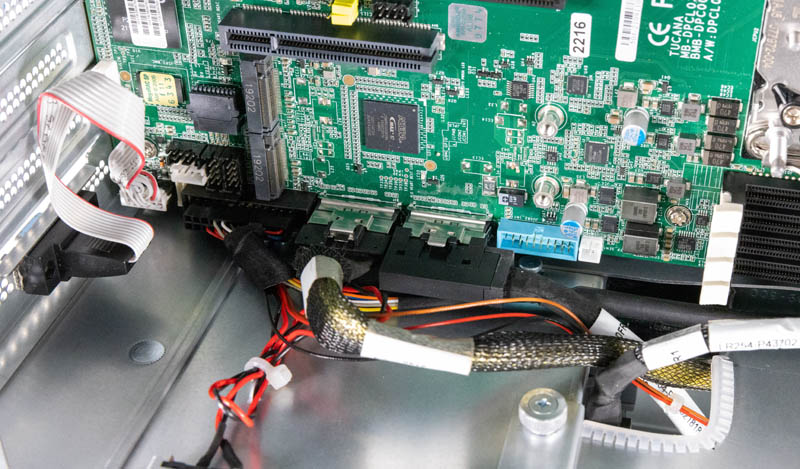

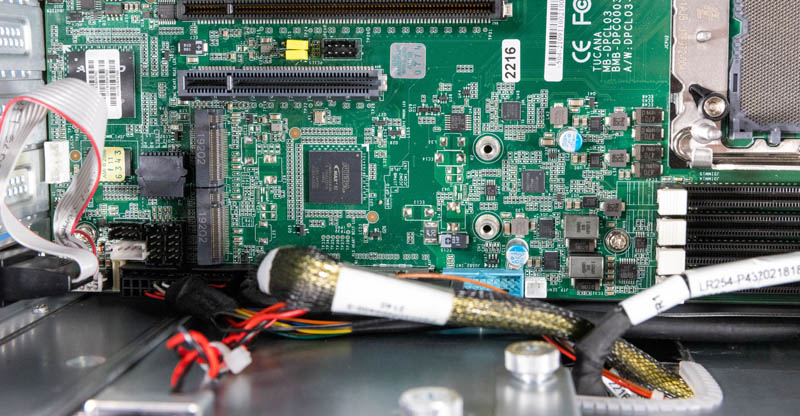

The front drive bay connections all terminate into two SFF-8654 SlimSAS 8i connections. One connection goes to the front and one to the rear SAS expander.
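A quick back-of-the-envelope check shows how much headroom one 8-lane uplink has for the 30 drives behind each expander. This is only a sketch under stated assumptions: we assume 12Gb/s SAS-3 lanes with 8b/10b encoding (the link speed is not stated above) and a hypothetical 250 MB/s sustained sequential rate per HDD. Adjust both for your own drives and HBA.

```python
SAS3_GBIT_PER_LANE = 12.0     # assumption: SAS-3 link rate per lane
ENCODING_EFFICIENCY = 8 / 10  # SAS-3 uses 8b/10b encoding
LANES_PER_UPLINK = 8          # one SlimSAS 8i connection per expander
DRIVES_PER_EXPANDER = 30
HDD_SEQ_GBS = 0.25            # hypothetical sustained rate per HDD, GB/s


def uplink_gbs(lanes: int) -> float:
    """Usable one-direction bandwidth in GB/s for a SAS-3 wide port."""
    return SAS3_GBIT_PER_LANE * ENCODING_EFFICIENCY * lanes / 8


uplink = uplink_gbs(LANES_PER_UPLINK)
demand = DRIVES_PER_EXPANDER * HDD_SEQ_GBS
utilization = demand / uplink

print(f"Uplink per expander: {uplink:.1f} GB/s")
print(f"30 HDDs streaming:   {demand:.1f} GB/s")
print(f"Uplink utilization:  {utilization:.0%}")
```

With these assumed figures, 30 drives streaming at once would use about 78% of one uplink, so the expander link would not be the bottleneck for sequential HDD workloads.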

Something we would have liked to have seen is built-in SAS capabilities. That tends to cost a bit less, and Broadcom SAS HBAs and RAID controllers have also had spates of challenging supply. It took longer for us to get Broadcom SAS 9500-16i HBAs than it did to get the 60x HDDs for this review.

On the bottom of the system, we have cabling for some of the 2.5″ NVMe bays, although one will likely want to add other cards in the PCIe slots for connecting more drives.

Here is a look at the SlimSAS cabling to the rear drive bays.

There are also two internal M.2 slots. M.2 is very popular in this class of system for boot drives. Intel has M.2 Optane drives, which make great logging devices for systems like this, but with the 2.5″ bays available for that role, it makes sense to use the M.2 slots for boot devices.

The system also has a standard ASPEED BMC for management duties.

With dual socket storage servers, topology is important, so that is what we will look at next.

Very cool, but if we’re going to talk about “cost optimizations” and being “much less expensive”, it would be nice to have a ballpark as to what the chassis cost. I didn’t see that anywhere (unless I missed it).

In …we were able to hit 1.08TB of raw capacity… you mean 1.08PB.

In …On the other hand, CPU0 has a Gen4 x16 slot and two x8 slots… you mean CPU1

domih – Yup, they didn’t even make it 1 paragraph without a typo. ;)

Fixed. 12 flights in 22 days. I am pretty beat at this point.

It’s fun to see the backblaze legacy still thriving. Bulk storage will always be needed. I’m more curious when chip density will eventually overtake spinning rust in TB/$. It seems every time the gap gets close, the mad scientists create a better spinner. Who knows, maybe we’ll get rewritable crystal storage before that happens.

There has been a tendency to take liberties with the “unregulated 3rd dimension” of 19″ rackable storage gear over the past few years.

I used many Sun “Thumpers” (X4500, 4U 48-drive, 2x CPUs) in a previous life…A bit of a challenge to rack (per Sun, place said beast in the bottom of a rack).

With the more recent Western Digital UltraStar102 (4U JBOD, 102 drives, 42″ depth iirc and 260 lbs loaded with drives) things got a bit dicey/scary with that much weight & depth hanging out of the rack when swapping a drive from the top of the chassis.

I know SSDs don’t yet have the capacity of hard disks, but would converting this thing to SAS SSDs make the energy consumption and total system weight figures a little more tenable? Also, could one plug a DPU or GPU into any of those PCIe x16 slots? Y’know, to have more compute options, CUDA, etc.?

Also, why don’t they do one of these up for 2.5″ drives? I’m assuming that either that’s been done before or nobody’s actually thought to do that, which in that case, why not? Smaller drives = more drives = more storage = more better, right?

Maybe more smaller drives != more storage

Where’s the required airflow? I worked with a similar unit once, seeing our drive access pattern with a thermal camera was fun, but scary…

Stephen if you mean 2.5″ HDDs, I think they only topped out at 4TB. I’m not positive though.

2.5″ HDDs here top out at 5TB…

they are SMR (shingled magnetic recording) disks, the tracks overlap a bit. The controllers do a good job of mitigating write amplification to some degree.

For my home lab, 2.5″ HDD was probably a mistake; better performance, reliability, density with 3.5″ disks. On the other hand, some applications prefer more small drives, I suppose for read bandwidth. Latency on my 5400 RPM 2.5″ drives isn’t going to beat 3.5″ enterprise drives.