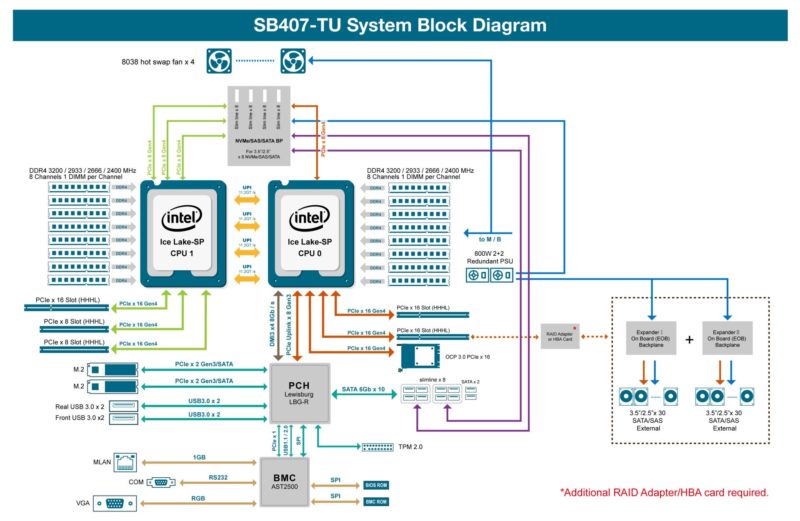

AIC SB407-TU Topology

With all of the slots and connectors, it is important to see how the dual-socket server is connected. Here is that view:

CPU0 controls the OCP NIC 3.0 slot and two PCIe Gen4 x16 slots along with the Lewisburg PCH. CPU1, on the other hand, has one Gen4 x16 slot and two x8 slots, and it also handles six of the eight rear SSD bays.

To be transparent, one thing we would have liked to see is two x16 slots attached to each CPU. One of the challenges with systems of this class is balancing I/O so that traffic does not have to cross the UPI links between sockets. Two x16 links per CPU would allow for 200Gbps of networking from each CPU plus enough I/O for higher-end RAID controllers, HBAs, or other devices. One of the biggest performance challenges we had was simply aligning everything to a single NUMA node, so that each NUMA node had 32 cores, 256GB of memory, a 100GbE NIC, and 30 drives attached.
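A quick back-of-the-envelope check shows why x16 matters for 200Gbps networking. This minimal sketch assumes PCIe 4.0's 16 GT/s per lane and 128b/130b line encoding, and it ignores packet and protocol overhead, so real-world throughput will be somewhat lower than these figures. The function names are illustrative only.

```python
# Rough per-direction PCIe Gen4 link bandwidth.
# Assumption: only 128b/130b line encoding is accounted for; TLP/DLLP
# protocol overhead is ignored, so real throughput is lower.
GEN4_GT_PER_LANE = 16.0          # PCIe 4.0 signalling rate, GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding (PCIe 3.0 and later)


def gen4_link_gbps(lanes: int) -> float:
    """Approximate usable line rate in Gbps for a Gen4 link of `lanes` width."""
    return GEN4_GT_PER_LANE * ENCODING_EFFICIENCY * lanes


def fits(nic_gbps: float, lanes: int) -> bool:
    """True if a NIC of `nic_gbps` could, in principle, run at line rate on this link."""
    return gen4_link_gbps(lanes) >= nic_gbps


if __name__ == "__main__":
    for lanes in (8, 16):
        print(f"Gen4 x{lanes}: {gen4_link_gbps(lanes):.1f} Gbps")
    print("100GbE on x8: ", fits(100, 8))
    print("200GbE on x8: ", fits(200, 8))
    print("200GbE on x16:", fits(200, 16))
```

By this estimate, a Gen4 x8 link tops out around 126Gbps, which is enough for a 100GbE NIC but not a 200Gbps one, while an x16 link offers roughly 252Gbps.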

AIC SB407-TU Performance

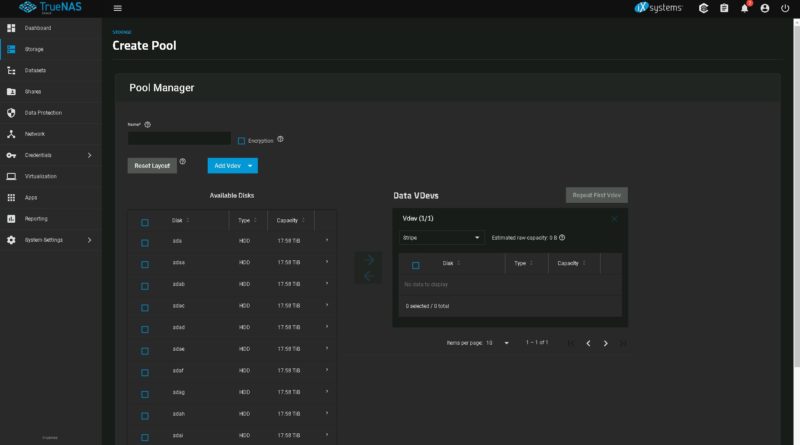

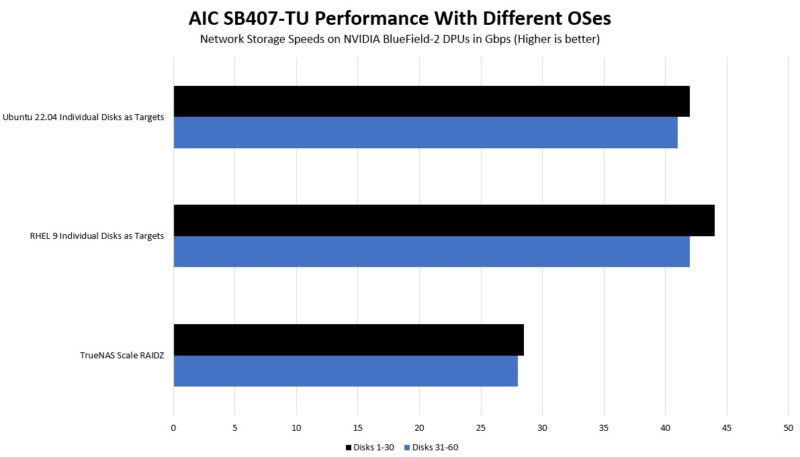

In terms of performance, we initially tried TrueNAS Scale. It is always fun to see ZFS scale to this many drives.

After 15 minutes or so, we had a configuration that could hit 25GbE speeds from either bank of 30 drives.
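To put the 25GbE figure in perspective, here is a rough per-drive calculation. It assumes traffic is spread evenly across the 30 drives in a bank and ignores ZFS parity, compression, and network protocol overhead, so treat it as an order-of-magnitude estimate rather than a measurement. The helper name is hypothetical.

```python
def per_drive_mb_s(link_gbps: float, drives: int) -> float:
    """Average MB/s each drive must supply to fill `link_gbps`.

    Assumption: load is split evenly and parity/protocol overhead is ignored.
    Uses decimal units (1 Gbps = 1e9 bit/s, 1 MB = 1e6 bytes).
    """
    bytes_per_s = link_gbps * 1e9 / 8   # Gbps -> bytes per second
    return bytes_per_s / drives / 1e6   # even split per drive, in MB/s


if __name__ == "__main__":
    print(f"25GbE over 30 drives: {per_drive_mb_s(25, 30):.1f} MB/s per drive")
    print(f"40Gbps over 30 drives: {per_drive_mb_s(40, 30):.1f} MB/s per drive")
```

That works out to roughly 104MB/s per drive at 25GbE, and about 167MB/s per drive at the 40Gbps figure reported below for the NUMA-balanced configuration.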

We did not have enough of these chassis to build a larger-scale Ceph cluster. Instead, once we balanced the configuration on each NUMA node, we were able to push over 40Gbps of traffic from either NUMA node. Applications will vary, but this NUMA alignment was by far the most important factor in getting performance out of this system.
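On Linux, one way to check this kind of alignment is to read the `numa_node` attribute that sysfs exposes for PCI devices. This is a minimal, hypothetical sketch, assuming a Linux host with sysfs mounted at `/sys`; the article does not say how the alignment was checked, and `numa_node_of`, `group_by_node`, and the interface and disk names are illustrative placeholders. Because disks behind an HBA typically do not expose `numa_node` themselves, the sketch walks up the resolved device path to the nearest PCI device that does.

```python
from collections import defaultdict
from pathlib import Path


def numa_node_of(device_link: Path) -> int:
    """Return the NUMA node of the nearest ancestor exposing `numa_node`.

    Climbs the resolved sysfs path (e.g. disk -> SCSI host -> HBA PCI device).
    Returns -1, the kernel's "no affinity" value, if the link is missing or
    no ancestor has the attribute.
    """
    if not device_link.exists():
        return -1
    p = device_link.resolve()
    while p != p.parent:  # stop at the filesystem root
        attr = p / "numa_node"
        if attr.is_file():
            return int(attr.read_text().strip())
        p = p.parent
    return -1


def group_by_node(nics, disks, sysfs="/sys"):
    """Map NUMA node -> list of NIC and block-device names attached to it."""
    root = Path(sysfs)
    groups = defaultdict(list)
    for nic in nics:
        groups[numa_node_of(root / "class" / "net" / nic / "device")].append(nic)
    for disk in disks:
        groups[numa_node_of(root / "block" / disk / "device")].append(disk)
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical names: substitute the interfaces and disks on your system.
    print(group_by_node(["eth0", "eth1"], ["sda", "sdb"]))
```

Devices reported under `-1` either have no NUMA affinity reported by the kernel or could not be found. A NIC and a bank of drives that land on different nodes are the cross-socket cases worth rebalancing.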

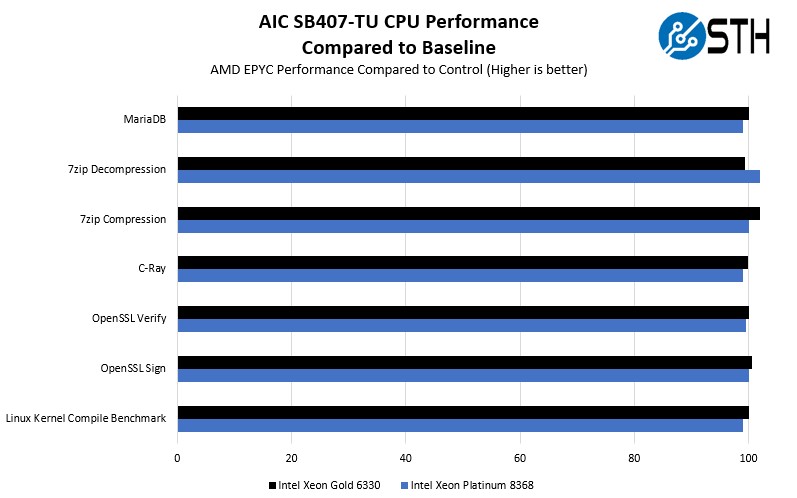

In terms of CPU performance, we saw results fairly close to baseline using the Intel Xeon Gold 6330. The Intel Xeon Platinum 8368 is a significantly hotter CPU, and for a storage server like this, we would suggest lower-power, lower-cost CPUs instead.

We also managed to run our NVIDIA T4 in this system, and our results mirrored those in our NVIDIA Tesla T4 AI Inferencing GPU Review. The NVIDIA T4 is easy to power and cool, and cards like it are important in storage servers because many top-loading storage servers are now being deployed in video analytics solutions. Having a local GPU minimizes network transfers of large video files. We only had one T4 for this system, but it worked flawlessly.

Next, let us get to power consumption.

Very cool, but if we’re going to talk about “cost optimizations” and being “much less expensive”, it would be nice to have a ballpark as to what the chassis cost. I didn’t see that anywhere (unless I missed it).

In …we were able to hit 1.08TB of raw capacity… you mean 1.08PB.

In …On the other hand, CPU0 has a Gen4 x16 slot and two x8 slots… you mean CPU1

domih – Yup, they didn’t even make it 1 paragraph without a typo. ;)

Fixed. 12 flights in 22 days. I am pretty beat at this point.

It’s fun to see the Backblaze legacy still thriving. Bulk storage will always be needed. I’m more curious when chip density will eventually overtake spinning rust in TB/$. It seems every time the gap gets close, the mad scientists create a better spinner. Who knows, maybe we’ll get rewritable crystal storage before that happens.

There has been a tendency to take liberties with the “unregulated 3rd dimension” of 19″ rackable storage gear over the past few years.

I used many Sun “Thumpers” (X4500, 4U 48-drive, 2x CPUs) in a previous life… a bit of a challenge to rack (per Sun, place said beast in the bottom of a rack).

With the more recent Western Digital UltraStar102 (4U JBOD, 102 drives, 42″ depth iirc and 260 lbs loaded with drives) things got a bit dicey/scary with that much weight & depth hanging out of the rack when swapping a drive from the top of the chassis.

I know SSDs don’t yet have the capacity of hard disks, but would converting this thing to SAS SSDs make the energy consumption and total system weight figures a little more tenable? Also, could one plug a DPU or GPU into any of those PCIe x16 slots? Y’know, to have more compute options, CUDA, etc.?

Also, why don’t they do one of these up for 2.5″ drives? I’m assuming that either that’s been done before or nobody’s actually thought to do that, which in that case, why not? Smaller drives = more drives = more storage = more better, right?

Maybe more smaller drives != more storage

Where’s the required airflow? I worked with a similar unit once; seeing our drive access pattern with a thermal camera was fun, but scary…

Stephen, if you mean 2.5″ HDDs, I think they only topped out at 4TB. I’m not positive though.

2.5″ HDDs here top out at 5TB…

They are SMR (shingled magnetic recording) disks; the tracks overlap a bit. The controllers do a good job of mitigating write amplification to some degree.

For my home lab, 2.5″ HDD was probably a mistake; better performance, reliability, density with 3.5″ disks. On the other hand, some applications prefer more small drives, I suppose for read bandwidth. Latency on my 5400 RPM 2.5″ drives isn’t going to beat 3.5″ enterprise drives.