At STH, we have been covering OCP since 2015 and the OCP Summit events in person since 2017. This year, the schedule is still disrupted quite a bit, so it is harder to get hands-on time. Still, OCP has evolved enormously over the past few years, and I wanted to take a moment to talk about the impact OCP is having on the market.

I asked Alan Chang, VP of Product Development / Sales Group at Inspur Systems, about the project. Alan has taken time to chat with me about OCP previously. He spends his days working at one of the biggest server vendors and is passionate about OCP, so he is always someone I reach out to.

Alan and the Inspur folks suggested that we also loop in Vladimir Galabov, Head of the Cloud and Data Center Research Practice at Omdia. Inspur and Omdia just published a research paper outlining OCP. We do not normally feature industry analysts on STH, but as we are moving to larger facilities and trying new things as a result, I thought we would give it a try here.

Background

Patrick Kennedy (PK): Can you tell our readers about yourself and how you got involved in OCP?

Vladimir Galabov (VG): I started my career at Intel, planning processor supply and demand across various business units, and over the last 10+ years became increasingly focused on the data center industry from both an IT and a physical infrastructure perspective. When I transitioned into an industry analyst role in 2017, I started researching the open computing market, which was underrepresented in existing research and reports. The OCP is an incredible innovation generator and technology testbed, so over time, my entire team at Omdia got involved in contributing to the OCP and helping the world better understand open computing adoption trends, opportunities, and challenges.

Alan Chang (AC): My name is Alan Chang, and I consider myself part of the first generation of OCP promoters, since I have been involved with OCP since day one. I am very fortunate that the two hardware design manufacturers I have worked with both believe that open source hardware is going to be the future for data centers, so I have worked very closely on OCP-related topics over the past 10 years.

(PK): OCP just turned 10 years old, but an OCP server is not a single design. Can you give our readers a brief overview of the major standards now encompassed by OCP?

(VG): The different contributors and participants in the OCP all tried to solve different problems with the server designs they put forward. Facebook’s big focus was to design an energy-efficient server that is easy to maintain. Microsoft was focused on hardware deployment and operations costs. Open19, at the time led by LinkedIn, was focused on ease of deployment, particularly in remote environments, as LinkedIn used a lot of colocation facilities and wanted to send the minimum number of employees for each installation. The ODCC aimed to enable standardization and high compute density. Since their original contributions, the members of the different open computing groups have all looked into use case-specific designs, most notably for edge computing. I think that having multiple designs enables end-users to match their workloads and constraints to an optimal server design. Another key thing is that collaboration is enabling the co-creation of components that fit all of the different designs, like the OCP NICs and accelerator modules.

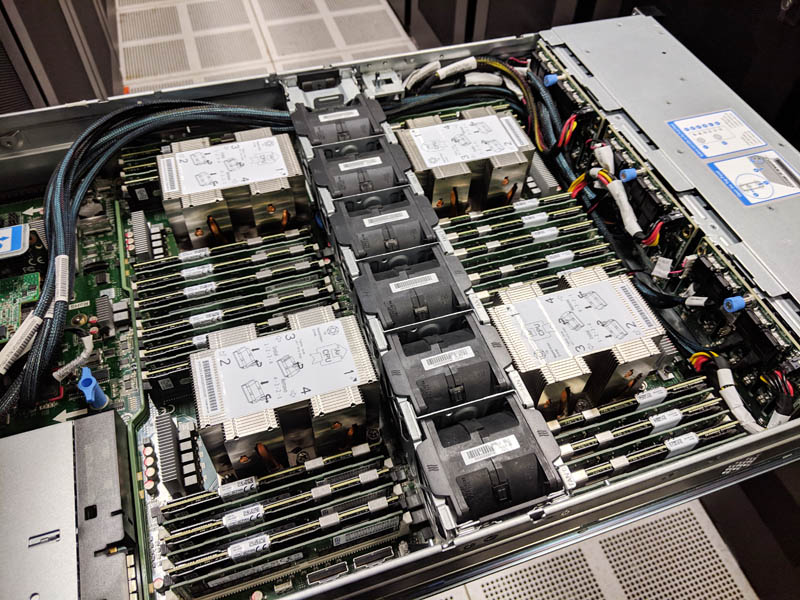

(AC): Today, OCP is no longer just about server hardware. When it first started, it was mostly server system architects and system engineers trying to work out an optimized system design. Today, there are sub-groups working on standards for firmware manageability, advanced cooling solutions from the system to the rack level, chipset design for AI, and so forth. The coverage of OCP is also adapting to the evolution of real-world data centers. One of the major standards that Inspur is trying to promote in the coming year is the optimization of the standard 19" enterprise server at the rack level.

(PK): Can you give our readers a sense of the scale of the different standards and a comparison to the market as a whole?

(VG): From an adoption perspective, we think that the OpenRack design by Facebook is leading in total server market value (in USD), based on the data we have for 2020. Microsoft’s OCS and the ODCC are battling for the second spot, but we think they are close to one another. The ODCC leads in the number of deployed servers. Open19 is still picking up steam, but we think it has a future in the data center and expect deployments to continue.

OCP Hardware Trends

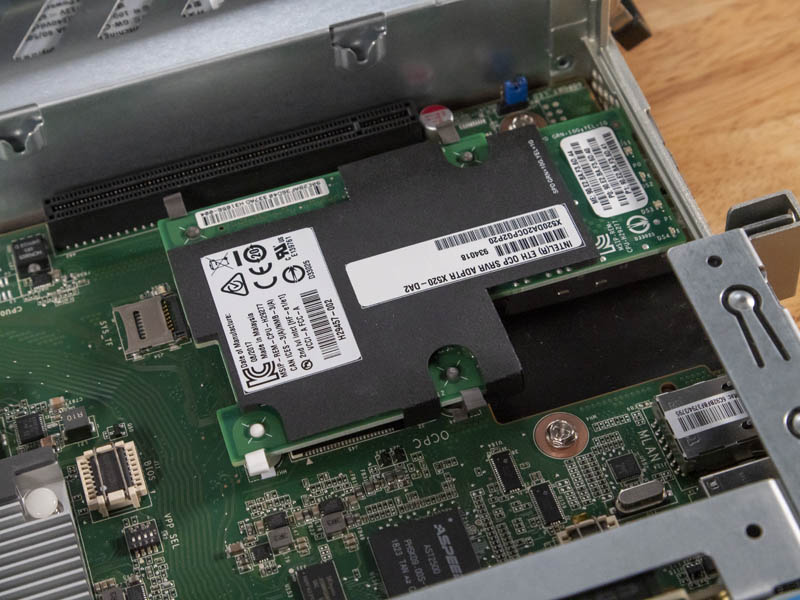

(PK): At STH, we have extensively covered OCP technologies and standards that have made their way into traditional servers, such as OAM (AI accelerators), OCP NIC 3.0 (networking), and most recently EDSFF in our deep-dive (storage and other use cases). What do you think will be the next type of device in a server to be standardized in this manner?

(VG): The Open Domain-Specific Architecture (ODSA) project, which aims to establish open physical and logical processor-to-processor interfaces for chiplets, is very interesting. It could ultimately create a marketplace of interoperable chiplets from multiple vendors based on open interfaces. This will allow product designers to develop best-in-class chiplets and System-in-a-Package (SiP) products from multiple vendors. I am also closely following the work the OCP is putting into the server memory space. I think that their software-defined memory project could be a game-changer as memory-intensive workloads are on the rise.

(AC): I do believe some type of next-generation acceleration module (PCIe, CXL, or other) that is designed to be more “front access” friendly will be the next thing to be standardized. As more hyperscalers move to front-access designs, networking, storage, and other attached devices are competing for the same front-side real estate in a server. I do believe that there will be more standardization similar to OCP NIC 3.0 that will apply to more future devices. Another thing I believe will be standardized is the modular BMC, allowing more synchronized management across different hardware suppliers.

(PK): If a server has a standard CPU, DIMMs, OCP 3.0 NIC, EDSFF SSD array, and OAM accelerators, is that now an OCP server since almost every vital function is standardized? Why?

(VG): Even if every component is based on an OCP specification, I personally would not count that as an OCP server because OCP is more than just components. The engineers who produced the original server designs thought about factors like airflow (enclosure heights, fan size), best practices in power distribution, and network connectivity. Putting components based on OCP specifications in a non-OCP server won’t necessarily make it more energy-efficient, easier to maintain, or easier to deploy. It also won’t make it cheaper or enable higher rack density.

(AC): This is something that Inspur is trying to promote. We have many mature standards in terms of modules. If a server adopts as many of those modules as possible without trying to reinvent the wheel, then it should be considered an OCP server. We should encourage hardware system vendors to adopt more of the mature modules and standards coming out of OCP.

(PK): OCP set out a goal of reusability early on. However, almost every generation of new hardware is using new designs. Will we ever hit a point where we have truly reusable designs, or will simple power/ thermal/ signaling constraints mean that we will get successively new standards for each generation?

(VG): I think it is inevitable that some aspects of OCP designs will continue to change as new technologies become available and as end-user priorities change. We will likely see some reusability, but there will be a balance between reusability and innovation. I think this is something both vendors and end-users understand and are accustomed to.

(AC): Open Rack design has evolved during the past ten years, and of course, Facebook worries about backward compatibility a little less since their goal is to truly design the most efficient server generation-to-generation.

If we look beyond any specific rack design and focus on pizza box servers in OCP, the challenge with system reusability is that most OEMs will not maintain a product with software updates for more than 5-7 years. The firmware code is written by OEMs, and when OEMs stop maintaining that code, the system is going to have compatibility issues with newer devices (such as newer SSDs and HDDs). With OCP pushing for open-sourcing BIOS/BMC code in the future, I do believe that the sustainability and reusability goals can be extended beyond the 5-7 year OEM support window, since users can continue to maintain the code themselves.

(PK): Can you give an example of an OCP initiative that started in one direction and pivoted significantly in the last 10 years?

(VG): All OCP initiatives have seen their designs evolve through the development process. One of the most interesting and notable examples is how the OpenRack design was adapted by Nokia to meet the requirements of the telecommunications industry, like electromagnetic shielding and earthquake resistance. I think this is something Facebook engineers did not consider at all when developing the original design.

(AC): I would rather call this example an “evolution” than a “pivot,” and this change is also due to the greater number of companies and individuals involved in OCP. The OCP compute initiative started out with a single design, the Open Rack, which is owned by Facebook. Many of the innovations and optimizations really just focused on the Open Rack. It was almost synonymous with OCP for a very long time. Then Microsoft joined and contributed Project Olympus, and more and more OEMs/ODMs also contributed other designs and form factors. This gives end-users more choices. We are seeing standardization and adoption of different system form factors, not only within Open Rack.

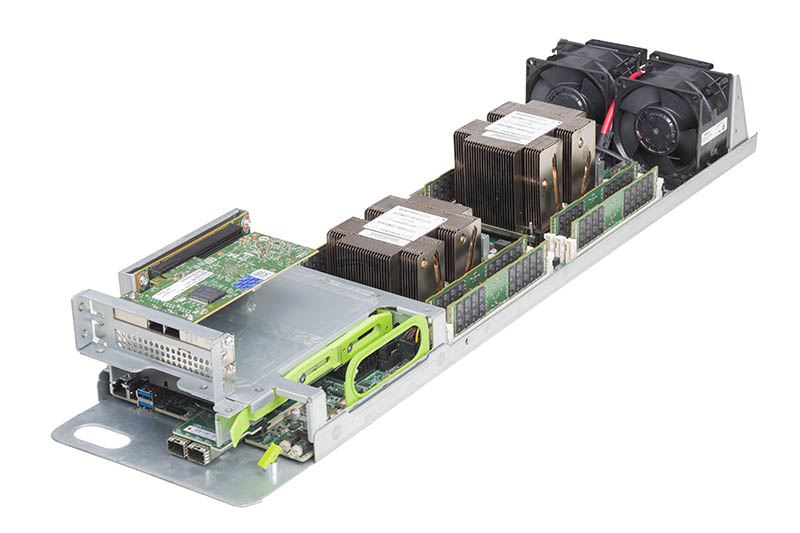

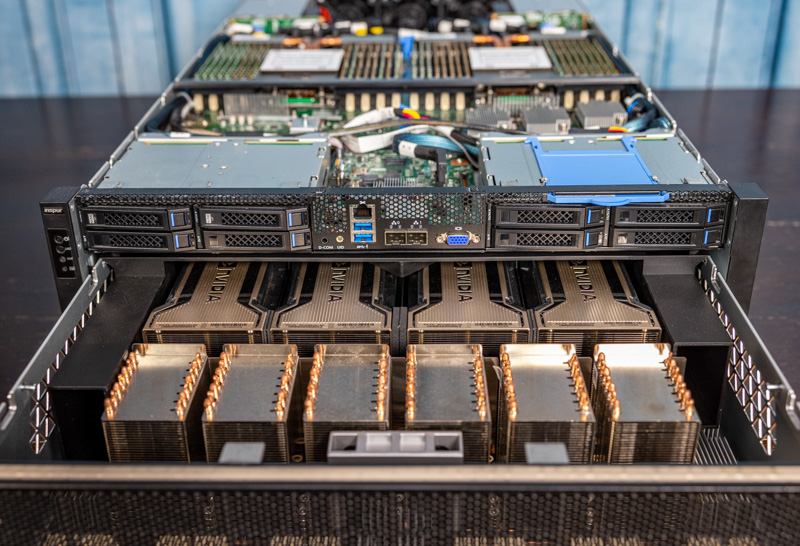

(PK): How is OCP driving next-generation power and cooling for servers in 2022 and beyond, which will use more power than what we saw in our recent Inspur NF5488A5 review?

(VG): I don’t want to spoil the surprises the OCP has in store for the November summit, so perhaps it’s best to revisit this question after the global summit. I would say that there are multiple areas of development that are interesting. One that I am quite excited about is liquid cooling.

(AC): I believe this is where the ACS (Advanced Cooling Solutions) group is going to be super important for the next 10 years. People are hungry for more computing power, and we will have to find a way to dissipate heat more effectively than conventional air cooling.

2021 OCP Trends

(PK): Where are you seeing the biggest adoption of OCP on a regional basis?

(VG): At the moment, North America leads, but we are seeing the biggest growth in new deployments happening in Europe and Asia.

(AC): EMEA and Asia are picking up OCP a lot. They also now have more choices than just Open Rack/Project Olympus. I consider those “intermediate” OCP-accepted solutions a pathway for them to gradually change their infrastructure and be ready for the Open Rack design.

(PK): Did the recent pandemic change the market for OCP?

(VG): I do think that the recent pandemic has led enterprises to revisit ways they can save money and be more efficient. Open compute equipment addresses many of the challenges we have seen enterprises face, like a reduced workforce and the need to lower CAPEX and OPEX. Interestingly, I think the market for refurbished OCP equipment is blossoming, as it enables a significant CAPEX reduction.

(AC): People are having a hard time validating new designs during the pandemic, so it is for sure slowing down some of the new conversations about OCP.

(PK): OCP is ten years old. If OCP continues its momentum, how do you think that will change the market for servers and server vendors over the next ten years?

(VG): Look at the open-source software ecosystem: Linux adoption has skyrocketed over the last decade, and new technologies like container software and management are developed entirely as a community effort. Kubernetes is, after all, the de facto standard for container management, creation, and orchestration. If the OCP continues its momentum, it could transform the data center industry into one where hardware design is done collaboratively and vendors differentiate through services. After all, the margin vendors make on hardware is low. Their true value is in how they help their customers deploy, optimize, and service their equipment.

(AC): OCP is still the de facto community for open-source hardware, and Inspur is trying to smooth the process for end-user adoption of OCP. With this, I think we will see more mature standardization and modularity coming out of the OCP. We might have a wider selection of servers built upon the OCP standardized modules.

(PK): What is the hottest project at OCP these days? (If not covered above)

(VG): ODSA and software-defined memory.

(AC): For Inspur, it is definitely the General Enterprise Server Spec standardization. We want to create a lower entry barrier so that enterprises and smaller DC operators can understand some of the key OCP components and benefits. This way, they can gradually progress to the next infrastructure changes that might be required for integrated rack designs such as Open Rack or Project Olympus.

Final Words

I just wanted to take a moment and thank Vladimir and Alan for taking the time to answer our questions. OCP is a major disruptive force in the industry that we have been covering for over six years now.

As we have reviewed OCP hardware and some of the software that has come out of these efforts, it has become clear that OCP is going to continue to drive data center server design. Perhaps the biggest change between when we started covering OCP and today is that OCP has grown to encompass many parallel efforts under a single umbrella. As its scope and breadth have increased, OCP has come to cover more of the industry. Still, traditional vendors such as Dell EMC and HPE, which have been losing market share over time, are certainly taking note of the move to OCP. They can no longer dictate previously proprietary form factors; one example is their migration to OCP NIC 3.0 in their servers. It is certainly exciting to see OCP grow and branch out into more efforts.
