One of the more interesting announcements this week is the Supermicro SYS-6049GP-TRT server. This new server supports up to 20x NVIDIA Tesla T4 inferencing GPUs. We have a bit of analysis since the company made the announcement without the system specs on its website. The announcement is especially interesting because 20x NVIDIA Tesla T4 GPUs, each a PCIe 3.0 x16 card, would need a total of 320 PCIe 3.0 lanes to run at full speed. Intel Xeon Scalable platforms have a maximum of 96 CPU lanes in dual-socket configurations, plus a nominal amount from the chipset. AMD EPYC has up to 128 PCIe lanes (minus a few for system peripherals). There is also the question of physically fitting the GPUs. We think we know what Supermicro is doing to make this work.
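The lane arithmetic above can be sketched as a quick budget check. This is a minimal illustration, assuming every T4 wants a full x16 link and using only the CPU lane counts quoted above; chipset lanes and lanes reserved for onboard peripherals are deliberately ignored, so real-world headroom is slightly worse.

```python
# Lane budget for 20x Tesla T4, assuming each card wants a full PCIe 3.0 x16 link.
# CPU lane counts follow the article; chipset lanes and lanes reserved for
# onboard peripherals are ignored, so real headroom is slightly worse.

LANES_PER_T4 = 16
NUM_GPUS = 20

PLATFORM_LANES = {
    "Intel Xeon Scalable, dual socket": 96,
    "AMD EPYC": 128,
}


def lanes_needed(num_gpus: int, lanes_per_gpu: int) -> int:
    """Total lanes required for every GPU to run at full x16 width."""
    return num_gpus * lanes_per_gpu


def shortfall(available: int, needed: int) -> int:
    """Lanes missing when every GPU is attached directly to the CPUs."""
    return max(0, needed - available)


if __name__ == "__main__":
    needed = lanes_needed(NUM_GPUS, LANES_PER_T4)
    print(f"Lanes needed: {needed}")
    for platform, available in PLATFORM_LANES.items():
        print(f"{platform}: {available} available, short by {shortfall(available, needed)}")
```

Running it reports 320 lanes needed, leaving the dual-socket Xeon platform 224 lanes short and EPYC 192 lanes short, which is why something other than direct CPU attachment has to be going on.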

Over the past few days, we have received quite a few e-mails asking about the announcement and photo, so we wanted to cover it in a bit more depth.

Supermicro SYS-6049GP-TRT

Supermicro shared a single banner image of the SYS-6049GP-TRT, and that image shows only 16x NVIDIA GPUs installed.

We covered the NVIDIA Tesla T4 inferencing GPU at its launch. One reason that the new GPUs generated so much buzz is that the new NVIDIA Tesla T4 is a low profile card which greatly expands its ability to fit into general purpose server form factors.

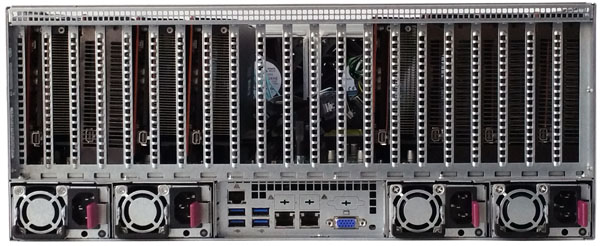

One will note that the Tesla GPUs pictured in Supermicro’s banner image are full-height, Volta-generation Tesla cards, although only a handful of people in the world would catch that. For STH readers, this should be really interesting. NVIDIA makes a “baby” Tesla V100: a single-slot, 150W card. It is possible that the design Supermicro showed off is a 4U server with 16x NVIDIA Tesla V100 (150W PCIe) GPUs.

We have seen the basic chassis building block that Supermicro used here in DeepLearning10 and DeepLearning11, as well as in our Supermicro 4028GR-TR 4U 8-Way GPU SuperServer Review.

The chassis has 21 full height PCIe expansion cutouts in the back of the chassis as can be seen above.

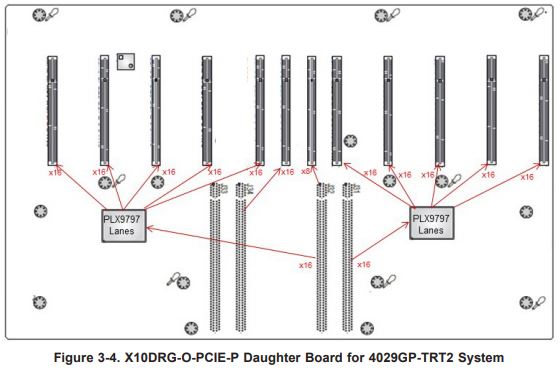

We had the daughterboard diagrams in the STH CMS from our How Intel Xeon Changes Impacted Single Root Deep Learning Servers article. This is not what the Supermicro SYS-6049GP-TRT is using, but it gives a PCB layout to follow:

We think that the Supermicro SYS-6049GP-TRT is using a PCB with additional PCIe switches driving additional PCIe expansion slots. Instead of ten slots spaced for double-width cards, Supermicro may be using another PCB with perhaps 8 or 9 additional PCIe slots. Either there is a large PCIe switch complex, or each GPU is getting fewer than the 16 lanes it needs for full speed.
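The two possibilities in the paragraph above can be compared numerically. This is a hypothetical sketch: the switch topology in it (four switches, each with an x16 uplink to the CPUs and five x16 GPUs downstream) is an illustrative assumption, not Supermicro’s published design, and `widest_direct_link` and `oversubscription` are made-up helper names.

```python
# Hypothetical sketch of two ways to feed 20 GPUs from limited CPU lanes.
# The switch topology below (4 switches, x16 uplinks, 5 GPUs each) is an
# illustrative assumption, not Supermicro's published design.

COMMON_WIDTHS = (16, 8, 4, 2, 1)  # common PCIe link widths, widest first


def widest_direct_link(cpu_lanes: int, num_gpus: int) -> int:
    """Widest common link width every GPU can get when wired directly to the CPUs."""
    per_gpu = cpu_lanes // num_gpus
    for width in COMMON_WIDTHS:
        if width <= per_gpu:
            return width
    return 0  # not even x1 per GPU


def oversubscription(gpus_per_switch: int, gpu_width: int, uplink_width: int) -> float:
    """Ratio of downstream GPU lanes to the switch's upstream lanes."""
    return (gpus_per_switch * gpu_width) / uplink_width


if __name__ == "__main__":
    print("Direct attach, 96 CPU lanes: x%d per GPU" % widest_direct_link(96, 20))
    print("Direct attach, 128 CPU lanes: x%d per GPU" % widest_direct_link(128, 20))
    # Hypothetical: 4 switches x 5 GPUs = 20 GPUs, consuming 4 x 16 = 64 CPU lanes
    switches, gpus_each, uplink = 4, 5, 16
    print(f"Switch tree uses {switches * uplink} CPU lanes, "
          f"{oversubscription(gpus_each, 16, uplink):.1f}:1 oversubscribed per switch")
```

Under these assumptions, direct attachment tops out at x4 per GPU on both platforms, while the hypothetical switch tree keeps an x16 link at every card but shares each x16 uplink five ways (5.0:1) and uses only 64 CPU lanes.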

Final Words

It appears as though fitting 20x single-width cards is possible in Supermicro’s standard chassis design. With four 2kW power supplies (8kW in total, or 4kW with the supplies in a 2+2 redundant configuration), the Supermicro SYS-6049GP-TRT can certainly handle that number of NVIDIA Tesla T4 GPUs for inferencing. Perhaps more intriguing is the possibility that the system pictured is actually populated with 16x NVIDIA Tesla V100 single-width 150W GPUs. Those would have a 2.4kW GPU draw, just like 8x 300W double-width GPUs.
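The power comparison above reduces to simple multiplication. The sketch below uses nameplate board power (a maximum, not typical draw) and four 2kW supplies; treating two of them as redundant is an assumption shown alongside the no-redundancy case, and CPUs, fans, and drives are lumped into whatever is left over.

```python
# GPU power budget sketch using nameplate board power (maximum, not typical draw).
# PSU figures: four 2kW supplies; the 2+2 redundancy case is an assumption.

CONFIGS = {
    "20x Tesla T4 (70W)": (20, 70),
    "16x single-slot Tesla V100 (150W)": (16, 150),
    "8x double-width GPU (300W)": (8, 300),
}

PSU_COUNT = 4
PSU_WATTS = 2000


def gpu_draw(count: int, watts_each: int) -> int:
    """Combined nameplate power of all installed GPUs."""
    return count * watts_each


def usable_psu_watts(psu_count: int, psu_watts: int, redundant_units: int) -> int:
    """Capacity left after setting aside redundant supplies."""
    return psu_watts * (psu_count - redundant_units)


if __name__ == "__main__":
    for redundant in (0, 2):
        budget = usable_psu_watts(PSU_COUNT, PSU_WATTS, redundant)
        print(f"PSU budget with {redundant} redundant units: {budget}W")
        for name, (count, watts) in CONFIGS.items():
            draw = gpu_draw(count, watts)
            print(f"  {name}: {draw}W for GPUs, {budget - draw}W left for everything else")
```

The 16x 150W and 8x 300W configurations both come to 2400W of GPU power, and 20x T4 comes to 1400W, so all three fit within either budget in this simplified model.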

With one small image, the Supermicro SYS-6049GP-TRT became one of the most intriguing AI inferencing servers available in the NVIDIA Tesla ecosystem, assuming it supports both NVIDIA Tesla T4 and NVIDIA Tesla V100 (150W) GPUs.

Have you guys managed to do any testing on Supermicro GPU servers with the RTX 2080s yet?

A few vendors have released these cards with blower-style coolers, so the results should be interesting.

The Broadcom PLX9797 in your figure 3.4 is the switching fabric that multiplies the PCIe lanes.

I did not find any information about a 150W Tesla V100. It looks like the maximum power consumption for the Tesla V100 is 250W for the PCIe version:

https://images.nvidia.com/content/technologies/volta/pdf/437317-Volta-V100-DS-NV-US-WEB.pdf

Also, the Tesla V100 is double-width/dual-slot, not single-slot.

Also, the power supply for this server is max = 2000W: (2+2) redundant power supplies, Titanium Level (96%+).

20 x Tesla T4 = 20 x 70W = 1400W. If we use 150W cards, the quantity would be 1400W / 150W = 9, which means only 8, not 16 (16 x 150W = 3200W, not enough power).

Sorry, hard day: 16 x 150W = 2400W, and we can’t use all 2000W anyway; we need something for everything else.

George, there is a 150W single-slot Tesla V100 as well.

Can you do me a favor and give me a link from NVIDIA? I did not find it on the NVIDIA site. Thanks.

George, this is pretty easy to find. Search for P/N 900-2G502-0300-000 online as an example.

Morning, and thanks a lot!