The Supermicro 2029UZ-TN20R25M is a 2U dual-socket server in the company’s “Ultra” line, which is meant to compete at the higher end of the server market. We requested this server specifically because it has 20x NVMe SSD bays, supports Intel Optane DCPMM, and has built-in 25GbE. We wanted to use those 20x NVMe SSD bays to show a very important concept in storage: the benefit of direct-attach storage. In our review, we are going to discuss why this is important.

Supermicro 2029UZ-TN20R25M Overview

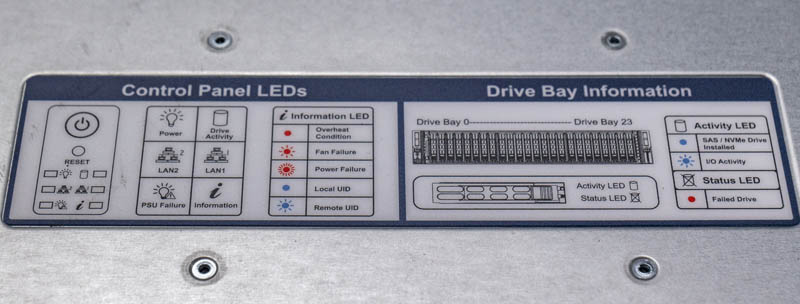

The front of the 2U chassis is a 20x 2.5″ NVMe SSD + 4x SATA/ SAS configuration. Having 20 rather than 24 U.2 NVMe SSD bays is an important distinction that we are going to discuss later in this review. These drive bays are directly attached to CPU PCIe lanes without a PCIe switch.

We see the four SATA/ SAS bays mostly being used as SATA in this platform. Adding a SAS card for four drives will constrain other customization. Still, for some OSes that require hardware RAID controllers, these bays can also be used with a SAS controller and SAS drives.

We wanted to point out another extremely important feature on our Supermicro 2029UZ-TN20R25M test unit. This is, surprisingly, the first Supermicro unit that we have in the lab with a random password. Going forward, Supermicro default passwords will no longer be “ADMIN” and will instead be randomly generated. Supermicro says this change was made to comply with a 2020-01-01 California law that would prohibit it from using the same password on every device. We are going to have more on this soon. One item that we would like to see Supermicro change is the size of this label. As you can see, the password is printed in high-contrast black-on-white text, but it is very small. For those without great eyesight in the data center, this label is simply too small. Plus, there is plenty of additional room on the label.

Supermicro has also started adding service guides to the exterior of their systems. We think this is more of a bare minimum service guide and we hope this is an area that Supermicro invests in. Perhaps this is also an area where a larger version of the unique password can be printed as we see with HPE machines.

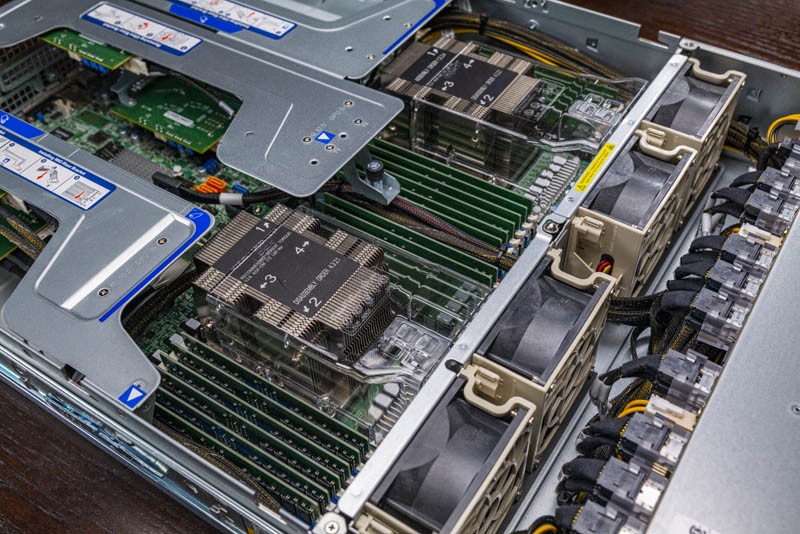

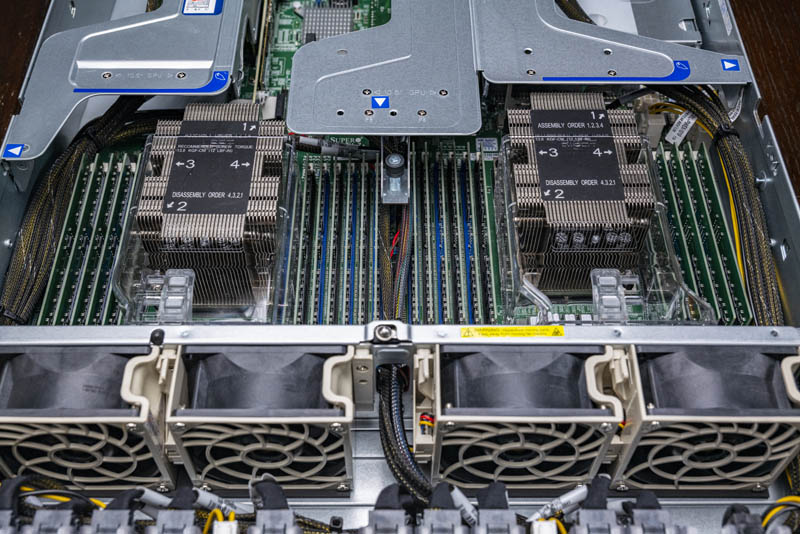

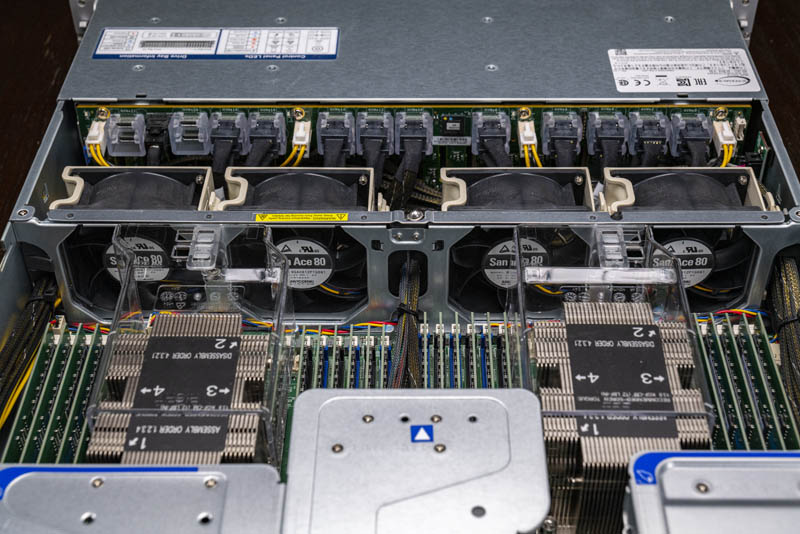

Inside the system, we see a fairly standard Supermicro 2U Ultra server layout. The company utilizes this design with front hot-swap bays, four midplane fans, then CPU/ memory, followed by risers with PCIe connectivity and PSUs across much of their Ultra line. That includes both Intel Xeon models, like the 2029UZ-TN20R25M, as well as AMD EPYC models.

The system itself supports up to two 205W TDP Intel Xeon Scalable CPUs. Each CPU gets a full set of 12 DIMM slots for a total of 24 DIMM slots in the server. This is important because it means that the system can support the full stack of Xeon Scalable offerings, from the Intel Xeon Bronze 3204 up to the 205W Xeon Gold and Platinum SKUs such as the Intel Xeon Platinum 8280 and the Xeon Gold 6258R, which is better suited for this server. Dell EMC PowerEdge servers, for example, required more expensive fans to be added to configurations for higher-end CPU support, but Supermicro includes this with the server. Dell says that the extra fans and power supplies for the new Xeon “Refresh” SKUs will add 3% on average to their server costs, so this is a significant value Supermicro includes.

What is more, with 24x DIMM slots, we also see support for Intel Optane DCPMM modules in 128GB, 256GB, and 512GB capacities. This server is designed to handle the maximum configured load that you can get with a dual Intel Xeon solution today.

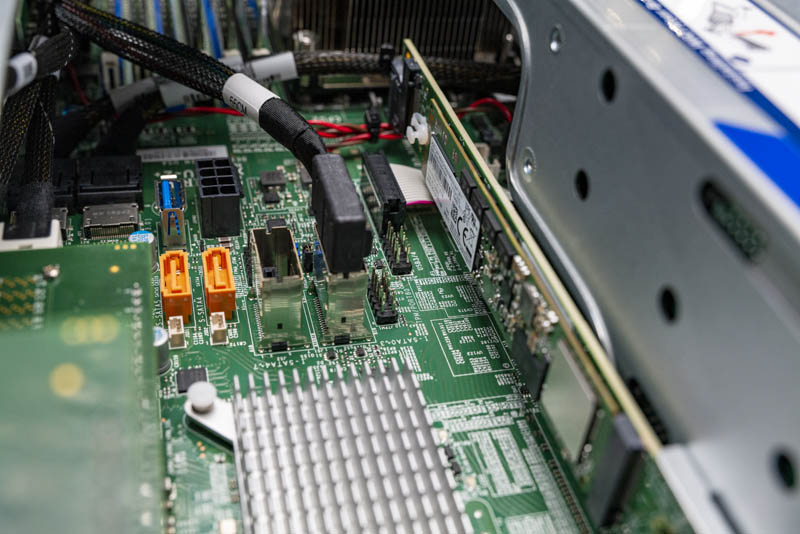

We wanted to briefly touch on the backplane. As you can see, the backplane has 20x individual PCIe Gen3 cables, one to each of the 20 front 2.5″ NVMe SSD bays. There is no expander onboard; these are directly connected. This same backplane can support 24x NVMe SSDs, but here it instead uses an SFF-8643 cable for the four SATA / SAS drive bays.

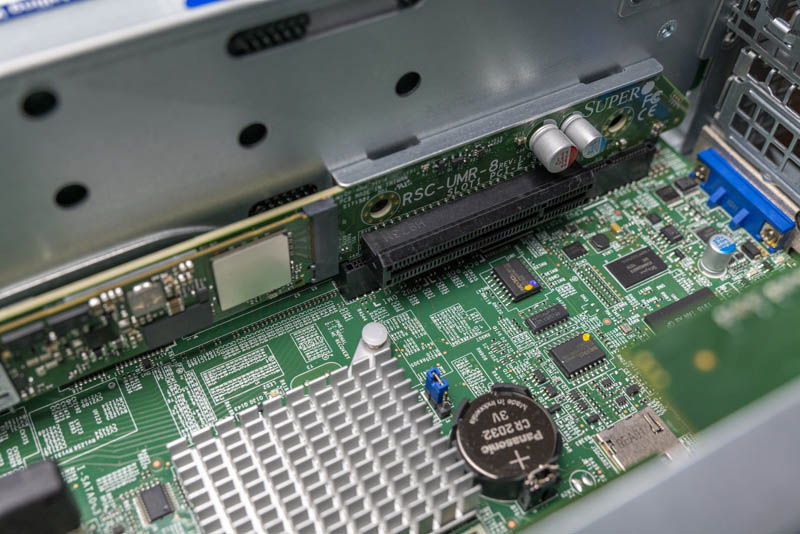

Those individually cabled hot-swap NVMe drive bays have cables that run through the chassis to a series of PCIe riser cards that take PCIe slot signals and route them to the NVMe bays. Effectively what Supermicro has done is take their standard Ultra server layout and provide a customized solution for these direct attach NVMe drive bays.

This direct-attach solution is extraordinarily important. Many 20-24-bay NVMe SSD designs utilize PCIe switches. A PCIe switch is like a network switch in that it can aggregate device bandwidth to fewer upstream ports and oversubscribe the bandwidth. Generally, we see Intel Xeon Scalable 24-bay NVMe SSD servers utilize either one or two PCIe switches. Each switch is limited to a PCIe Gen3 x16 link back to the CPU, which means there are 80-96 lanes (20-24 drives) of PCIe devices but only 16-32 lanes of bandwidth to the system. In the Supermicro 2029UZ-TN20R25M, one gets 80 lanes of NVMe devices and 80 lanes of PCIe back to the system. Avoiding PCIe switches also lowers the cost, power consumption, and latency of the server.
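To put rough numbers on that oversubscription, here is a minimal Python sketch. It assumes each U.2 bay is a x4 device (which is what 80 lanes across 20 drives implies) and uses the PCIe Gen3 figure of 8 GT/s per lane with 128b/130b encoding. The function names are ours and purely illustrative, and the bandwidth figures are theoretical link rates, not measured throughput.

```python
# Theoretical PCIe Gen3 payload rate per lane: 8 GT/s with 128b/130b
# encoding, converted from gigabits to gigabytes per second.
GEN3_GBS_PER_LANE = 8 * (128 / 130) / 8


def oversubscription(drives, uplink_lanes, lanes_per_drive=4):
    """Ratio of downstream drive lanes to upstream lanes to the CPUs."""
    return drives * lanes_per_drive / uplink_lanes


def link_bandwidth_gbs(lanes):
    """Theoretical one-direction bandwidth of a Gen3 link in GB/s."""
    return lanes * GEN3_GBS_PER_LANE


if __name__ == "__main__":
    # (label, drive count, lanes back to the CPUs)
    configs = [
        ("24 bays, one x16 switch uplink", 24, 16),
        ("24 bays, two x16 switch uplinks", 24, 32),
        ("20 bays, direct attach", 20, 80),
    ]
    for label, drives, uplink in configs:
        ratio = oversubscription(drives, uplink)
        bw = link_bandwidth_gbs(uplink)
        print(f"{label}: {ratio:.1f}:1 oversubscribed, ~{bw:.1f} GB/s to the CPUs")
```

A ratio of 1.0 means every drive lane has a matching lane back to a CPU, which is the direct-attach case described above.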

There is another internal NVMe SSD in the form of an M.2 slot on a riser. Supermicro has options to customize this for SATA M.2 SSDs as well. One can see a USB 3.0 Type-A internal header along with two “gold” SATA DOM ports that can provide power directly to SATA DOMs or utilize motherboard power with headers just next to the ports. There is also an SFF-8087 cable that provides the four SATA lanes to the remaining four front panel hot-swap bays.

A current dual Intel Xeon Scalable platform has a maximum of 96 PCIe lanes. With the 80 front panel lanes and lanes for the internal M.2 slot as well as the dual 25GbE network onboard, one has very limited PCIe lanes available for additional expansion. Specifically, there is a single PCIe Gen3 x8 low profile slot in the middle of the chassis that is available for customization.
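The lane budget can be tallied in a few lines. This is only a sketch: the 48 lanes per CPU and x4 per NVMe bay match the 96-lane and 80-lane totals above, but the article does not state the link widths of the M.2 slot or the 25GbE card, nor whether every device hangs off CPU lanes rather than the chipset, so the code only reports what is left over for them. The function and key names are hypothetical.

```python
LANES_PER_CPU = 48  # PCIe Gen3 lanes per Intel Xeon Scalable CPU


def lane_budget(cpus=2, nvme_bays=20, lanes_per_bay=4, open_slot_lanes=8):
    """Tally CPU PCIe lanes for the front bays and the one open slot.

    Whatever remains must cover the internal M.2 slot and the dual 25GbE
    card, assuming (our assumption) both use CPU lanes.
    """
    total = cpus * LANES_PER_CPU
    front = nvme_bays * lanes_per_bay
    remaining = total - front - open_slot_lanes
    return {
        "total": total,
        "front_nvme": front,
        "open_slot": open_slot_lanes,
        "left_for_m2_and_nic": remaining,
    }


if __name__ == "__main__":
    for name, lanes in lane_budget().items():
        print(f"{name}: {lanes} lanes")
```

Under these assumptions, the front bays and the single x8 slot already account for 88 of the 96 lanes.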

At the rear of the chassis, that PCIe slot sits just above the legacy serial and VGA ports. The remainder of the expansion slots on all of the system’s risers are dedicated to providing cabled PCIe interfaces to the front NVMe drives. This is a great example of what we mean by it being a very specific solution. The other legacy I/O on the rear panel includes two USB 3.0 ports as well as an out-of-band management port.

25GbE is a major networking trend, and we have already started doing overviews of 25GbE TOR switches such as the Ubiquiti UniFi USW-Leaf with 48x 25GbE and 6x 100GbE ports. We have done adapter reviews such as the Supermicro AOC-S25G-i2S, Dell EMC 4GMN7 Broadcom 57404, and the Mellanox ConnectX-4 Lx. We also have 100GbE switch reviews in the publishing queue, so we wanted to start focusing on the new systems. The Supermicro 2029UZ-TN20R25M has dual 25GbE networking built-in. These ports replace legacy 1GbE connectivity and are provided via the AOC-2UR68-m2TS add-in card. We still generally prefer having at least one 1GbE provisioning/ management port. However, we also specifically asked to review this model because 25GbE is becoming a common feature.

Next, we are going to look at the system topology as well as the management solution, including the new default password randomization change, before getting to our performance testing. That randomized password change is a major operational change if you have not encountered it before.

It seems like an awful lot of storage bandwidth to the processor with very little bandwidth out to the network. Maybe database where the compute is local to the storage, but I don’t see the balance if serving the storage out.

It is a shame that Supermicro doesn’t offer that chassis/backplane in a single AMD EPYC CPU config. That combination of mixed 20 NVME + 4 SATA/SAS drives would be ideal while costing a whole lot less with a single EPYC CPU, and it would leave plenty of available PCIe lanes to support 100+Gbps network adapter(s), all without breaking a sweat.

Suggestion for the workload graph please: Dots and Dashes.

It’s tough to see what colour belongs with which processor graph line.

Thanks.

So just to confirm, 20 x PCI-e drives were connected to one of the few servers that can directly support this config. Then you installed 20 NVME drives and didn’t show the bandwidth or IOPS capabilities?

I apologize for dragging in Linus Sebastian of Linus Tech Tips fame because he is a bit of a buffoon, but he might have hit a genuine issue here:

https://www.youtube.com/watch?v=xWjOh0Ph8uM

Essentially, when all 24 NVME drives are under heavy load there seem to be IO problems cropping up. Apparently, even 24-core Epyc Rome chips cannot cope and he claims the problem is widespread – Google search results shown as proof.

I would like to hear from the more knowledgeable people that frequent STH. Any comments?

Good article, Patrick. However, with regards to the IPMI password, couldn’t it potentially be possible to change to the old ADMIN/ADMIN un/pw using ipmitool from the OS running on the server?

Philip – what about a bar chart?

Henry Hill – 100% true. With the whole lockdown, we had to use the Intel DC P3320 SSDs. Look for reviews on retail versions like we have. We have some of the only P3320’s in existence. They would not go overly well showing off the capabilities of this machine. Instead, we focused on a simpler to illustrate use case, crossing the PCIe Gen3 x16 backhaul limit.

Nickolay – check out the AMD/ Microsoft/ Quanta/ Broadcom/ Kioxia PCIe Gen4 SSD discussion. They were getting over 25GB/s using just 4 drives per Rome system. At these speeds, software and architecture becomes very important.

Oliver – there are new password requirements as well. More on this over the weekend.

Patrick,

A late catch-up, can you post a link to that AMD/… / Kioxia PCIe Gen4 SSD discussion, tks

Hi BinkyTo https://www.servethehome.com/kioxia-cd6-and-cm6-pcie-gen4-ssds-hit-6-7gbps-read-speeds-each/

What about storage class memory?

hi,

may i ask how it is possible to create a RAID5/ array with ESXi or Windows on bare metal?