As supercomputers get larger and accelerators get faster, teams planning infrastructure need faster interconnects. Some future technologies that could displace parts of this market, like Gen-Z, are still in the planning phase. Aside from HPE Cray Slingshot, one of the tangible technologies that we know will power exascale supercomputers is NVIDIA Mellanox NDR 400Gbps Infiniband. To that end, NVIDIA’s recent Mellanox acquisition is now yielding an NDR 400Gbps Infiniband line.

NVIDIA Mellanox NDR 400Gbps Infiniband

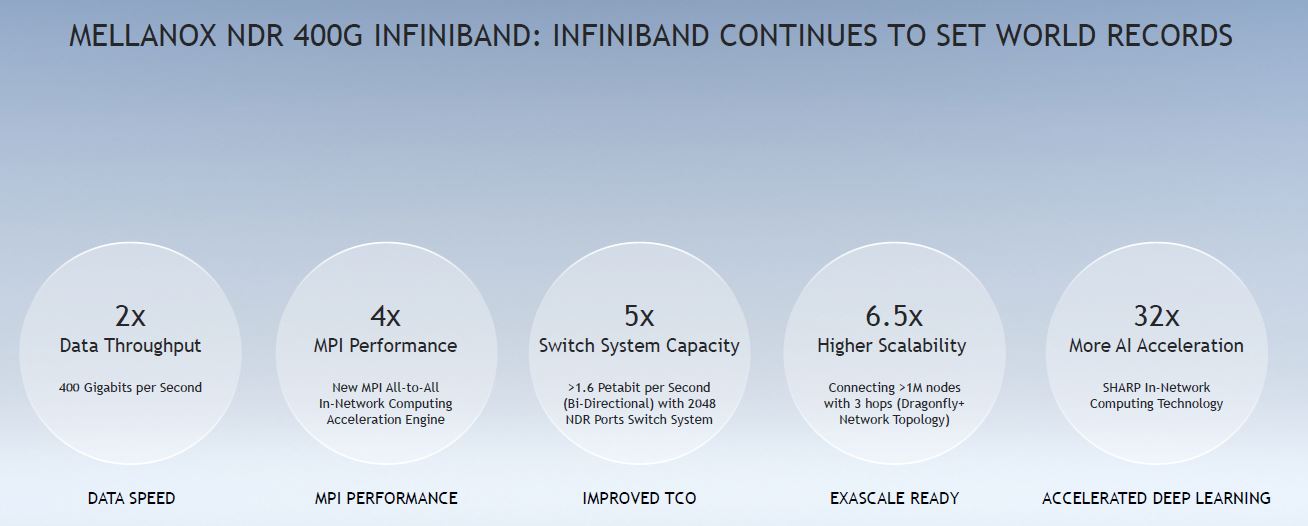

Mellanox drove Infiniband to effectively double performance every generation, and that is happening again. While current systems are looking to HDR 200Gbps Infiniband, NDR 400Gbps Infiniband is the next stop now that the NVIDIA-Mellanox deal has closed.

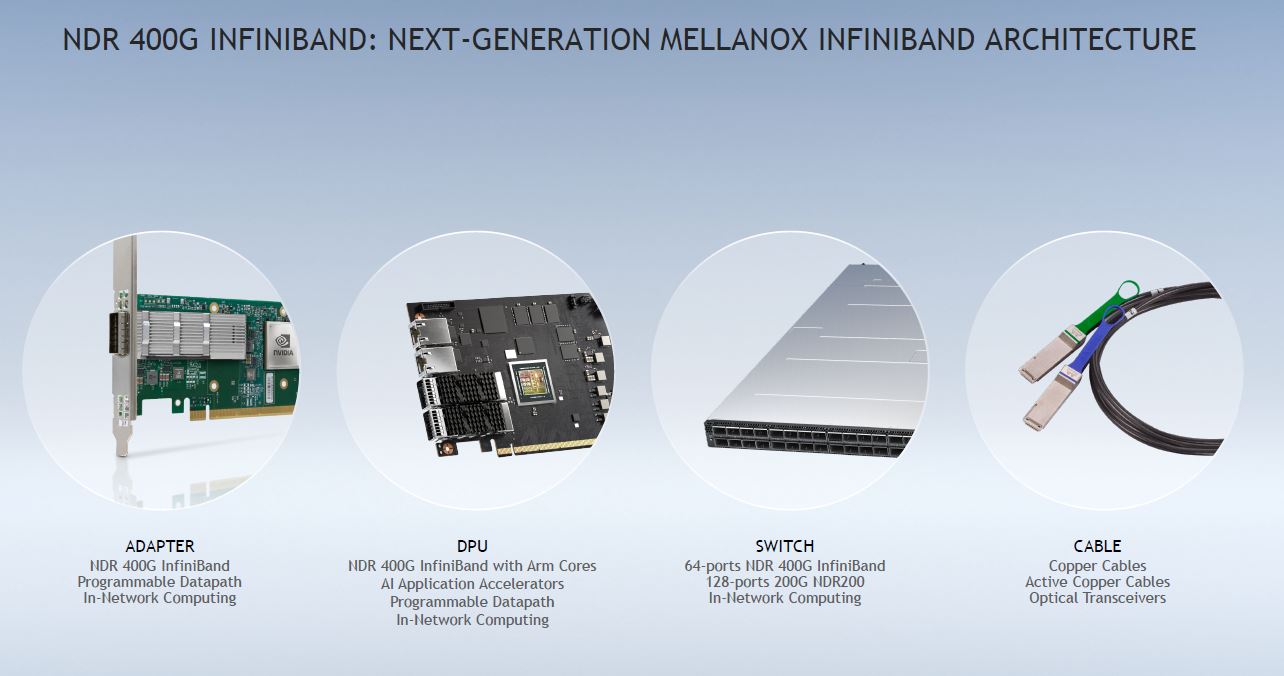

For the new NDR generation, we get a portfolio of products including adapters, DPUs, switches, and cables. NVIDIA has not announced ConnectX-7, but Mellanox has been following a pattern of implementing a new generation of Infiniband in one generation of adapter, then refining offloads in the next. On the adapter side, we need a PCIe Gen5 x16 slot or two PCIe Gen4 x16 slots to drive ports in servers. It may therefore be some time until we see adapters, since we do not expect PCIe Gen5 until after the early 2021 generations of server CPUs.
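To make the slot requirement concrete, here is a rough back-of-the-envelope sketch of the arithmetic. It assumes the published per-lane signaling rates (8, 16, and 32 GT/s for Gen3, Gen4, and Gen5) and 128b/130b line encoding, and it ignores packet and protocol overhead, so real usable throughput is somewhat lower. The function names `pcie_gbps` and `can_feed` are ours, purely for illustration.

```python
# Raw PCIe link bandwidth per direction after 128b/130b line encoding.
# Assumption: packet/protocol overhead is ignored, so these are upper bounds.
GT_PER_LANE = {"Gen3": 8.0, "Gen4": 16.0, "Gen5": 32.0}  # GT/s per lane
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(gen: str, lanes: int = 16, slots: int = 1) -> float:
    """Approximate one-direction bandwidth in Gbps for `slots` links of `lanes` lanes."""
    return GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes * slots


def can_feed(port_gbps: float, gen: str, lanes: int = 16, slots: int = 1) -> bool:
    """True if the host-side PCIe bandwidth is at least the network port rate."""
    return pcie_gbps(gen, lanes, slots) >= port_gbps


if __name__ == "__main__":
    for gen, slots in [("Gen4", 1), ("Gen4", 2), ("Gen5", 1)]:
        bw = pcie_gbps(gen, slots=slots)
        print(f"{slots} x {gen} x16: {bw:.1f} Gbps, feeds 400G port: {can_feed(400, gen, slots=slots)}")
```

Under these assumptions, a single Gen4 x16 slot tops out at roughly 252Gbps, which is enough for an HDR 200Gbps port but not for an NDR 400Gbps port. Two Gen4 x16 slots or one Gen5 x16 slot both land at roughly 504Gbps.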

NVIDIA is also calling out DPUs with Infiniband. That is exciting because, combined with GPUs, DPUs could obviate the need for a server altogether by attaching GPUs directly to the fabric, as we discussed in our NVIDIA to Acquire Mellanox a Potential Prelude to Servers piece.

On the cable side, NVIDIA told us they are able to use copper DACs (likely thick ones) and reach 1.5m. As a result, switches will not require co-packaged optics.

As we would expect, the new NDR Infiniband provides more performance than the previous generation as we double bandwidth from 200Gbps to 400Gbps.

Final Words

As network speeds increase, two things happen. First, offloading communication functions becomes more important, so ConnectX’s accelerators will be required. Second, we can push the limits of disaggregation further. With NDR likely slated for PCIe Gen5, we could see it in the CXL 1.1/CXL 2.0 era, which will be an exciting time for servers and infrastructure since it is when we will start to see disaggregation.

Finally, while writing this, something came up that is interesting. NVIDIA is using NDR 400G Infiniband in its marketing materials. At what point can we just have 400G Infiniband and drop the “xDR” naming? It seems like we could do this already just as we say “400GbE”.

Look closely: there is effectively a 128-port 200Gbps uncontended Infiniband switch in that announcement.

That is huge because, using AMD Epyc, you can now build a 16384-core facility with a few PB of storage in three racks and have uncontended Infiniband. Though to be fair, as the number of cores goes up, the number of users running multinode jobs does drop off.

A decade ago you would have been looking at 30 plus racks for that sort of compute.

Jonathan Buzzard

Mighty tall racks there – and still just a bunch of 2 socket servers – since Epyc cannot scale past 2 sockets – and could easily be owned by a single rack of systems with 4-8 GPUs….

By uncontended I assume you mean non-blocking…

There are already 400Gb/s ethernet shipping TODAY… also non-blocking.

Cool story though. /s