We wanted to share an update on Gen-Z ahead of SC20 this week. The Gen-Z Consortium is pushing ahead with building a fabric that extends in-memory computing beyond the bounds of a single box. While CXL is focused more on in-server connectivity, Gen-Z is focused on bringing a disaggregated data center to the forefront, beyond what CXL offers. The two consortiums have a formal MOU that describes where each will play and how they will work together. In this update, we wanted to again catch our readers up on what Gen-Z is, and then look at some of the interesting proof-of-concept (PoC) solutions that are aiming to lower the cost of development.

The Thrust of Gen-Z

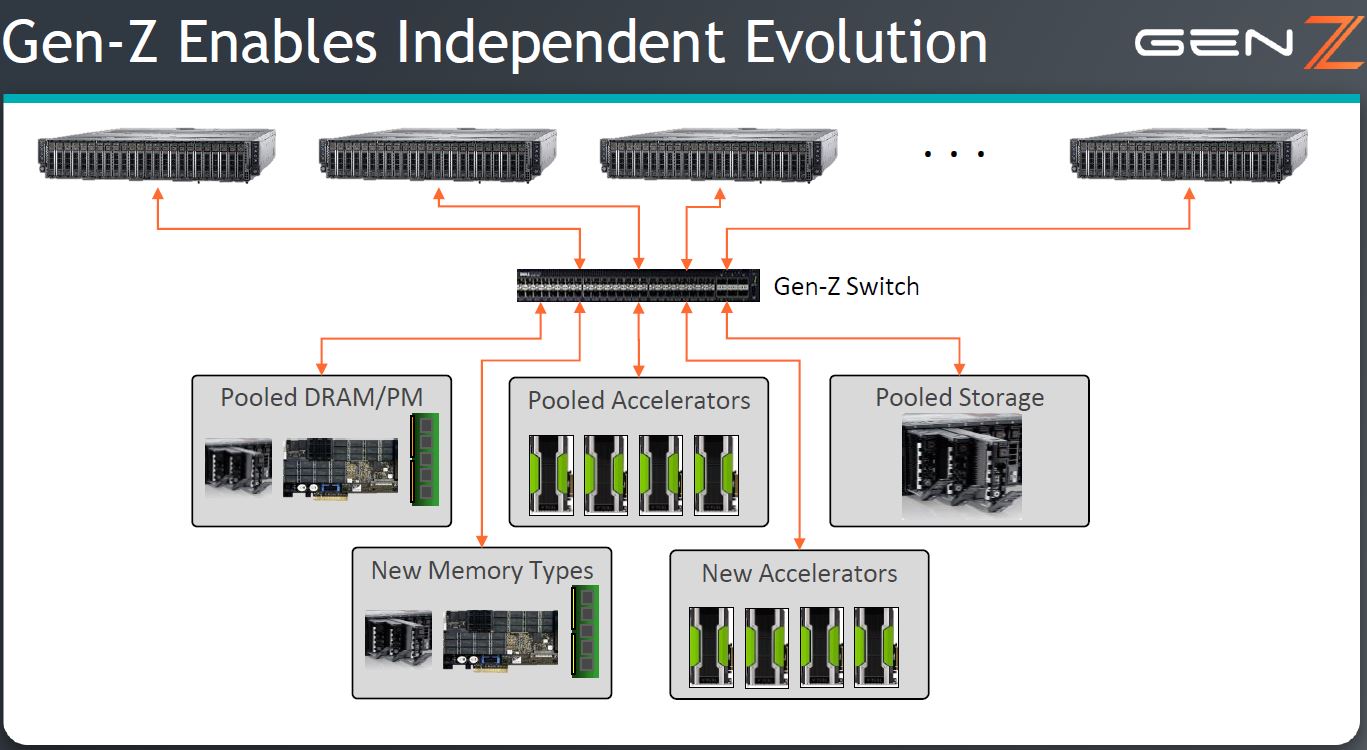

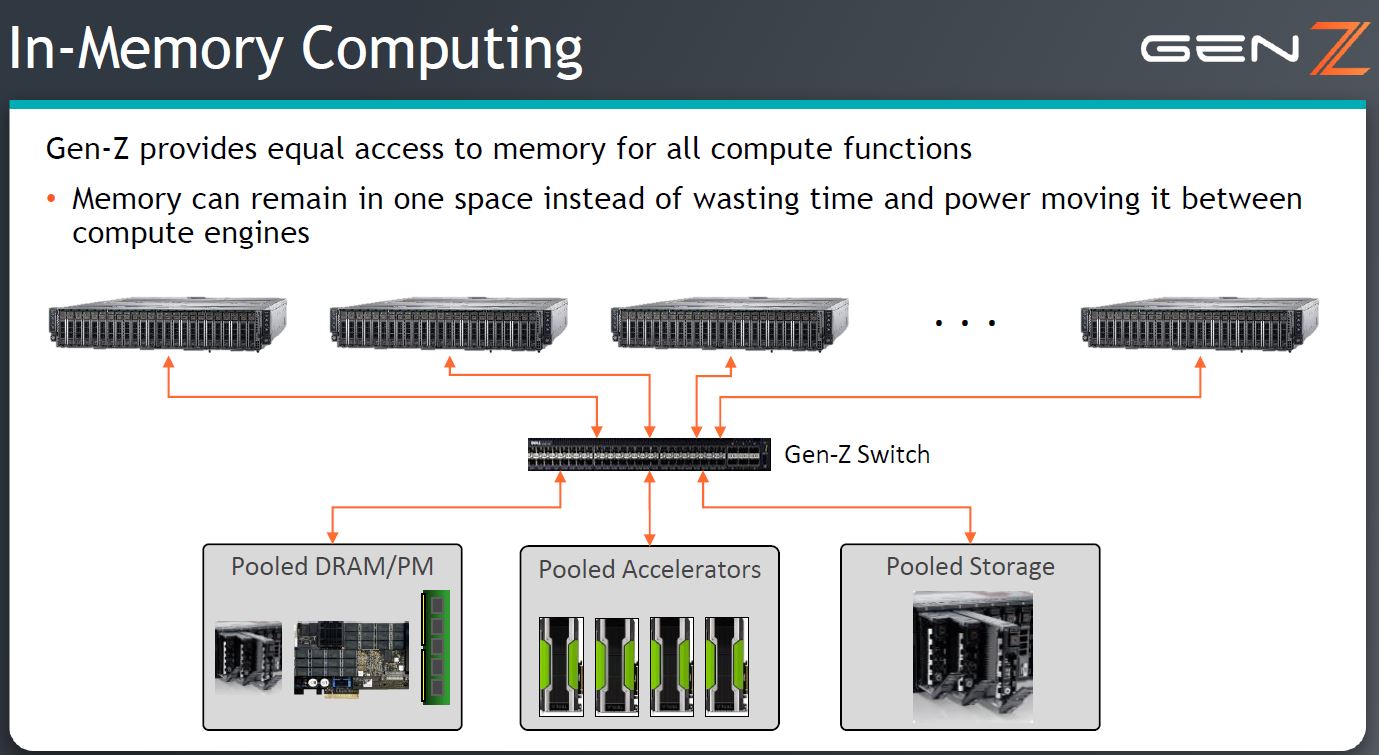

Since this is a future technology, we need to spend a moment focusing on what Gen-Z is and why it matters. Gen-Z is a fabric where different types of resources, whether they are memory, storage, traditional compute, or accelerators, can share a memory footprint across the fabric.

The picture above may seem abstract, but the implications are important today. For example, many companies see PCIe Gen4 devices available but run Intel Xeon servers, which will be about a year and a half behind AMD’s release of PCIe Gen4 servers. As such, upgrade cycles for new accelerators such as the NVIDIA A100, Xilinx Versal, Kioxia CD6, or other devices are held back by the need for platforms to move in a synchronized manner. Disaggregating the major system components while providing load/store access to memory means that each major set of components can be expanded and upgraded according to specific needs. Gen-Z solves the problem of having to use a server, or more specifically a server CPU, as the building block of the data center.

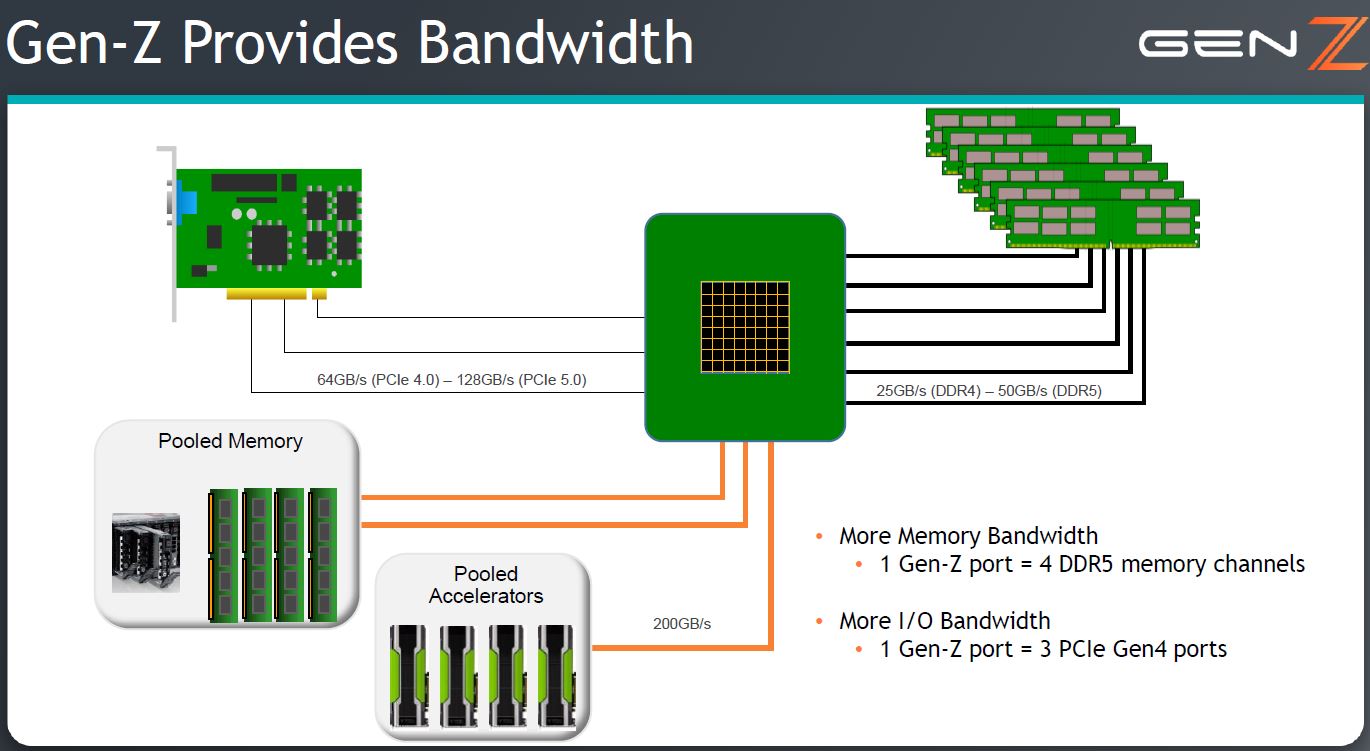

This disaggregation is achieved by being able to deliver a lot of bandwidth over a switched fabric that is designed to operate between different chassis and racks in a data center. Here is a diagram regarding the bandwidth. Since latency goes up with this disaggregated approach, and because a stated goal is to have pools of DRAM or persistent memory on the Gen-Z fabric to share, Gen-Z needs a lot of bandwidth.

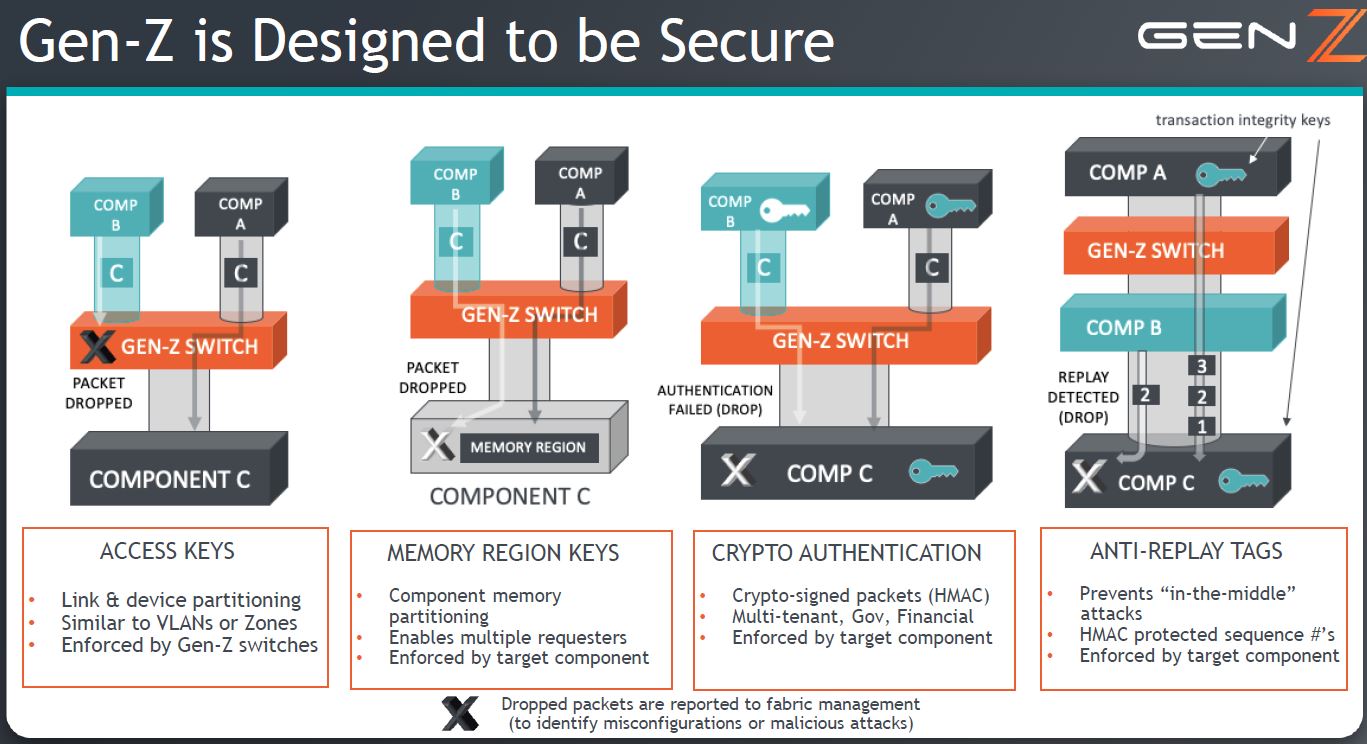

Another newer feature that Gen-Z is discussing is the security aspect. Gen-Z has different levels of security which are important in a disaggregated model. As an example, if you are a cloud provider and you have two different customers on different CPU nodes sharing memory from a memory shelf, you need to ensure that there is strong isolation.

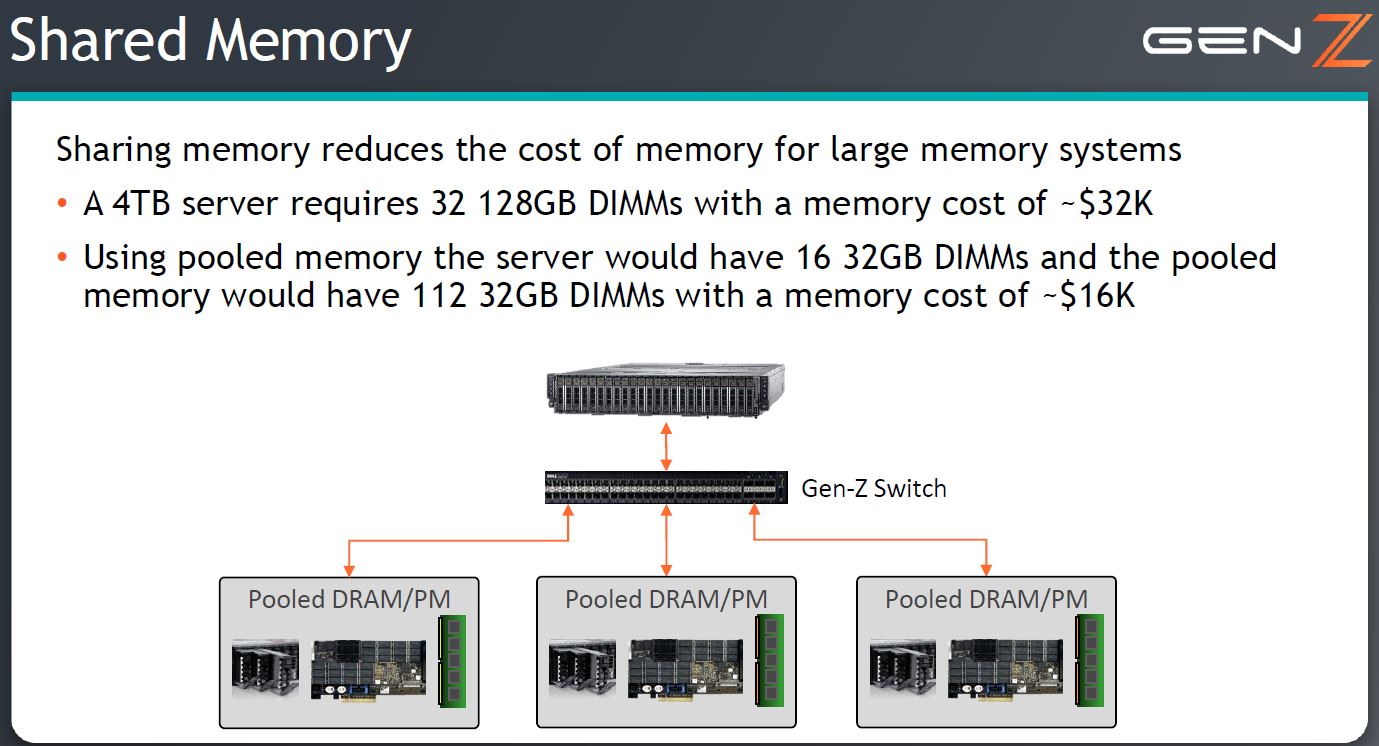

One of the biggest costs in data centers is memory. Using pooled memory in memory shelves, as opposed to in an individual server, means that, although there is a latency penalty, higher capacities can be reached with lower-cost, smaller-capacity DIMMs. The slide above is an example of a server getting to a higher level of memory capacity by using Gen-Z to go beyond its 0.5TB of local memory. The cost here is being presented as half. I asked if that includes the cost of the Gen-Z switches and other components needed to make this happen. I was told that enabling the topology in this example is expected to use about half of the price difference, which still leaves a 25% cost savings. Scaled out for a data center, even $8,000 per server node is an enormous amount of savings.
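To make the arithmetic explicit, here is a minimal sketch of that breakdown. The $32,000 all-local baseline is not a figure from the briefing. It is our assumption, back-calculated from the stated 25% and $8,000 savings, and the function and parameter names are purely illustrative.

```python
def pooled_memory_savings(baseline_cost, pooled_fraction=0.5, fabric_share=0.5):
    """Estimate per-node savings from pooled memory.

    baseline_cost   -- assumed cost of reaching the capacity with local DIMMs only
    pooled_fraction -- pooled memory cost as a fraction of baseline ("half")
    fabric_share    -- share of the price difference spent on switches and fabric
    """
    pooled_cost = baseline_cost * pooled_fraction
    price_difference = baseline_cost - pooled_cost
    fabric_cost = price_difference * fabric_share
    net_savings = price_difference - fabric_cost
    return net_savings, net_savings / baseline_cost


# Assumed baseline: $32,000, inferred from "25%" and "$8,000" in the text.
savings, fraction = pooled_memory_savings(32_000)
print(f"${savings:,.0f} saved per node ({fraction:.0%})")
# prints: $8,000 saved per node (25%)
```

Changing `fabric_share` shows how sensitive the savings are to the cost of the Gen-Z switching infrastructure.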

This cost breakdown is a bit simplistic for another reason. As we discussed on the security side, these pools of DRAM can be provisioned and shared among compute nodes. For data center operators, having larger pools means less overprovisioning, which is another enormous area of cost savings.

Part of why the in-memory computing paradigm is going to take some time to implement is that it radically changes some of the basic software assumptions for infrastructure. Accelerators can be added to pools of memory for computation without having to go over a fabric. Another example is that a data center class storage solution can have sync writes to a persistent memory pool, then storage can pull that data to lower-cost storage without the host managing the process. For an analogy, think of this as a RAID controller, just at a data center scale and for more modern scale-out storage rather than legacy RAID models.

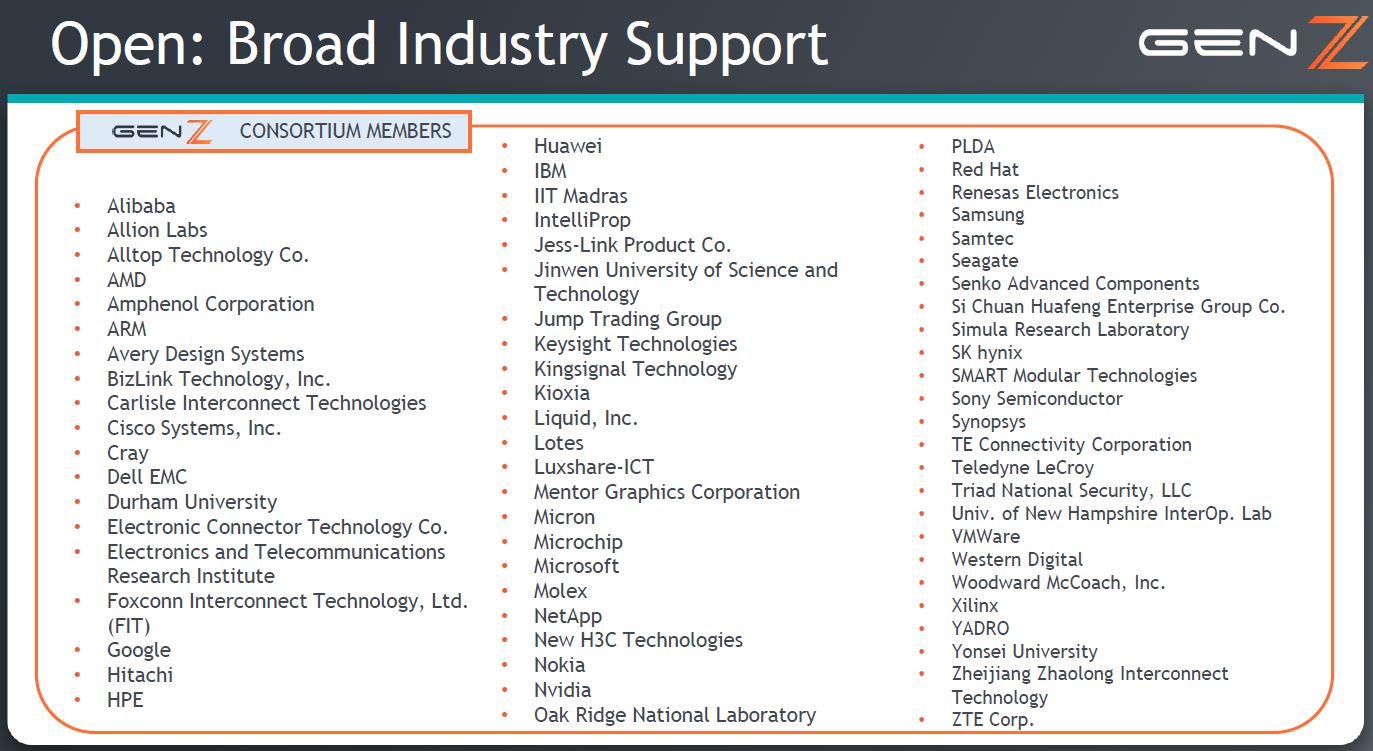

The industry sees this level of integration as essential. Industry support includes virtually every major supplier in the data center ecosystem, with one notable exception: Intel is not on this list. Gen-Z does necessarily move infrastructure from CPU-centric to memory- and xPU-centric, which aligns with Intel’s goals. At the same time, Intel pushed CXL. With the CXL and Gen-Z MOU, Intel has an onramp to Gen-Z without being listed on this slide.

Even if one did not know about Gen-Z before this article, at this point, the path forward is clear: the industry needs to do a lot of development both on hardware and software. To that end, the Gen-Z Consortium is showing off more PoC efforts to expand the base of developers.

New Gen-Z PoC Expansion

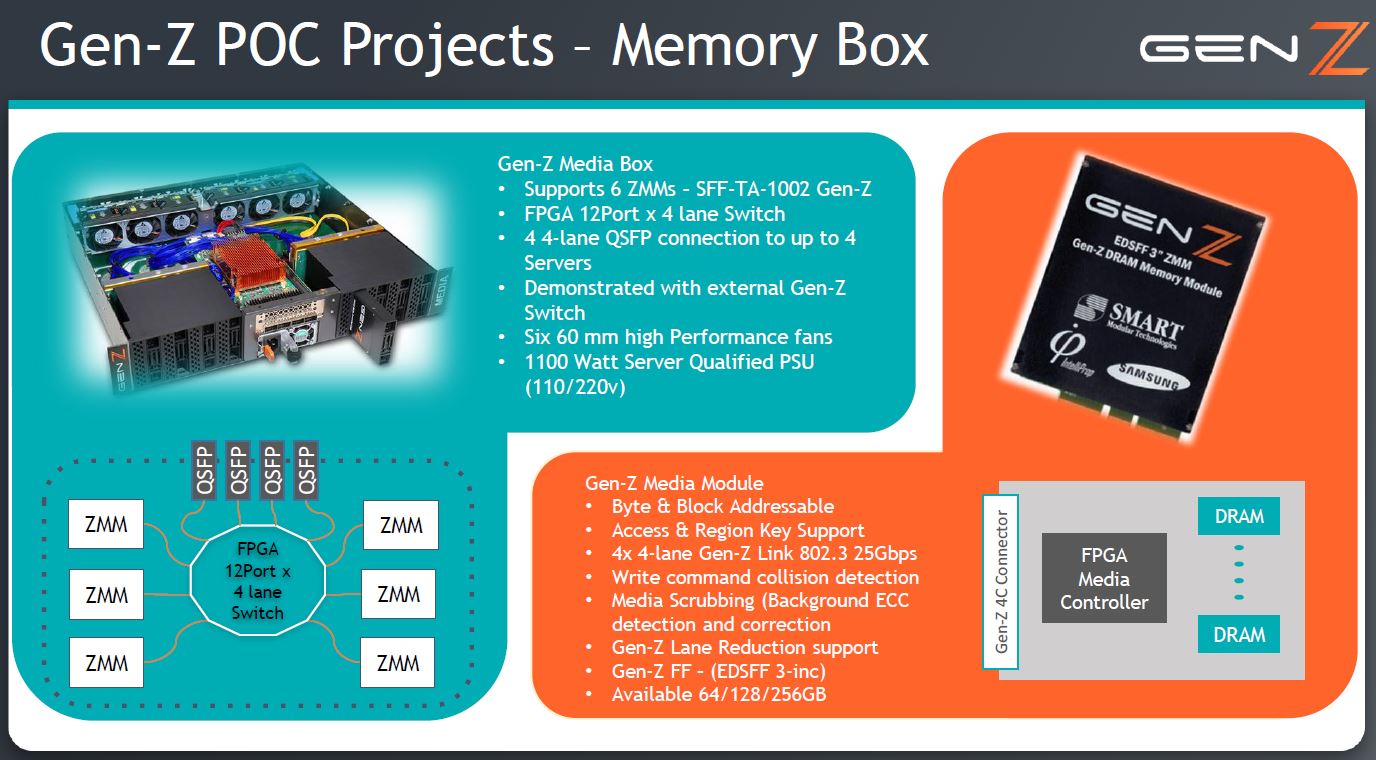

The first item is one that we have seen before: the Memory Box, which uses ZMMs (Gen-Z Memory Modules) and a switch to provide a remote memory target for vendors to do development with.

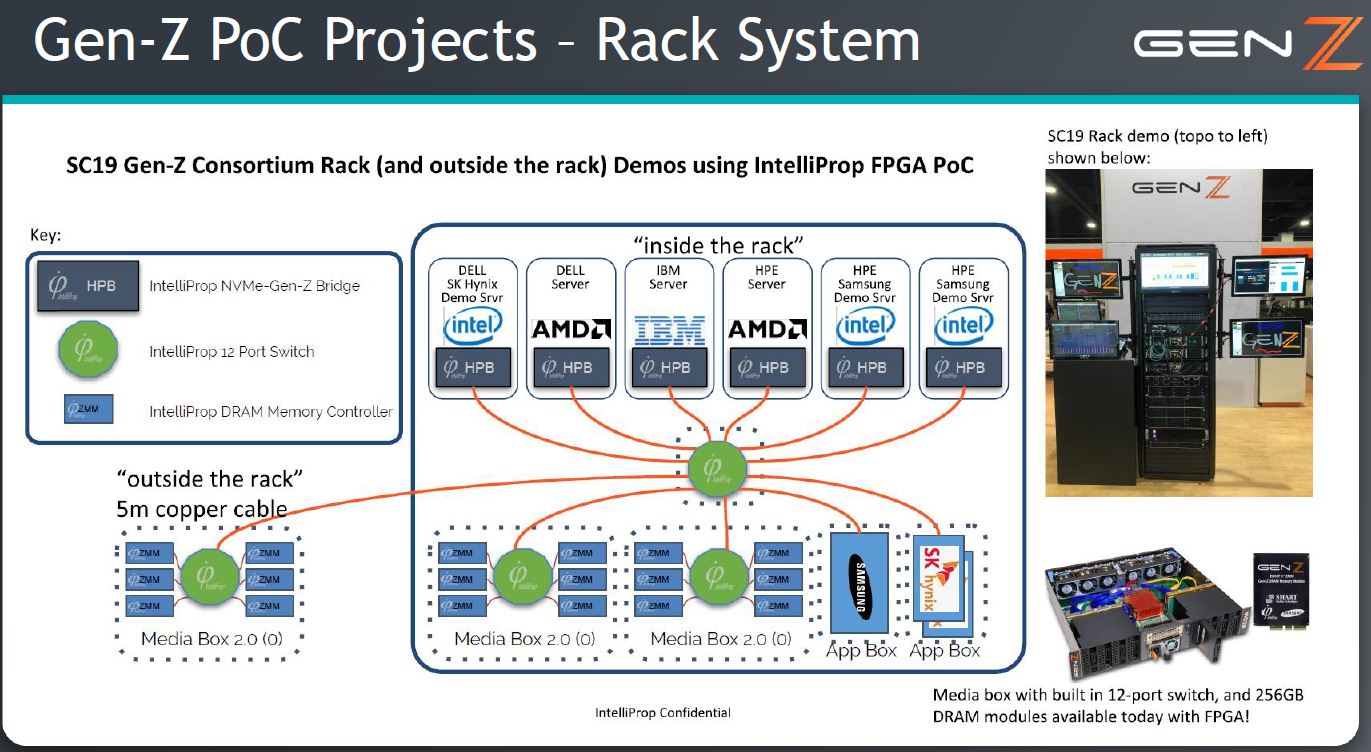

This includes the Gen-Z PoC rack that has CPUs from IBM, AMD, and even Intel working with different memory in an integrated fashion. This is Gen-Z demonstrating interoperability with the Memory Box.

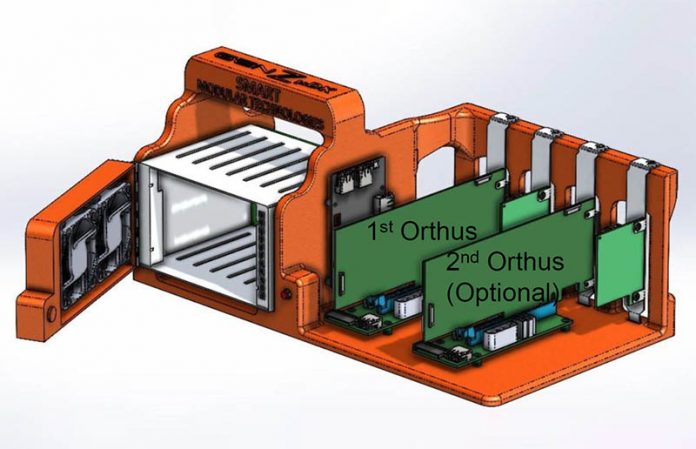

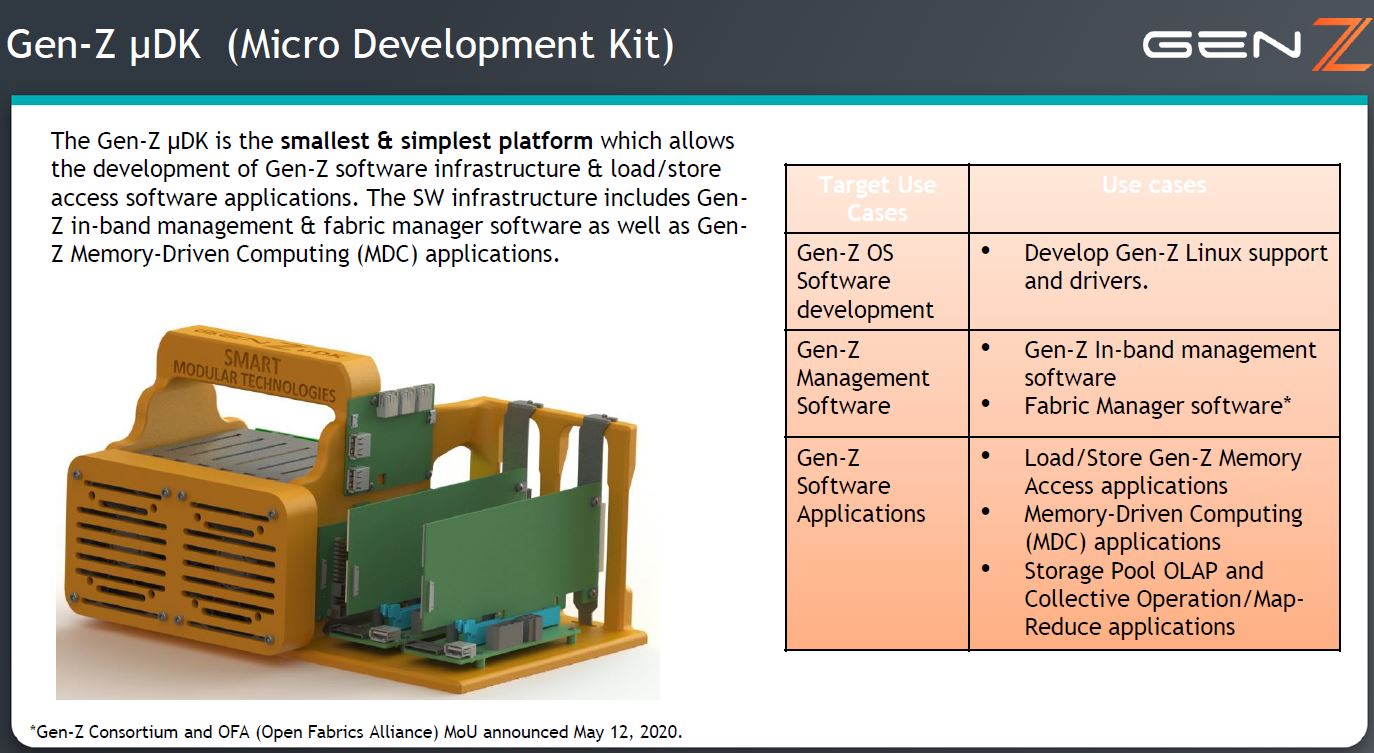

Something that I did not know prior to my briefing is that there is a new Gen-Z Micro Development Kit. While a Memory Box can cost over $50,000 (and likely up from there depending on the exact setup), the Micro Development Kit is designed to be much lower cost. We are told that it is around $10,000-$11,000 with a 64GB ZMM and $14,000-$15,000 with a 256GB ZMM. That does make it extraordinarily expensive memory, but at the same time, it brings the cost of entry into Gen-Z development down to a point where more companies can get involved.
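For a rough sense of scale, the quoted kit prices can be turned into an effective cost per GB. This is only a sketch: the kit price covers more than the ZMM itself, so the result overstates the cost of the memory alone, and the helper name is our own.

```python
def cost_per_gb(kit_price_usd, zmm_capacity_gb):
    """Effective kit cost per GB of ZMM capacity (whole kit, not just memory)."""
    return kit_price_usd / zmm_capacity_gb


# Quoted price ranges in USD, keyed by ZMM capacity in GB.
kits = {64: (10_000, 11_000), 256: (14_000, 15_000)}

for capacity_gb, (low, high) in kits.items():
    low_per_gb = cost_per_gb(low, capacity_gb)
    high_per_gb = cost_per_gb(high, capacity_gb)
    print(f"{capacity_gb}GB kit: ${low_per_gb:.2f}-${high_per_gb:.2f} per GB")
```

The 256GB configuration works out to roughly a third of the per-GB cost of the 64GB one, since most of the price is the kit rather than the module.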

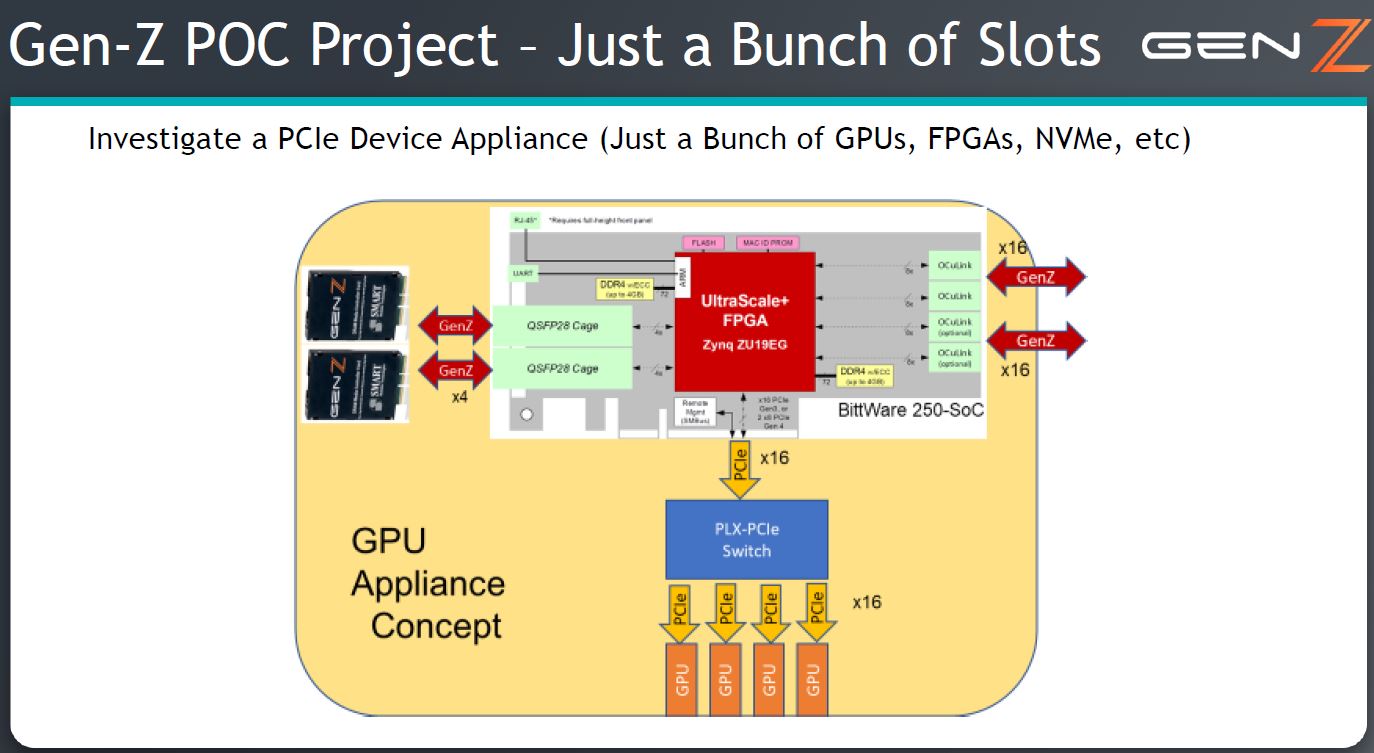

Looking beyond the Micro Development Kit is the Gen-Z PoC project dubbed “Just a Bunch of Slots.” I lobbied Kurtis Bowman on our call to call this a JBoS. This uses an FPGA (in the diagram, a Xilinx FPGA) to provide Gen-Z fabric connectivity as well as a PCIe subsystem so that traditional PCIe devices, focusing on GPUs at first, can be brought onto the Gen-Z fabric.

If we look at what Gen-Z is working towards, they will soon have the ability to show Gen-Z memory, compute, accelerators, and storage all disaggregated. This is an important step for the effort.

Final Words

Gen-Z is not something that is going to impact your infrastructure in the next two years, and likely not in the next three. There is a good chance we will have to wait for Compute Express Link (CXL) 2.0 devices to be out before hyperscalers start looking at Gen-Z. That is why, in our PowerEdge MX review, we said the chassis was designed for the future. Dell has already shown off Gen-Z in the Dell EMC PowerEdge MX, but we are still some time away from that becoming a production reality.

For our readers, CXL 1.1 and 2.0 are likely to have an impact first, but it is important to see where the memory-centric paradigm is extending beyond just the server itself and to how it impacts data center scale systems. Stay tuned to STH for more on Gen-Z.