At STH we have been covering CXL for many quarters, starting with the early Intel CXL Compute Express Link Interconnect Announcement and the CXL explainer at Intel Interconnect Day 2019. Since then, we have chronicled major adoption milestones as CXL went from an Intel-led standard, to displacing CCIX, to its current position as the way forward, including how it will work with Gen-Z under the CXL and Gen-Z Formal MOU. While we expect to see early CXL 1.x generation products in late 2021/ early 2022, the industry is waiting for CXL 2.0 for a very important reason: switches and retimers. Indeed, we have heard major cloud providers describe CXL 1.x as nice for development, but CXL 2.0 as tantalizing for deployment.

CXL 2.0 Context

There have been a number of different attempts at making a cache coherent interconnect, but ultimately CXL is the one the industry has adopted in a major way. Major chip companies, cloud service providers, and traditional enterprise IT providers are all on board and represented on the CXL Board of Directors. While there was some question in 2019, at this point it is clear the industry is moving to CXL.

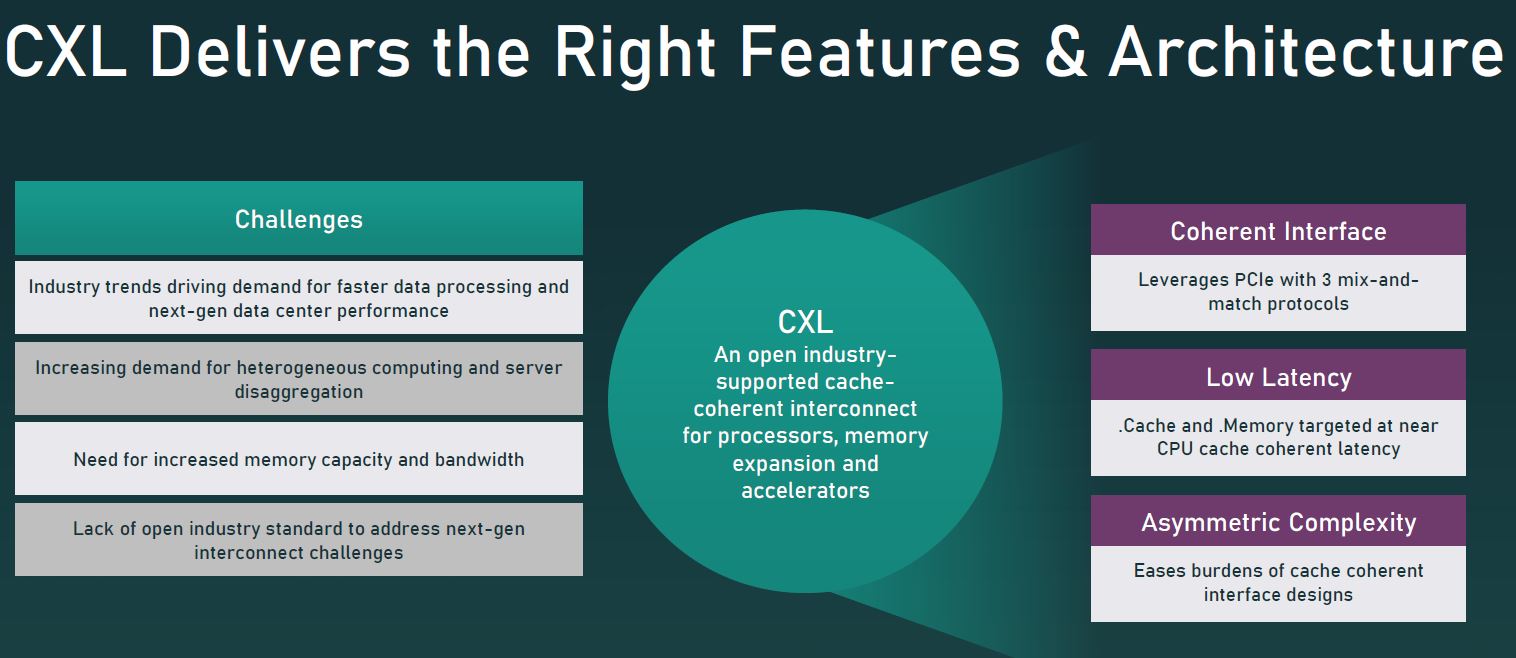

We are not going to cover this slide in much detail (it is worth reading on its own), but the main driver for a cache coherent interconnect is that it uses memory more efficiently, minimizes data transfer and replication, and can help increase performance.

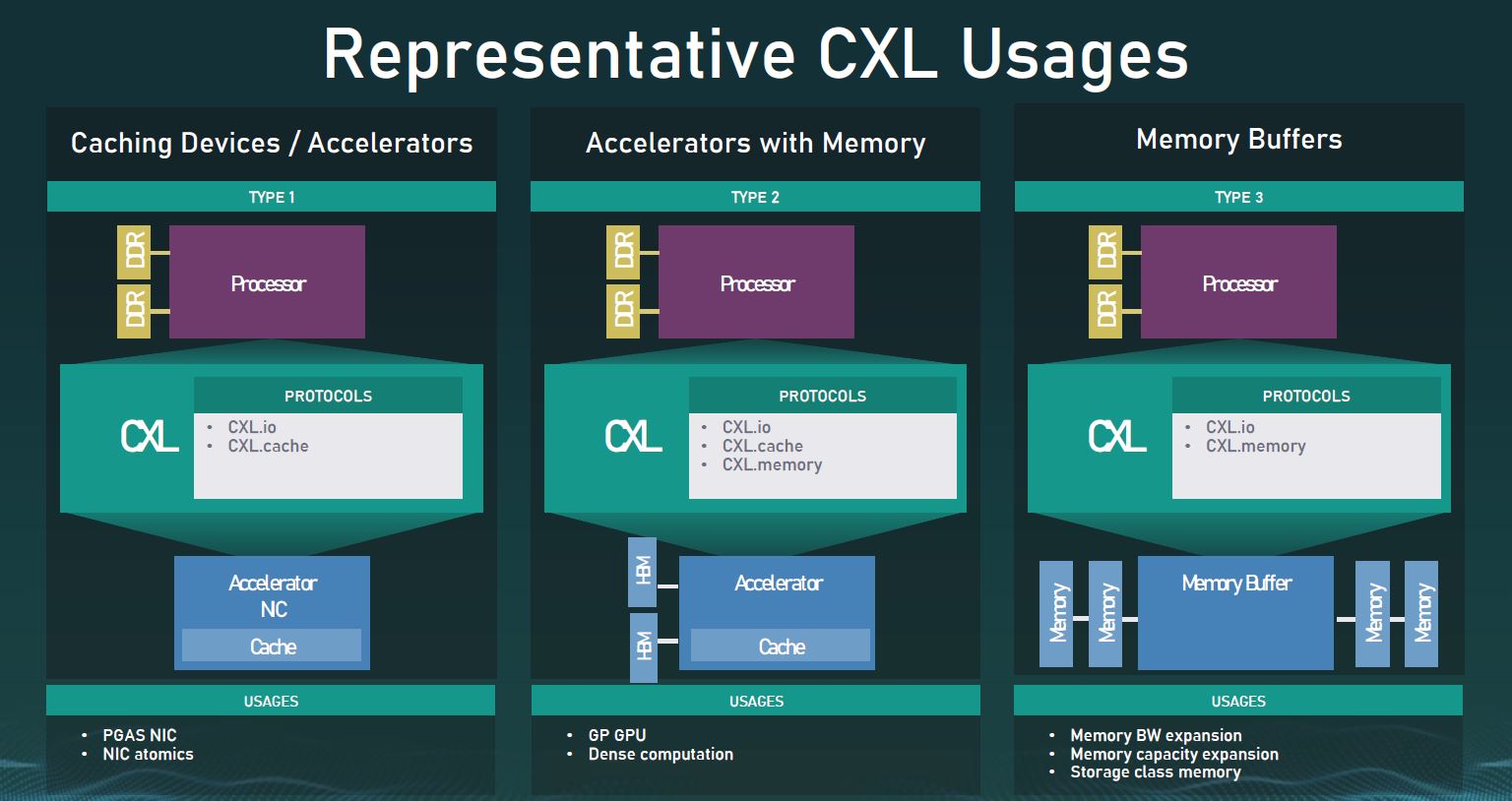

In the CXL 1.0/ 1.1 spec, we effectively got the initial point-to-point use cases for CXL. If, for example, you want a CPU and a GPU, FPGA, or other accelerator to use their respective memory resources more effectively, then CXL 1.0/ 1.1 provides the basic building blocks to make that happen.

Cloud providers are not just thinking about this in terms of a simple point-to-point connection. Instead, they are thinking about how CXL, along with Gen-Z, can get hyper-scale data centers to true disaggregation. We often describe CXL as the interface inside the chassis, while Gen-Z is for external expansion. Today's announcement is what makes that type of deployment practical.

CXL 2.0 Specification Released

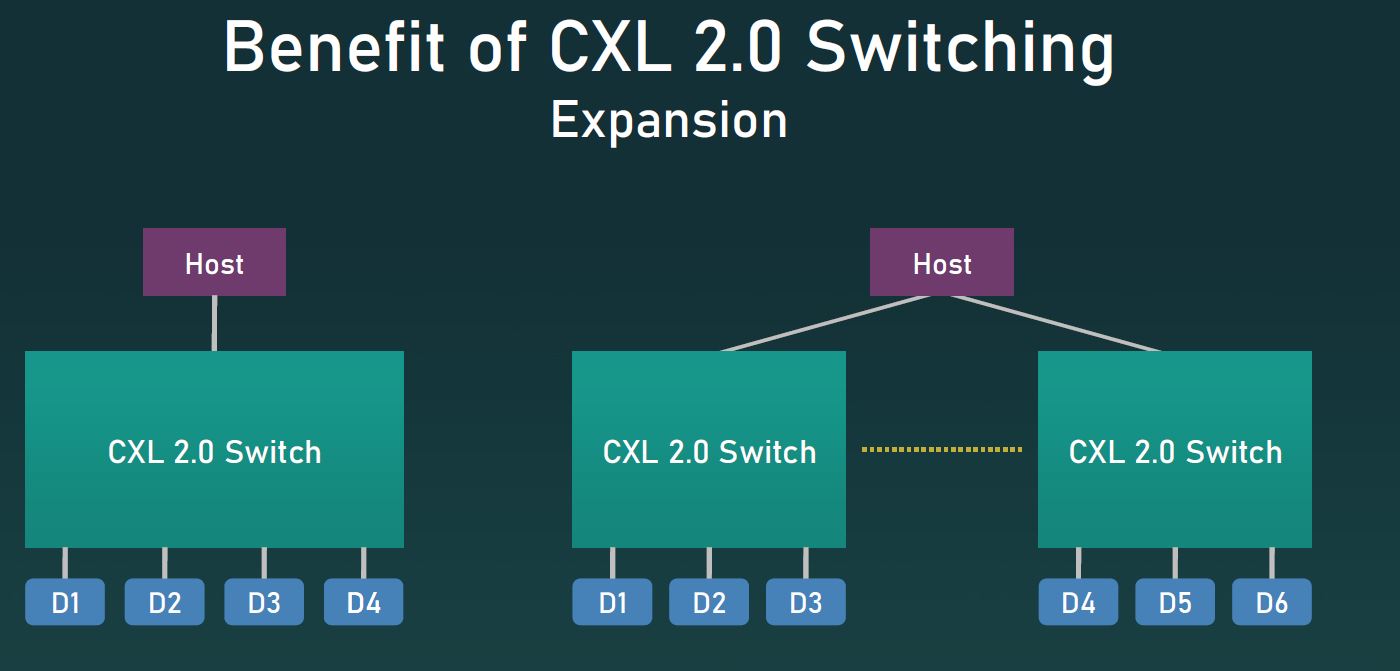

There are two big hardware announcements going along with the new CXL 2.0 specification: CXL 2.0 switches and retimers. The announcement itself focused on switches. Retimers are important since they allow for longer CXL runs, which will matter more as speeds increase and PCB traces struggle to span the physical size of modern systems.

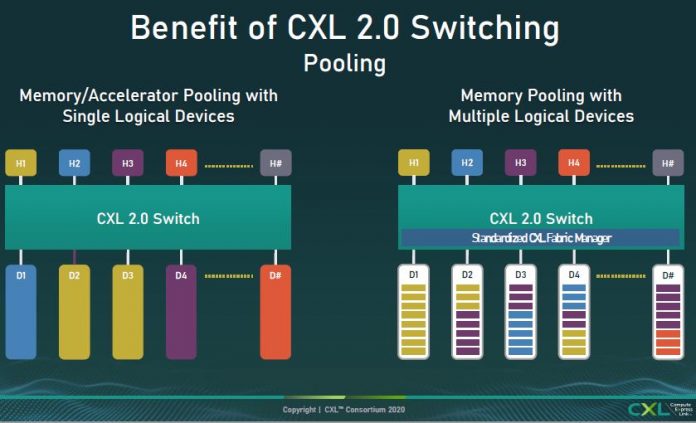

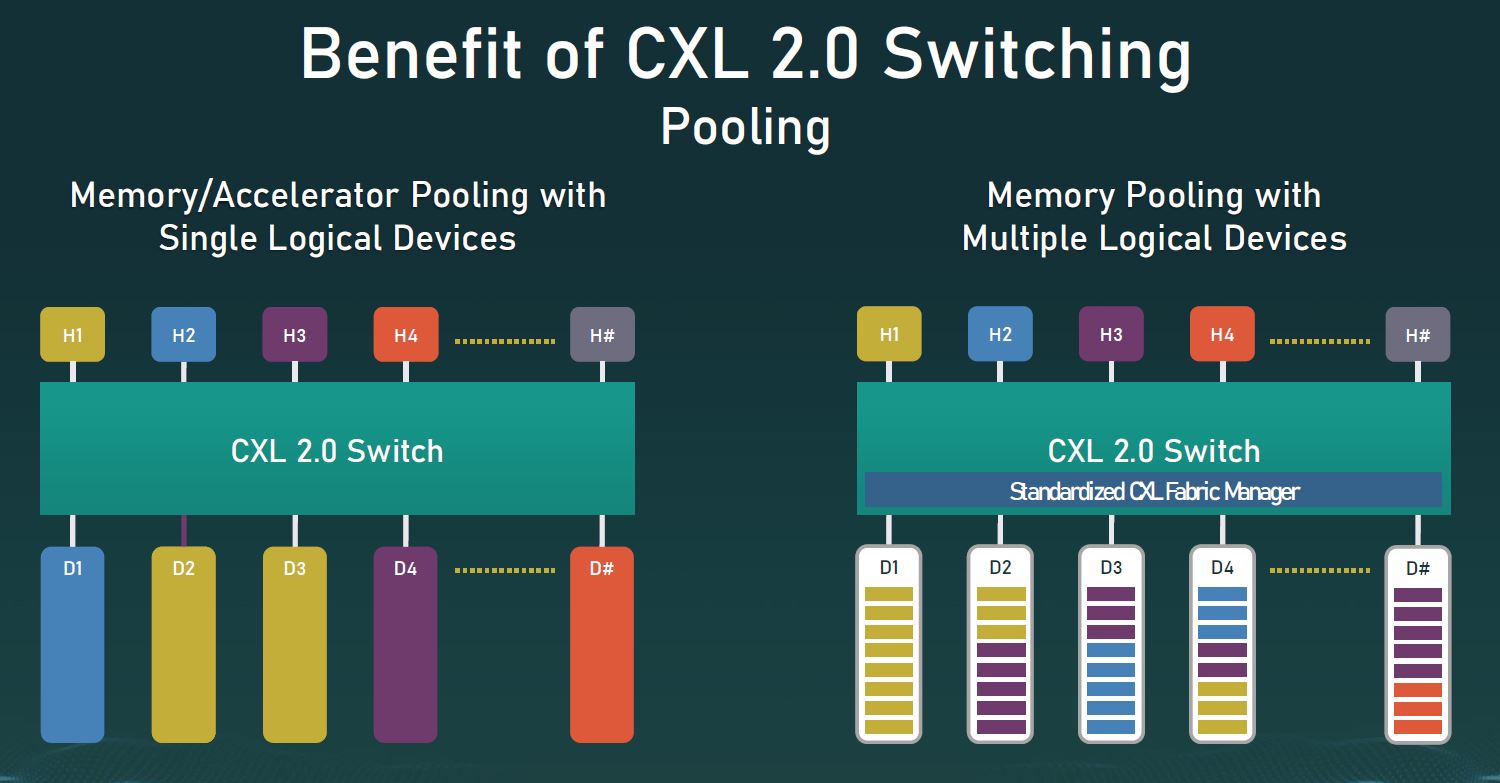

Like a PCIe switch, a CXL 2.0 switch will allow a host's PCIe Gen5/ CXL 2.0 lanes to be oversubscribed across downstream devices. We commonly see PCIe switches today in accelerator systems with GPUs; examples we have covered at STH include the Supermicro SYS-6049GP-TRT and our DeepLearning11 project. They are also used in high-end GPU systems that have NVLink, such as the NVIDIA DGX A100 and the Inspur NF5488M5 we reviewed.
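To make "oversubscription" concrete, here is a minimal sketch of the arithmetic, assuming every link runs at the same per-lane rate (for example, all PCIe Gen5/ CXL 2.0). The function name and the x16-to-four-x16 topology are hypothetical illustrations, not taken from any specific switch.

```python
def oversubscription_ratio(upstream_lanes: int, downstream_lanes: list[int]) -> float:
    """Total downstream lanes divided by upstream (host-facing) lanes.

    Assumes all links run at the same per-lane rate, so lane counts are a
    proxy for bandwidth. A ratio above 1.0 means downstream devices could,
    in aggregate, demand more bandwidth than the host uplink provides.
    """
    if upstream_lanes <= 0:
        raise ValueError("upstream_lanes must be positive")
    return sum(downstream_lanes) / upstream_lanes


# Hypothetical topology: one x16 host uplink fanning out to four x16 accelerators.
ratio = oversubscription_ratio(16, [16, 16, 16, 16])
print(f"{ratio:.0f}:1 oversubscription")  # prints "4:1 oversubscription"
```

The trade-off is the usual one for switched PCIe today: devices rarely all saturate their links at once, so a 4:1 fan-out lets one host reach many more devices than it has lanes for.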

The other common application for these switches is storage. For example, the Facebook/ OCP Lightning platform is a well-known hyper-scale, scale-out NVMe JBOF solution. With CXL switching, multiple hosts can share multiple resources, whether those are accelerators or storage. This is what leads to large disaggregated resource pools in the data center.
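The pooling idea can be sketched as a toy model in which devices behind a switch are bound to whichever host needs them and returned to the pool afterward. This is a minimal, hypothetical illustration: `ResourcePool`, `assign`, and `release` are invented names, and the model does not represent any CXL protocol or management interface.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Toy model of a switch-attached pool: each device is bound to at most one host.

    Hypothetical illustration only; it does not model any CXL protocol or API.
    """
    free: set[str] = field(default_factory=set)
    bound: dict[str, str] = field(default_factory=dict)  # device -> host

    def assign(self, host: str, kind: str) -> str:
        """Bind the first free device (by name order) whose name starts with `kind`."""
        for dev in sorted(self.free):  # sorted() copies, so removing below is safe
            if dev.startswith(kind):
                self.free.remove(dev)
                self.bound[dev] = host
                return dev
        raise LookupError(f"no free {kind} device in pool")

    def release(self, dev: str) -> None:
        """Return a device to the pool so another host can use it."""
        self.bound.pop(dev)
        self.free.add(dev)


pool = ResourcePool(free={"gpu0", "gpu1", "nvme0"})
pool.assign("hostA", "gpu")  # hostA gets gpu0
pool.assign("hostB", "gpu")  # hostB gets gpu1
pool.release("gpu0")         # gpu0 goes back to the pool for reuse
```

The point of the sketch is that devices are no longer fixed to one server: the same accelerator or storage device can serve different hosts over time, which is what makes large shared pools worthwhile.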

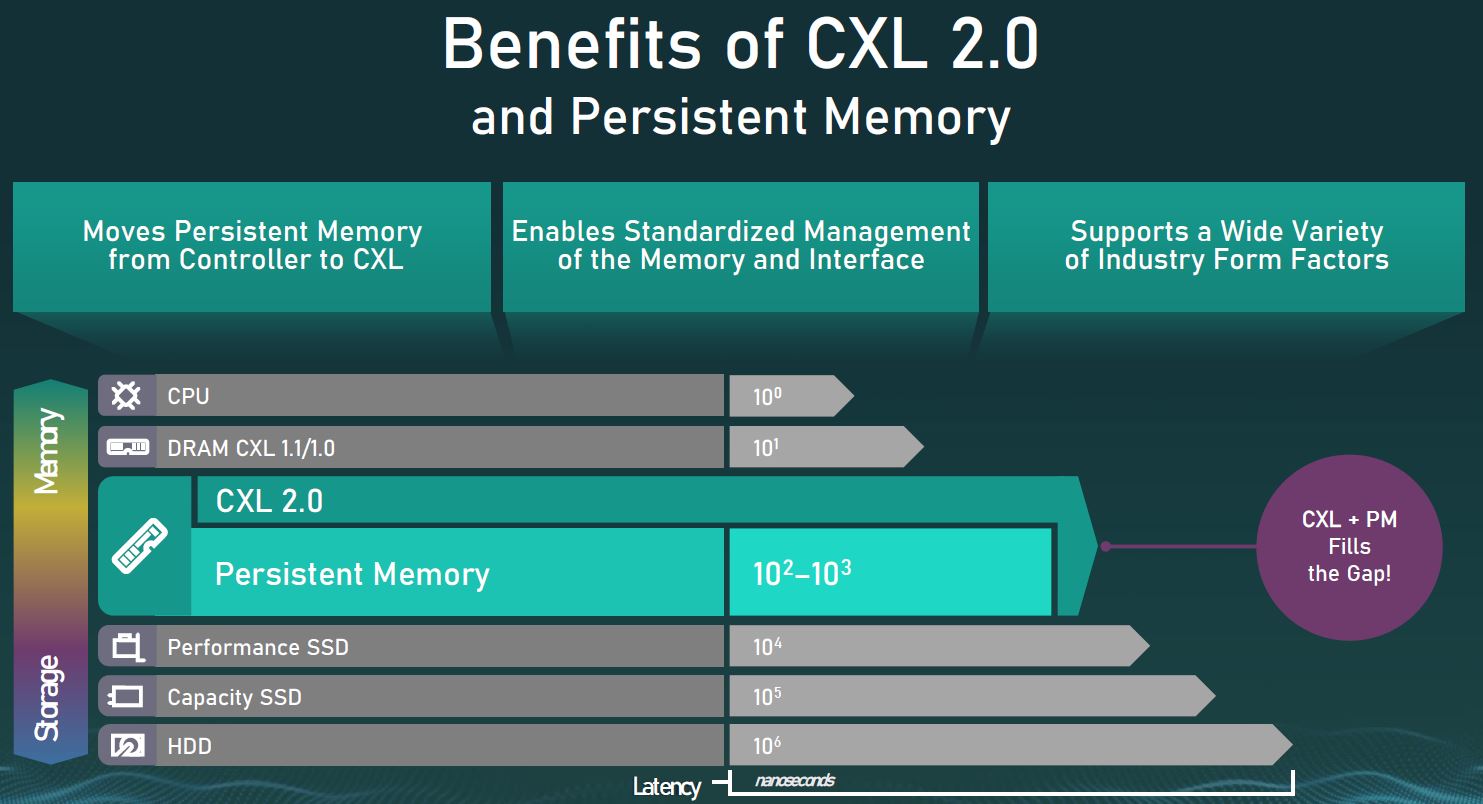

A key challenge for CXL, when doing loads and stores across longer, higher-latency links (especially on a switched/ retimed fabric) and across different types of devices, is guaranteeing that data has actually been stored on a persistent memory device. CXL 2.0 has a specific flow and software layer for this. That is not really a surprise, since Intel has been working on software models for Optane DCPMMs (now Optane Persistent Memory) for some time. That work has made its way to standards bodies so that other persistent memory solutions can interact in a similar way.

Persistent memory matters to large data center operators for reasons beyond data integrity. CXL memory controllers with persistent memory attached can bring persistent storage to a server, or to a pool of servers. Today, for example, we discuss power-loss protection (PLP) on SSDs. In the future, data can be written persistently to Optane (as an example) and then flushed to NAND. Applications get fast sync writes, and most of the DRAM cache and PLP can be removed from NAND SSDs since persistence is handled elsewhere. That translates to large TCO savings in storage.
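The write path described above can be sketched as a toy two-tier store: a sync write is acknowledged once it reaches the persistent-memory tier, and a later step destages it to NAND. This is a minimal sketch under stated assumptions. `TieredStore`, `sync_write`, and `destage` are hypothetical names, both tiers are plain dictionaries, and no real device I/O or power-loss behavior is simulated.

```python
class TieredStore:
    """Toy write path: persistent-memory tier in front of a NAND tier.

    Hypothetical illustration only; dicts stand in for both devices.
    """

    def __init__(self) -> None:
        self.pmem: dict[str, bytes] = {}  # stands in for persistent memory (e.g. Optane)
        self.nand: dict[str, bytes] = {}  # stands in for the NAND SSD

    def sync_write(self, key: str, data: bytes) -> bool:
        # Once the data is in the persistent tier it survives power loss,
        # so the application can be acknowledged without waiting on NAND.
        self.pmem[key] = data
        return True

    def destage(self) -> int:
        """Flush everything buffered in the persistent tier to NAND; return the count."""
        moved = len(self.pmem)
        self.nand.update(self.pmem)
        self.pmem.clear()
        return moved

    def read(self, key: str) -> bytes:
        # The newest copy wins: check the persistent tier before NAND.
        if key in self.pmem:
            return self.pmem[key]
        return self.nand[key]


store = TieredStore()
store.sync_write("block42", b"payload")  # acknowledged from the persistent tier
store.destage()                          # later, moved to NAND in the background
```

In this model the NAND tier never holds the only copy of unacknowledged data, which is why a large DRAM cache backed by PLP capacitors on the SSD itself becomes less necessary.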

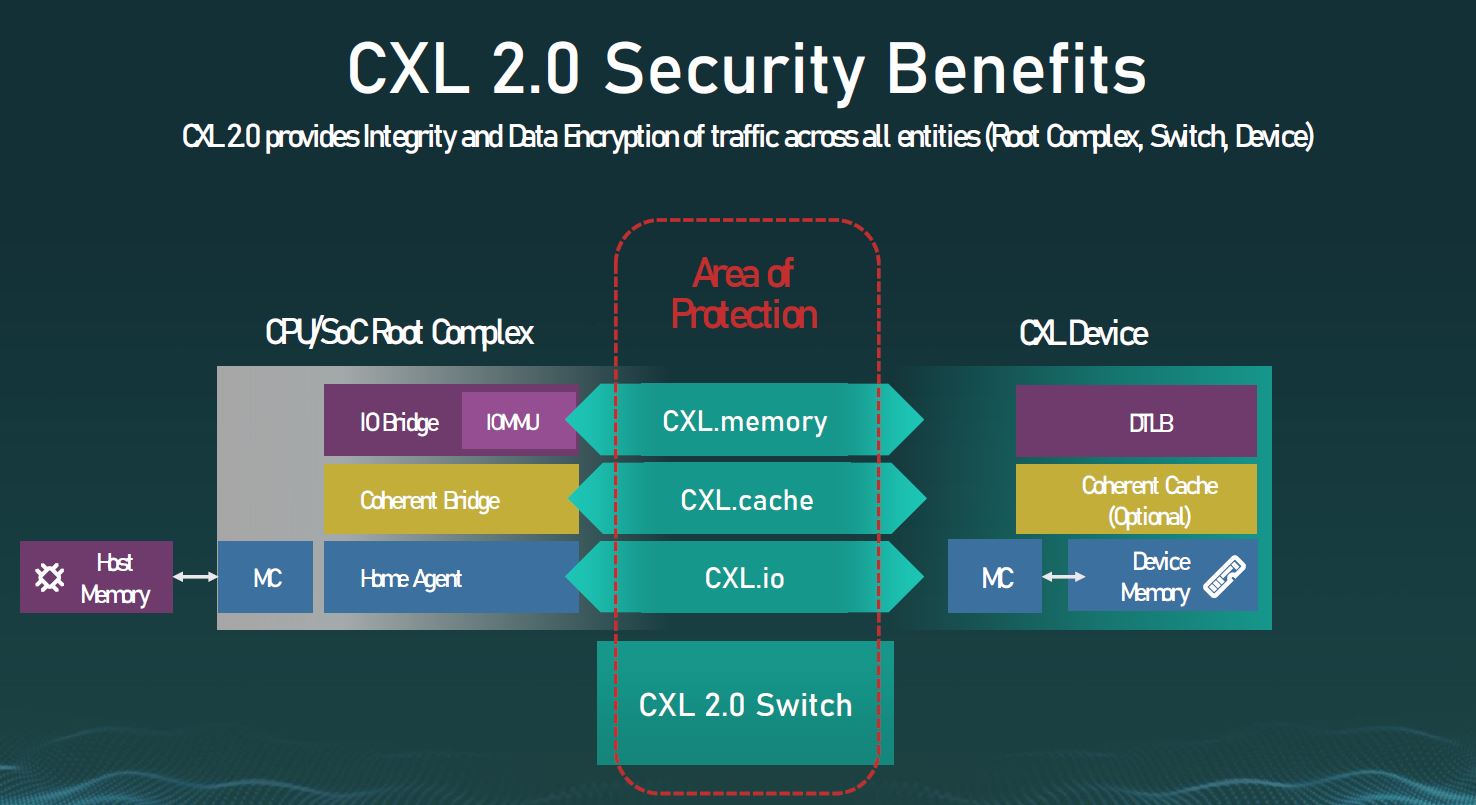

Another optional layer in the CXL 2.0 stack is security. The CXL 2.0 spec adds link-level Integrity and Data Encryption (IDE), designed to keep links from being physically probed and easily snooped. Physical security is important, and CXL recognizes that. One other important note is that CXL devices such as switches and retimers need to support the security spec as well.

Final Words

Overall, this is one of the big announcements that will have major impacts as CXL 2.0 ecosystems are deployed, likely in the 2023-2025 timeframe. Servers purchased in late 2021/ 2022 will likely start to have CXL 1.x as part of their feature set, which will enable some point-to-point use cases. The bigger disaggregation plays happen with the CXL 2.0 generation.