This may be a bit of a misleading title since you will most likely want this on the floor. Still, the NVIDIA DGX Station A100 brings four NVIDIA A100 80GB GPUs to a tower form factor for deployment outside of the data center.

NVIDIA DGX Station A100

Since I know many of our readers will want to just see the specs, here they are:

The basic workstation platform uses a 64-core AMD EPYC CPU. NVIDIA did not specify which model. Since AMD EPYC 7003 “Milan” has been shipping for several weeks, perhaps that is the plan and the announcement is being held up by AMD’s delayed public launch. Otherwise, it is strange not to call out a publicly shipping EPYC CPU. [Update 2020-11-17: NVIDIA confirmed that this is the EPYC 7742 in both the 320GB and 160GB models.]

The “up to 512GB” system memory seems odd as well. Even at one DIMM per channel across eight memory channels, we would expect 256GB LRDIMMs to give EPYC a 2TB memory maximum. Connectivity is also interesting. We get dual 10GBASE-T, which means that a system with this level of performance starts out with networking below even NVIDIA-Mellanox 25GbE. We also get four Mini-DP ports, which we would not expect since the A100 itself has no display outputs.
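The 2TB figure is simple arithmetic. Below is a minimal sketch that assumes eight channels and 256GB LRDIMMs. `max_memory_gb` and its `dimms_per_channel` parameter are hypothetical names used only for illustration. The two-DIMMs-per-channel case applies only if a board actually has the slots for it.

```python
def max_memory_gb(channels: int, dimm_gb: int, dimms_per_channel: int = 1) -> int:
    """Peak system memory in GB for a given DIMM population (hypothetical helper)."""
    return channels * dimms_per_channel * dimm_gb

# Eight channels, one 256GB LRDIMM each: 2048GB, i.e. 2TB.
one_dpc = max_memory_gb(channels=8, dimm_gb=256)
# Two DIMMs per channel would double that, assuming the board has the slots.
two_dpc = max_memory_gb(channels=8, dimm_gb=256, dimms_per_channel=2)
print(one_dpc, two_dpc)  # 2048 4096
```

Either way, the ceiling sits well above the quoted 512GB.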

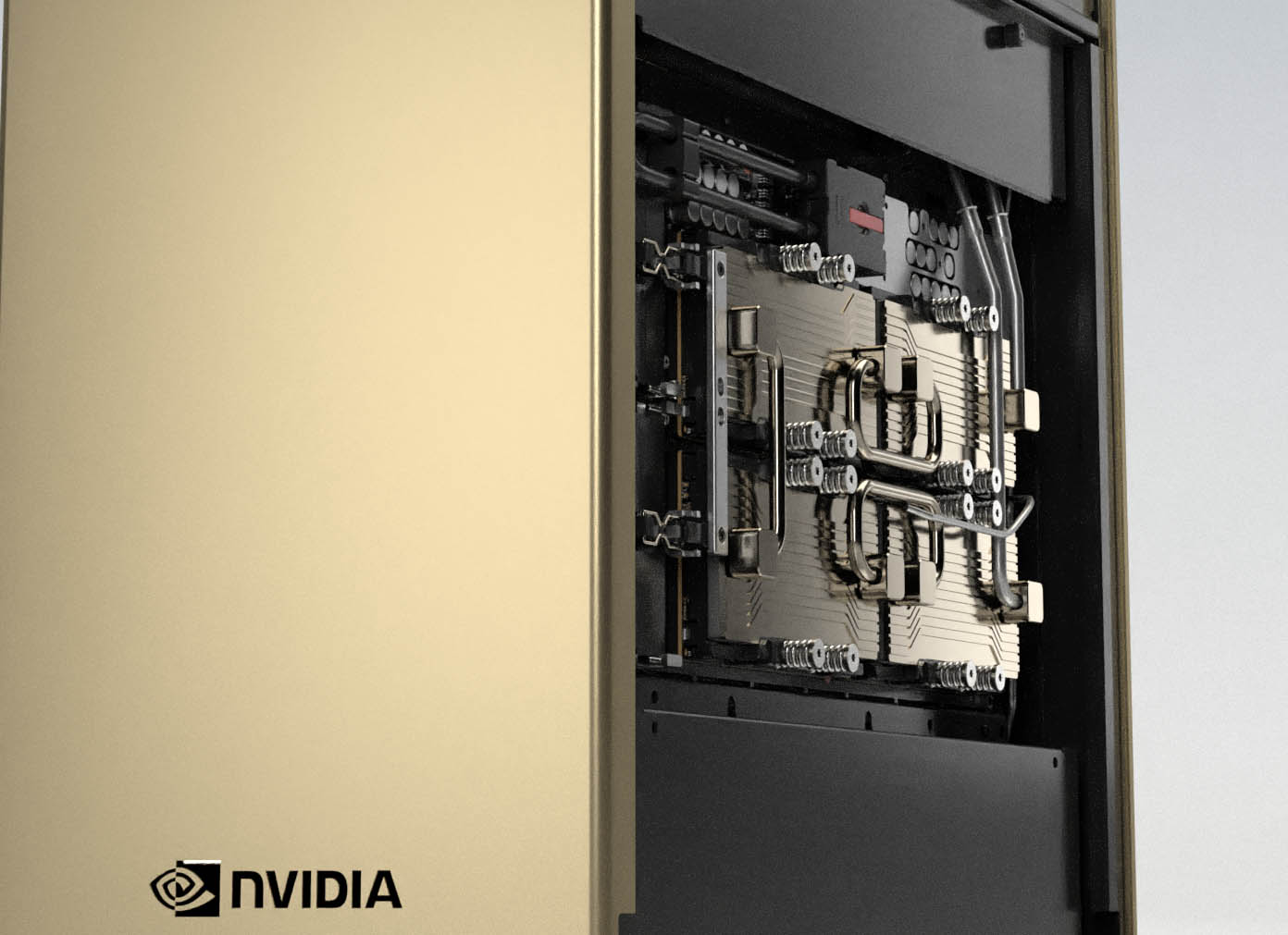

All of that is secondary to the main point of the system: there are four NVIDIA A100 GPUs onboard. NVIDIA has a custom, and very cool looking, water cooling system. That is important because at full power, an NVLink (but not NVSwitch) Redstone 4x GPU platform would be expected to draw close to the limit of a standard 15A 120V wall socket in North America.
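To put the wall-socket limit in numbers, here is a rough sketch of branch-circuit capacity. It assumes the common North American rule of thumb that a load running for hours should stay at or below 80% of the circuit rating. `circuit_watts` is a hypothetical helper. The 208V 20A line corresponds to the dedicated drop suggested in the comments.

```python
def circuit_watts(volts: float, amps: float, continuous_derate: float = 0.8) -> tuple:
    """Return (nameplate watts, continuous-load watts) for a branch circuit.

    continuous_derate assumes the common 80% rule of thumb for loads that
    run for hours at a time; local electrical codes may differ.
    """
    nameplate = volts * amps
    return nameplate, nameplate * continuous_derate

wall = circuit_watts(120, 15)   # standard North American wall socket
drop = circuit_watts(208, 20)   # dedicated 208V 20A circuit
print(wall)  # (1800, 1440.0)
print(drop)  # (4160, 3328.0)
```

The derate factor is a parameter rather than a constant so that it can be set to 1.0 for short bursts or adjusted for other codes.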

With the NVIDIA A100 GPUs, one can also use MIG (Multi-Instance GPU) to partition each GPU, so the system can serve either as a multi-GPU machine or as a multi-instance machine. NVIDIA usually positions these as anchor workstations that can be sold and deployed to a customer for development before racks of A100 GPU servers are deployed.
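Here is a rough sketch of how many MIG instances the four GPUs could expose when every GPU uses the same profile. The profile table is an assumption based on NVIDIA's MIG user guide for the A100 80GB and should be checked against the guide for the driver in use. `MIG_PROFILES_A100_80GB` and `system_instances` are hypothetical names.

```python
# Maximum instances per GPU for common A100 80GB MIG profiles.
# Assumed from NVIDIA's MIG user guide; verify for your driver version.
MIG_PROFILES_A100_80GB = {
    "1g.10gb": 7,
    "2g.20gb": 3,
    "3g.40gb": 2,
    "4g.40gb": 1,
    "7g.80gb": 1,
}

def system_instances(profile: str, gpus: int = 4) -> int:
    """Instances available when every GPU is split with the same profile."""
    return gpus * MIG_PROFILES_A100_80GB[profile]

for name in MIG_PROFILES_A100_80GB:
    print(f"{name}: {system_instances(name)} instances across 4 GPUs")
```

Under these assumptions, the smallest profile yields 28 instances and the full-GPU profile yields four, one per GPU. Mixed profiles on a single GPU are also possible but are not modeled here.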

Final Words

Overall, this is a really interesting design. NVIDIA did not give us pricing, but the previous NVIDIA DGX Station V100 was $69,000. Considering that the raw cost of the GPUs alone on the NVIDIA A100 Redstone 4x GPU baseboard is likely well over $40,000, and that there is a lot of custom work in this one, we would expect similar pricing this time around.

Cliff, the product will launch with an AMD EPYC 7742 CPU (Rome).

Very interesting. I looked at some of the pictures, and it appears to be a completely bespoke motherboard, with PCIe slots on the top for normal graphics cards but mezzanine slots for the GPUs on the back of the board.

The cooling system is a major departure too. It appears to be a sealed refrigerant (phase-change) system with all hard lines, not a typical water cooling system.

Amazing.

The prior DGX Station with the Volta GPUs was built on an Asus X99-E WS/10G motherboard.

Do the buyers really care if it won’t work on a residential circuit? I’d expect that if you’ve got $70k to spend on this system you’ve also got $500 to run a 208V 20A drop.

> Do the buyers really care if it won’t work on a residential circuit? I’d expect that if you’ve got $70k to spend on this system you’ve also got $500 to run a 208V 20A drop.

Actually, yes they do.

Not all researchers have access to a data center or an IT/facilities department willing to install special power at their location. The fact that you can unbox the system, plug it into the wall, boot it, and be up and running training models the very same day is a hugely popular feature of the system with customers. Also, in the era of COVID, more data scientists and researchers are now working from home, which makes this a very friendly option for them.

It also means that researchers and data scientists can start developing their training models while a new data center supercomputer cluster is still being built by their IT department. The unit can also be placed in a research lab or rolled under a desk, again with no special power requirements: just plug and go.

Mac Pro vs DGX Station A100

https://i.postimg.cc/hG0y0qW3/Mac-Pro-vs-DGX-Station-A100.jpg

Pretty sure this one is going to need more than standard household or office power. The CPU has a 225W TDP, and each of the four A100 80GB GPUs has a 400W TDP. The refrigeration system is going to be a bit power hungry too.

The system is easily over 2,000 watts running full throttle. I’m going to wager 2,400 watts or more to let it really run.

The only way it runs on a 120V 15A wall outlet alone is with some serious performance throttling on everything.
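The commenter's estimate can be checked with a quick sum. This minimal sketch uses only the TDP figures quoted above: a 225W CPU and 400W per GPU. The refrigeration unit, fans, memory, and storage are left out because no figures are given for them, so real draw would be higher. The 1,440W budget assumes the common 80% continuous-load rule of thumb for a 120V 15A circuit, and the constant names are hypothetical.

```python
CPU_TDP_W = 225                        # CPU TDP quoted in the comment above
GPU_TDP_W = 400                        # per A100 80GB GPU, as quoted above
GPU_COUNT = 4
CIRCUIT_CONTINUOUS_W = 120 * 15 * 0.8  # 120V 15A at an assumed 80%: 1440W

def compute_tdp_w(cpu_w: float, gpu_w: float, gpus: int) -> float:
    """Sum of CPU and GPU TDPs only; excludes cooling, fans, memory, storage."""
    return cpu_w + gpu_w * gpus

total = compute_tdp_w(CPU_TDP_W, GPU_TDP_W, GPU_COUNT)
over_budget = total - CIRCUIT_CONTINUOUS_W
# Upper bound on a per-GPU power cap if CPU + GPUs alone had to fit the budget.
per_gpu_cap = (CIRCUIT_CONTINUOUS_W - CPU_TDP_W) / GPU_COUNT
print(total, over_budget, per_gpu_cap)  # 1825 385.0 303.75
```

Because cooling and the rest of the platform are excluded, the per-GPU figure is an optimistic ceiling rather than a recommended power limit.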

> The only way it runs on a 120V 15A wall outlet alone is with some serious performance throttling on everything.

The GPUs are optimized to run at a TDP lower than 400W with little performance loss. You can see some of the published benchmark results in the datasheet:

https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/dgx-station/nvidia-dgx-station-a100-datasheet.pdf