When the NVIDIA A100 was launched earlier in 2020, perhaps the most perplexing spec was its 40GB of onboard HBM. The previous generation NVIDIA V100 started at 16GB but eventually had a 32GB model. It seemed like 40GB was a relatively minor bump given we saw a 100% bump just within the NVIDIA V100 generation. Now we have the answer: the NVIDIA A100 80GB.

NVIDIA A100 80GB

The new NVIDIA A100 80GB doubles the memory capacity of the NVIDIA A100. Here we get 5 stacks of 16GB of HBM2e for our 80GB capacity. NVIDIA says it is not using the sixth spot that you can see in the pictures for yield reasons.
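As a quick sanity check on the stack math, here is a minimal Python sketch. The constant and function names are our own for illustration, and the six-site figure is a hypothetical showing what the capacity would be if the unused spot were populated; it does not describe any announced product.

```python
# Figures from the article: 5 active HBM2e stacks of 16GB each.
STACK_CAPACITY_GB = 16
ACTIVE_STACKS = 5
STACK_SITES = 6  # total spots visible on the package, one of them unused


def hbm_capacity_gb(stacks, gb_per_stack=STACK_CAPACITY_GB):
    """Total HBM capacity in GB for a given number of populated stacks."""
    return stacks * gb_per_stack


print(hbm_capacity_gb(ACTIVE_STACKS))  # capacity as shipped
print(hbm_capacity_gb(STACK_SITES))    # hypothetical: all six sites populated
```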

On the pre-briefing, NVIDIA said that the SXM4 NVIDIA A100 80GB is still a 400W card. We also asked about the PCIe card, and NVIDIA told us it would remain a 40GB card at this time. Our thought is to not count out an 80GB card in the future for the PCIe market.

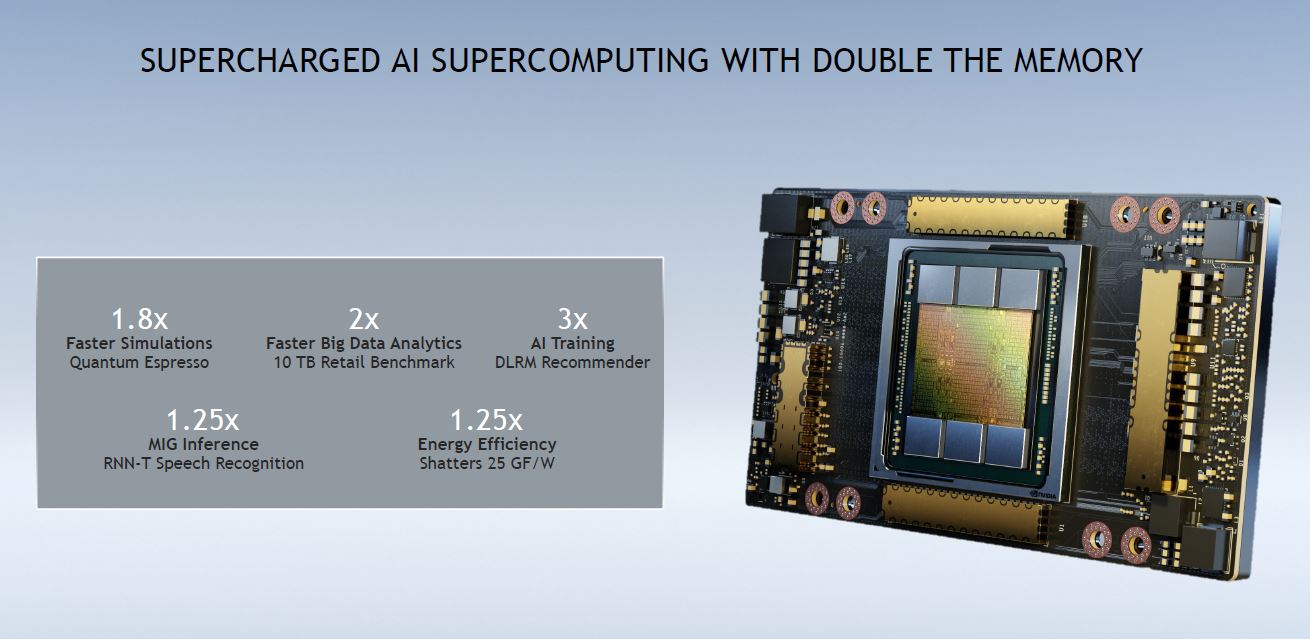

NVIDIA also says that we can get a lot more performance depending on the workload. Obviously, those workloads that are memory-constrained will see better performance with the new model. NVIDIA is selectively choosing which benchmarks to show here.

Something we wanted to note is that these cards are not sold individually the way the PCIe cards are. NVIDIA now sells these on 8x GPU HGX boards, as we saw in our Inspur NF5488M5 Review using the NVIDIA Tesla V100. There is also a 4x GPU NVIDIA A100 HGX Redstone platform that does not use the NVSwitch setup. With these new 80GB units, we now get 1.28TB of memory in the 16x GPU models (two 8x GPU NVSwitch HGX boards), 640GB in the 8x GPU HGX board, and 320GB in the 4x GPU Redstone non-NVSwitch platform.
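The platform totals above are simply GPU count multiplied by 80GB per GPU. A minimal Python sketch of that arithmetic follows. The dictionary labels and helper name are ours for illustration, and the TB figure assumes decimal units (1TB = 1000GB), which is what the 1.28TB number implies.

```python
# Per-GPU memory for the new A100 80GB, as stated in the article.
GB_PER_GPU = 80

# GPU counts per platform, as described above (labels are illustrative).
platforms = {
    "16x GPU (two 8x NVSwitch HGX boards)": 16,
    "8x GPU HGX board": 8,
    "4x GPU Redstone (no NVSwitch)": 4,
}


def total_memory_gb(gpu_count, gb_per_gpu=GB_PER_GPU):
    """Aggregate GPU memory in GB across all GPUs in a platform."""
    return gpu_count * gb_per_gpu


for name, count in platforms.items():
    total = total_memory_gb(count)
    # Decimal conversion: 1TB = 1000GB.
    print(f"{name}: {total} GB ({total / 1000:.2f} TB)")
```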

Final Words

This move is likely a costly one. Adding 40GB of HBM2e to a card is not an inexpensive proposition. At the same time, it completely makes sense. Models are constantly getting larger, so there is a continuous need for more and faster memory next to compute. Whereas the 40GB model provided a modest capacity gain over the V100 generation, the 80GB model is another major leap forward.

For customers of the 40GB model, this may seem like a rough situation. NVIDIA said it has upgrade kits, and since these GPUs are now sold on HGX PCBs, upgrading is easy. At the same time, many OEMs are just starting to get a better supply of 40GB A100s, so customers who purchased the A100 40GB versions are probably going to start looking at what it will take to get the 80GB version.

NVIDIA declined to give pricing, saying it sells its GPUs through channel partners. For our NVIDIA channel partner readers, you will just have to contact your NVIDIA rep.