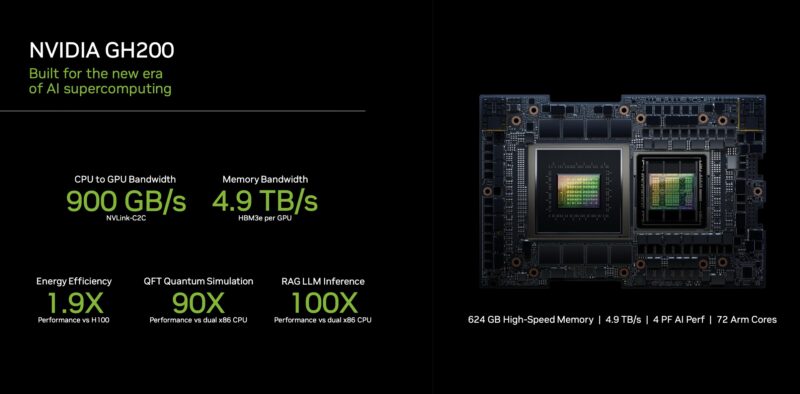

NVIDIA already launched the 141GB HBM3e variant of its H200 platform with the Grace Hopper GH200. Now, we get the more general launch of the H200 141GB ahead of its 2024 availability.

NVIDIA H200 Launched with 141GB of HBM3e at SC23

The NVIDIA H200 has at least one big change. Transitioning to HBM3e memory lets NVIDIA use higher-capacity HBM stacks and get more memory performance at the same time. Memory capacity and bandwidth tend to be major limiting factors in AI and HPC, so this is seen as a way to expand the frontier on the memory side.

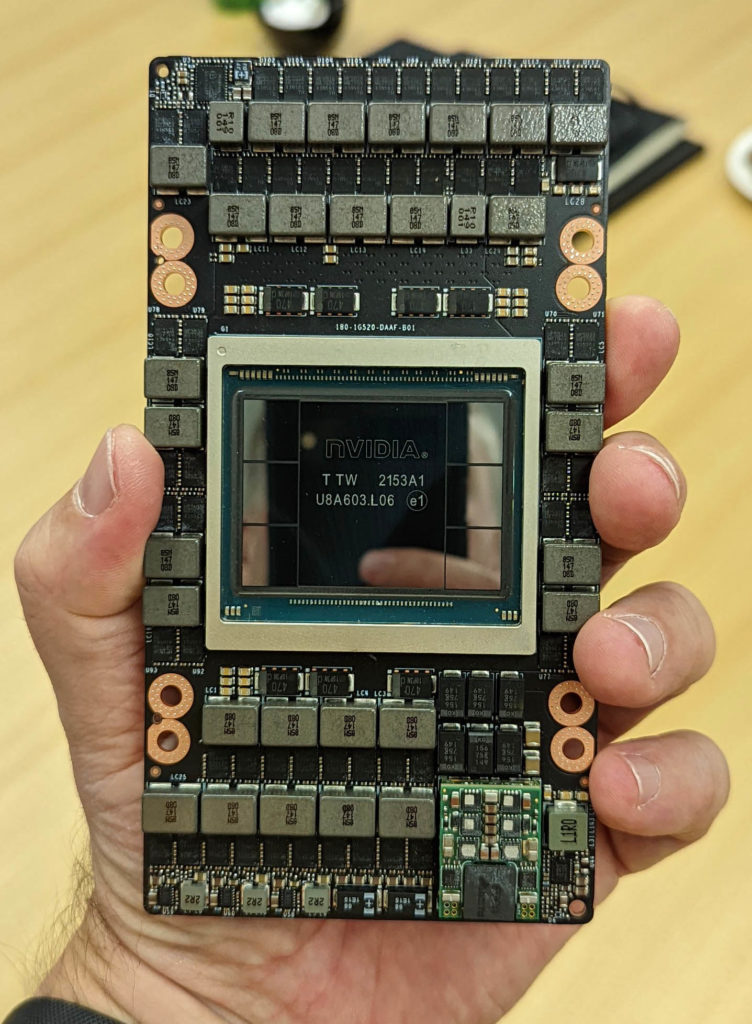

Looking at an H100 SXM5 module, we can see that there are six HBM stacks around the Hopper GPU. 141GB does not divide evenly across six packages, so our guess is that this is really 6x 24GB HBM3e stacks, which would mean 144GB of physical capacity with 141GB enabled. We went into this more about a quarter ago in the NVIDIA H100 144GB HBM3e piece.
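
For those who want to check that guess, here is a quick Python sketch of the arithmetic. The six-stack count comes from the H100 SXM5 module pictured above, and the 24GB per stack figure is our guess rather than a confirmed NVIDIA specification. The function names are just for illustration.

```python
# Assumption: six HBM stacks, as seen on the H100 SXM5 module.
STACKS = 6
ADVERTISED_GB = 141  # capacity NVIDIA quotes for the H200


def even_split(total_gb, stacks=STACKS):
    """Return the per-stack capacity in GB if total_gb divides evenly, else None."""
    return total_gb // stacks if total_gb % stacks == 0 else None


def physical_capacity(per_stack_gb, stacks=STACKS):
    """Total physical capacity in GB for a given per-stack size."""
    return per_stack_gb * stacks


# 141GB cannot be split into six equal whole-GB stacks.
print(even_split(ADVERTISED_GB))

# Assumption: 24GB HBM3e stacks (our guess, not a confirmed spec).
guessed_total = physical_capacity(24)
print(guessed_total, guessed_total - ADVERTISED_GB)
```

Under that guess, the module would carry a few gigabytes more than the advertised figure, which lines up with the 144GB number we discussed previously.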

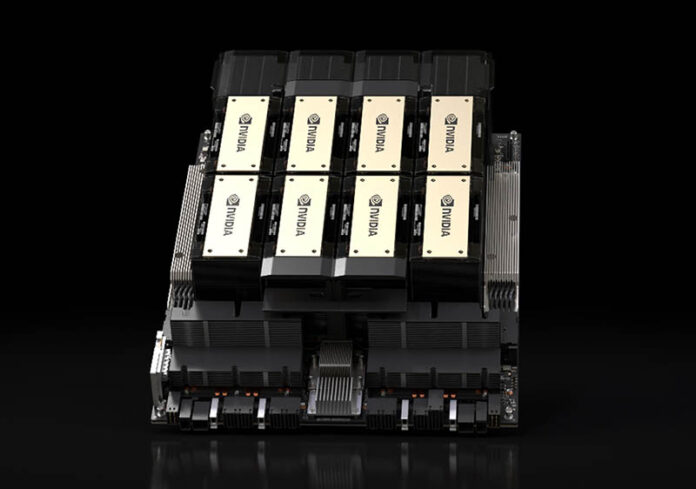

These H200 models are said to be in production now and should be with customers next year. These SXM5 modules will be packaged into eight-GPU assemblies and sold to customers paying top dollar.

Of course, NVIDIA already launched the H200 as part of the Grace Hopper GH200, which combines 72 Arm Neoverse V2 cores with the H200 GPU on a single module. That is not to be confused with the Grace Superchip, as the two are not compatible.

It will be exciting to see these next year.

Final Words

Overall, it is awesome to see the refreshed Hopper GPU. For those who are not familiar with this, the A100 was launched at 40GB and then upgraded to 80GB during its refresh. The V100 was launched at 16GB and eventually saw 32GB models. Instead of doubling, we are only getting a 76% uplift in this generation. That may not seem as exciting, but if you purchased an NVIDIA V100 16GB 8-way launch system, each new H200 module now has more HBM than that entire system (141GB versus 8x 16GB = 128GB), for about an 8.8x increase in memory per HGX system.
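
The generational numbers above are easy to reproduce. Below is a small Python sketch that uses only the capacities mentioned in this article; the helper names are ours and purely illustrative.

```python
def uplift_percent(old_gb, new_gb):
    """Percentage increase going from old_gb to new_gb."""
    return (new_gb - old_gb) / old_gb * 100


def ratio(old_gb, new_gb):
    """How many times larger new_gb is than old_gb."""
    return new_gb / old_gb


# Per-GPU capacities quoted in the article, in GB.
V100_LAUNCH, V100_REFRESH = 16, 32
A100_LAUNCH, A100_REFRESH = 40, 80
H100, H200 = 80, 141
GPUS_PER_SYSTEM = 8

print(ratio(V100_LAUNCH, V100_REFRESH))      # V100 refresh doubled capacity
print(ratio(A100_LAUNCH, A100_REFRESH))      # A100 refresh doubled capacity
print(round(uplift_percent(H100, H200), 2))  # H200 refresh uplift in percent

# An 8-way V100 16GB system versus a single H200 module.
print(GPUS_PER_SYSTEM * V100_LAUNCH, H200)

# Per-GPU ratio, which is also the per-system ratio at eight GPUs each.
print(round(ratio(V100_LAUNCH, H200), 1))
```

Since both generations use eight GPUs per HGX system, the per-GPU ratio and the per-system ratio are the same number.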

If folks are wondering why we have been covering liquid-cooled HGX platforms recently, like the Supermicro SYS-821GE-TNHR 8x NVIDIA H100 AI Server, the H200 refresh was one of the reasons, as we expect it will push more folks to liquid cooling. The next full generation of NVIDIA GPUs will see liquid cooling become more mainstream, so our advice to STH readers is to get ahead of the curve.