NVIDIA did something really interesting this week at SIGGRAPH: it announced a new CPU and GPU combination that signals a looming business shift. Specifically, it announced a next-gen GH200 targeting 2024, but with a twist. In doing so, NVIDIA effectively announced a future H100 GPU and signaled that it is demoting Intel and AMD CPUs in the process.

NVIDIA Grace Hopper 2024 Refresh

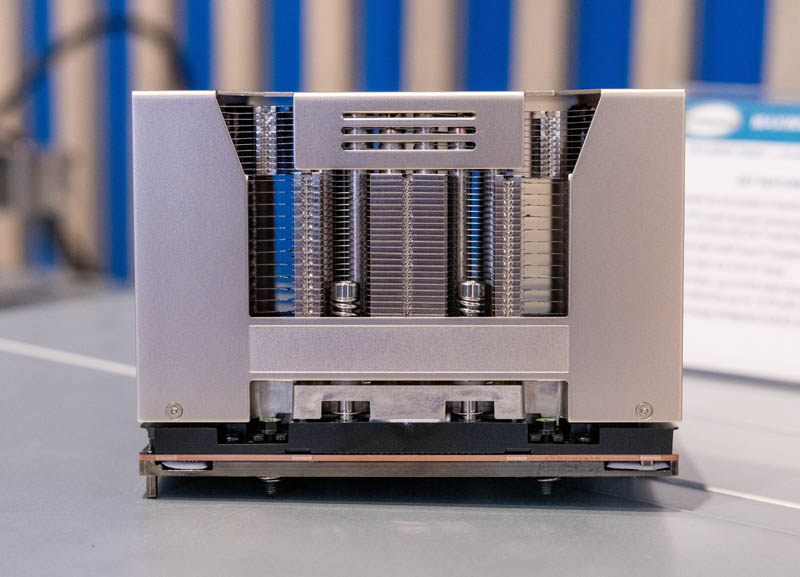

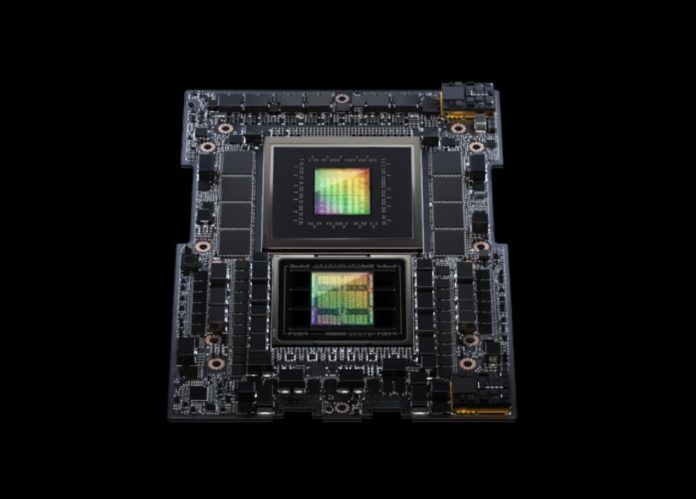

The big news is that NVIDIA has a new “dual configuration” Grace Hopper GH200 with an updated GPU component. Instead of the HBM3 used today, NVIDIA will use an HBM3e-based Hopper GPU.

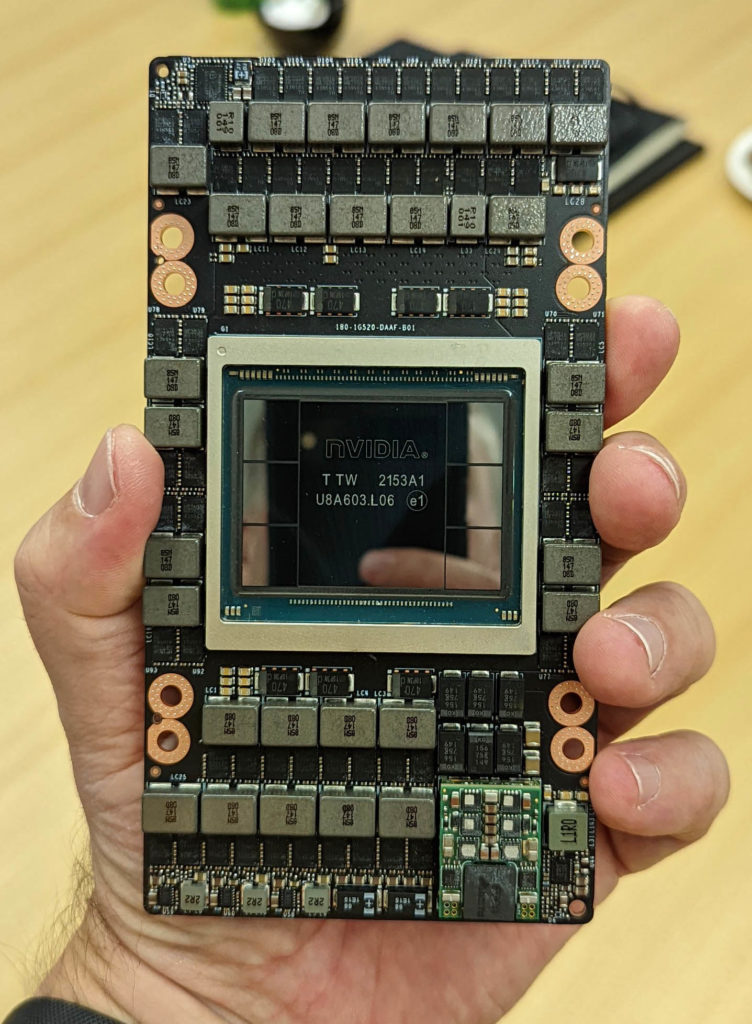

Today’s NVIDIA H100 has 80GB of HBM3 memory. That comes from five active 16GB HBM3 stacks, which gives us 80GB total.

Looking at NVIDIA’s announcement of the next-gen GH200:

“The dual configuration — which delivers up to 3.5x more memory capacity and 3x more bandwidth than the current generation offering — comprises a single server with 144 Arm Neoverse cores, eight petaflops of AI performance and 282GB of the latest HBM3e memory technology.” (Source: NVIDIA)

The 144 Arm cores are easy to get to. A dual configuration, as NVIDIA’s accompanying image shows, has two 72-core Grace Arm CPUs.

Splitting the figures in half gives us two 72-core NVIDIA Grace CPUs. Then there is the question of the 282GB of HBM3e memory. Dividing that by two gives 141GB of HBM3e per module.

That is a bit of a strange number in HBM terms. What we have seen is HBM3 vendors SK hynix and Micron working on upgrading HBM stacks from 16GB each to 24GB.

If we then take that 24GB figure and assume NVIDIA is not using an inactive die for support anymore, that gives us six HBM3e spots for 6x 24GB or 144GB. While that is not 141GB, it is close enough that it feels like that is the configuration NVIDIA is planning on for the H100 side of the next-gen Grace Hopper.
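The arithmetic behind that estimate can be written out as a small sketch. All inputs are figures quoted in this article; the stack layouts are our inference rather than a confirmed NVIDIA specification, and the capacity ratio assumes the "current generation" baseline is a single 80GB H100, which NVIDIA does not spell out. The helper name `hbm_capacity_gb` is purely illustrative.

```python
# Back-of-the-envelope check of the HBM figures discussed in the article.
# Stack counts and sizes are the article's inference, not a confirmed spec.


def hbm_capacity_gb(active_stacks: int, gb_per_stack: int) -> int:
    """Total HBM capacity in GB for a GPU with the given active stacks."""
    return active_stacks * gb_per_stack


# Today's H100: five active 16GB HBM3 stacks.
h100_today_gb = hbm_capacity_gb(5, 16)

# Hypothesized refresh: six active 24GB HBM3e stacks.
refresh_raw_gb = hbm_capacity_gb(6, 24)

# Figures from NVIDIA's dual-configuration announcement.
dual_total_gb = 282
dual_total_cores = 144
modules = 2

per_module_gb = dual_total_gb / modules
per_module_cores = dual_total_cores // modules

# Gap between the inferred raw capacity and the announced per-module figure.
gap_gb = refresh_raw_gb - per_module_gb

# ASSUMPTION: the "current generation" baseline is one 80GB H100.
capacity_ratio = dual_total_gb / h100_today_gb

print(f"H100 today:          {h100_today_gb} GB")
print(f"Inferred refresh:    {refresh_raw_gb} GB raw")
print(f"Announced per module: {per_module_gb:.0f} GB, {per_module_cores} cores")
print(f"Unaccounted capacity: {gap_gb:.0f} GB per module")
print(f"Dual config vs 80GB:  {capacity_ratio:.3f}x")
```

Under those assumptions, the 6x 24GB layout lands within a few gigabytes of the announced per-module figure, and the dual configuration's total works out to roughly 3.5 times an 80GB H100, which lines up with the "up to 3.5x" capacity claim.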

A mid-cycle memory refresh like this is very normal. NVIDIA launched the V100 at 16GB and then did a mid-cycle refresh at 32GB. It then launched the NVIDIA A100 at 40GB and followed with a mid-cycle refresh, the A100 80GB. Given we first saw the NVIDIA H100 in early 2022, NVIDIA appears to be starting its refresh cycle for the H100 with a 144GB or 141GB model that will be featured on the refreshed GH200.

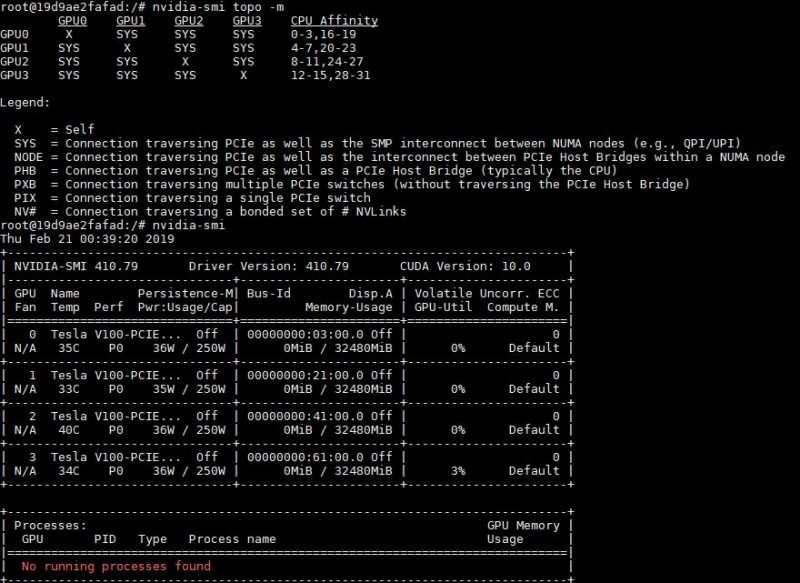

We are focusing on this announcement’s significance for two reasons. First, one of the big reasons that NVIDIA had a large gap between the H100 announcement and availability was x86 CPUs. NVIDIA picked Intel for the DGX H100, its reference HGX H100 8x GPU platform. Very soon thereafter, Intel Sapphire Rapids was delayed due to a security re-spin (that we do not talk about in detail in the industry), so NVIDIA’s PCIe Gen5 CPU was not available to host the H100. AMD’s EPYC Genoa ended up being announced in 2022 but became a 2023 product for most customers, as AMD was rushing to announce ahead of Intel. Meanwhile, NVIDIA had the world’s best GPU without a CPU to pair it with.

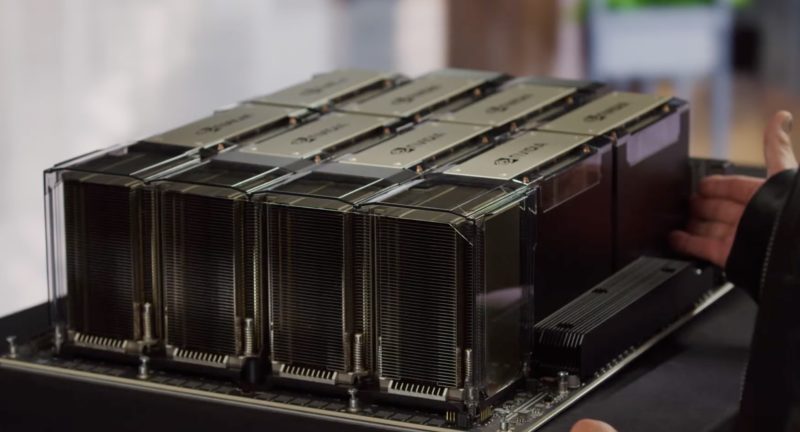

Second, NVIDIA’s announcement of the refreshed NVIDIA H100 on the Grace Hopper platform is significant in its own right. This is the first time that NVIDIA has announced a flagship GPU first on its own Arm CPU platform. The industry has settled on the notion that NVIDIA is becoming the next IBM of yesteryear. It owns the CUDA software stack, and it is building its own GPUs and CPUs, both with integrated memory, along with several layers of interconnect including NVLink and InfiniBand. It is even putting Arm chips and logic into its DPUs. Beyond CUDA, it is pushing software and services through things like NVIDIA AI Enterprise and building its own supercomputer cloud rental service.

Final Words

Currently, NVIDIA is enjoying huge demand and pricing power. What it is showing is that it is increasingly going to favor its own CPUs and ecosystem over others. That makes perfect sense since NVIDIA can say it is open to other architectures, but then have its GPUs be better on its own platforms.

Now that the NVIDIA H100 refresh with HBM3e has been outed on Grace Hopper, the rest of the industry should be on notice. Even if it does not happen in this generation, NVIDIA currently has the power to make better versions of its GPUs available only on its CPUs and charge a large premium for both. From a business perspective, that is an awesome place to be, but it is also going to create an opportunity elsewhere as the margins increase even more.

Is there any word on how tightly coupled the two CPUs will be in the new dual configuration offering?

Nvidia’s announcement just says “a single server with 144 Arm Neoverse cores”, which I’d assume is distinct from just two nodes sharing sheet metal (though the press glamor shot looks an awful lot like two separate motherboards side by side without anything connecting them). But are we talking just a NUMA hop away if you need something on the second chip, or are you going out the NIC and back?

Confusing. A headline about H100 – a Hopper GPU – but an article about Grace Hopper CPU+GPU product.

Have I missed my morning coffee?

I was equally confused. Most of the article was about Grace Hopper.

The confusion is because it’s a linked announcement. There is a new Grace Hopper system with the only change being the upgraded memory on the H100, thus a new version of that GPU.

The new H100 appears to only be announced for the Grace Hopper system. It doesn’t seem that the H100 with the updated memory will currently be sold separately.

I wish that A100/A800/H100/H800 SXM could also have 144GB configs.

Dreaming of having a similar module with an Epyc and an H100 on the same PCB.

And I’ll most likely keep dreaming forever.

Derek is spot on. It’s important news because NVDA is finally using its GPU dominance to promote its full platform (CPU+GPU+DPU+Interconnect). In other words, the shiny new and faster 141GB HBM Hopper is only available when paired with Grace.