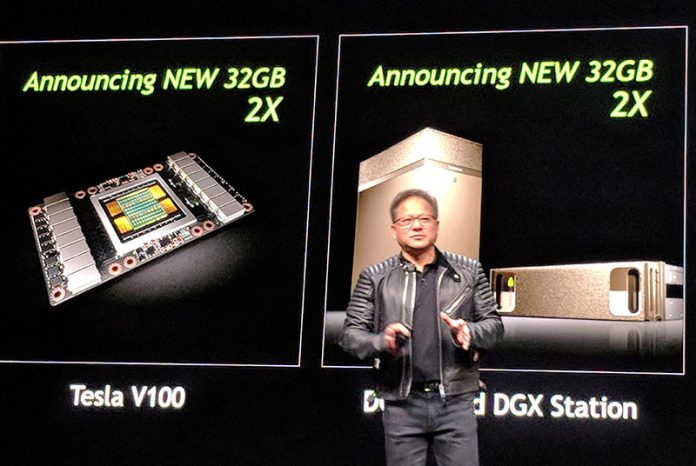

The NVIDIA Tesla V100 32GB is simply the GPU you want right now if you are a deep learning or AI engineer focused on training. At GTC 2018, the NVIDIA Tesla V100 got an upgrade from the 16GB it launched with to 32GB of onboard HBM2 memory. That increase in memory means that one can train larger models faster, something that most AI researchers are keen to do.

Why the NVIDIA Tesla V100 32GB

As an NVIDIA Tesla platform, the V100 utilizes CUDA natively. Alternative architectures are available today, but virtually the entire current deep learning / AI space is built specifically on CUDA. For a researcher, this is the difference between “it just works” and spending hours trying to figure out how to bridge the gap with another architecture. The NVIDIA Tesla V100 was announced at last year’s GTC, and NVIDIA got so far ahead of its competition that its flagship refresh a year later consisted of increasing the memory from 16GB of HBM2 to 32GB of HBM2.

Having 32GB of onboard memory means that more model data can be stored locally on each GPU. In turn, that means fewer GPU-to-host requests and fewer requests from one GPU to another fabric node. Swapping data between host CPUs and the GPU is a relatively slow process, so loading as much data onto GPUs as possible is an architectural preference. For years, higher-end consumer cards such as the NVIDIA GeForce GTX 1080 Ti have been darlings of the AI community, due in no small part to the 1080 Ti having 11GB of onboard memory when the top-end Tesla GPUs had 16GB. With such a small differential, an entire industry has been built on good-enough compute, which NVIDIA has been cracking down on. Moving to 32GB now puts significant separation between the Tesla V100 32GB and the NVIDIA Titan V 12GB parts.
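To put rough numbers on the capacity argument above, here is a back-of-the-envelope sketch in Python. It is not an NVIDIA sizing tool, and every figure in it is an assumption made for illustration: FP32 values (4 bytes each), four per-parameter copies (weights, gradients, and two Adam-style optimizer moments), 20% of the card held back for activations and framework overhead, and the advertised capacity treated as GiB. The helper names `training_state_bytes` and `fits` are hypothetical.

```python
GIB = 1024 ** 3  # bytes per GiB; treating advertised "GB" as GiB is an assumption


def training_state_bytes(num_params, bytes_per_value=4, copies=4):
    """Rough bytes needed for per-parameter training state.

    Assumes FP32 (4 bytes) and 4 copies per parameter: weights, gradients,
    and two optimizer moments. Activations are NOT counted here.
    """
    return num_params * bytes_per_value * copies


def fits(num_params, gpu_mem_gib, reserve_fraction=0.2):
    """True if the training state fits after reserving a fraction of memory.

    reserve_fraction is an assumed allowance for activations and overhead.
    """
    usable = gpu_mem_gib * GIB * (1 - reserve_fraction)
    return training_state_bytes(num_params) <= usable


if __name__ == "__main__":
    for params in (500_000_000, 1_000_000_000, 1_500_000_000):
        need_gib = training_state_bytes(params) / GIB
        print(
            f"{params / 1e9:.1f}B params: ~{need_gib:.1f} GiB of state, "
            f"fits 16GB: {fits(params, 16)}, fits 32GB: {fits(params, 32)}"
        )
```

Under these assumptions, a model with around a billion parameters no longer fits on a 16GB card but still fits on a 32GB one. Real footprints depend heavily on batch size, precision, and framework, so treat this only as an order-of-magnitude check.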

One of the major inhibitors to the adoption of more HBM2 memory, which is faster than GDDR5/GDDR5X memory, has been pricing. The HBM2 memory manufacturers figured out that their memory was being used in $6,000-$10,000 GPUs, high-end FPGAs, and similar applications, and raised prices significantly. It seems as though NVIDIA has figured out how to add another 16GB of HBM2 memory while keeping prices in line with expectations.

As part of today’s announcement, NVIDIA also says that its DGX-1 and DGX Station are being refreshed with the Tesla V100 32GB.