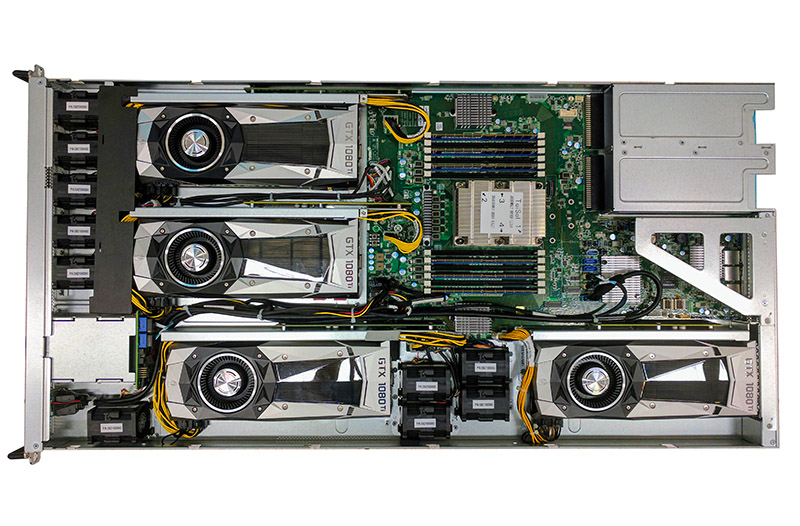

In July 2017 we published a piece about a 10x NVIDIA GeForce GTX 1080 Ti deep learning server we dubbed DeepLearning11 (DeepLearning10 was the 8x GPU version). You can read about it here: DeepLearning11: 10x NVIDIA GTX 1080 Ti Single Root Deep Learning Server. About nine months later, several market factors are weighing heavily on the system, to the point that its component prices have actually gone up, significantly, in just three quarters.

In this article, we are going to discuss component pricing trends impacting these deep learning systems. We will then address how NVIDIA is trying to discourage sales of them. Finally, we will show how these trends impact the total cost of ownership for these types of systems.

Component Pricing

A few factors are playing into this rise in deep learning system costs. Breaking them down by category, we are going to cover four areas:

- NVIDIA GPUs

- DDR4 DRAM

- SSDs

- Intel Xeon E5-2600 V4 CPUs

NVIDIA GeForce GTX 1080 Ti 11GB Pricing

Ever since its release, the NVIDIA GeForce GTX 1080 Ti has been a popular card with the deep learning community. Its 11GB of onboard memory was a significant step up from the GTX 1080’s 8GB, and it has more CUDA cores to boot. Silicon Valley deep learning admins instantly scrambled to buy cards.

Over the past few months, the market has shifted. Whereas in June/July 2017 we were paying about $700 per GTX 1080 Ti, it is now very difficult to purchase a card for under $1000 due to demand. These cards are popular not just for deep learning but also for cryptocurrency mining. As crypto mining operations snapped up cards, companies that use GTX 1080 Tis for their deep learning installations still needed more of them. As a result, demand has far outstripped supply and prices have skyrocketed.

We are using a value of $1200 per GPU as of 9 March 2018, but we have heard from many users and vendors that they have paid in the $1500 range, especially for systems that needed to be built quickly, and that assumes they could find supply at all. Since DeepLearning11 has 10 GPUs, even at $1200 per card the GPU cost alone has gone up by about $5,000 per system.
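As a quick sketch of the GPU arithmetic, the snippet below multiplies the per-card price change by the card count. The $700, $1200, and $1500 figures and the 10-GPU count come from the text above; `gpu_cost_delta` is just an illustrative helper, and the model ignores taxes, shipping, and any reseller markup.

```python
# Per-card GTX 1080 Ti prices cited in the text (USD).
GPU_COUNT = 10            # DeepLearning11 uses 10 GPUs
PRICE_MID_2017 = 700      # approximate June/July 2017 price
PRICE_MARCH_2018 = 1200   # value used in our 9 March 2018 model
PRICE_RUSH_2018 = 1500    # range reported for quickly built systems


def gpu_cost_delta(old_price: int, new_price: int, count: int = GPU_COUNT) -> int:
    """Extra GPU spend per system when the per-card price moves."""
    return (new_price - old_price) * count


if __name__ == "__main__":
    print(gpu_cost_delta(PRICE_MID_2017, PRICE_MARCH_2018))  # 5000
    print(gpu_cost_delta(PRICE_MID_2017, PRICE_RUSH_2018))   # 8000
```

At the $1500 figure some buyers reported, the GPU delta alone reaches about $8,000 per system.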

DDR4 Pricing Following DRAM Trends

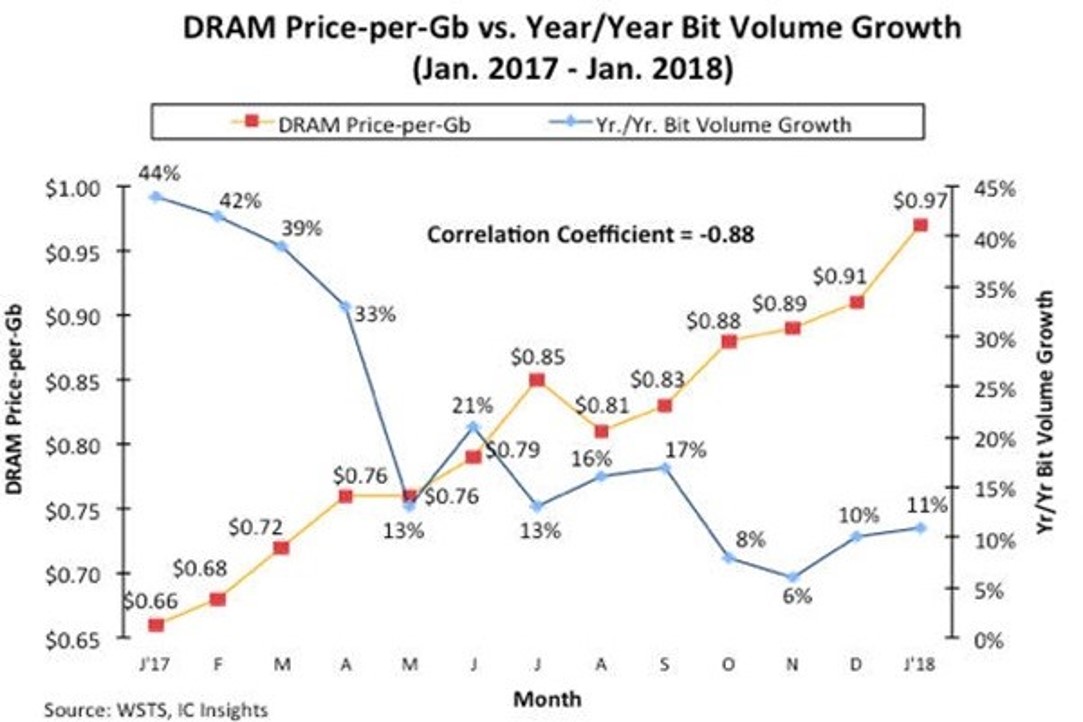

Another key cost driver is DDR4 DRAM pricing. According to IC Insights, from January 2017 to January 2018 we saw the largest spike in per-bit DRAM pricing since the 1970s. In a typical year per-bit pricing drops; in 2017, prices actually increased, and by a large margin.

We covered this trend more extensively in our recent piece Why Server ASPs Are Rising the 2017-2018 DDR4 DRAM Shortage. Checking our model, much of this price increase had already been factored in by June/July 2017. Even so, the 16GB DDR4 DIMMs we were using now cost about $15 more each. With 16 DIMMs, that is $240. While this increase is dwarfed by the GPU side, it still amounts to a 1.5% increase in total system price compared with three quarters ago.
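The DRAM percentage can be checked the same way. This sketch assumes the 1.5% is measured against the June/July 2017 system-only price of $16,430 given later in this article (against the March 2018 total it would be closer to 1.1%); `dram_increase` is an illustrative helper name.

```python
# DRAM figures cited in the text.
DIMM_COUNT = 16                 # 16GB DDR4 DIMMs per system
PER_DIMM_INCREASE = 15          # USD more per DIMM than three quarters ago
SYSTEM_PRICE_MID_2017 = 16_430  # assumed baseline: system-only price, June/July 2017


def dram_increase(dimms: int, per_dimm: float, baseline: float) -> tuple[float, float]:
    """Return the absolute DRAM increase and its share of the baseline system price (%)."""
    delta = dimms * per_dimm
    return delta, 100 * delta / baseline


if __name__ == "__main__":
    delta, pct = dram_increase(DIMM_COUNT, PER_DIMM_INCREASE, SYSTEM_PRICE_MID_2017)
    # prints: $240 more, 1.5% of the mid-2017 system price
    print(f"${delta:.0f} more, {pct:.1f}% of the mid-2017 system price")
```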

SSD Pricing and Rising NAND Costs

Another key trend is that NAND prices rose in 2017. We double-checked pricing on the Intel DC S3710 400GB local drives, and the lowest price we could find was about $50 more per drive than three quarters ago.

While this amounts to a modest net $100 increase, it is yet another example of prices going up in our system. If one is not relying upon fast networked storage, a common model is to use 1.6TB+ NVMe storage in these systems, which would also be affected by rising NAND prices.
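A similar sketch for storage. The text gives a $50 per-drive increase and a $100 net increase; the two-drive count below is inferred from those numbers rather than stated, and `storage_delta` is a hypothetical helper. For an NVMe configuration you would plug in your own quoted per-drive change, since we did not price those drives here.

```python
PER_DRIVE_INCREASE = 50   # USD more per Intel DC S3710 400GB than three quarters ago
NET_INCREASE = 100        # net storage increase cited for the system

# Assumption: drive count inferred as $100 / $50, not stated explicitly in the text.
DRIVE_COUNT = NET_INCREASE // PER_DRIVE_INCREASE


def storage_delta(drives: int, per_drive_increase: float) -> float:
    """Absolute storage cost increase per system."""
    return drives * per_drive_increase


if __name__ == "__main__":
    print(DRIVE_COUNT, storage_delta(DRIVE_COUNT, PER_DRIVE_INCREASE))  # 2 100
```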

The Intel Xeon E5-2600 V4 “Shortage”

Supermicro does have a successor to the SYS-4028GR series: the SYS-4029GP series, based on the Intel Xeon Scalable family. At the same time, production volumes of the SYS-4028GR are still higher, which means most companies are still buying the SYS-4028GR.

In one of the more eyebrow-raising trends, we are hearing that there is a “shortage” of Intel Xeon E5-2600 V4 parts in the market as of Q1 2018. Intel raised pricing on the Xeon Scalable series considerably, and what we have been hearing is that many companies not primarily looking for CPU compute (for example, low-spec appliances, storage, and GPU compute systems) prefer the cost structure of the previous-generation Intel Xeon E5 V4 series.

Because some segments are resisting the transition, we are hearing of a shortage of Intel Xeon E5 parts in the market, which is driving up prices slightly for systems like these, usually by tens of dollars per CPU. The equation is fairly simple: Intel seems to be pushing otherwise unwilling users to its new platform.

We like Intel Xeon Scalable, and it is a major upgrade from the Intel Xeon E5 series, but Intel also raised prices. One consequence of Intel's product segmentation and pricing misses is that players like AMD with EPYC, Marvell/Cavium with ThunderX2, and Qualcomm with Centriq 2400 are gaining footholds in the market.

NVIDIA’s EULA Change for GeForce in the Datacenter

Another major development was a change to NVIDIA’s driver end user license agreement (EULA), which now states under section 2.1.3:

“No Datacenter Deployment. The SOFTWARE is not licensed for datacenter deployment, except that blockchain processing in a datacenter is permitted.” (Source: NVIDIA.)

This was added to the EULA because NVIDIA has an interest in pushing deep learning workloads to its Tesla line, which is part of its datacenter business unit. Moving revenue into the datacenter segment in turn boosts that segment's performance and NVIDIA's share price.

The deep learning teams we have spoken to virtually all use GeForce GTX 1080 Tis or Titan Xps these days, so we asked them about the EULA change. There are real questions about how one would define a “datacenter.” Also, if NVIDIA were to try enforcing this EULA term against companies using GTX 1080 Tis and Titans, it would quickly find hyper-scale companies that buy these GPUs in large volumes turning to other solutions. Overall, this is not a well-drafted line in the EULA: it is difficult to define, and there is virtually no legal enforcement scenario in which NVIDIA ends up better off for having brought the action.

Yet the bigger impact is on buying these systems. We have been told by many NVIDIA customers and partners that this change came with a new set of rules for marketing NVIDIA products. NVIDIA has long pressured its larger OEM partners, such as Dell EMC, HPE, Lenovo, Inspur, Supermicro, and others, not to sell servers with GeForce cards. In the future, features like Tensor Cores will be the effective way to segment the market, but with Pascal and prior generations, segmentation has been a concern. Companies that sell both servers and GPUs (e.g. Gigabyte) are rumored to have been stung by NVIDIA for selling GPUs and servers together, even through thinly veiled system integrator intermediaries.

The new rules of engagement are that SIs and VARs who previously bought GeForce cards for deep learning servers cannot advertise systems with GeForce and are discouraged from selling such systems. If you are looking to replicate a server like DeepLearning11, you can still ask a VAR or an SI to quote the system and they should be able to do so, but it is an open question how long even that practice will last.

Three-Quarters of Price Increases in Deep Learning Servers

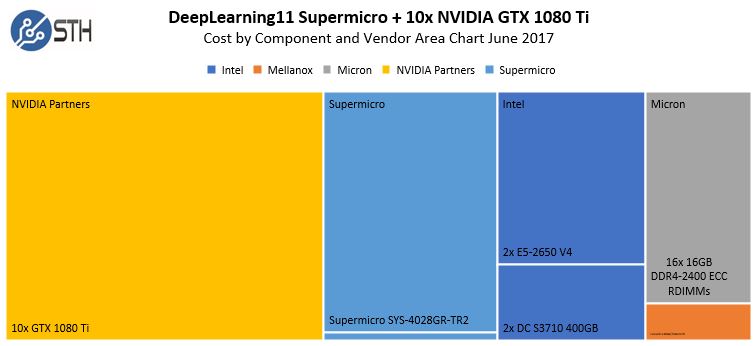

When we put everything together, we see a major shift in terms of pricing for these systems. Here is the June/July 2017 figure for the system only:

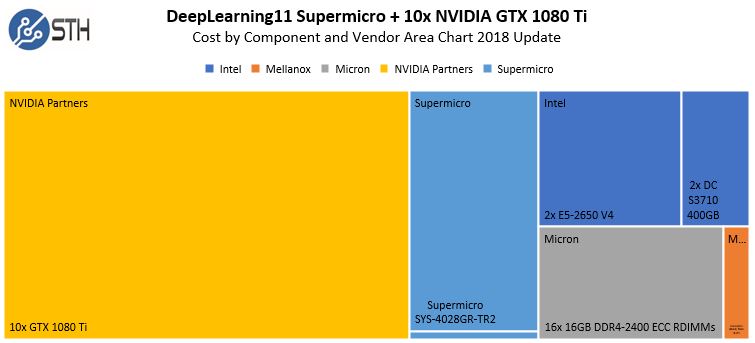

Here is the picture in March 2018:

Prices went up overall. Intel and Micron components in the system saw single-digit percentage increases, while NVIDIA GPUs have gone from 43% of overall system cost to 54%. The same system would cost $22,070 today versus $16,430 three quarters ago, a whopping 34% overall increase. Those selling systems generally expect component costs to decrease by 0.5-1.5% over the life of a 30-day quote rather than increase, so this is putting extreme pressure on sales cycles.
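The percentages above follow from the figures in the text; the sketch below reproduces them, assuming the GPU line item is simply 10 cards at $700 (mid-2017) and $1200 (March 2018). It also converts the change into a compounded monthly rate, which is our own framing (assuming three quarters equals nine months) for comparing against the 0.5-1.5% decrease sellers normally expect over a 30-day quote. The helper names are illustrative.

```python
GPU_COUNT = 10
SYSTEM_2017, SYSTEM_2018 = 16_430, 22_070    # system-only totals (USD)
GPU_PRICE_2017, GPU_PRICE_2018 = 700, 1_200  # assumed per-card prices (USD)
MONTHS = 9                                   # assumption: three quarters = nine months


def pct_change(old: float, new: float) -> float:
    """Simple percentage change from old to new."""
    return 100 * (new - old) / old


def gpu_share(price: float, total: float, count: int = GPU_COUNT) -> float:
    """GPU line item as a percentage of total system cost."""
    return 100 * price * count / total


def monthly_rate(old: float, new: float, months: int) -> float:
    """Compounded monthly percentage change that turns old into new over `months`."""
    return 100 * ((new / old) ** (1 / months) - 1)


if __name__ == "__main__":
    print(f"system: +{pct_change(SYSTEM_2017, SYSTEM_2018):.0f}%")             # +34%
    print(f"GPU share: {gpu_share(GPU_PRICE_2017, SYSTEM_2017):.0f}% -> "
          f"{gpu_share(GPU_PRICE_2018, SYSTEM_2018):.0f}%")                      # 43% -> 54%
    print(f"monthly: +{monthly_rate(SYSTEM_2017, SYSTEM_2018, MONTHS):.1f}%")  # +3.3%
```

A roughly 3.3% monthly rise, compounded, runs in the opposite direction from the small decline a 30-day quote normally bakes in.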

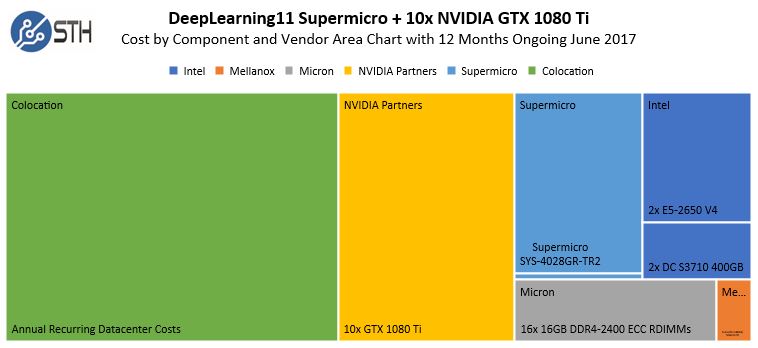

When we add in colocation costs, which we are keeping constant, here is what a 12-month TCO looked like in June/July 2017:

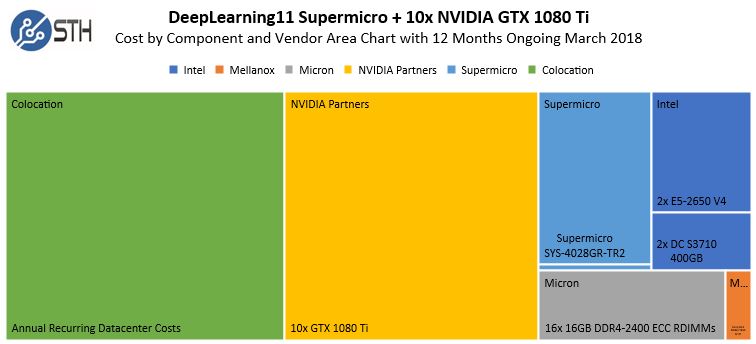

Here is the view three quarters later:

On an overall TCO basis, this is still about 19% higher than nine months ago.
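Because colocation is held constant, it dilutes the hardware increase: the 34% system-level jump shrinks to about 19% once the same fixed cost is added to both years. The text does not state the colocation figure, so this sketch solves for the constant cost implied by the ~19% number. The result (roughly $13,000 over 12 months) is a back-of-envelope inference under that assumption, not a figure from our model, and the helper names are illustrative.

```python
SYSTEM_2017, SYSTEM_2018 = 16_430, 22_070  # system-only totals (USD)


def tco_increase_pct(old_system: float, new_system: float, fixed: float) -> float:
    """Percent change in 12-month TCO when only the hardware price moves."""
    old_tco, new_tco = old_system + fixed, new_system + fixed
    return 100 * (new_tco - old_tco) / old_tco


def implied_fixed(old_system: float, new_system: float, tco_pct: float) -> float:
    """Solve (new + F) / (old + F) = 1 + tco_pct/100 for the constant cost F."""
    ratio = 1 + tco_pct / 100
    return (new_system - ratio * old_system) / (ratio - 1)


if __name__ == "__main__":
    # Assumption: the ~19% TCO figure is exact and colocation is the only fixed cost.
    fixed = implied_fixed(SYSTEM_2017, SYSTEM_2018, 19)
    print(f"implied constant 12-month costs: ~${fixed:,.0f}")
    print(f"check: {tco_increase_pct(SYSTEM_2017, SYSTEM_2018, fixed):.1f}%")  # 19.0%
```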

In both sets of charts, you can see how the yellow NVIDIA Partners segment has grown. You can also see that the orange Mellanox component has stayed relatively consistent. Mellanox is under heavy activist investor pressure to better capitalize on its dominant position in the InfiniBand space.

Final Words

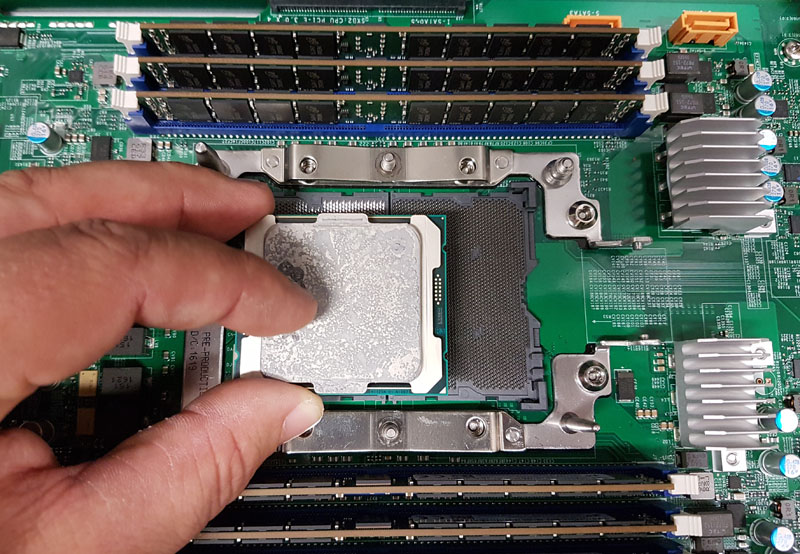

Between the CPU and GPU shortages and the pricing trends for NAND and DRAM, costs for DeepLearning11 have gone up, and by a significant degree. We are also assuming here that you are building these systems yourself (they take us about 45 minutes each), since even getting the systems can be difficult. NVIDIA is discouraging sales of these systems to push companies toward its datacenter products, and limited availability makes buying them through resellers tough. Resellers also have to protect their quotes and lead times against rising prices and parts shortages, which means that if you are buying assembled systems, there is a good chance you are going to pay more than the figures we have here.

Lead times are way up. If you want a cluster, sometimes you need to get Titan Xps instead, which sucks.