Sometimes you just want to go fast. We have been discussing 400Gbps networking recently in the context of it being a new capability that PCIe Gen5 x16 slots can handle. Today, we are going to take a look at setting that up using NDR 400Gbps Infiniband/ 400GbE.

A special thanks to PNY. We did not know this a year ago, but PNY not only sells NVIDIA workstation GPUs but also its networking components. We were working on a 400GbE switch, and in discussions, it came up that we should review these cards as part of that process. That may sound easy enough, but it is a big jump from 100GbE networking to 400GbE and the MCX75310AAS-NEAT cards are hot commodities right now because of how many folks are looking to deploy high-end networking gear.

NVIDIA ConnectX-7 400GbE and NDR Infiniband Adapter MCX75310AAS-NEAT Adapter Hardware Overview

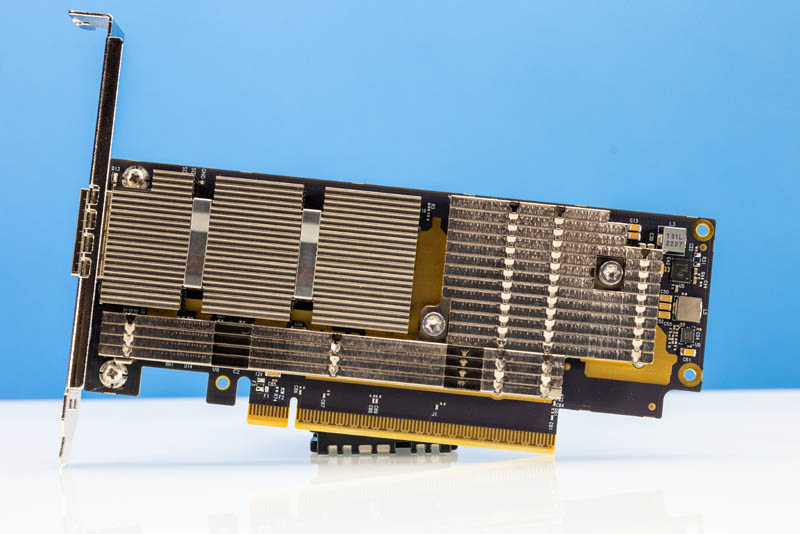

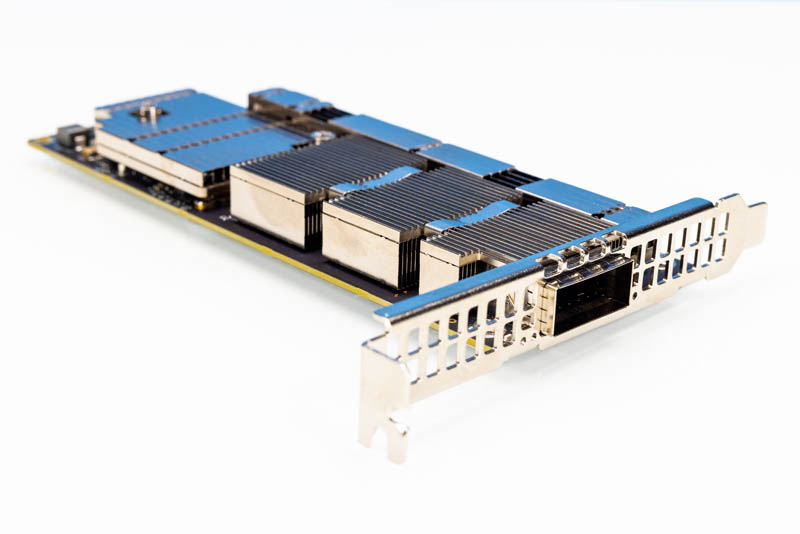

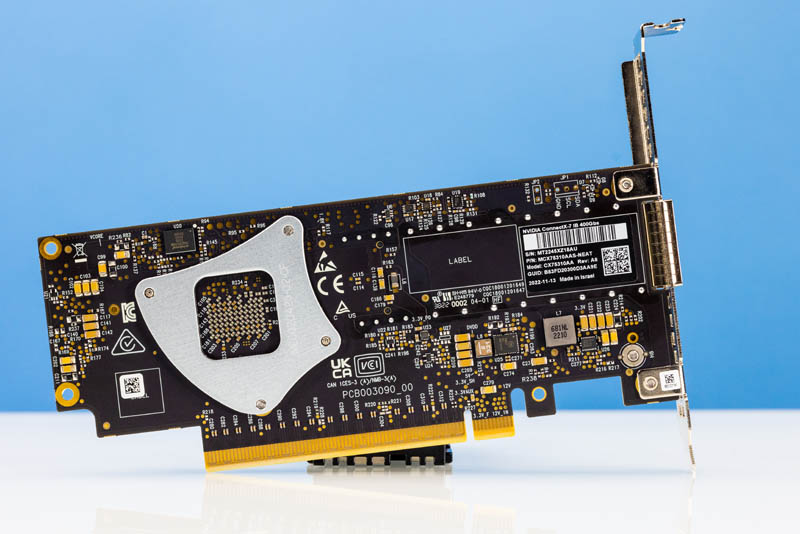

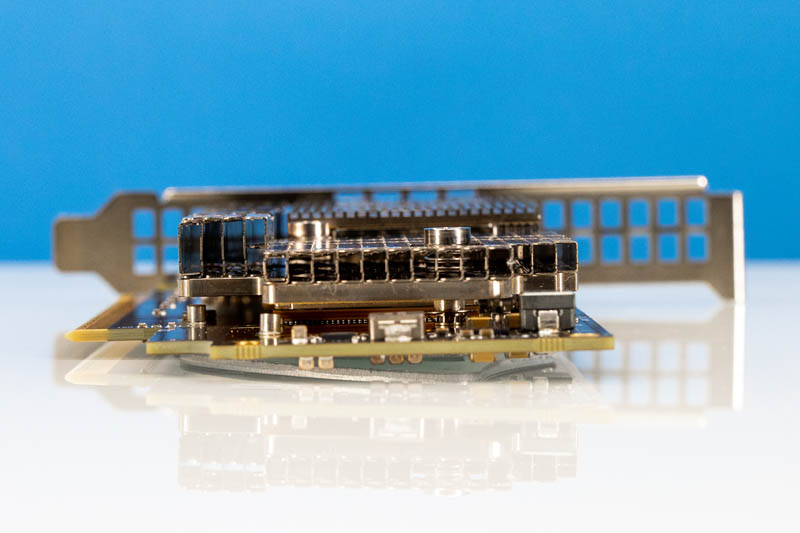

The ConnectX-7 (MCX75310AAS-NEAT) is a PCIe Gen5 x16 low-profile cards. We took photos with the full-height bracket but there is a low-profile bracket in the box.

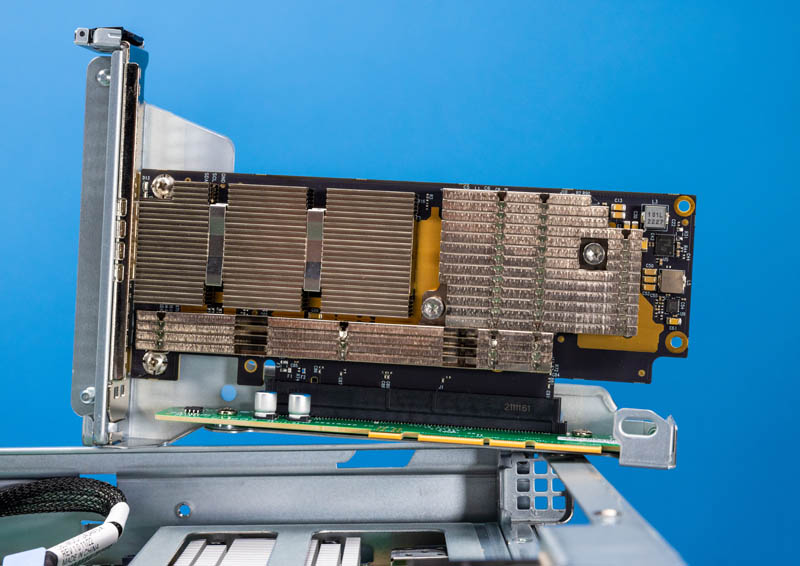

Something that should make folks take notice is the size of the cooling solution. Just to give some sense of how early we are in this, we looked up the power specs on the ConnectX-7 and could not find them. We asked NVIDIA through official channels for the specs. We are publishing this piece without them since it seems as though NVIDIA is unsure of what it is at the moment. It is a bit strange that NVIDIA does not just publish power specs on these cards in its data sheet.

Here is the back of the card with a fun heatsink backplate.

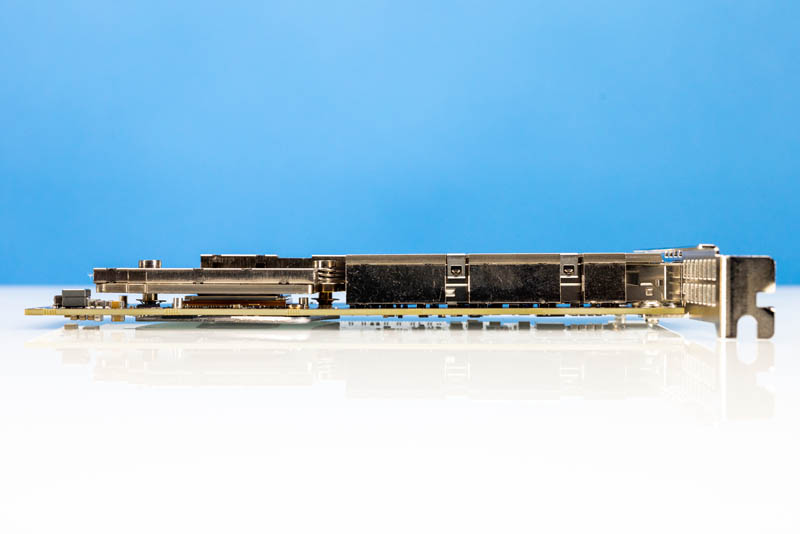

Here is a side view of the card looking from the PCIe Gen5 x16 connector.

Here is another view looking from the top of the card.

Here is a view looking from the direction airflow is expected to travel in most servers.

For some quick perspective here, this is a low-profile single-port card running at 400Gbps speeds. That is an immense amount of bandwidth.

Installing a NVIDIA ConnectX-7 400G Adapter

With a card like this, one of the most important aspects is getting it installed in a system that can utilize the speed.

Luckily we installed these in our Supermicro SYS-111C-NR 1U and Supermicro SYS-221H-TNR 2U servers, and they worked without issue.

The SYS-111C-NR made us appreciate single-socket nodes since we did not have to avoid socket-to-socket when we set up the system. At 10/40Gbps speeds and even 25/50Gbps speeds, we hear folks discuss traversing socket-to-socket links as performance challenges. With 100GbE, it became more acute and very common to have one network adapter per CPU to avoid traversal. With 400GbE speeds, the impact is significantly worse. Using dual-socket servers with a single 400GbE card it might be worth looking into the multi-host adapters that can connect directly to each CPU.

OSFP vs QSFP-DD

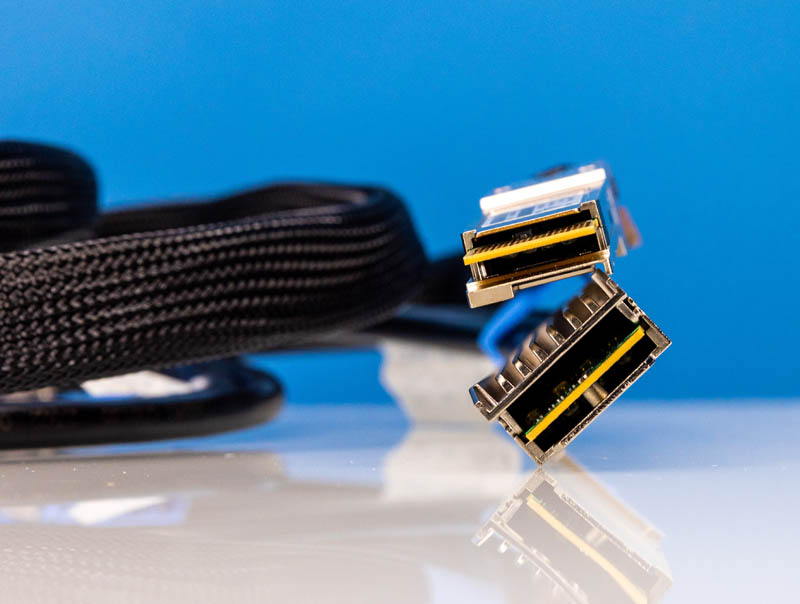

Once the cards were installed, we had the next challenge. The cards use OSFP cages. Our 400GbE switch uses QSFP-DD.

The two standards are a bit different in terms of their power levels and physical design. One can adapt QSFP-DD to OSFP, but not the other way around. If you have never seen an OSFP optic or DAC, they have their own thermal management solution. QSFP-DD on top uses heatsinks on the QSFP-DD cages. OSFP often includes the cooling solution which we have on our lab’s OSFP DACs and optics.

That brought us to a few days of panic. The $500 Amphenol OSFP DACs as well as the OSFP to QSFP-DD DACs on hand utilized the heatsink cooling solution. We sent everything off to the lab to get hooked up only to get a note back that the OSFP ends of the DACs did not fit into the OSFP ports of the ConnectX-7 cards because of the direct cooling on the DACs.

The reason NVIDIA is using OSFP is likely because of the higher power level. OSFP allows for 15W optics while QSFP-DD is 12W. Early in adoption cycles, having higher power ceilings allows for easier early adoption which is one of the reasons there are things like 24W CFP8 modules. On the other hand, we already have looked at FS 400Gbase-SR8 400GbE QSFP-DD optics so that market is moving.

A few calls later, we had cables that would work. Our key takeaway whether you are using ConnectX-7 OSFP adapters today, or if you are reading this article 5 years from now when they become inexpensive second-hand gear, is to mind the heatsink size on the OSFP end you plug into the ConnectX-7. If you are used to QSFP/ QSFP-DD where everything plugs in and works, there is a bigger challenge with running into silly issues like connector sizes. On the other hand, if you are a solution provider, this is an opportunity for professional services support. NVIDIA and resellers like PNY also sell LinkX cables which would have been an easier route. That is a great lesson learned.

Also, thank you to the anonymous STH reader who helped us out with getting the cables/ optics for a few days on loan. They wished to remain anonymous since they were not supposed to loan the 400G cables/ optics they had.

Next, let us get this all setup and working.

This just puts into perspective the challenges that Netflix had getting 400Gb/s per server in 2020. I would love to see how current CPU and 400Gbe NICs can accelerate this along with PCIe 5 and DDR5. https://people.freebsd.org/~gallatin/talks/euro2021.pdf

The cx-7 nics that are osfp based require a “flat top” connector vs a “fin top” connector that is more commonly found.

Were you able to use a DAC to connect the QSFP-DD switch to the OSFP nic? If so, how was that possible due to the 50G lanes that QSFP-DD uses vs the 100G lanes that these nics require?

I was told by the Nvidia folks that a DAC would not work in this scenario. We were told to use a combination of transceivers and fiber to connect QSFP-DD to OSFP.

I have a couple of threads in the forum where we discussed this.

I was able to connect 2 of those same 400G nics back2back using an OSFP DAC (both sides being flat top). But we were still not able to find a DAC solution for QSFP-DD switch to OSFP nic.

Any pointers would be appreciated!

That’s terrible. I’ve only seen the fin top OSFP’s. Bad design NVIDIA.

jpmomo I’m reading that as they used DACs and optics and they’re showing QSFP-DD optics in the switch. They don’t have a Quantum-2 switch so they’re probably DAC’n for the IB and fiber for the Eth.

Nate77…Thanks for the observation. I am using DAC in the context of a single cable with the transceivers attached at each end. Contrast this with the connection that is a single QSFP-DD transceiver on the switch end….then a MPO-12/APC fiber….then an OSFP Flat-top 400G transceiver.

The critical part in the non-DAC connection (transceiver….fiber…transceiver) is the transceiver Gearbox IC on the first transceiver (QSFP-DD side).

This will do the necessary conversion from 2x50G electrical to 100G optical (both using PAM4.)

regarding the issue of Flat-top vs Fin-top, the explanation that I was given was to maintain the standard pci slot width (from the pci slot cover perspective). They take care of the cooling on the NIC itself.

DiHydro…I agree that this should be a lot easier for Netflix to boost their performance with current gen hw. It would also be interesting to see how they might leverage some of the Intel SPR accelerators for their transcoding. Between the DDR5, PCIe 5.0, these cx-7 nics and the rest of this generation hw, they should see a big improvement.

Being Netflix, I would assume they have already been down this road and might even be looking at pci 6.0 and the next gen Nvidia 800G NICs!

It surprises me that these need to be manually switched between Infiniband and Ethernet. This seems to work out of the box for my very old Mellanox ConnectX-3 VPI, depending on the port/switch it’s connected to.

@nils I think this is an indication of early drivers. I used to have to set that on my ConnectX-3 cards (on Debian 9 systems) a few years ago but as of late the drivers have detected it automatically.

I’m still unaware of the usage cases for a card this fast. I can understand needing 400GbE as a trunk between switches, but I am not aware of usage cases that can need that much network traffic from a single CPU. Can someone enlighten me?

Do you have a part number / supplier for the QSFP-DD to flat-top OSFP cable? I tried three vendors who only had access to the Fin-top OSFP connector.

can you share the script that you used for those tests?