Software Setup NDR InfiniBand vs 400GbE

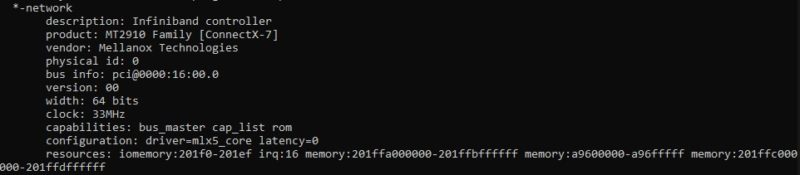

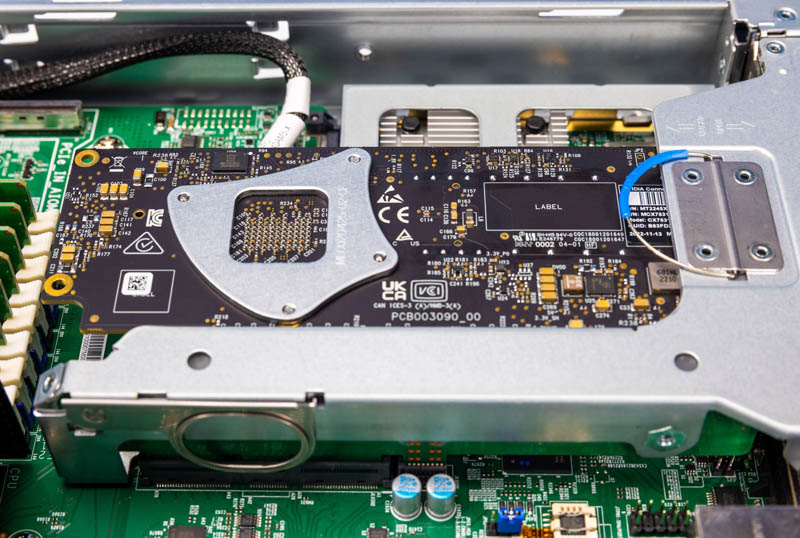

In parallel with the physical installation, we were still working on the servers on the software side. Luckily, that was the easy part. The Supermicro servers saw the MT2910 family ConnectX-7 adapters.

After a quick OFED installation and reboot, we were ready.

Since we ran these both in Ethernet mode through the Broadcom Tomahawk 4 switch and in InfiniBand mode directly, we also had to change link types.

The process is straightforward and similar to Changing Mellanox ConnectX VPI Ports to Ethernet or InfiniBand in Linux.

Here is the basic process:

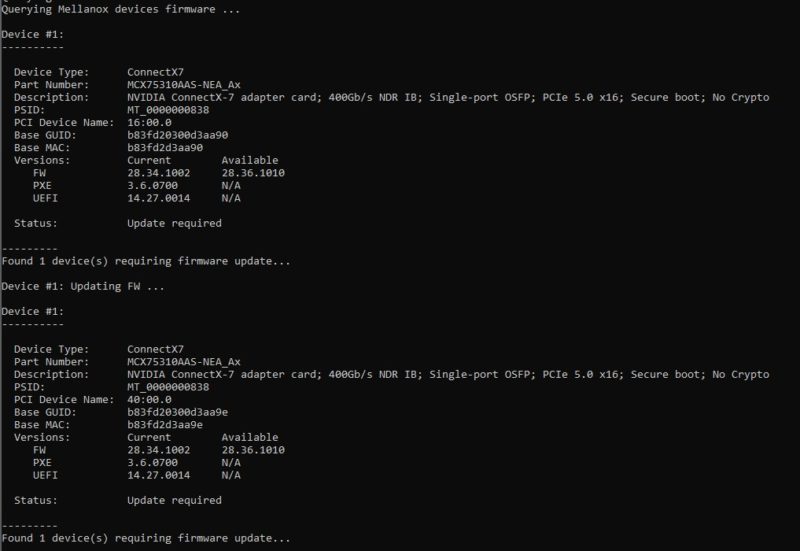

0. Install OFED and Update Firmware

This is a step we have to do just to get the cards working properly.

The process is fairly simple. Download the version you need for your OS, then install the drivers using the script in the download. The standard installer also updates the firmware on the cards.
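As a quick sanity check after the install, one option is to confirm that the command-line tools used later in this walkthrough are actually on the PATH. This is only a minimal sketch: `missing_tools` is a hypothetical helper, and the tool names in the example (`ofed_info`, `mlxconfig`, `ibstat`) are assumed from a typical OFED install and may differ by version or distribution.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every given command that is
# NOT found on the PATH. Prints nothing if all of them are present.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Example use after the OFED install and reboot (tool names are assumptions):
#   missing_tools ofed_info mlxconfig ibstat
```

If the helper prints nothing, the tools were found. On systems where it is present, `ofed_info -s` reports the installed OFED version.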

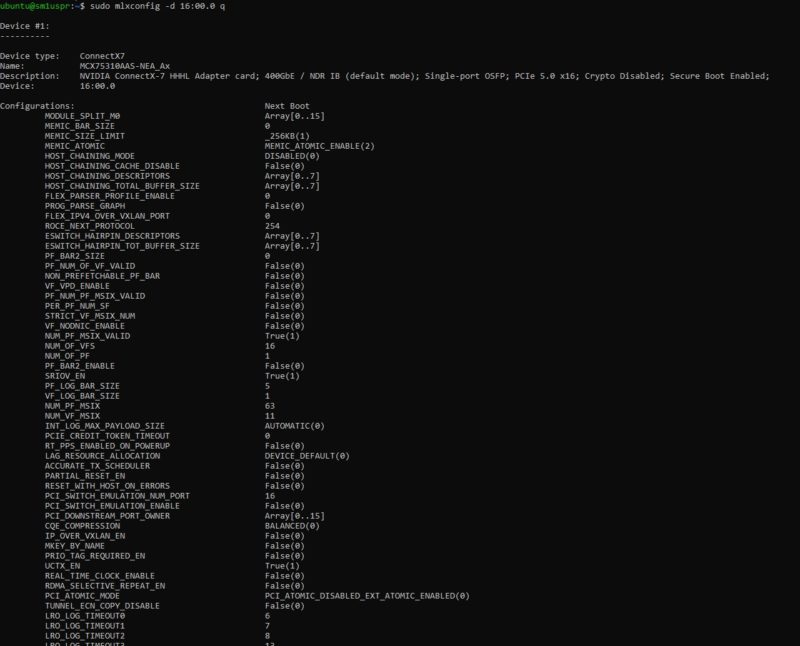

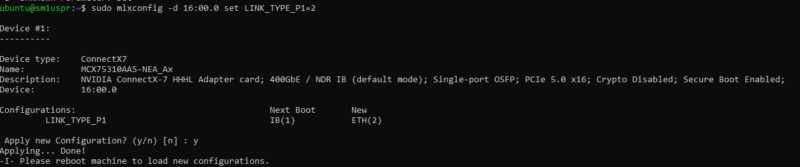

Once we reboot the server after the OFED installation, we can see the NVIDIA ConnectX-7 MCX75310AAS-NEAT has 400GbE and NDR IB. The NDR IB is noted as the default mode.

If we want Ethernet, we have to change it. Doing so takes three simple steps:

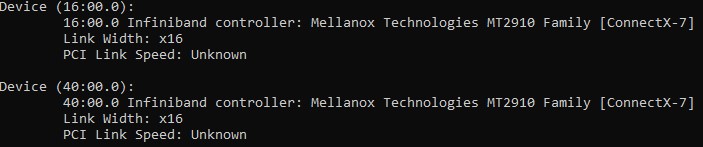

1. Find the ConnectX-7 device

Especially if you have other devices in your system, you will need to locate the proper device to change. If you just have one card, it is very easy to do so.

lspci | grep Mellanox

16:00.0 Infiniband controller: Mellanox Technologies MT2910 Family [ConnectX-7]

Here we now know that our device is at 16:00.0. (Yes, we did this earlier, as you can see from the screenshot above.)
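If there are several devices in the system, pulling out just the PCI address can save some squinting. The following is a minimal sketch, not part of our original process: `find_connectx` is a hypothetical helper that filters `lspci`-style text for Mellanox ConnectX lines and prints the first field.

```shell
#!/bin/sh
# Hypothetical helper: read lspci-style text on standard input and print
# the PCI address (first field) of every Mellanox ConnectX device line.
find_connectx() {
    grep -i 'Mellanox' | grep -i 'ConnectX' | awk '{ print $1 }'
}

# Typical use on a live system (requires pciutils):
#   lspci | find_connectx
```

On our test system this would print 16:00.0. An alternative that skips the text matching is `lspci -d 15b3:`, which filters by the Mellanox PCI vendor ID.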

2. Use mlxconfig to change the ConnectX-7 device to Ethernet from NDR InfiniBand.

Next, we will use the PCI address we just found to change the link type from InfiniBand.

sudo mlxconfig -d 16:00.0 set LINK_TYPE_P1=2

Here LINK_TYPE_P1=2 sets P1 (Port 1) to 2 (Ethernet). By default, LINK_TYPE_P1=1, meaning P1 (Port 1) is set to 1 (NDR InfiniBand). If you need to go back, just reverse the process by setting the value back to 1.
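Before and after changing the setting, it can help to check what the card currently reports. Here is a minimal sketch built around `mlxconfig -d 16:00.0 query`. The two helper functions are hypothetical, and the parser assumes the query output shows the port setting on a line shaped like `LINK_TYPE_P1   IB(1)`; that layout is an assumption and may differ between tool versions.

```shell
#!/bin/sh
# Hypothetical helper: translate a LINK_TYPE_P1 value into a readable name.
# Only the two values discussed above are handled.
link_type_name() {
    case "$1" in
        1) echo "InfiniBand" ;;
        2) echo "Ethernet" ;;
        *) echo "unknown"; return 1 ;;
    esac
}

# Hypothetical helper: read mlxconfig query output on standard input and
# print the numeric LINK_TYPE_P1 value. Assumes a line such as
# "LINK_TYPE_P1    IB(1)" (the exact output layout is an assumption).
current_link_type() {
    sed -n 's/.*LINK_TYPE_P1[[:space:]]*[A-Za-z_]*(\([0-9]*\)).*/\1/p'
}

# Typical use on a live system:
#   sudo mlxconfig -d 16:00.0 query | current_link_type
#   link_type_name 2
# Going back to InfiniBand is the same set command with the value 1:
#   sudo mlxconfig -d 16:00.0 set LINK_TYPE_P1=1
```

As with the change to Ethernet, a new value only takes effect after the reboot in the next step.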

3. Reboot system

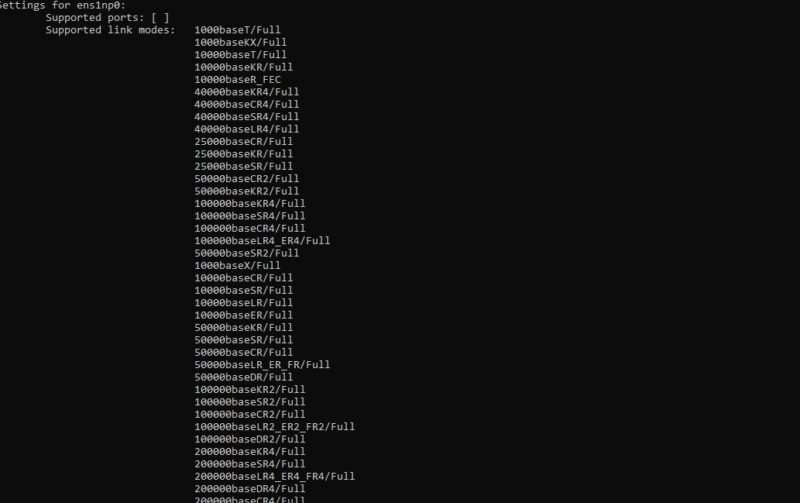

After a quick reboot, we now have a ConnectX-7 Ethernet adapter.
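One way to confirm the change without relying on `lspci` naming is to read the link layer the kernel reports for each RDMA device port in sysfs. This is a minimal sketch, assuming the driver exposes the usual `/sys/class/infiniband/<device>/ports/<port>/link_layer` files; `show_link_layers` is a hypothetical helper, and the optional argument exists only so the function can be pointed at a different directory.

```shell
#!/bin/sh
# Hypothetical helper: print "<device> port <n>: <link layer>" for every
# RDMA device port found under the given sysfs root
# (defaults to /sys/class/infiniband).
show_link_layers() {
    root="${1:-/sys/class/infiniband}"
    for f in "$root"/*/ports/*/link_layer; do
        # Skip the unexpanded pattern when no device is present.
        [ -r "$f" ] || continue
        port_dir=$(dirname "$f")
        dev_dir=$(dirname "$(dirname "$port_dir")")
        echo "$(basename "$dev_dir") port $(basename "$port_dir"): $(cat "$f")"
    done
}

# Typical use on a live system:
#   show_link_layers
```

Once the port is in Ethernet mode, running `ethtool` against the card's network interface (the interface name varies by system) lists the supported link modes.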

It is a bit funny to see that this expensive 400Gbps adapter still supports 1GbE speeds.

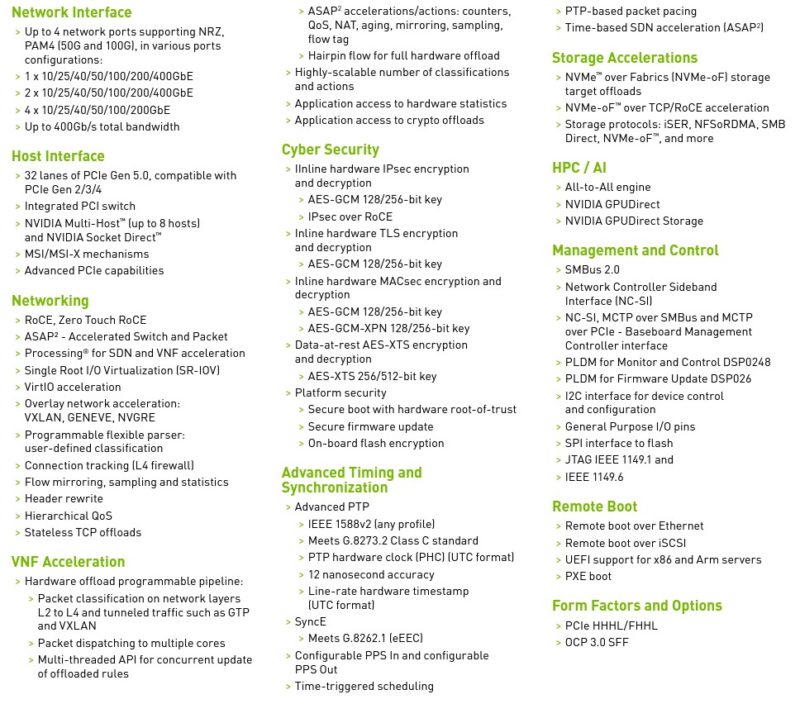

This is the basic setup. The NVIDIA ConnectX-7 cards have large feature sets these days:

We did not get a chance to really dive into these, but many items on that list likely merit individual articles of their own.

Performance

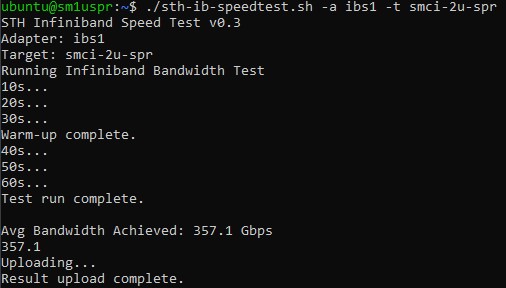

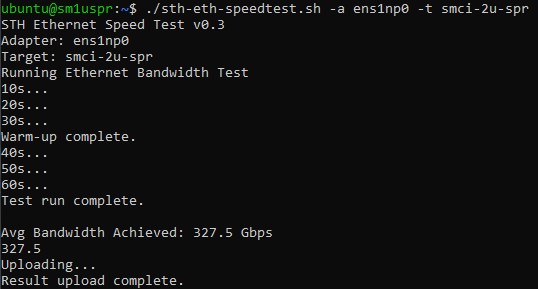

Between photographing everything (and grabbing B-roll as well), a phone-a-friend call to get working cables, and more, we did not have a lot of time to play with the cards. Still, we got fairly awesome speeds.

There is certainly more performance available. We landed in the 300Gbps to 400Gbps range on both InfiniBand and Ethernet. The Ethernet side took a bit of help to get to 400GbE speeds through the switch since it initially linked up at 200GbE. Still, we did very little by way of performance tuning.

These speeds are within reach of 400Gbps, and the time it took to get something well over 3x the speed of the 100Gbps adapters we are accustomed to was surprisingly short.

One thing we should point out here is that offloads at 400GbE speeds are very important. At 25GbE and 100GbE speeds, we saw things like DPUs arrive to offload common networking tasks from CPUs. Modern CPU cores have gotten ~20-40% faster over the past three years while networking bandwidth has increased from 100GbE to 400GbE. Now things like RDMA offloads, OVS/checksum offloads, and more are critical to keep as much of this work off the CPUs as possible. That is also why NVIDIA’s former Mellanox division is one of the few companies shipping 400Gbps adapters today.
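To put rough numbers on that gap, here is a back-of-the-envelope calculation using only the figures above. It assumes, as a simplification, that the CPU work needed for networking scales linearly with bandwidth when nothing is offloaded:

```latex
\[
\frac{\text{bandwidth growth}}{\text{CPU core growth}}
= \frac{400/100}{1.2\ \text{to}\ 1.4}
= \frac{4}{1.4}\ \text{to}\ \frac{4}{1.2}
\approx 2.9\times\ \text{to}\ 3.3\times
\]
```

Under that assumption, each core would have roughly three times as much networking work to do per unit of its own performance as it did in the 100GbE generation.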

Final Words (For Now)

To be frank, we ran out of time. To give some sense here, we had something like $2000 of cables, four $500 “cheap” optics, a $55,000 switch, two cards that are in the $1800+ range, $50,000 of servers, and two phone-a-friend calls for assistance. Over $100K of hardware and a few weeks later, everything worked and we got silly speeds from the ConnectX-7 cards.

Our sense is that we would be considered “early adopters” of this technology. We had to get the cards back to PNY and the OSFP bits back to the gracious STH reader who loaned them. One thing is for certain: this is one of those areas where we want to do more in the future, so we need to get a few of these cards for the lab along with the right cables and optics.

Although NVIDIA is seemingly short on supply for these cards (we have had a request in for some time, along with one for BlueField-3), we found out that PNY sells these cards, and they were able to loan us two for a few weeks to work through this. Our key lesson learned was that 400GbE right now feels more like something to buy as a complete solution than something to piece together as we did.

Next in this 400G series, we are going to focus on the networking side and get to the 64-port 400GbE switch that we used here. Stay tuned for that in the next week or two as Patrick’s video is still being edited.

This just puts into perspective the challenges that Netflix had getting 400Gb/s per server in 2020. I would love to see how current CPU and 400Gbe NICs can accelerate this along with PCIe 5 and DDR5. https://people.freebsd.org/~gallatin/talks/euro2021.pdf

The cx-7 nics that are osfp based require a “flat top” connector vs a “fin top” connector that is more commonly found.

Were you able to use a DAC to connect the QSFP-DD switch to the OSFP nic? If so, how was that possible due to the 50G lanes that QSFP-DD uses vs the 100G lanes that these nics require?

I was told by the Nvidia folks that a DAC would not work in this scenario. We were told to use a combination of transceivers and fiber to connect QSFP-DD to OSFP.

I have a couple of threads in the forum where we discussed this.

I was able to connect 2 of those same 400G nics back2back using an OSFP DAC (both sides being flat top). But we were still not able to find a DAC solution for QSFP-DD switch to OSFP nic.

Any pointers would be appreciated!

That’s terrible. I’ve only seen the fin top OSFP’s. Bad design NVIDIA.

jpmomo I’m reading that as they used DACs and optics and they’re showing QSFP-DD optics in the switch. They don’t have a Quantum-2 switch so they’re probably DAC’n for the IB and fiber for the Eth.

Nate77…Thanks for the observation. I am using DAC in the context of a single cable with the transceivers attached at each end. Contrast this with the connection that is a single QSFP-DD transceiver on the switch end….then a MPO-12/APC fiber….then an OSFP Flat-top 400G transceiver.

The critical part in the non-DAC connection (transceiver….fiber…transceiver) is the transceiver Gearbox IC on the first transceiver (QSFP-DD side).

This will do the necessary conversion from 2x50G electrical to 100G optical (both using PAM4.)

Regarding the issue of Flat-top vs Fin-top, the explanation that I was given was to maintain the standard PCI slot width (from the PCI slot cover perspective). They take care of the cooling on the NIC itself.

DiHydro…I agree that this should be a lot easier for Netflix to boost their performance with current gen hw. It would also be interesting to see how they might leverage some of the Intel SPR accelerators for their transcoding. Between the DDR5, PCIe 5.0, these cx-7 nics and the rest of this generation hw, they should see a big improvement.

Being Netflix, I would assume they have already been down this road and might even be looking at pci 6.0 and the next gen Nvidia 800G NICs!

It surprises me that these need to be manually switched between Infiniband and Ethernet. This seems to work out of the box for my very old Mellanox ConnectX-3 VPI, depending on the port/switch it’s connected to.

@nils I think this is an indication of early drivers. I used to have to set that on my ConnectX-3 cards (on Debian 9 systems) a few years ago but as of late the drivers have detected it automatically.

I’m still unaware of the use cases for a card this fast. I can understand needing 400GbE as a trunk between switches, but I am not aware of use cases that need that much network traffic from a single CPU. Can someone enlighten me?

Do you have a part number / supplier for the QSFP-DD to flat-top OSFP cable? I tried three vendors who only had access to the Fin-top OSFP connector.

can you share the script that you used for those tests?