At ISC 2021, NVIDIA announced the A100 80GB PCIe card. This was expected since we previously saw the NVIDIA A100 40GB PCIe add-in card and the NVIDIA A100 80GB (SXM) cards. An 80GB PCIe version was the logical next step.

NVIDIA A100 80GB PCIe Add-in Card Launched

Although we have covered the NVIDIA A100 often on STH, the key to this announcement is that the new model will include 80GB of HBM2E memory. NVIDIA says this is good for a 25% uplift in memory bandwidth over the 40GB model.

Something that NVIDIA mentioned on the call is that the A100 80GB PCIe is a 300W GPU. [Update for clarity.] The 40GB model is a 250W GPU since that is what most PCIe cards are rated for. The 300W rating will be challenging because many servers are designed for multiple 250W devices, but not necessarily 300W devices. The PCIe form factor is no longer able to keep up with higher-end accelerator heat dissipation and power delivery needs. As a result, this card is being marketed heavily for its multi-instance GPU (MIG) capabilities. NVIDIA markets similar stats to the SXM models, but SXM modules are now hitting up to 500W and usually need to be liquid-cooled at those levels.
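To put the 250W versus 300W point in rough numbers, here is a minimal sketch. The 8-slot chassis and the `cards_within_budget` helper are hypothetical and are not taken from any specific server. It also only looks at the aggregate accelerator power budget, while real systems are limited by per-slot power cabling and cooling as well.

```python
def cards_within_budget(slots: int, designed_watts: int, card_watts: int) -> int:
    """Return how many cards fit in a chassis power budget.

    Assumption: the chassis was designed for `slots` accelerators at
    `designed_watts` each, and only the total power budget matters.
    """
    budget_watts = slots * designed_watts
    # Never report more cards than there are physical slots.
    return min(slots, budget_watts // card_watts)


# Hypothetical chassis designed for 8x 250W PCIe accelerators.
for card_watts in (250, 300):
    count = cards_within_budget(slots=8, designed_watts=250, card_watts=card_watts)
    print(f"{card_watts}W cards that fit: {count}")
```

In this hypothetical case, a chassis that holds eight 250W cards would only have the power budget for six 300W cards, which is why the higher rating matters for existing server designs.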

Final Words

Perhaps the biggest question that folks will want to answer is what the difference is between the 80GB SXM and PCIe versions. There are really two major aspects that one needs to look at. First, the PCIe version does not have the same power capacity due to the limited form factor. That naturally limits the performance of the cards. Second, there is cost. Although NVIDIA does not discuss street pricing in these types of announcements, what we have heard with the 40GB models is that at a cluster level, costs are usually ~5-15% more for SXM clusters than for PCIe clusters. This is because so much of the cost is not just the cards, but also the network fabric and other aspects of running GPU clusters.

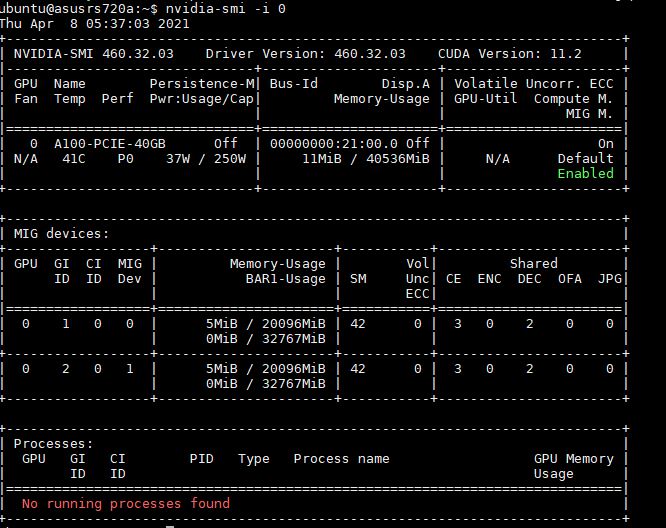

Where we instead see the NVIDIA A100 80GB PCIe excelling is with MIG, which presents multiple GPU instances from a single card. Here is an example using the 40GB PCIe cards in our ASUS RS720A-E11-RS24U Review:

With MIG supporting seven GPU instances per A100 and 80GB of total capacity, one can build seven higher-memory instances for tasks such as AI inferencing. While the NVIDIA T4 may be lower power, as we covered with MLPerf Inference v1.0, NVIDIA can get the performance of 7x T4s in a single A100.
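As a rough illustration of what the larger capacity means per instance, here is a minimal sketch. It assumes, beyond what is stated above, that MIG divides the A100's memory into eight equal slices and that each of the seven smallest instances gets one slice. The `smallest_instance_gb` helper is a hypothetical name used only for this example.

```python
# Assumption: memory is carved into 8 equal slices, and the smallest
# MIG instance receives exactly one of them.
MEMORY_SLICES = 8
# From the article: MIG supports seven GPU instances per A100.
MAX_INSTANCES = 7


def smallest_instance_gb(total_gb: int) -> float:
    """Memory per instance when running the maximum number of instances."""
    return total_gb / MEMORY_SLICES


for total_gb in (40, 80):
    per_instance = smallest_instance_gb(total_gb)
    print(f"A100 {total_gb}GB: {MAX_INSTANCES} instances x {per_instance:g}GB each")
```

Under that assumption, the 80GB card doubles the memory available to each of the seven instances compared with the 40GB card, which is the practical benefit for memory-hungry inference models.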