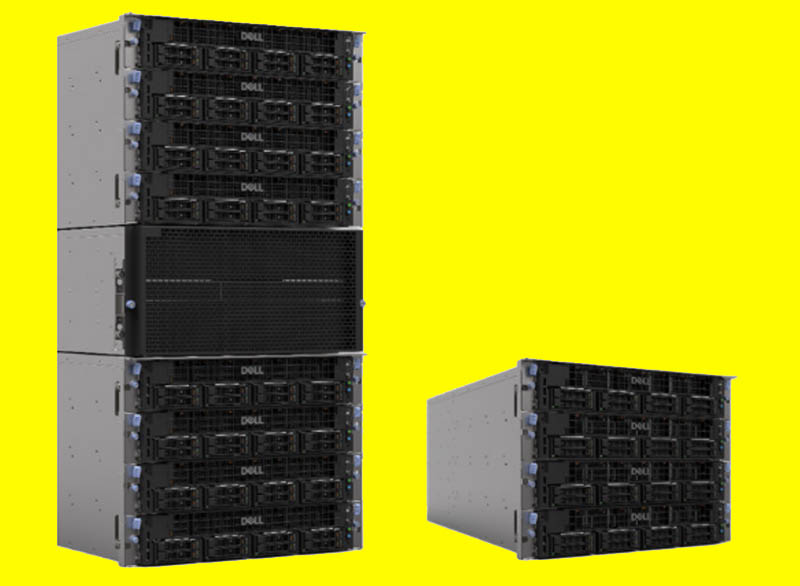

With about a month to go until the new year, and less than a month and a half until the new 4th Gen Intel Xeon Scalable (codenamed “Sapphire Rapids”) launch, Dell has a new scale-up server platform, dubbed the Dell S5000 series. As a quick note, this is not the Dell Networking S5000 series, from which we have reviewed products like the Dell S5248F-ON and S5148F-ON (and found the strange American MegatrAnds stickers in.) The S5000 series scales from 6 to 16 processors, but has an interesting feature: it is using 2019-era 2nd Gen Xeon Scalable “Cascade Lake” processors, not the 2020-era 3rd Gen “Cooper Lake” or the 4th Gen “Sapphire Rapids” launching in a few weeks on 2023-01-10.

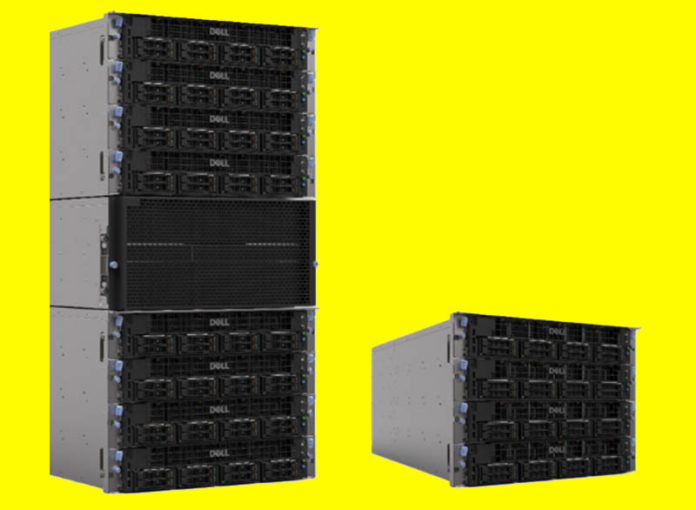

New Dell S5416 and Dell S5408 Servers Launched

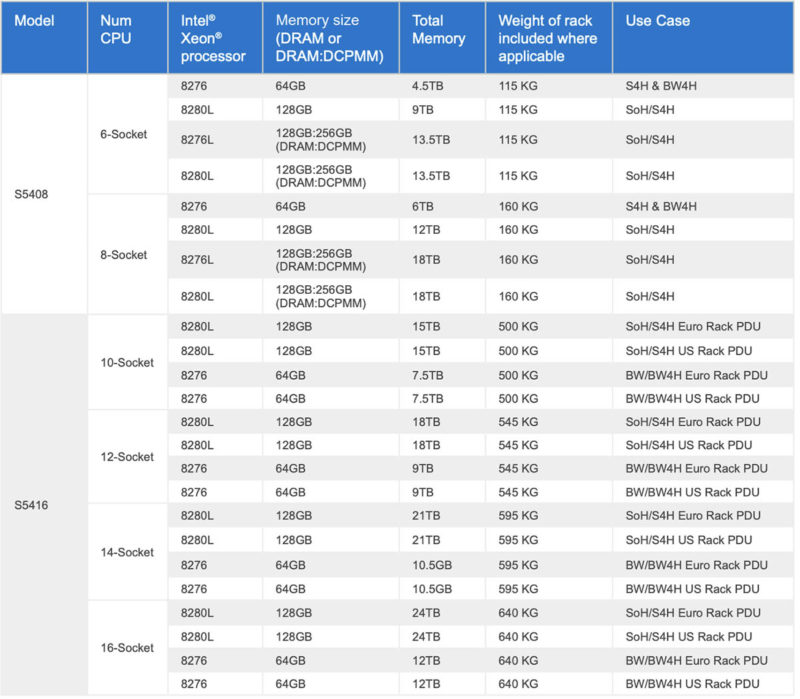

There are two main configurations. The Dell S5408 is the smaller 9U solution, offering either 6-socket or 8-socket configurations. That means we can get up to 96 DDR4 memory slots, and the solution offers Optane DCPMM support. We did a piece on the Glorious Complexity of Intel Optane DIMMs. Starting in 2023, we expect Intel to launch Optane with Sapphire Rapids, but we expect that class of storage to move to the CXL bus.

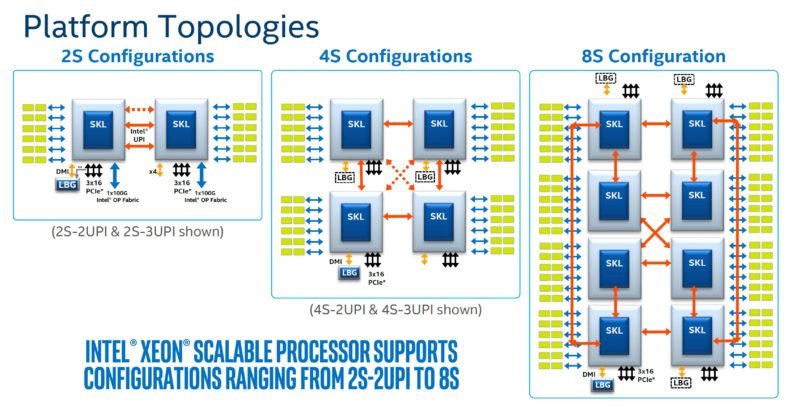

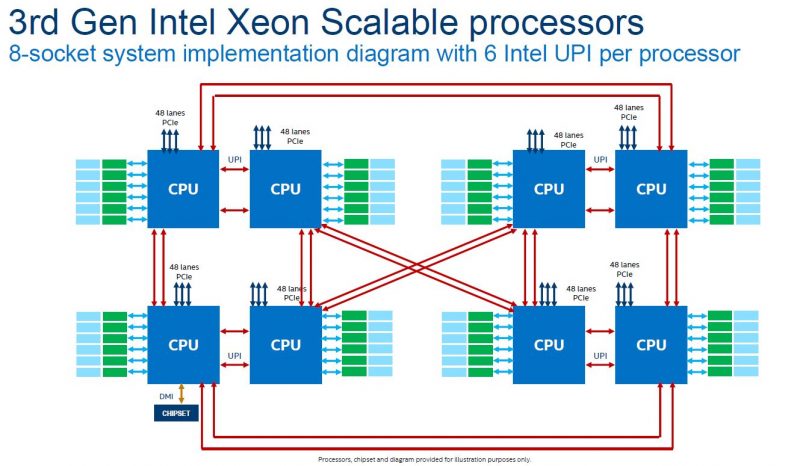

While Intel’s chips with 3x UPI links allow direct connections between each CPU in a 4-CPU configuration, Intel also supports a glue-less 8-socket topology. Here are the common topologies for Skylake (1st Generation Intel Xeon Scalable) and Cascade Lake (2nd Generation Intel Xeon Scalable):
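As a rough illustration of why three UPI links are enough for a fully connected 4-socket system but not for 8 sockets, here is a small Python sketch. The 8-socket wiring in it (a ring plus a link to the opposite socket) is a hypothetical example chosen for simplicity, not Intel's documented layout, and the function names are our own.

```python
from collections import deque


def full_mesh_possible(sockets: int, links_per_cpu: int) -> bool:
    """A full mesh needs one link from each CPU to every other CPU."""
    return links_per_cpu >= sockets - 1


def max_hops(adjacency: dict[int, list[int]]) -> int:
    """Largest shortest-path hop count between any two sockets (BFS from each)."""
    worst = 0
    for start in adjacency:
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for peer in adjacency[node]:
                if peer not in dist:
                    dist[peer] = dist[node] + 1
                    queue.append(peer)
        worst = max(worst, max(dist.values()))
    return worst


# Hypothetical wiring (an assumption): each socket links to its two ring
# neighbours and to the socket directly opposite, using exactly 3 links.
hypothetical_8s = {i: [(i - 1) % 8, (i + 1) % 8, (i + 4) % 8] for i in range(8)}

print(full_mesh_possible(4, 3))   # 4 sockets: 3 peers each, 3 links each
print(full_mesh_possible(8, 3))   # 8 sockets: 7 peers each, only 3 links
print(max_hops(hypothetical_8s))  # worst-case hops in this example wiring
```

With only three links and seven peers, some socket pairs in any glue-less 8-socket design have to communicate through an intermediate CPU, which is where the extra NUMA hops come from.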

The Dell S5408 utilizes the Cascade Lake 2nd generation Xeon Scalable in the 8S configuration. One can also get up to 12 DDR4 slots per CPU, for 96 total in the 8S configuration. Dell supports up to 12TB of RAM or 18TB when Optane DCPMM is added.
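The slot and capacity figures above can be sanity-checked with a few lines of Python. This is only a sketch: the function names are ours, we assume 1TB = 1024GB, and the DIMM size it prints is what the quoted numbers imply rather than something Dell states.

```python
def total_dimm_slots(sockets: int, slots_per_cpu: int = 12) -> int:
    """Total DDR4 slots for a given socket count."""
    return sockets * slots_per_cpu


def implied_dimm_size_gb(total_tb: float, slots: int) -> float:
    """DIMM size needed to reach total_tb when every slot is populated."""
    return total_tb * 1024 / slots


slots_8s = total_dimm_slots(8)
print(slots_8s)                            # slots in the 8S configuration
print(implied_dimm_size_gb(12, slots_8s))  # GB per DIMM implied by 12TB
```

Running the same functions for 16 sockets and 24TB gives the same per-DIMM size, assuming the larger system also has 12 slots per CPU, which is not stated.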

To go bigger, the larger configuration is the Dell S5416 with up to 24TB of RAM support. Interestingly, there is no DCPMM on these offerings. This is a 21U configuration scaling up to 16 sockets. It utilizes the eXternal Node Controller (XNC) technology to build a larger topology. Something that is quite interesting here is that Dell is not using the 3rd Generation Intel Xeon Scalable “Cooper Lake” parts with higher memory speeds, bfloat16 support, and also twice the number of processor-to-processor UPI links.

The 2nd Generation Intel Xeon Scalable is likely going to have a longer support cycle as Cooper Lake was largely made for Facebook/Meta. Still, Cooper Lake is a better 4-socket and 8-socket solution. For the chips launching in a few weeks, we are not yet allowed to discuss more than what we covered in Intel Xeon Sapphire Rapids to Scale to 4 and 8 Sockets.

Here are the supported configurations from Dell:

This is the type of market where having proper hardware/software configurations and support is extremely important, as these are often extremely high-value systems for the organizations that run them.

Final Words

The solution is not a general-purpose server. This is Dell’s scale-up solution for SAP HANA TDI. It is quite interesting that Dell is launching this so close to when it can get 2x the cores, more memory capacity, and >2x bandwidth with Sapphire Rapids. We have already looked at an 8x Sapphire Rapids system that you may see briefly at the launch on STH, so they are coming, and soon.

One thing to keep in mind is that although processors and platforms roughly two generations newer are launching in this space in the next month or so, this is a generally slow-moving market because of the cost of these systems and the engineering, validation, and certification work that happens before they can be used.

It is always fun to see these large x86 systems so we figured we would cover this one.

If Intel supports glueless operation up to 8 sockets, what’s the glue arrangement for the 16 socket system? As best I can gather from Intel’s documentation the ‘XNC interface’ is a thing they provide on certain Xeons; but they refer to the actual external node controller as ‘OEM developed’.

Are those sufficiently expensive and specialized that the market doesn’t support that many independent variations and OEMs mostly buy from the same supplier or small list of suppliers; or are the margins on this sort of hardware high enough to maintain in-house implementations of something like this?

I think it’s OEM’d from the Atos BullSequana S server

This thing kind of looks like hot garbage

SAP HANA is equally so, guess it makes sense

This is the only application I really see CXL DRAM expansion taking off in the next 2 years.

Everything else will probably take a lil longer for optimizing for latency. Having to hop two or three sockets to get to memory might have even bigger latency than CXL so for these DB servers with lots of RAM that will have an immediate impact.

On the other hand: The only CXL controller I have seen is the Montage expander (in Samsung and SK Hynix CXL products) but it does not appear to be compatible with 3DS or FB memory. One would need a ton of those to replace a server like this (DRAM wise).

I would love to know more about this class of server hardware, sadly there is very little information out there publicly.

From a computational standpoint, increasing socket count is how Intel will have to compete with AMD’s lineup. The back-to-back delays of Ice Lake-SP and Sapphire Rapids have pretty much handed AMD performance leadership in this sector. It is just boggling that Dell is leveraging Cascade Lake here. I presume this will make sense in terms of cost, especially when licensing is involved.

@fuzzyfuzzyfungus The external controller is developed with the rare chipset license. The major player here used to be SGI, which developed their NUMAlink chip for older Xeons that could scale up to 256 sockets. Both HPE and Dell used to rebrand SGI systems for their large socket systems but bundle in things like their management platform to be consistent with their ecosystems. This ended when HPE outright purchased SGI and brought that hardware fully under the HP SuperDome line. The limit is now 32 sockets, but that is more of an effect of memory addressing (48 TB) than socket scaling. Sapphire Rapids brings an increase in physical address space, which makes scaling beyond 32 sockets once again feasible for their use-cases.

@Lasertoe On a Genoa server with 4 DIMM CXL expanders, a 32 TB memory pool should be possible using 256 GB modules. 48 DIMM slots from Genoa (2 sockets, 2 DIMM per channel) and then 20 memory expanders with 4 DIMM slots each. The catch is that there wouldn’t be any spare IO for networking or other devices. In terms of latency, it may not be as dire as you think if the memory expander supports multihosting (4 lane link to each CPU socket) which would keep everything either local or one hop away. Genoa with 192 cores would give a 16 socket, 448 core Cascade Lake system a run for its money in terms of performance.
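As a quick check on the arithmetic in the comment above, here is a small Python sketch. The constant names are ours, the 12 memory channels per Genoa socket is what the quoted 48-slot figure implies at 2 DIMMs per channel, and the 4-DIMM expander is the hypothetical device the comment describes rather than a specific product.

```python
GENOA_SOCKETS = 2
CHANNELS_PER_SOCKET = 12   # implied by 48 slots across 2 sockets at 2 DIMMs per channel
DIMMS_PER_CHANNEL = 2
EXPANDERS = 20             # hypothetical 4-DIMM CXL expanders from the comment
DIMMS_PER_EXPANDER = 4
MODULE_GB = 256

# DIMM slots attached directly to the CPUs versus behind CXL expanders.
native_dimms = GENOA_SOCKETS * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL
expander_dimms = EXPANDERS * DIMMS_PER_EXPANDER
total_dimms = native_dimms + expander_dimms

# Total pool size, assuming 1 TB = 1024 GB.
pool_tb = total_dimms * MODULE_GB / 1024

print(native_dimms, expander_dimms, total_dimms)
print(pool_tb)
```

In this sketch the CPU-attached slots account for 48 of the 128 modules, so most of the pool would sit behind CXL.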

Ice Lake-SP is 2S only. Genoa is 2S only. So they’re not going to be used for this application.

This is up to 448 cores in 16S. Sapphire is 480C in 8S. So you’d think 960C in a 16 config.

This is also big on memory capacity. So CXL adding even 512GB per device. 4 devices per CPU for 2TB per socket, then 8 or 16 is a lot. You’re already dealing with so many NUMA hops anyway.

@Milk: SAP HANA is a very good DB. Unlike Oracle it isn’t made of glass and breaks when you look at it crosseyed.

@Kevin G: IIRC from talking to my SAP HANA DBAs, you license the DB based on total RAM. The calculation for the RAM given to a PRD DB is that the DB gets every MB of RAM for each socket needed. For example, if your /hana/data volume is 500GB you need at least 500GB RAM. If you have a dual socket server, say 16c/32t per socket, and each socket has 384GB RAM, then you can only run one DB on the box and the DB gets all 32c/64t and 768GB RAM.

Overall I feel this comes down to validation. Most companies are virtualizing their HANA appliances, whereas 7 years ago there were still a lot of standalone appliances. The most recent time I was looking into purchasing SAP HANA certified hardware for my data center, Ice Lake had been out for 6 months at the time. It was already approved for use as a standalone appliance since D1, however, it took more than a year for Ice Lake to get approval on VMware. Therefore it might be months after SPR is released until it gets VMware certification. That means you need to go with older CPUs for something like this.

@Jeremy: Yes, in your case you might need “older” systems but not this old. Cooper Lake is supported by VMware vSphere.

+ For the biggest HANA installations (and that’s what these servers are usually for) companies probably choose standalone because they have to pay millions of dollars per year for a >2TB Hana license so it doesn’t make too much sense trying to save money by virtualizing. Even AWS High Memory instances are offered as bare metal with full 8 sockets but different RAM configurations.

I had to run and look up what “SAP HANA Tailored Data Center Integration” was. It looks like the choice of Cascade Lake processors and memory is based on what SAP currently has certified with their database – HANA Appliance GEN 6, 2nd Gen Intel Xeon Scalable processors & Intel Optane DC Persistent Memory, virtual PMEM with POWER9.

SAP HANA in-memory DB only uses Intel and IBM POWER as it has direct access to the on-chip MMU. The HANA architecture determines CPU support.

I also wanted to add that with SAP, for each piece of kit in HANA, you will only get support after it passes Key Performance Indicators.

@Lasertoe Remember there is very little difference between Cooper Lake and Cascade Lake. Most likely the reason for using Cascade Lake is that Cooper Lake isn’t certified for Optane RAM in VMware. Despite what you think, most companies that I know of that use HANA on physical appliances are taking those appliances virtual as they upgrade. I work for a consulting company that specializes in SAP Basis & HANA consulting. I run a data center that hosts multiple DEV/Demo HANA instances. The benefits of going virtual outweigh any of the disadvantages.