The Intel Xeon Gold 6134 is a case study in how specialized Intel's Xeon Scalable SKU stack is. It is optimized for applications with per-core licensing models. The Intel Xeon Gold 6134 is one of the most expensive 8-core CPUs to date, but it has features that maximize the performance of each of those cores. For example, a standard Intel Xeon Scalable CPU has 1.375MB of L3 cache per core, while the Intel Xeon Gold 6134 has over 3MB of L3 cache per core. In addition, the base clock is 3.2GHz, so the chip runs at a high frequency even before turbo boost kicks in. These features let Intel extract more performance per core, in the hope that users who pay per-core licensing fees can minimize those license costs.

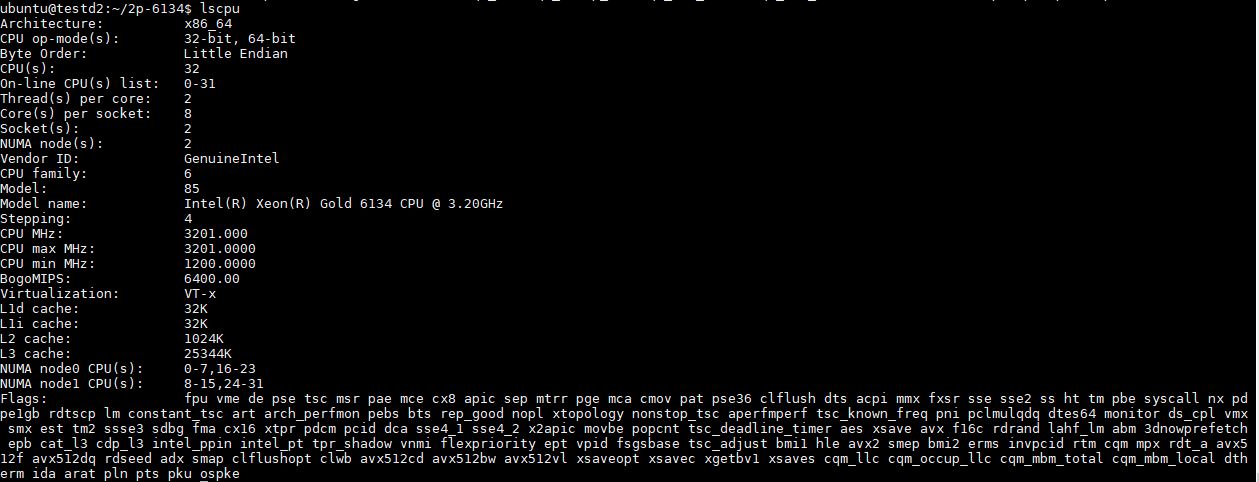

Key stats for the Intel Xeon Gold 6134: 8 cores / 16 threads, 3.2GHz base and 3.7GHz turbo, and 24.75MB of L3 cache. The CPU has a 130W TDP, a TDP level Intel has used for years. Intel's ARK page lists the full feature set.
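The cache-per-core claim follows directly from the quoted specs. This minimal Python sketch divides the total L3 by the core count and compares the result with the 1.375MB-per-core figure given for a standard Xeon Scalable SKU. The constant and function names are illustrative only.

```python
# L3 cache per core, using the figures quoted in this review.
GOLD_6134_L3_MB = 24.75          # total L3 cache on the Gold 6134
GOLD_6134_CORES = 8
STANDARD_L3_PER_CORE_MB = 1.375  # L3 per core on a standard Xeon Scalable SKU


def l3_per_core(total_l3_mb: float, cores: int) -> float:
    """Return the L3 cache available per core, in MB."""
    return total_l3_mb / cores


per_core_mb = l3_per_core(GOLD_6134_L3_MB, GOLD_6134_CORES)
ratio = per_core_mb / STANDARD_L3_PER_CORE_MB
# How many "standard" cores' worth of L3 the chip carries in total
equivalent_cores = GOLD_6134_L3_MB / STANDARD_L3_PER_CORE_MB

print(f"{per_core_mb:.3f} MB per core")               # 3.094 MB per core
print(f"{ratio:.2f}x a standard SKU")                 # 2.25x a standard SKU
print(f"{equivalent_cores:.0f} cores' worth of L3")   # 18 cores' worth of L3
```

In other words, 24.75MB is the amount of L3 that 18 standard cores would carry, spread across only 8 cores.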

Test Configuration

Here is our basic test configuration for single-socket Xeon Scalable systems:

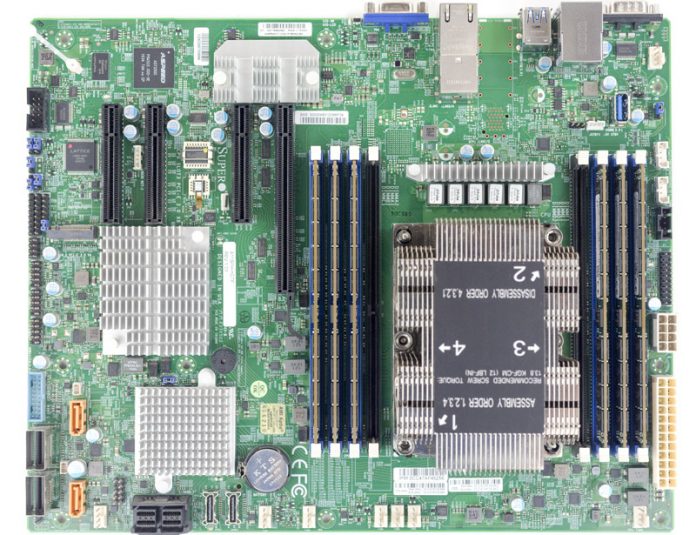

- Motherboard: Supermicro X11SPH-nCTF

- CPU: Intel Xeon Gold 6134

- RAM: 6x 16GB DDR4-2666 RDIMMs (Micron)

- SSD: Intel DC S3710 400GB

- SATADOM: Supermicro 32GB SATADOM
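The DIMM population above sets both memory capacity and theoretical peak bandwidth. The sketch below assumes one DIMM in each of the six DDR4 channels a Xeon Scalable socket provides. The board's exact slot population is not stated here. It also uses the nominal 2666 MT/s rate, so sustained real-world bandwidth will be lower than the figure it prints.

```python
# Capacity and theoretical peak bandwidth of the test system's memory.
# Assumption: one DIMM per channel across six DDR4 channels.
DIMMS = 6
DIMM_GB = 16
MTS = 2666               # DDR4-2666: ~2666 mega-transfers per second
BYTES_PER_TRANSFER = 8   # 64-bit data bus per channel

capacity_gb = DIMMS * DIMM_GB
# Peak = transfer rate x bus width x populated channels, in MB/s
peak_mb_s = MTS * BYTES_PER_TRANSFER * DIMMS

print(f"{capacity_gb} GB total")                          # 96 GB total
print(f"~{peak_mb_s / 1000:.1f} GB/s theoretical peak")   # ~128.0 GB/s theoretical peak
```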

The performance of this setup is excellent. If you want a single-socket step up from the Intel Xeon E3-1200 V6 line or the entry-level Xeon E SKUs, this is certainly a great way to go: you get more cores, more PCIe lanes, more memory capacity, and an expanded feature set. These chips are also dual-socket capable, which we expect will be the most common deployment scenario.

Before we get into our benchmarks, we want to note that our dataset is focused on pre-Spectre/Meltdown results at this point. Starting with our Ubuntu 18.04 generation of results, we will have comparison points for the new reality. The Spectre and Meltdown patches do hurt Intel's performance in many tests. At the same time, as of this writing, patches are still being worked on, and software is being tuned to deal with their impact. Given this, we are going to give the ecosystem some time to settle before publishing new numbers.

I don't get it. It seems that even the weakest EPYC CPU beats the 6134 on every single benchmark apart from AVX-512. Doesn't that make the 6134 “less competitive apart from a single use case”?

Funny how AMD is winning all the benchmarks hands down… by a wide margin. AMD is back in the game…

The author of this article is a clown.

These are specifically designed for running software with per-core licensing. The benchmarks are mostly shown on a whole-chip basis, but the metric you want to look at here is performance per core. In many of the microbenchmarks (e.g. c-ray), EPYC does very well. Larger application benchmarks (Linux Kernel Compile, for example) show that EPYC's per-core performance is nowhere near that of Intel's frequency-optimized parts.

For folks used to consumer-level or purely open-source software, EPYC may seem like a clear winner here. For those who pay for software licenses per core, EPYC can cost tens of thousands more per socket for the same amount of performance.

Thanks for the reply, Patrick.

Which benchmark in this review shows per-core performance? What exactly is the difference? It is not obvious from the tests in the review.

Take a look at the Linux Kernel Compile benchmark (which behaves more like an application than the microbenchmarks). As an example, divide the Gold 6134 numbers by 8 and the EPYC 7301/7351P numbers by 16. Intel is 60-70% faster per core on a loaded system.
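The per-core comparison described in that reply can be sketched in a few lines of Python. This assumes a throughput-style score where higher is better. A time-based result would need to be converted to a rate first, for example 1 / seconds. The function names are hypothetical, and the only numbers used are the 8-core count and the 60-70% figure from the reply.

```python
# Per-core normalization of whole-chip benchmark results.
# Assumes higher-is-better throughput scores; convert times to rates first.

def per_core(score: float, cores: int) -> float:
    """Spread a whole-chip score evenly across its physical cores."""
    return score / cores


def per_core_advantage(score_a: float, cores_a: int,
                       score_b: float, cores_b: int) -> float:
    """Ratio of chip A's per-core score to chip B's (1.6 means A is 60% faster per core)."""
    return per_core(score_a, cores_a) / per_core(score_b, cores_b)


def cores_to_match(fast_cores: int, advantage: float) -> float:
    """Cores of the slower chip needed to equal `fast_cores` cores of the faster one."""
    return fast_cores * advantage


# Apply the quoted 60-70% per-core advantage to 8 Gold 6134 cores
for adv in (1.6, 1.7):
    print(f"{adv:.1f}x -> {cores_to_match(8, adv):.1f} EPYC cores to match 8 Xeon cores")
# 1.6x -> 12.8 EPYC cores to match 8 Xeon cores
# 1.7x -> 13.6 EPYC cores to match 8 Xeon cores
```

If the 60-70% figure holds, a 16-core EPYC part can still exceed the 6134 in total throughput. However, it does so with twice as many cores to license, which is the point being made about whole-chip versus per-core results.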

Thanks, Patrick. Which compiler was used for this?

Also, the 7301 is about $850, the 7351 about $1,100, and the 6134 $2,200. It would be interesting to see a price-to-single-core-performance ratio for all of them, and also energy consumed per unit of single-core performance under load (I suspect the latter would also favor the Xeon).

GCC. But the answer to your question is not the price of the CPU; it is the price of the licenses. They are just different tools for different jobs.
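The point that license price, not CPU price, dominates can be illustrated with a simple per-socket cost model. This sketch uses the approximate CPU prices quoted in the thread ($2,200 for the Gold 6134, $850 for the EPYC 7301). It assumes every physical core in the socket must be licensed. The per-core fee is a hypothetical placeholder, not a real vendor price.

```python
# Per-socket cost including per-core software licenses.
# Assumption: every physical core in the socket must be licensed.
HYPOTHETICAL_FEE = 5_000.0  # USD per core; illustrative only, not a real price


def total_cost(cpu_price: float, cores: int, fee_per_core: float) -> float:
    """CPU price plus licenses for every core in the socket."""
    return cpu_price + cores * fee_per_core


def break_even_fee(price_a: float, cores_a: int,
                   price_b: float, cores_b: int) -> float:
    """Per-core fee at which two CPUs cost the same in total (requires cores_a != cores_b)."""
    return (price_a - price_b) / (cores_b - cores_a)


xeon = total_cost(2200, 8, HYPOTHETICAL_FEE)   # Gold 6134, ~$2,200
epyc = total_cost(850, 16, HYPOTHETICAL_FEE)   # EPYC 7301, ~$850
print(f"Gold 6134: ${xeon:,.0f}")   # Gold 6134: $42,200
print(f"EPYC 7301: ${epyc:,.0f}")   # EPYC 7301: $80,850
print(f"Break-even fee: ${break_even_fee(2200, 8, 850, 16):.2f} per core")
# Break-even fee: $168.75 per core
```

Under these assumptions, the 8-core part is cheaper per socket once per-core fees exceed roughly $169. This simple model ignores the per-core performance gap and running costs raised elsewhere in the thread.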

It depends on the scale. If the initial purchase cost plus running costs allow it, one could license 2 cores for nearly the price of 1. Was it GCC 8? It would be nice to see LLVM Clang 5 and Open64 results as well.