Intel today disclosed more of its network processor roadmap. Specifically, the Intel IPU (Infrastructure Processing Unit) is Intel's take on a processor for data center infrastructure functions. If the name IPU sounds confusing, that may be because it is confusingly similar to the Graphcore IPU and the Mellanox IPU line. Intel has also previously used IPU for its Image Processing Unit. While the company is not producing DPUs like the rest of the industry, it does have exotic solutions for hyper-scalers.

Intel IPU: Exotic Answer to the Industry DPU

Data centers are moving toward separating the infrastructure and application levels. AWS has been doing this for years, as have other hyper-scalers such as Microsoft. In effect, an infrastructure operator uses the network fabric to deliver scale-out resources, in a composable manner, to potentially untrusted third parties running applications in the data center. Here, in a great slide, Intel shows CPUs, GPUs, storage, AI/ML processors, and other XPU accelerators attached to its IPU and then made available over the network fabric.

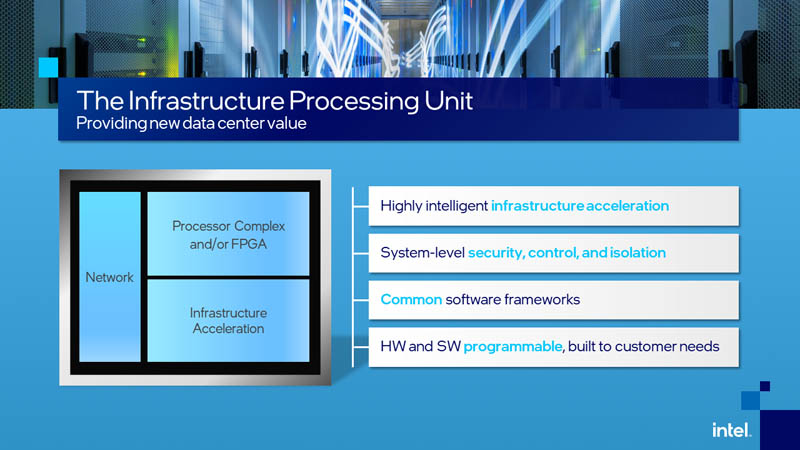

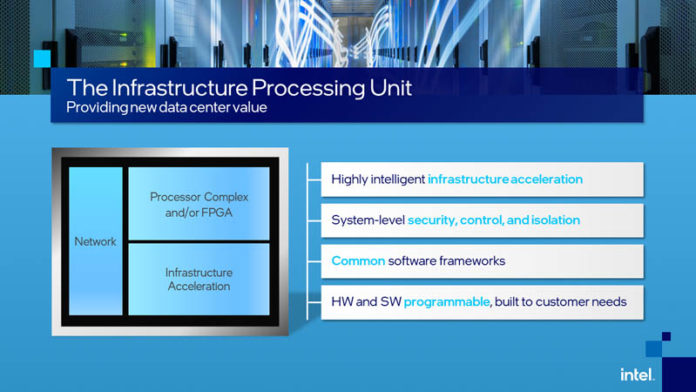

Intel's vision for this product combines networking, acceleration, and a processor and/or FPGA.

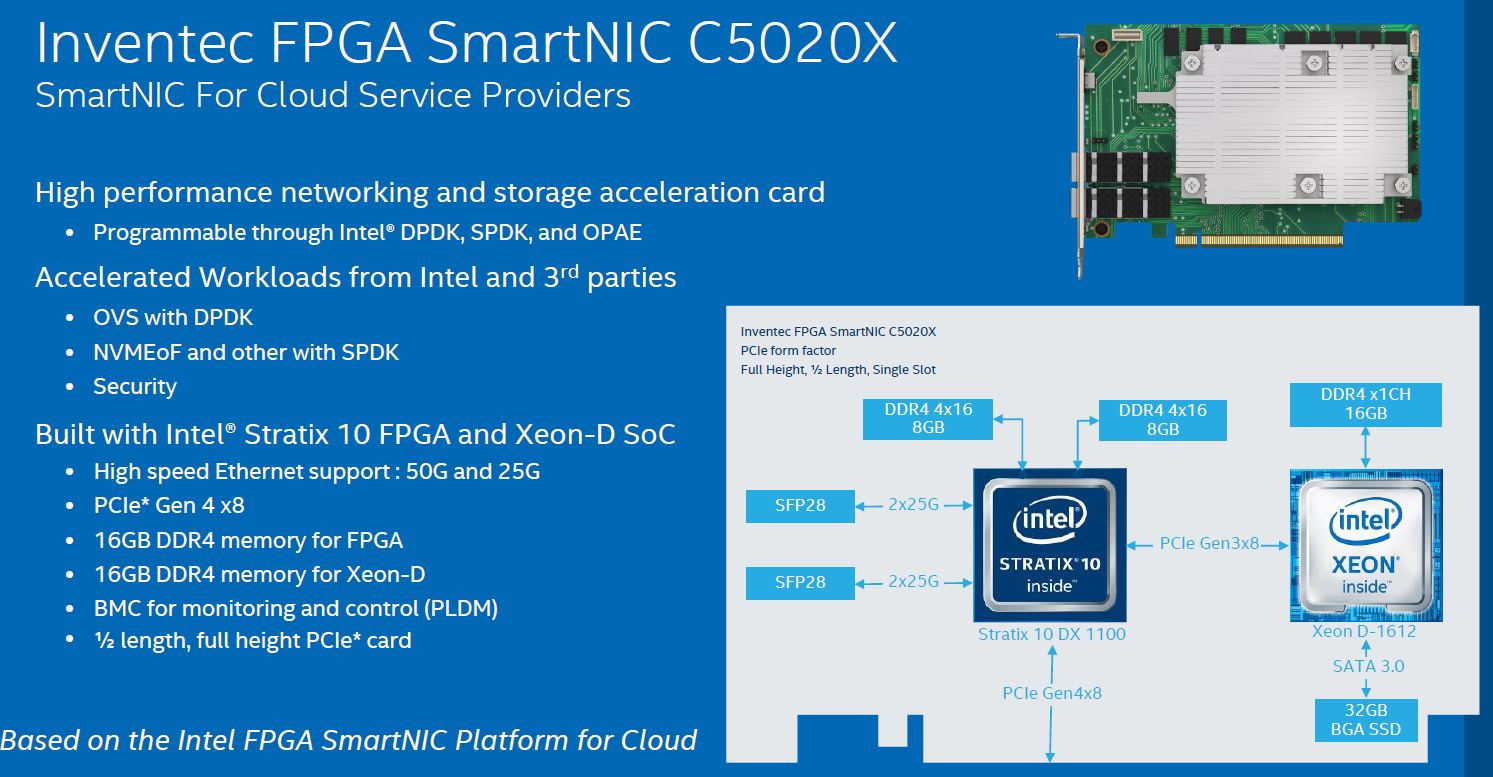

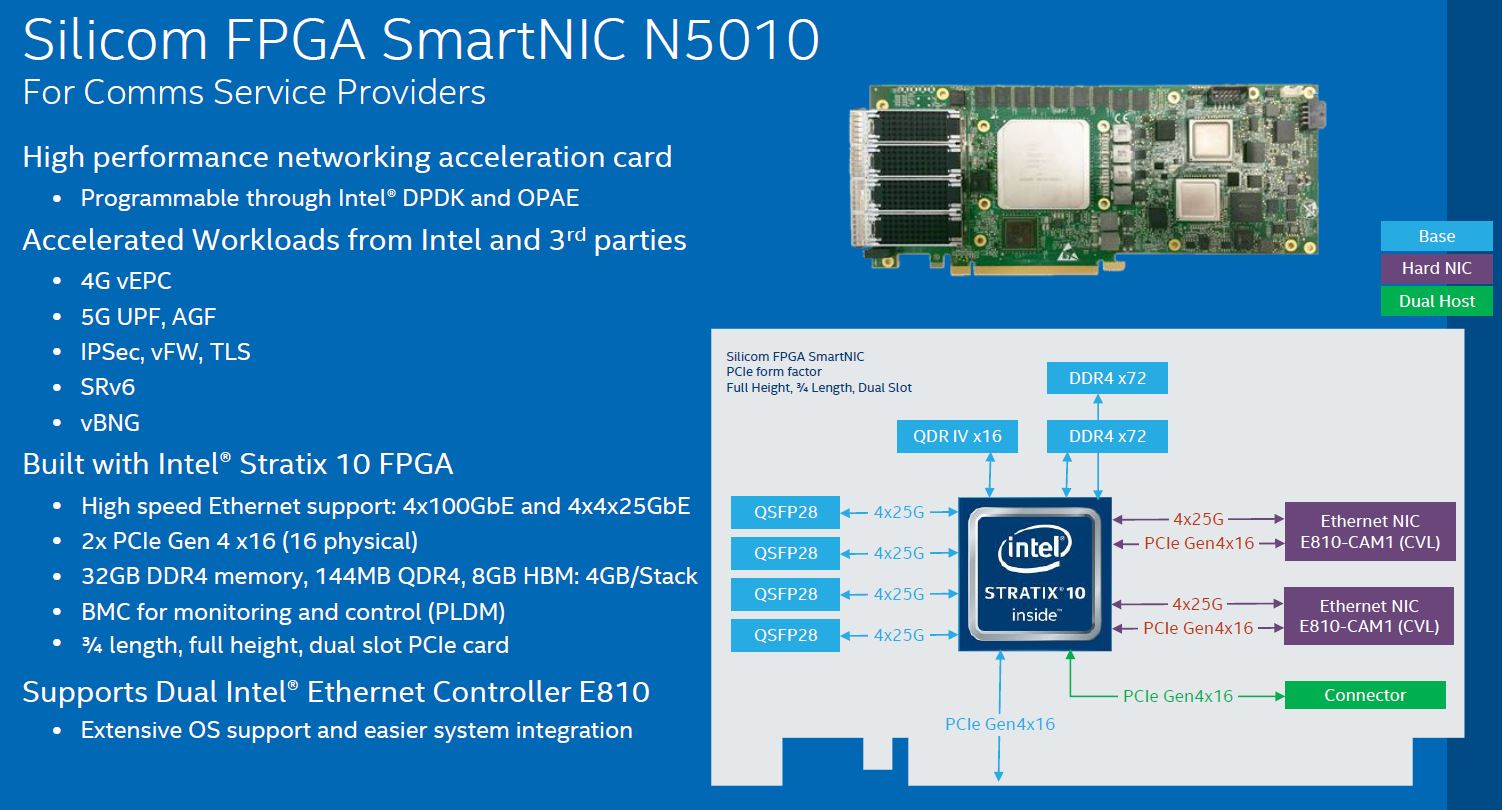

We know Intel has been active with this type of product in the hyper-scale space. One example is the Inventec FPGA SmartNIC C5020X, which combined a Stratix 10 and a Xeon D-1612 on a single card.

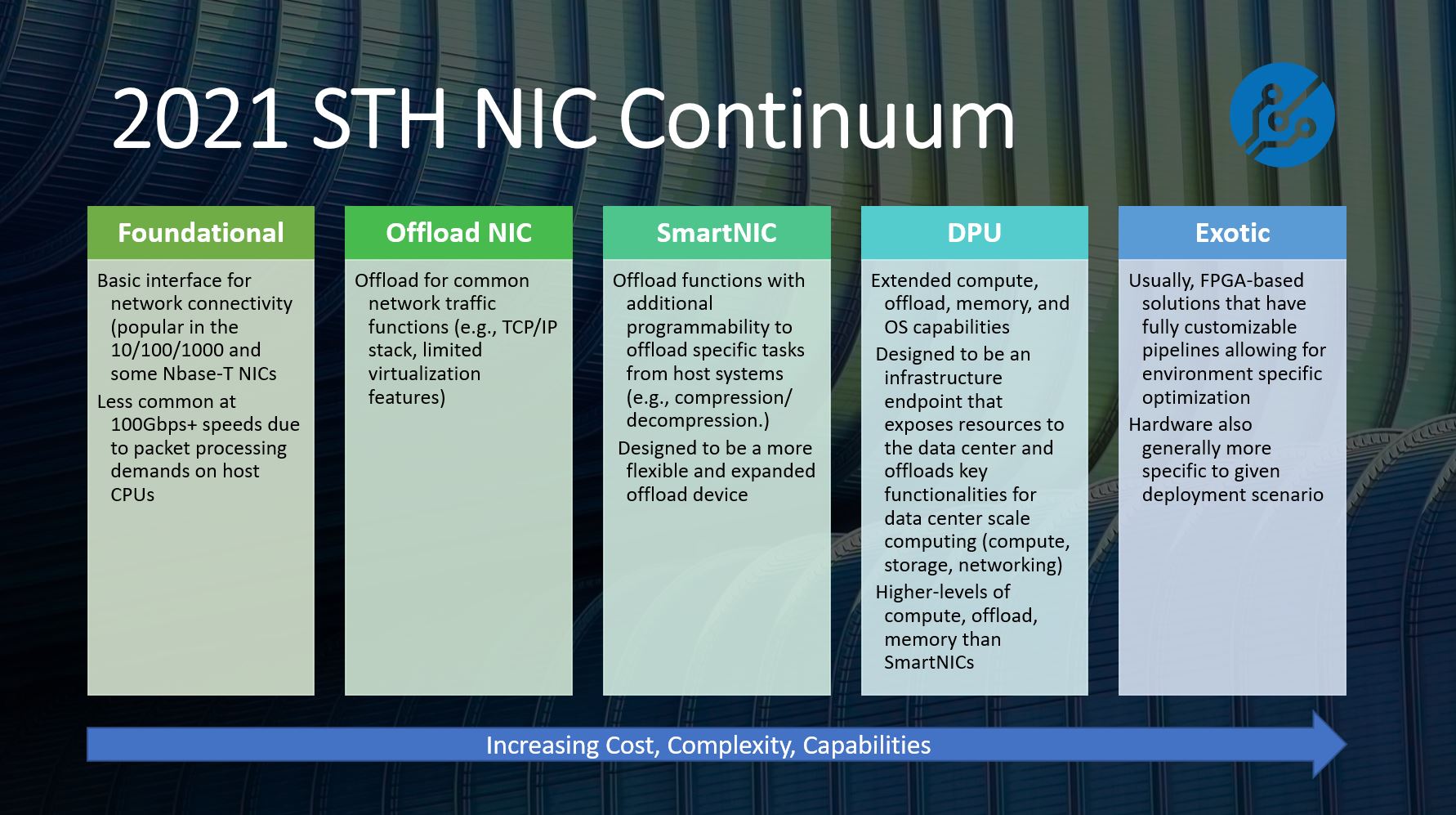

At this point, one may be wondering why the slide above uses the term SmartNIC when this is clearly a custom solution for a specific customer. Also, for devices in this segment, the rest of the market (NVIDIA/Mellanox, Marvell, Broadcom, Pensando, Fungible, VMware, and so forth), including Pat Gelsinger, Intel's CEO, in his previous role at VMware, uses the term DPU. There are certainly many terms, which is why we created the STH NIC Continuum Framework.

We do not have Intel's exact implementation details from today's IPU disclosure, but we generally put FPGA-based NICs in the "Exotic" category. FPGA solutions generally offer flexibility but require customer-specific teams to extract value, while DPUs are being marketed to a broader audience. If you want to learn more about the differences, in addition to the article above, we have a video showing actual DPU devices.

We have been foreshadowing that Intel internally uses a different name from the rest of the industry. IPU is that name.

Intel told us last week that, going forward, "Intel will roll out additional FPGA-based IPU platforms and dedicated ASICs. These solutions will be enabled by a powerful software foundation that allows customers to build leading-edge cloud orchestration software." (Source: Intel)

Although the company mentions ASICs, its Ethernet 800 Series we would consider more of an Offload NIC versus a SmartNIC. We would also put the IPU in more of the “Exotic” category rather than a DPU category based on the STH NIC Continuum framework.

Final Words

While NVIDIA and much of the rest of the industry are focused on either application-specific or general-purpose DPUs, Intel is, for the moment, focused on the IPU market for hyper-scale clients. Given that Intel's current CEO was extolling the virtues of DPUs, with VMware Project Monterey running ESXi on Arm on a DPU, just a few months ago while at VMware, we expect the DPU vision to become Intel's path forward.

In the meantime, we hope Intel clears up its marketing. Mellanox pioneered the use of IPU for this type of device but eventually moved to DPU to drive industry education and adoption. The reason we have a Continuum of NICs is that so many vendors were calling their products different things. The industry moved to DPU in the summer of 2020, with every major vendor adopting the term for its general-purpose infrastructure platforms. Moving away from IPU avoids confusion with these previous efforts and with confusingly similar names in the processor space, such as the Intel Image Processing Unit, the Graphcore IPU, and the Mellanox (now NVIDIA) IPU.

Intel currently seems focused on selling FPGA-based solutions to hyper-scale clients. Indeed, Pat Gelsinger did not even mention an Intel-based NIC at the VMware Project Monterey announcement. We expect to see more on this class of processors from Intel later this year, whereas we are already working with NVIDIA BlueField-2 DPUs in the STH lab. Given the timing of when we will likely hear more, our best guess is that broader availability of an Intel DPU-class device may come in early 2022, when NVIDIA will have its third-generation BlueField-3 device. While Intel may be leading the hyper-scale market with FPGA-based solutions, development for higher-margin non-hyperscale DPU integration is happening on Arm cores and on platforms from its competitors.

We hope Intel can create a DPU solution in the coming year, but for now, the Exotic IPU solution is a start.

IPU = Infrastructure Processing Unit, a small coprocessor or terminal node designed and configured to process data and instructions relating to infrastructure functions such as smart switching, console management and various I/O communications tasks that do not require main CPU/GPU resources in order to work.

Hat-tip to INTEL for LISTENING to the people on this one! It ain’t a “DPU”, it’s an IPU!! Let’s call ALL “DPU” things IPUs from now on, no exceptions allowed!

While the abbreviation IPU CAN refer to different things (such as an image processing unit, which could just as easily be called a video or vision processing unit with VPU as its abbreviation, shared with vector processing unit, also shortened to VPU), THIS version of IPU is MORE descriptive and BETTER than "DPU", as the terminology tells me EXACTLY what kind of data the thing is designed to process! IT SHOULD NOT MATTER WHETHER THE SPECIFIC DESIGN IMPLEMENTATION IS "EXOTIC" OR "GENERAL PURPOSE"! All that matters here is that the terminology is CONSISTENT and describes the exact function of the devices WITHOUT forcing me to engage in mental gymnastics to vainly attempt to work out precisely what data this device is processing. It shouldn't ever be vague. It should be obvious, and Intel's use of "IPU" in the context of the whole "DPU" thing makes PATENTLY CLEAR what their "DPU"-like device is doing. Again, as I have said, call these things what they are and resist as much as possible the urge to resort to vague terms that don't tell people what their devices do. Let's now get EVERYBODY ELSE to use "Infrastructure Processing Unit" in place of the stupidly vague "Data Processing Unit"! It gives a much more accurate description of the device's function and what kind of data it's processing AND IT JUST SOUNDS BETTER!

Rant over, thanks for your patience and listening.

Stephen, that isn’t what a DPU is designed to do. It’s hard to read a rant praising Intel when even Intel’s products are designed to do something different than you’re saying. I think that’s why STH is doing great work helping define categories. Maybe not perfect, but if it helps everyone get on the same lingo I don’t care what it says.

And these aren’t even small either. They’re already 8+ cores and going to be 16+ in the next year using over 65-75W. That makes them use more power than 2 Atom C3000 SoCs.

If Intel were to offer one of their QAT-enabled CPUs on an add-in card with a NIC or NICs and some sort of fast logical interface between the OS running on the expansion card and the one on the primary system would that qualify as a ‘DPU’ for the purposes of the STH classification?

I may have missed something, but that seemed to be what Nvidia was doing with BlueField, just with ARM cores and their own acceleration bits. It would also have a general purpose OS running on it, which would, I assume, keep it out of the 'exotic' category that any of their moves to put a big FPGA on a NIC would be placed in.

The General Purpose Compute block is interesting. CPU accelerators with sole access to their directly attached memory seem to be more scalable in performance than putting more and more CPUs in a package behind a bottlenecked memory controller.

IDK. I remember MS trying to convince the world that “DNS” means “Digital Nervous System”.

I think they'd need not just the CPU; they'd also need 100GbE or more, OS storage, memory, higher-speed accelerators, and both root and guest PCIe functionality from the lanes. They'd also need a bypass path that doesn't hit the CPU cores, which isn't going to happen on a Xeon D with QAT, if that's what you're getting at.

“Although the company mentions ASICs, its Ethernet 800 Series we would consider more of an Offload NIC versus a SmartNIC. We would also put the IPU in more of the “Exotic” category rather than a DPU category based on the STH NIC Continuum framework.”

Maybe you should wait until Intel announces the ASIC(s) before making a firm statement like this, since it rests on what is, in my opinion, a wrong assumption: that the ASIC will be based on the 800 Series.

To be as bold as you: I expect a "package" that combines a Tofino pipeline + foundational NIC chiplet (the ASIC part), an FPGA chiplet, a SerDes chiplet, an HBM chiplet, and an x86 chiplet (if they are really as far along as Pat Gelsinger stated in his keynote at the same conference; otherwise a separate Xeon D-like part) on one package substrate, plus accompanying drivers, Linux support, and a P4 compiler with a standard Ethernet switching program included for the Tofino pipeline. That would be the proof of Intel's chiplet combination vision and its open software vision, and it would put the device directly in your "DPU" category….

Btw, I agree with Stephen Beets, but hell, what's in a name? We are all pawns of the big vendors' marketing people….

As I mentioned before, there are really two completely different classes of devices under the "DPU" label.

On one side, there are the add-in cards that are basically a NIC with extra features (like these "IPUs"), and on the other side, there are the full-fat devices that completely cut out the host system (like the Fungible DPU).

I think only one of these should be called DPU, the other needs a different name.

“Show me the” chips. Until I see anything from Intel I don’t believe it. Too many schedule slips. I’ve seen the Microsoft units. I’ll just say no way an enterprise is going to ever deploy something like that.

I don't think the STH categories are perfect, but they're the best out there right now, and STH had the balls to do it. They're close, if not perfect, and they're online, so I'd say we may as well use them as an industry. We'll just put DPU in our RFP, and if an OEM pitches an Intel FPGA IPU, they'll get a "does not meet RFP requirements."

@TomK

Do you at least agree with my original claim that calling something a “data processing unit” is much too vague? Do you at least agree with my stance that companies ought to be more specific with the terminology they apply to these things? If so, where’s the problem in my ranting? Am I attacking the wrong thing here?

Also, can you give me, a tech enthusiast, a GOOD REASON why these "DPU" things need so many cores? I mean, 8+ to 16+ cores seems patently insane for something whose main purpose is I/O communications and management. At some point, why stick one of these inside your server? Just buy yet another server and dedicate it to this task exclusively. Would I be wrong to claim that this might, just might, represent a HUGE waste of technology anyway? Why do we need this in the first place when you're gonna have 256+ core main CPUs anyway? Is it really that big an issue to have some of those cores tied up in management and I/O functions? Maybe I'm missing the point entirely. I don't do this for a living. Sorry for being such a pain.