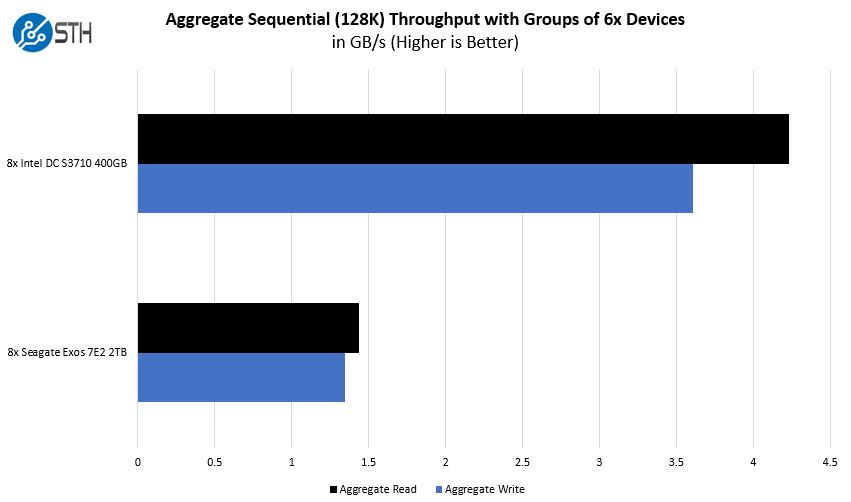

Inspur Systems NF5280M5 Storage Performance

Our Inspur Systems NF5280M5 was a 12x 3.5″ configuration. This configuration is focused on SATA/SAS storage rather than NVMe. Inspur also offers higher-end NVMe storage and 2.5″ storage configurations that we were not able to test.

Overall, we saw storage performance in line with what we would expect from these drives. The Inspur Systems NF5280M5 was able to handle our storage configurations without issue.

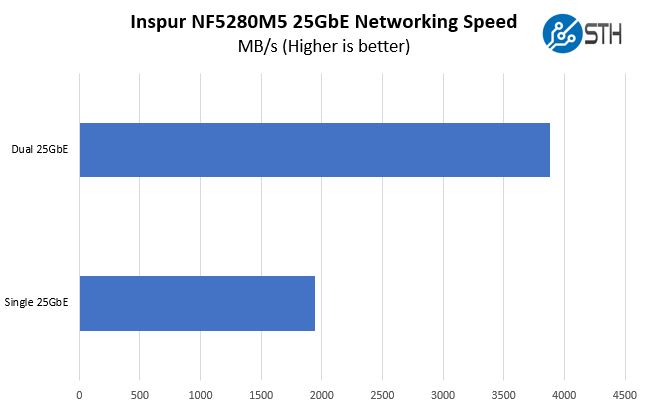

Inspur Systems NF5280M5 Networking Performance

We used the Inspur Systems NF5280M5 with a dual-port Mellanox ConnectX-4 Lx 25GbE OCP NIC. The server itself supports riser configurations for more networking; this is simply all we could fit in our test system given the configuration we were using. At the same time, it represents what we expect will be a very popular solution for the server.

25GbE infrastructure has become extremely popular. It provides a lower-latency connection than legacy 10GbE/40GbE. One also gets higher switch port bandwidth density than with the older standards. As a result, we are seeing many of our readers deploy 25GbE today. Next year, as PCIe Gen4 becomes more widespread, this may change. For now, a dual 25GbE connection is a good match for a PCIe Gen3 x8 slot, which will make it a popular choice for Intel servers.
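To illustrate why dual 25GbE pairs well with a PCIe Gen3 x8 slot, here is a rough back-of-the-envelope sketch. It uses the published PCIe Gen3 signaling rate (8 GT/s per lane, 128b/130b encoding) and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function names are ours, purely for illustration.

```python
# Rough link-budget check: does a NIC's aggregate line rate fit in a PCIe slot?
# Assumption: raw PCIe Gen3 figures only; TLP/DLLP protocol overhead is ignored.

PCIE_GEN3_GT_PER_LANE = 8.0        # giga-transfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130    # 128b/130b line encoding used by PCIe Gen3


def pcie_gen3_gbps(lanes: int) -> float:
    """Usable bandwidth of a PCIe Gen3 link in Gbps, per direction."""
    return PCIE_GEN3_GT_PER_LANE * ENCODING_EFFICIENCY * lanes


def nic_gbps(ports: int, port_speed_gbe: float) -> float:
    """Aggregate line rate of a NIC in Gbps, per direction."""
    return ports * port_speed_gbe


slot = pcie_gen3_gbps(8)      # roughly 63 Gbps for a x8 slot
dual_25 = nic_gbps(2, 25)     # 50 Gbps
dual_40 = nic_gbps(2, 40)     # 80 Gbps

print(f"PCIe Gen3 x8: {slot:.1f} Gbps")
print(f"Dual 25GbE fits: {dual_25 <= slot}")
print(f"Dual 40GbE fits: {dual_40 <= slot}")
```

By this estimate, two 25GbE ports leave some headroom in a Gen3 x8 slot, while two 40GbE ports running at line rate would exceed it.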

Inspur Systems NF5280M5 Test Configuration

For our review, we are using the same configuration we have been using for our 2nd Generation Intel Xeon Scalable CPU dual-socket reviews:

- System: Inspur Systems NF5280M5

- CPUs: Intel Xeon Platinum 8280, Intel Xeon Platinum 8268, Intel Xeon Platinum 8260, Intel Xeon Gold 6242, Intel Xeon Gold 6230, Intel Xeon Gold 5220

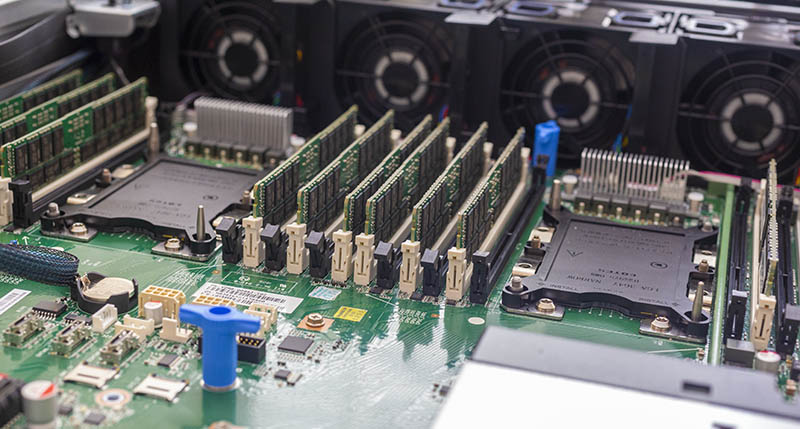

- RAM: 12x 32GB DDR4-2933 ECC RDIMMs

- Storage: 2x Intel DC S3520 480GB OS

- PCIe Networking: Mellanox ConnectX-4 Lx 25GbE

A quick note here: we did not utilize Intel Optane DCPMM because we had standard chips. Even using just two 128GB modules per CPU, which would stay well below the 1TB per CPU memory limit, would have meant our memory would run at only DDR4-2666 speeds.
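For a sense of what that speed drop means, here is a quick sketch of the theoretical peak memory bandwidth per CPU at each speed, along with the capacity math for the hypothetical DCPMM setup. It assumes six DDR4 channels per socket with one 32GB RDIMM per channel (our 12 DIMMs split across two CPUs) and a 64-bit data path per channel. It also assumes DRAM and DCPMM both count toward the 1TB limit. These are theoretical peaks, not measured results.

```python
# Theoretical peak memory bandwidth and capacity per CPU.
# Assumptions: 6 DDR4 channels per socket, 8 bytes (64 bits) per transfer,
# one 32GB RDIMM per channel, and 2x 128GB DCPMM per CPU in the "what if" case.

CHANNELS_PER_CPU = 6
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(mega_transfers: int, channels: int = CHANNELS_PER_CPU) -> float:
    """Theoretical peak bandwidth in GB/s for a given DDR4 speed in MT/s."""
    return mega_transfers * BYTES_PER_TRANSFER * channels / 1000


bw_2933 = peak_bandwidth_gbs(2933)
bw_2666 = peak_bandwidth_gbs(2666)
loss_pct = (bw_2933 - bw_2666) / bw_2933 * 100

dram_gb = 6 * 32            # DRAM per CPU as tested
dcpmm_gb = 2 * 128          # hypothetical DCPMM per CPU
total_gb = dram_gb + dcpmm_gb
limit_gb = 1024             # 1TB per CPU limit on standard SKUs

print(f"DDR4-2933: {bw_2933:.1f} GB/s per CPU")
print(f"DDR4-2666: {bw_2666:.1f} GB/s per CPU ({loss_pct:.1f}% lower)")
print(f"DRAM + DCPMM: {total_gb} GB per CPU, under limit: {total_gb < limit_gb}")
```

Under these assumptions, capacity is not the constraint. The cost of adding DCPMM here would have been roughly a 9% reduction in theoretical peak memory bandwidth.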

Not all dual-socket Intel Xeon servers can handle dual Intel Xeon Platinum 8280 processors and their 205W TDP. The Inspur NF5280M5 is able to scale that high. We only tested higher-end Xeon CPUs in this server, but it also supports Xeon Bronze and Xeon Silver SKUs as well as the first generation of Intel Xeon Scalable processors. If you are reading this review, we highly suggest using second-generation SKUs with the server. Intel added a significant amount of performance in the lower segments of the SKU stack with the new CPUs.

Next, we are going to take a look at the Inspur Systems NF5280M5 power consumption before looking at the STH Server Spider for the system and concluding with our final words.

Is Inspur going to do an AMD EPYC 7002 Rome server? I’d like to see that reviewed if so. If not, they’re behind.

Hot Swap Internal Fans?

Who is going to pull a rack server out and remove the cover without shutting it down first?

Basically everyone who wants to avoid unnecessary downtime.

(Servers with hot-swap components are designed to run without a lid for short periods of time.)