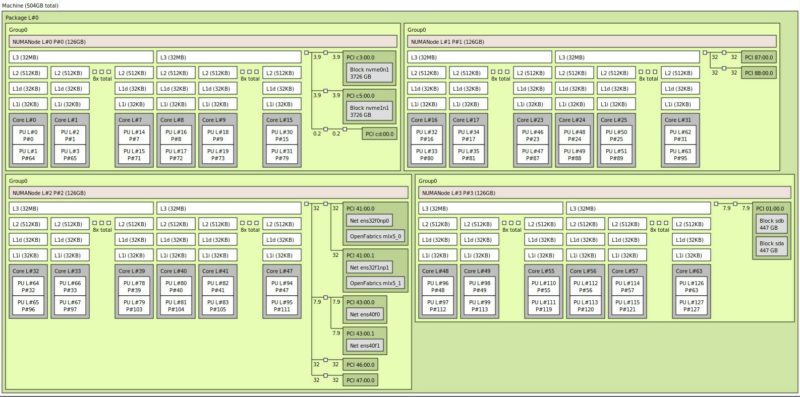

Inspur NF3180A6 Topology

For folks who are interested, here is the topology of the system, with a twist.

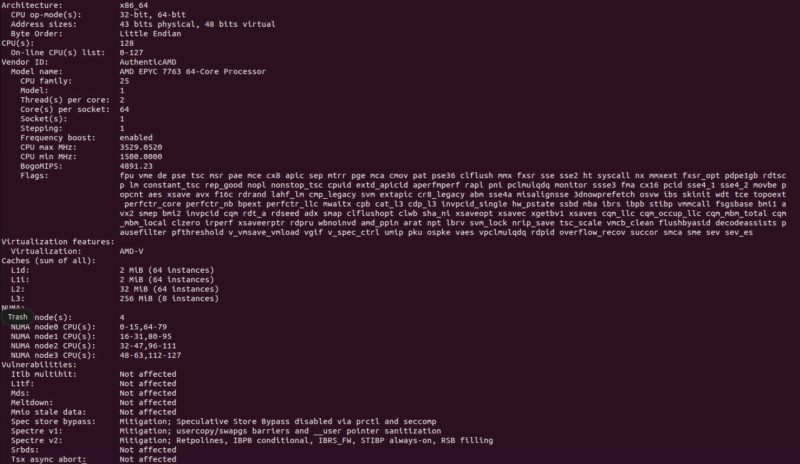

The system came with NPS=4 set instead of NPS=1. That means that the AMD EPYC 7763 was split into four NUMA nodes as we can see in the above topology map and the below lscpu output.

This is an option that keeps data more localized to a set of cores and memory channels. The default is NPS=1 where the entire EPYC is a single NUMA node. Still, what the topology map allows us to see is how the PCIe devices are split among the four CPU NUMA nodes that are created with the NPS=4 option. Since this is a single-socket AMD EPYC 7003 “Milan” platform, all of the PCIe and memory connectivity goes directly to the single CPU and there is no PCH. This was a bit more exciting to show our readers than the NPS=1 version.
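For readers who want to reproduce this kind of device-to-node mapping on their own Linux system, here is a minimal sketch that reads the standard sysfs files the kernel exposes for NUMA nodes and PCIe devices. The function names are our own for illustration, and the output will depend on the NPS setting and on which devices are installed.

```python
from collections import defaultdict
from pathlib import Path


def parse_cpulist(text):
    """Expand a kernel cpulist string such as '0-15,64-79' into a list of ints."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpulist means the node has no CPUs
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_nodes(sys_root="/sys"):
    """Map each NUMA node ID to the list of logical CPUs it contains."""
    nodes = {}
    base = Path(sys_root) / "devices/system/node"
    for node_dir in base.glob("node[0-9]*"):
        node_id = int(node_dir.name[len("node"):])
        nodes[node_id] = parse_cpulist((node_dir / "cpulist").read_text())
    return nodes


def pci_by_node(sys_root="/sys"):
    """Group PCI device addresses by the NUMA node the kernel reports for them."""
    by_node = defaultdict(list)
    for dev in sorted((Path(sys_root) / "bus/pci/devices").iterdir()):
        numa_file = dev / "numa_node"
        if numa_file.exists():
            # The kernel reports -1 when a device has no NUMA affinity
            by_node[int(numa_file.read_text())].append(dev.name)
    return dict(by_node)


if __name__ == "__main__":
    for node_id, cpus in sorted(numa_nodes().items()):
        print(f"node{node_id}: {len(cpus)} logical CPUs")
    for node_id, devices in sorted(pci_by_node().items()):
        print(f"node{node_id}: {len(devices)} PCI devices")
```

With NPS=4 we would expect four nodes to be listed, and with NPS=1 only one, with every PCIe device attached to it.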

Next, let us get to management.

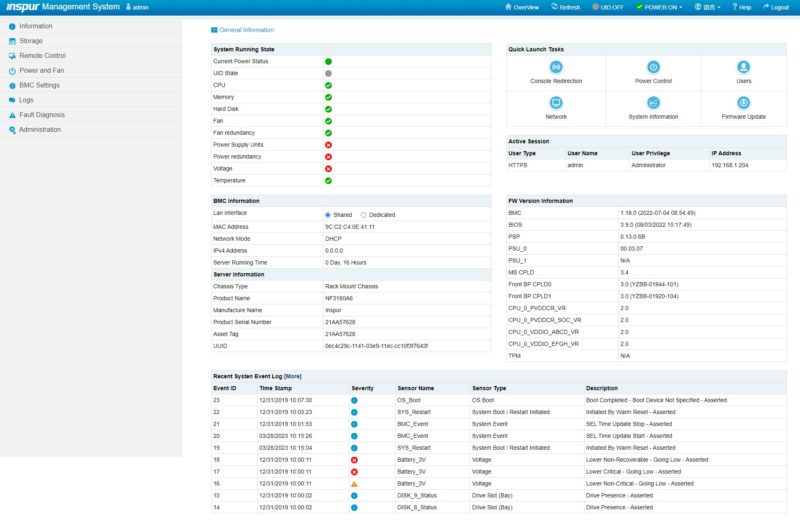

Inspur NF3180A6 Management

Inspur’s primary management is via IPMI and Redfish APIs. We have shown this before, so we are not going to go into too much detail, but we will have a recap here. Those APIs are what most hyper-scale and CSP customers will utilize to manage their systems. Inspur also includes a robust and customized web management platform with its management solution.
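As a rough illustration of what API-driven management looks like, here is a minimal sketch that lists the systems a BMC exposes over Redfish. The `/redfish/v1/Systems` collection path comes from the DMTF Redfish standard. The BMC address, credentials, and helper function names are hypothetical, and we have not verified which resources this particular BMC populates.

```python
import base64
import json
import urllib.request


def redfish_get_request(bmc_host, path, user, password):
    """Build (but do not send) a GET request for a Redfish resource using HTTP Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{bmc_host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


def member_uris(collection):
    """Return the @odata.id links from a Redfish collection payload."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


if __name__ == "__main__":
    # Hypothetical BMC address and credentials; substitute your own
    req = redfish_get_request("192.0.2.10", "/redfish/v1/Systems", "admin", "changeme")
    # Many BMCs ship with self-signed certificates, in which case urlopen
    # needs an ssl.SSLContext that trusts the BMC's certificate
    with urllib.request.urlopen(req, timeout=10) as resp:
        print(member_uris(json.load(resp)))
```

Each URI that comes back can be fetched the same way to read a system's power state, health, and inventory.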

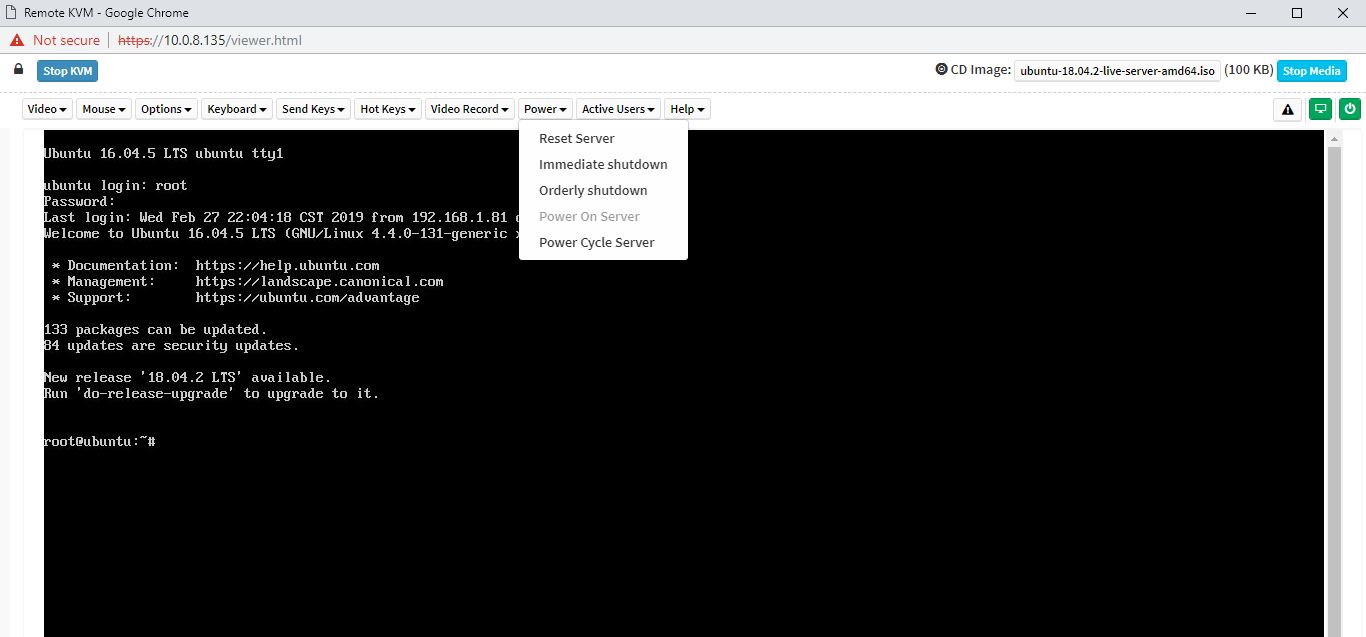

There are key features we would expect from any modern server. These include the ability to power cycle a system and remotely mount virtual media. Inspur also has an HTML5 iKVM solution that has these features included. Some other server vendors do not have a fully-featured HTML5 iKVM including virtual media support as of this review being published.
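The same power cycle can also be scripted instead of clicked. Below is a minimal sketch of the standard Redfish `ComputerSystem.Reset` action. The system ID and BMC address are hypothetical, authentication is omitted for brevity, and the action URI shown is the conventional one; in practice it should be read from the `Actions` property of the system resource, along with the reset types that a given BMC actually allows.

```python
import json
import urllib.request

# A subset of the ResetType values defined by the Redfish schema;
# a given BMC may support only some of them
RESET_TYPES = {
    "On",
    "ForceOff",
    "GracefulShutdown",
    "GracefulRestart",
    "ForceRestart",
    "PowerCycle",
}


def reset_request(bmc_host, system_id, reset_type="PowerCycle"):
    """Build (but do not send) the POST request for a ComputerSystem.Reset action."""
    if reset_type not in RESET_TYPES:
        raise ValueError(f"unsupported ResetType: {reset_type}")
    url = (
        f"https://{bmc_host}/redfish/v1/Systems/{system_id}"
        "/Actions/ComputerSystem.Reset"
    )
    body = json.dumps({"ResetType": reset_type}).encode()
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical host and system ID; nothing is sent to a BMC here
    req = reset_request("192.0.2.10", "1")
    print(req.get_method(), req.full_url)
    print(req.data.decode())
```

Building the request separately from sending it makes it easy to log or review exactly what will be issued before a production server is restarted.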

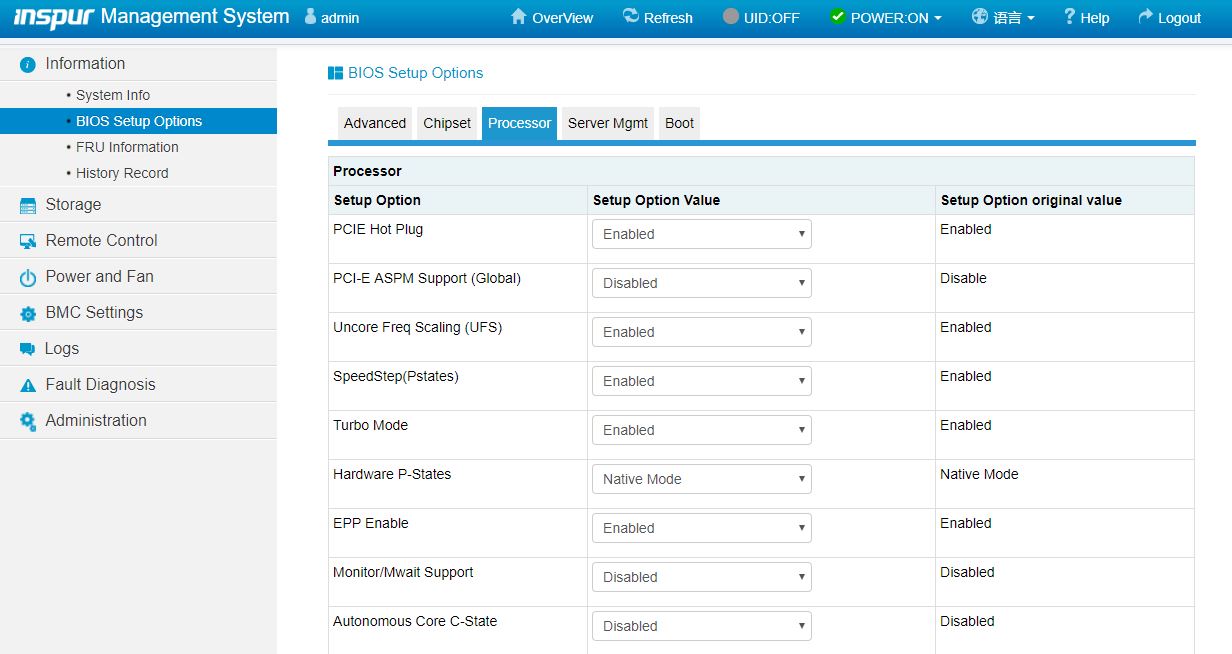

Another feature worth noting is the ability to set BIOS settings via the web interface. That is a feature we see in solutions from top-tier vendors like Dell EMC, HPE, and Lenovo, but one that many vendors in the market do not offer.

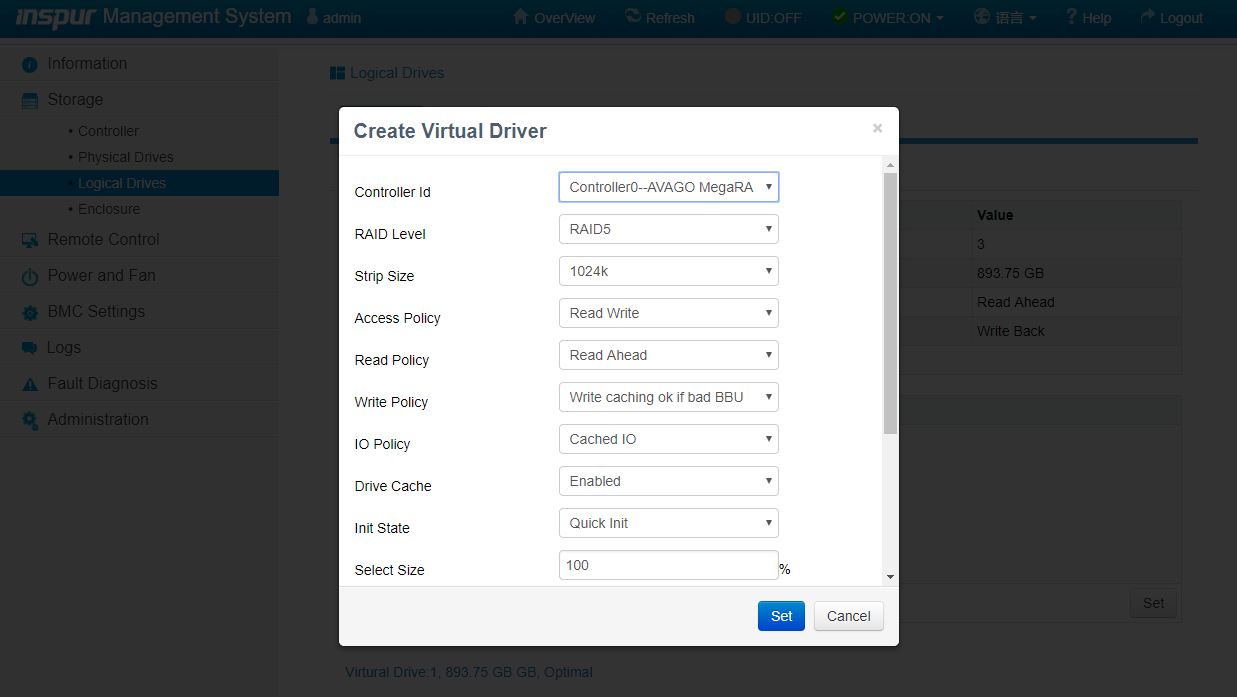

Another web management feature that differentiates Inspur from lower-tier OEMs is the ability to create virtual disks and manage storage directly from the web management interface. Some solutions allow administrators to do this via Redfish APIs, but not web management. This is another great inclusion here.

Based on comments in our previous articles, many of our readers have not used an Inspur Systems server and therefore have not seen the management interface. We have an 8-minute video clicking through the interface and doing a quick tour of the Inspur Systems management interface:

It is certainly not the most entertaining subject. However, if you are considering these systems, you may want to know what the web management interface looks like on each machine, and that tour can be helpful.

Next, let us discuss performance.

Inspur NF3180A6 Performance

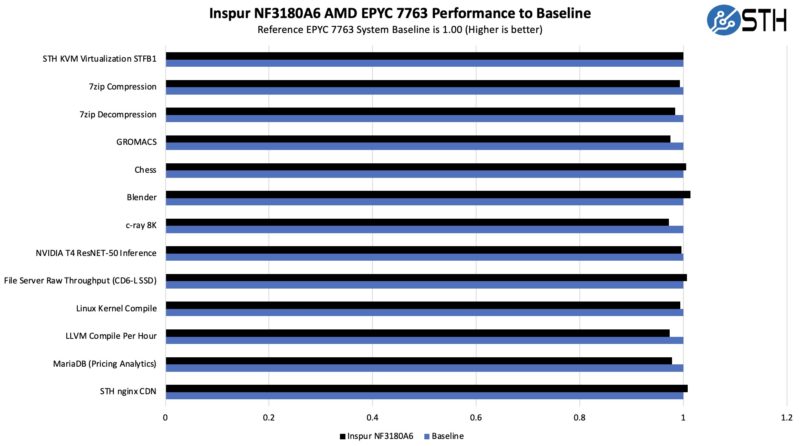

In terms of performance, the AMD EPYC 7763 has been out for some time, so its performance is fairly well-known. We just wanted to run the server through our benchmark suite to validate that the cooling is capable of letting the processor turbo.

This is fairly good performance, and the AMD EPYC 7763 appears to be performing well. We think that the EPYC 7763 is probably a higher-end part than many users will choose since the single-socket “P” SKUs are lower-cost options.

Next, let us get to power consumption.

Is this a “your mileage can and will vary; better do your benchmarking and validating” type question; or is there an at least approximate consensus on the breakdown in role between contemporary Milan-based systems and contemporary Genoa ones?

RAM is certainly cheaper per GB on the former; but that’s a false economy if the cores are starved for bandwidth and end up being seriously handicapped; and there are some nice-to-have generational improvements on Genoa.

Is choosing to buy Milan today a slightly niche (if perhaps quite a common niche; like memcached, where you need plenty of RAM but don’t get much extra credit for RAM performance beyond what the network interfaces can expose) move; or is it still very much something one might do for, say, general VM farming so long as there isn’t any HPC going on, because it’s still plenty fast and not having your VM hosts run out of RAM is of central importance?

Interesting, it looks like the OCP 3.0 slot for the NIC is fed via cables to those PCIe headers at the front of the motherboard. I know we’ve been seeing this on a lot of the PCIe Gen 5 boards, I’m sure it will only get more prevalent as it’s probably more economical in the end.

One thing I’ve wondered is, will we get to a point where the OCP/PCIe card edge connectors are abandoned, and all cards have is power connectors and slimline cable connectors? The big downside is that those connectors are way less robust than the current ‘fingers’.

I think AMD and Intel are still shipping more Milan and Ice than Genoa and Sapphire. Servers are not like the consumer side where demand shifts almost immediately with the new generation.