Inspur NF3180A6 Internal Hardware Overview

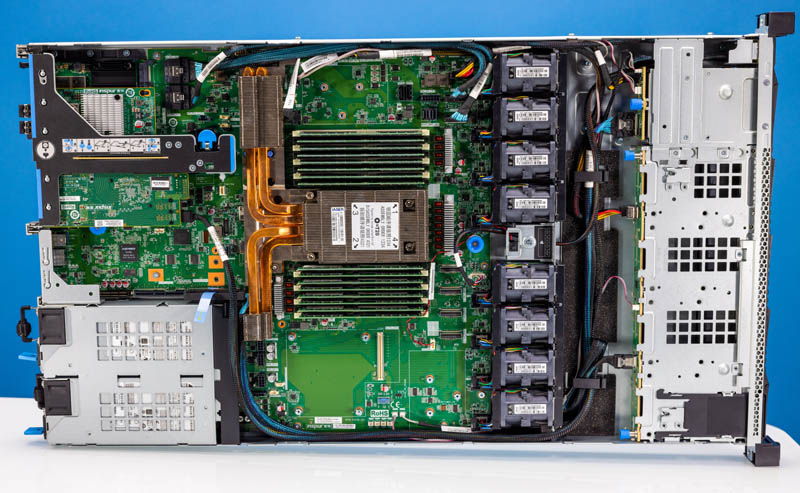

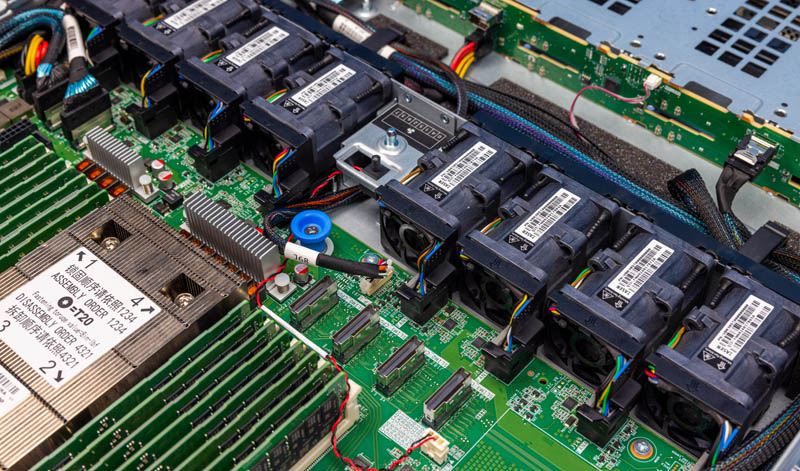

Here is a look inside the server with the front drive bays to the right and the rear I/O to the left to help orient our readers in this section.

Behind the drive bays is a fan partition. You can see the honeycomb airflow guide on the front of the fans as well.

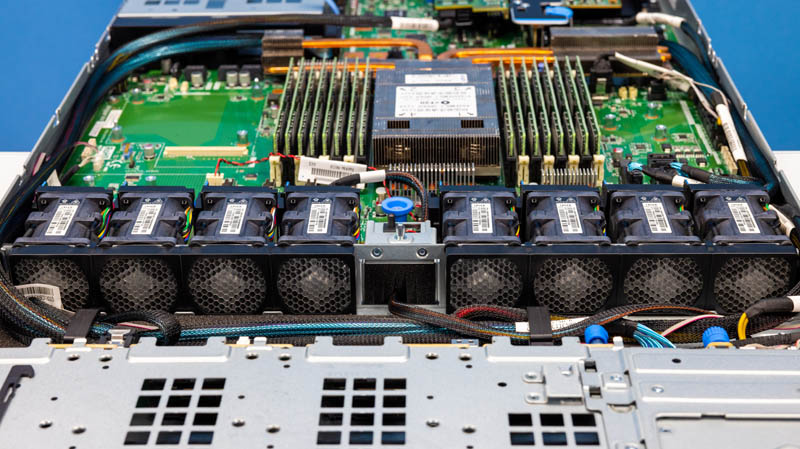

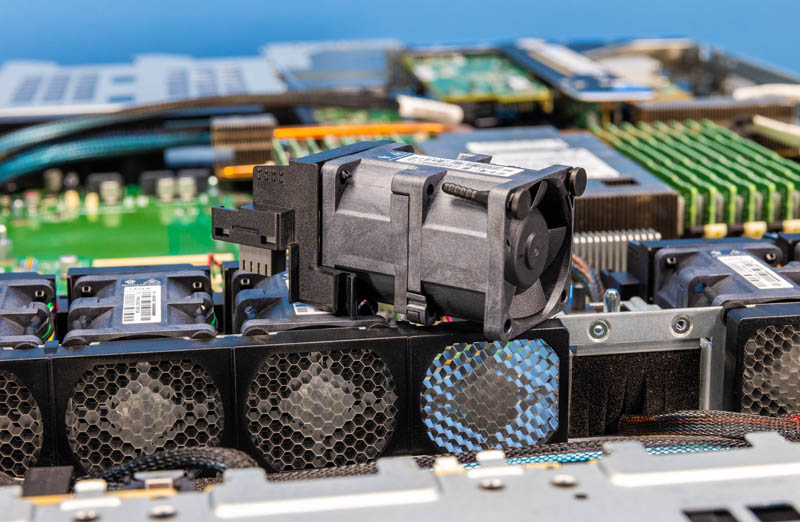

This fan partition is made up of eight dual fan modules. A nice feature is that these modules are a higher-end design that does not utilize cabled connections to the motherboard. This is common in 2U servers, but many 1U servers do not have this feature.

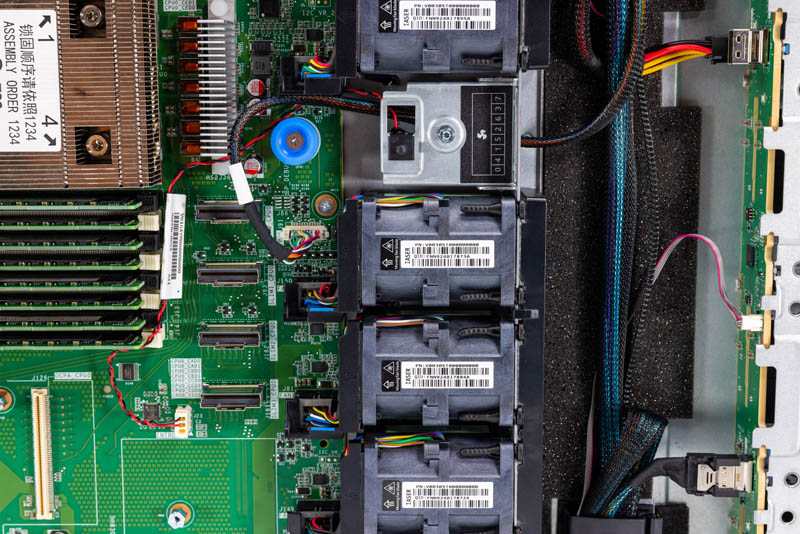

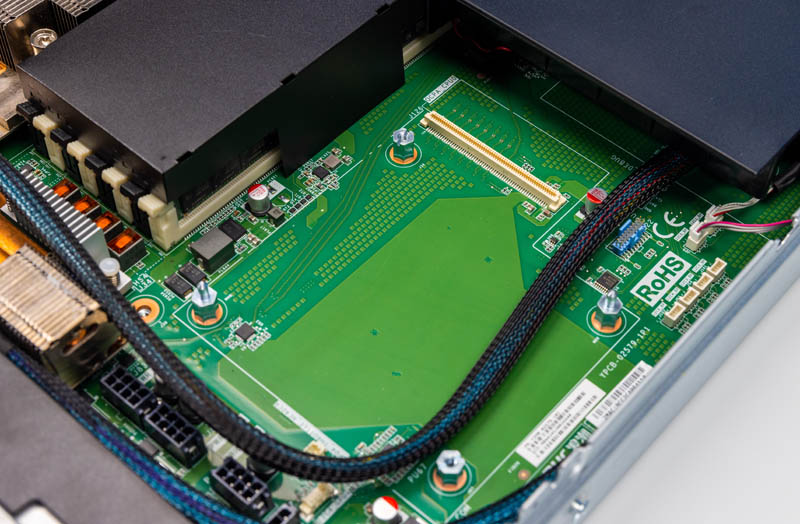

Behind the fans are PCIe connectors that this system is not using. Our sense is that if we had a denser NVMe configuration these would be populated with cables for front NVMe.

Here is another angle showing how the fans and this PCIe Gen4 I/O area work.

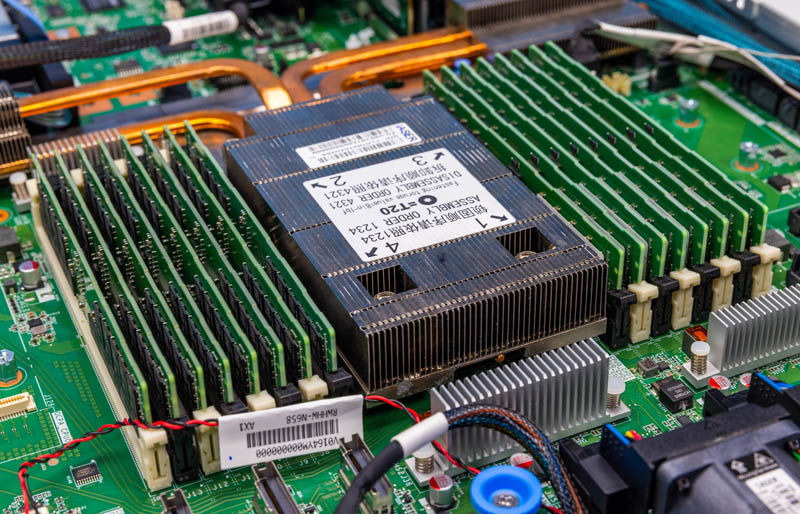

Behind that partition, the big feature is the single AMD EPYC 7002/7003 SP3 socket. In our server, we have a single AMD EPYC 7763 64-core part. While that is still a PCIe Gen4/DDR4 generation CPU, it has 64 cores, so in normal x86 (non-accelerated) computing, it is competitive with many of the Sapphire Rapids Xeon parts. Another huge benefit is that as a single-socket server, one can use “P” SKUs that offer significant discounts for single-socket-only servers.

The server also uses DDR4 memory, running at DDR4-3200 with one DIMM per channel (1DPC) and DDR4-2933 with two DIMMs per channel (2DPC), making it much lower cost than the new DDR5 generation. Our system is filled with 16x DDR4 DIMMs for a 2DPC setup.
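To put the 1DPC versus 2DPC speed difference in perspective, here is a rough back-of-the-envelope sketch of theoretical peak memory bandwidth. It assumes the eight DDR4 channels of an SP3 socket and a 64-bit (8-byte) data path per channel. The function name and constants are ours for illustration, and real-world bandwidth will be lower than these theoretical figures.

```python
# Rough theoretical peak memory bandwidth for a single-socket SP3 system.
# Assumptions (not measured on this server): 8 DDR4 channels per socket,
# 8 bytes transferred per channel per transfer, no ECC or protocol overhead.

CHANNELS = 8            # DDR4 channels on an SP3 socket (assumed)
BYTES_PER_TRANSFER = 8  # 64-bit data bus per channel
CORES = 64              # EPYC 7763 core count


def peak_bandwidth_gbs(mega_transfers_per_sec: int, channels: int = CHANNELS) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_sec = mega_transfers_per_sec * 1_000_000 * BYTES_PER_TRANSFER * channels
    return bytes_per_sec / 1e9


if __name__ == "__main__":
    for label, speed in (("1DPC DDR4-3200", 3200), ("2DPC DDR4-2933", 2933)):
        total = peak_bandwidth_gbs(speed)
        print(f"{label}: {total:.1f} GB/s total, {total / CORES:.2f} GB/s per core")
```

Under these assumptions, the 2DPC configuration gives up roughly 8% of theoretical peak bandwidth in exchange for double the DIMM count.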

Here is the server with the full airflow guide configuration. There is a baffle that guides air from the fans to the CPU heatsink. There are also guides around the DDR4 memory so that the memory does not use too much airflow.

Here is a slightly different angle to help give some perspective.

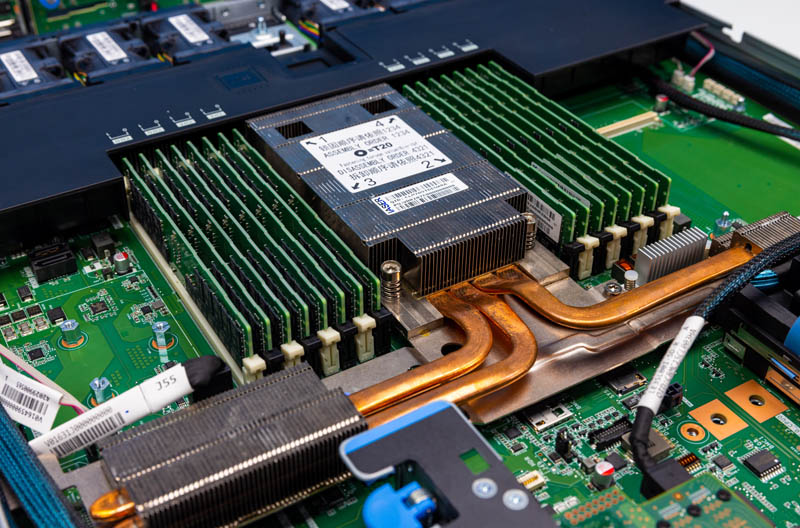

At this point, many of our readers will notice a key feature: the heatsink. The heatsink has extensions connected via heat pipes to add surface area for cooling.

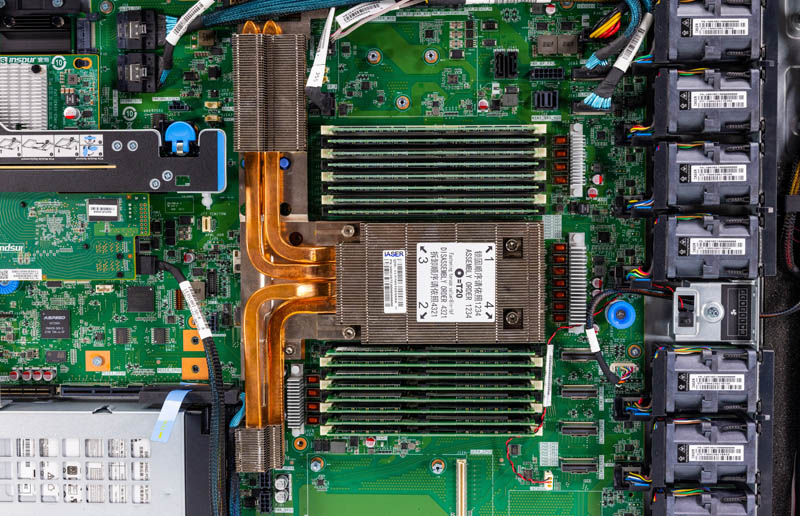

Here is a top view of the AMD EPYC processor, memory, and cooling just for some sense of scale.

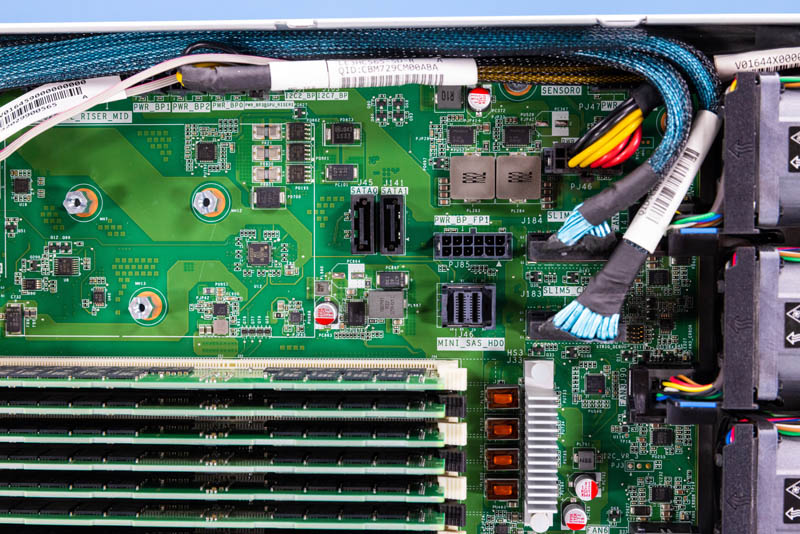

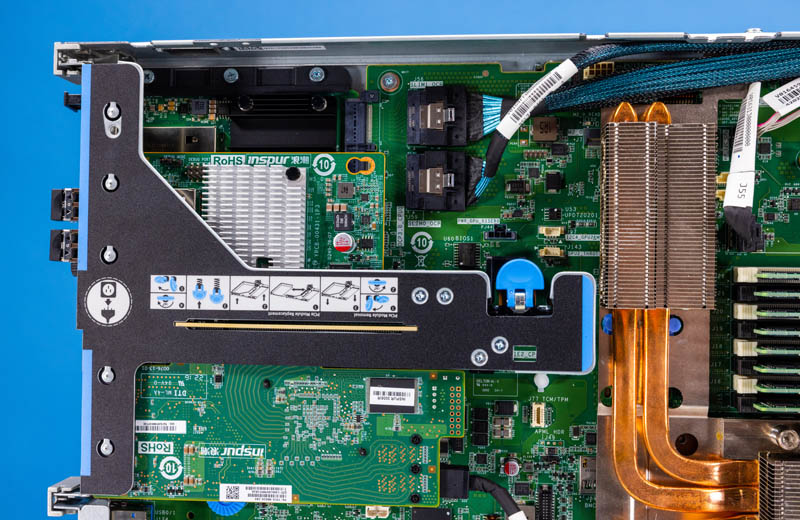

Next to the memory, we have an OCP slot for internal mezzanine cards like a SAS controller.

There is a fairly large area in the server dedicated to this function.

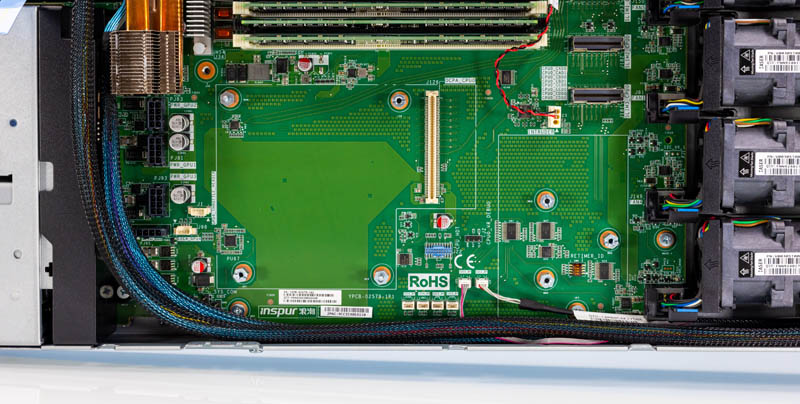

That OCP area is in front of the power supplies. Next to the power supplies we saw this large PCIe connector with a big pull tab.

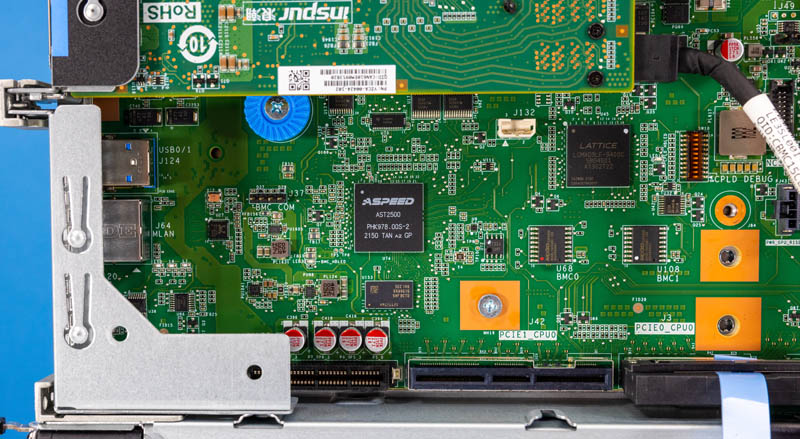

Here is that plus the ASPEED AST2500 BMC.

On the other side, next to the DIMMs, we have a number of connectors. That includes two 7-pin SATA connectors.

Behind this area is the cooler extension and then additional I/O.

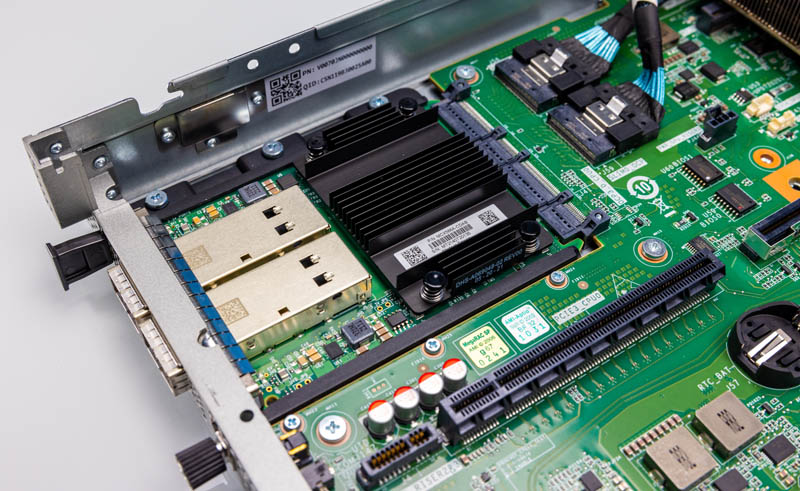

Part of that is the OCP NIC 3.0 slot. That has an NVIDIA ConnectX-5 100GbE card installed.

Next, let us get to the system topology.

Is this a “your mileage can and will vary; better do your benchmarking and validating” type question, or is there at least an approximate consensus on the breakdown in role between contemporary Milan-based systems and contemporary Genoa ones?

RAM is certainly cheaper per GB on the former; but that’s a false economy if the cores are starved for bandwidth and end up being seriously handicapped; and there are some nice-to-have generational improvements on Genoa.

Is choosing to buy Milan today a slightly niche move (if perhaps quite a common niche, like memcached, where you need plenty of RAM but don’t get much extra credit for RAM performance beyond what the network interfaces can expose); or is it still very much something one might do for, say, general VM farming so long as there isn’t any HPC going on, because it’s still plenty fast and not having your VM hosts run out of RAM is of central importance?

Interesting, it looks like the OCP 3.0 slot for the NIC is fed via cables to those PCIe headers at the front of the motherboard. I know we’ve been seeing this on a lot of the PCIe Gen 5 boards, and I’m sure it will only get more prevalent as it’s probably more economical in the end.

One thing I’ve wondered is, will we get to a point where the OCP/ PCIe card edge connectors are abandoned, and all cards have is power connectors and slimline cable connectors? The big downside is that those connectors are way less robust than the current ‘fingers’.

I think AMD and Intel are still shipping more Milan and Ice than Genoa and Sapphire. Servers are not like the consumer side where demand shifts almost immediately with the new generation.