Traditional 2.5″ form factors that encompass hard drives and SSDs are on notice. As the market transitions to new generations of PCIe connectivity, EDSFF is going to start taking share from the 2.5″ SSD market. One could call this a “future” technology since it will be more prevalent in a few years, but that is not entirely the case. In this hands-on look, we have the 1U half-petabyte Supermicro EDSFF server. It reaches around a half-petabyte using today’s 15-16TB EDSFF drives, and the 30TB+ EDSFF drives on the horizon will make it a 1PB/U platform. In this article, we are going to take a look at the system along with the 15.36TB Intel SSDs being used in these systems today. While this form factor may be more prevalent in the future, you can buy these servers and drives today.

Video Version

This is part of our visit to the Supermicro HQ post-lockdown series. We previously looked at the Supermicro 3rd Gen Xeon Scalable Server which we showcased alongside the Cooper Lake launch. We also looked at the Simply Double platform and the company’s new 60-Bay Top Loading Storage Server. As a part of that series, we have an accompanying video:

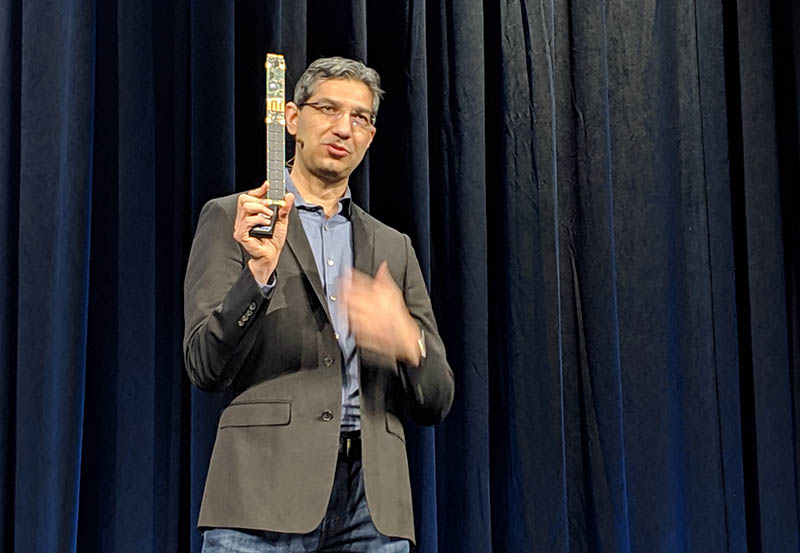

We are going to have more detail in this article, but want to provide the option to listen. As a quick note, Supermicro filmed the video segments at its HQ, provided the systems in its demo room, and made available the product managers who were able to attend. We did a whole series but are tagging this as sponsored since we relied upon their facilities instead of our own. I was able to pick the products we would look at and have editorial control of the pieces (nobody outside of STH reviews these pieces before they go live, either). In full transparency, this was the only way to get something like this done, including looking at a number of products in one shot, without going to a trade show during shelter-in-place. Look for more in this series on STH over the coming weeks.

What Makes EDSFF Different and Important

I wanted to take a moment to talk about why EDSFF is different. Your typical 2.5″ drive bay was designed for SATA and SAS rotating hard drives. The 2.5″ form factor was a size that the industry thought could serve both enterprise storage and the notebook segment.

Things have changed. We rarely see notebooks with 2.5″ drives these days. Buying SAS hard drives for performance enterprise storage means buying drives with lower performance, endurance, capacity, and reliability, as well as higher power consumption, than modern SSDs.

EDSFF (Enterprise and Datacenter SSD Form Factor) is designed to be a PCIe standard for SSDs, primarily NVMe SSDs. The longer and thinner form factor better matches the fact that instead of spinning disks, we now have NAND chips on a PCB. EDSFF allows for better placement of these NAND packages for optimal cooling. Cooling is important because the heat a form factor can dissipate dictates how much power a device can use. The better the cooling, the more power a device can use and therefore the more performance it can achieve.

Beyond the typical PCIe x4 NVMe SSD, EDSFF is designed for other device types as well. Intel has discussed Optane in EDSFF as another storage type, and it has also shown off FPGAs in ruler form factors. EDSFF is for storage today, but it is ready for accelerators tomorrow with optional connectivity beyond 4-lane PCIe. Other vendors are starting to get into the EDSFF arena, but Intel has been shipping ruler form factors to major customers for some time.

1U Half-Petabyte Supermicro EDSFF Server

The server we are looking at is the Supermicro SSG-1029P-NEL32R. This is a 1U server that has 32x EDSFF E1.Long slots grouped into sets of 8 drives. One can see that there are extra spaces in the chassis for airflow.

The drives we saw were Intel 15.36TB units. Intel has been saying that it would have 30TB+ drives for years (see: The Intel Ruler SSD: Already Moving Markets). As we understand it, you can order the system with 15.36TB drives today, but 30.72TB drives are not yet available. When they are, this effectively becomes a 1PB-in-1U server.

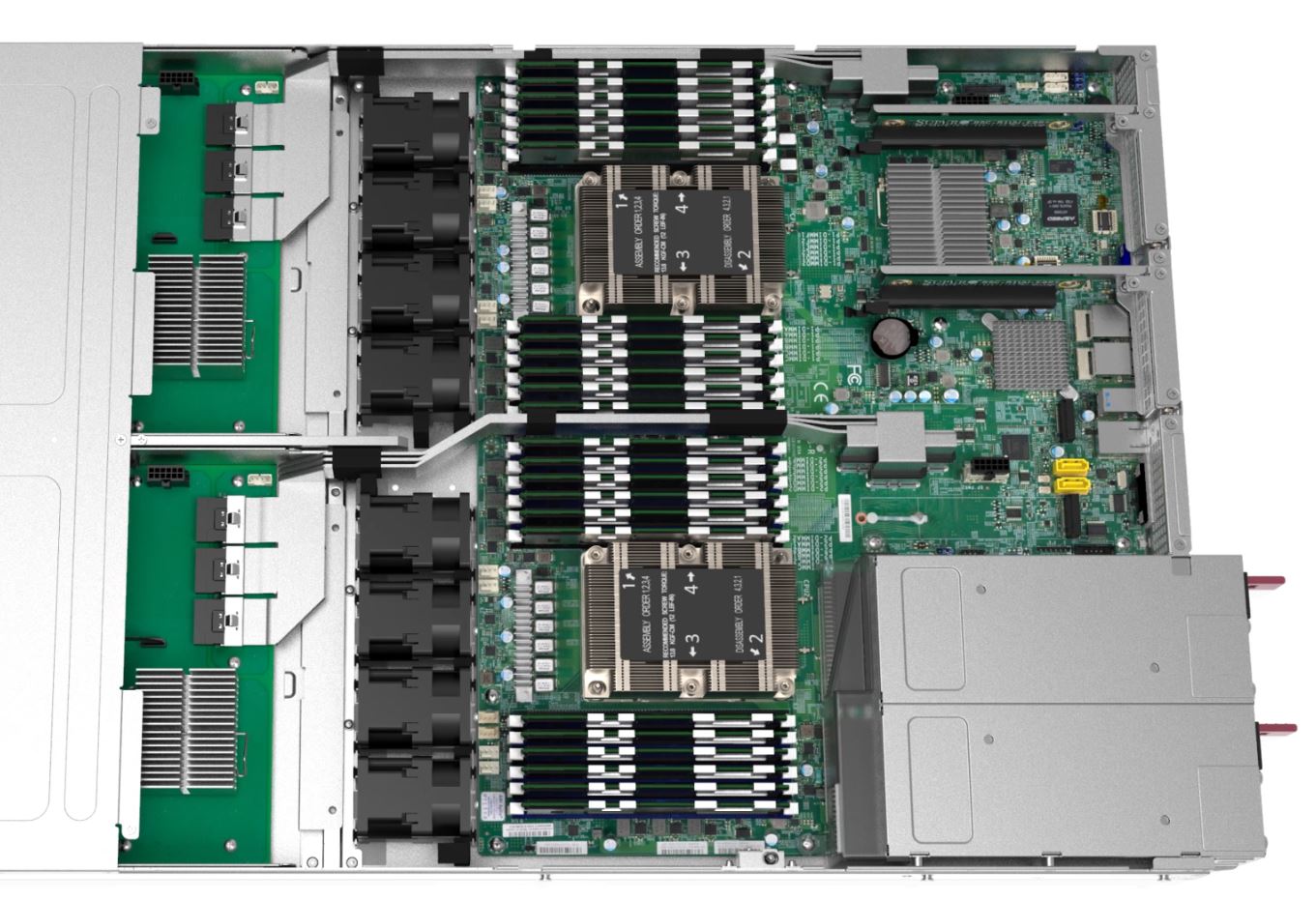
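To make the “half-petabyte” and “1PB in 1U” figures concrete, here is the raw-capacity arithmetic as a small Python sketch. It assumes all 32 bays are populated and counts decimal terabytes, as drive vendors do, before any RAID, erasure coding, or filesystem overhead; the function name is our own illustration.

```python
# Raw capacity of the 32-bay 1U chassis, in decimal units as drive vendors quote them.
# Assumes every bay is populated; RAID, erasure coding and filesystem overhead are ignored.
DRIVE_BAYS = 32


def raw_capacity_tb(drive_tb: float, bays: int = DRIVE_BAYS) -> float:
    """Raw capacity in decimal terabytes (1 TB = 10**12 bytes)."""
    return drive_tb * bays


for drive_tb in (15.36, 30.72):
    total_tb = raw_capacity_tb(drive_tb)
    print(f"{DRIVE_BAYS} x {drive_tb} TB = {total_tb:.2f} TB ({total_tb / 1000:.2f} PB)")
```

With 15.36TB drives this works out to 491.52TB, just under half a petabyte; 30.72TB drives would double that to 983.04TB, which is where the “1PB in 1U” shorthand comes from.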

Looking inside the system, we see the PCIe Gen3 expanders in the middle of the chassis (left in the image below) that are cabled to the motherboard. There is then a fan partition and two Intel Xeon Scalable (1st or 2nd Gen) processors. Each CPU gets a full set of 12 DIMM slots, which means we get up to 24 DIMMs and can use Intel Optane DCPMM / Optane PMem 100 with the system as well.

We also get dual redundant power supplies, and each CPU gets access to a PCIe Gen3 x16 slot. Part of the concept here is that one can attach a PCIe expander with its drives and a 100Gbps NIC to the same Xeon CPU, avoiding inter-socket NUMA traversal.

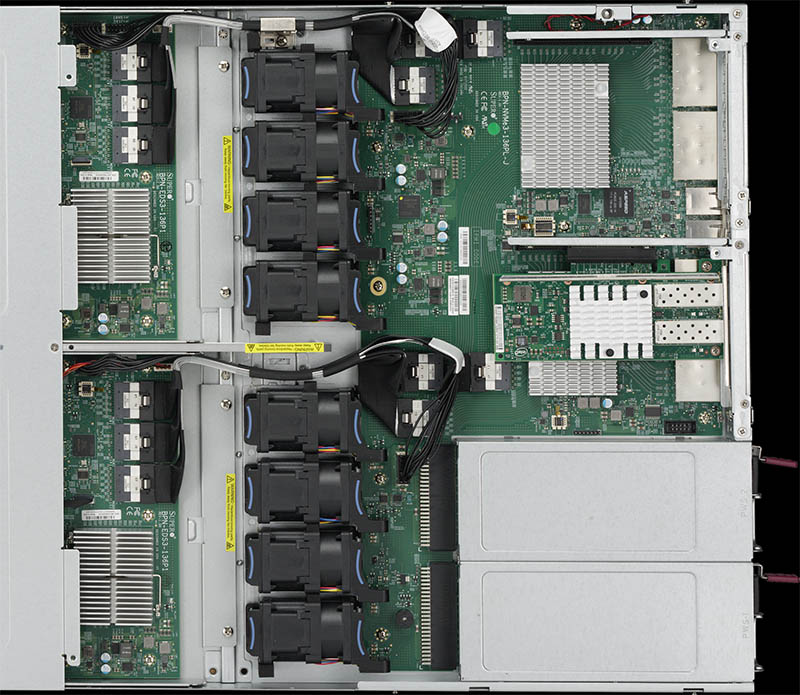
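On a Linux host, one way to check the same-CPU NUMA locality described above is to read the `numa_node` attribute the kernel exposes for each PCI device in sysfs. Because the drives sit behind the PCIe switch boards, they should report the node of the CPU whose root port the switch uplink is cabled to. The sketch below is a minimal illustration, assuming the standard `/sys/class/nvme/nvme*/device` and `/sys/class/net/<interface>/device` links; the interface name `eth0` is a hypothetical placeholder, and the helper names are ours, not part of any Supermicro tooling.

```python
from pathlib import Path
from typing import Iterable


def numa_node(device_dir: Path) -> int:
    """Read the NUMA node the kernel reports for a PCI device (-1 means unknown)."""
    return int((device_dir / "numa_node").read_text().strip())


def same_socket(nvme_dirs: Iterable[Path], nic_dir: Path) -> bool:
    """True if every NVMe device reports the same known NUMA node as the NIC."""
    nic_node = numa_node(nic_dir)
    if nic_node < 0:  # single-node systems or firmware without locality info
        return False
    return all(numa_node(d) == nic_node for d in nvme_dirs)


if __name__ == "__main__":
    sys_class = Path("/sys/class")
    # Each /sys/class/nvme/nvmeN/device entry links to the controller's PCI device.
    drives = sorted((sys_class / "nvme").glob("nvme*/device"))
    nic = sys_class / "net" / "eth0" / "device"  # hypothetical interface name
    if drives and nic.exists():
        print("Drives and NIC share a NUMA node:", same_socket(drives, nic))
```

A `False` result on a dual-socket system would suggest that traffic between the NIC and at least some drives crosses the inter-socket link.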

We wanted to take a quick moment here to mention that there are other options as well. For example, Supermicro has solutions such as the SSG-136R-NEL32JBF that are built in a similar manner, except without the server component, to make a JBOF configuration. Below you can see an example of the JBOF configuration:

A key item to note here is the modularity. While these show the rear of the system customized for either server or JBOF applications, the cabling to the PCIe switch boards in the middle of the system is important as well. This allows Supermicro to use the same switch PCBs in different configurations. It is also what can make integration with new PCIe and CPU generations easier as we move to PCIe Gen4.

The Supermicro SSG-1029P-NEL32R uses a PCIe Gen3 x4 connector for each drive. Again, this is a PCIe connector, so one can, in theory, use other PCIe EDSFF devices in this system as long as they fit within the thermal/power envelopes. Today the application is SSDs, but tomorrow the industry is looking to a more expansive ecosystem.

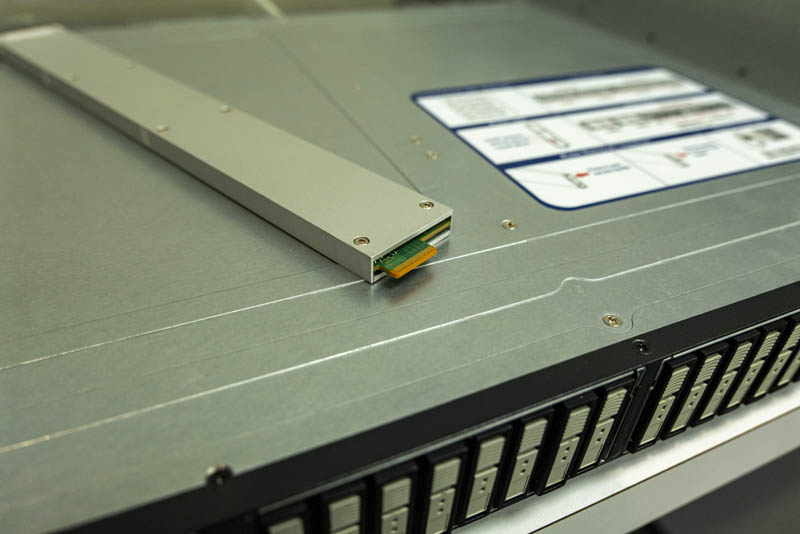

Something we wanted to take a quick moment to show is the latch. Here is a picture of the latch closed and the 15.36TB Intel SSD atop the system.

Here is the latch extended atop the system.

While a latch may seem strange to highlight, it turns out that it is actually a big deal. Where 2.5″ and 3.5″ drives sat in drive trays, EDSFF drives do not use trays. As a result, the latching mechanisms, which are screwed onto the front of these drives, are a big deal among server vendors. Providing smooth hot-swap functionality on a device this long requires a mechanically sound latching mechanism.

Final Words

The ecosystem is looking at EDSFF to provide more than just storage, extending to accelerators and perhaps even memory in the CXL/PCIe Gen5 era. 2.5″ storage form factors will be limited in the not-too-distant future as the PCIe interface transitions from Gen3 to Gen4 in 2019/2020, with the PCIe Gen5 transition likely starting in 2021. Handling what will effectively be a 4x jump in interface bandwidth will require devices to use more power, and that is where EDSFF will become extremely important.
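The “4x interface bandwidth jump” comes from the per-lane transfer rate doubling with each generation (8, 16, then 32 GT/s) while the drive link stays x4. The sketch below computes approximate per-direction line rates, assuming 128b/130b encoding for all three generations and ignoring packet-level protocol overhead, so real drives will see somewhat less than these figures.

```python
# Per-direction line rate of a PCIe link after encoding overhead.
# Gen3, Gen4 and Gen5 all use 128b/130b encoding; TLP/DLLP protocol overhead is ignored,
# so real-world throughput is somewhat lower than these figures.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # transfer rate per lane, by PCIe generation
ENCODING_EFFICIENCY = 128 / 130


def link_gb_per_s(gen: int, lanes: int = 4) -> float:
    """Approximate GB/s per direction for a link of the given generation and width."""
    return GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY / 8  # 8 bits per byte


for gen in (3, 4, 5):
    print(f"PCIe Gen{gen} x4: ~{link_gb_per_s(gen):.2f} GB/s per direction")

print(f"Gen5 vs Gen3 at the same width: {link_gb_per_s(5) / link_gb_per_s(3):.1f}x")
```

That is roughly 3.9GB/s for a Gen3 x4 drive, 7.9GB/s at Gen4, and 15.8GB/s at Gen5, and pushing that much more data through the same x4 link is what drives up per-device power.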

While that is a look at the future, the impact is here today. Supermicro says it has some large government/defense sector customers as well as some service provider customers for EDSFF today. We also know Facebook is adopting EDSFF in its next-gen platform, and Microsoft is as well. Ultimately, being able to fit 32x 15.36TB drives in 1U of rack space is great for density.

While we looked specifically at the new Supermicro 1U EDSFF storage server, the company also has JBOFs and even a 2U4N BigTwin E1.S EDSFF solution.

Is EDSFF actually “the standard”?

I know that Intel promoted it heavily, but Samsung is promoting its own NF1 as a “standard”.

P.S. Please check your site: the pages are being refreshed on a timer, which makes posting comments difficult because the refresh causes comments in progress to be lost.

BinkyTo, that’s old info. Samsung moved to EDSFF. I only remember this because STH was the one that covered Samsung using EDSFF in that Inspur-Samsung article https://www.servethehome.com/samsung-pm9a3-pcie-gen4-nvme-data-center-ssds-released-inspur/

Intel, Kioxia (via last week’s), and Samsung. Facebook, Microsoft, and others are using EDSFF, so that’s the standard, right?

I see a server with all of that I/O and I wonder… when can I have one with dual EPYC CPUs and PCIe 4.0?

@Jeff You’re absolutely right. AMD did a great job of predicting this transition and building a system to enable it. When will Xeon’s PCIe lane count increase in a significant way? Paying more per core for a Xeon while also having to pay for PCIe switch chips (plus a chipset) is not going to make sense for many organizations just to end up with less bandwidth.

So where does one buy EDSFF drives?

@Jeff This seems like the ideal platform for single-socket Epyc, though you would still need a switch for x4 PCIe4 per drive. With dual Epyc, you could maintain a direct connection to every single drive without a switch and still have capacity for a PCIe4 x16 per socket, assuming only 3x Infinity Fabric (IF) connections between sockets instead of 4x.
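The lane arithmetic behind this comment, and the “128 lanes” reply further down, can be checked with a short sketch. It assumes, as the discussion does, 128 PCIe 4.0 lanes per EPYC socket and 16 lanes consumed per socket-to-socket Infinity Fabric (xGMI) link; exact counts depend on the specific platform and its BIOS options, and the function name is hypothetical.

```python
# Lane budget for a hypothetical dual-socket EPYC build with 32 directly attached drives.
LANES_PER_SOCKET = 128     # PCIe 4.0-capable lanes per EPYC socket (assumed)
LANES_PER_XGMI_LINK = 16   # lanes repurposed for each socket-to-socket link (assumed)


def usable_lanes(sockets: int = 2, xgmi_links: int = 4) -> int:
    """PCIe lanes left for devices after inter-socket links are carved out."""
    if sockets not in (1, 2):
        raise ValueError("EPYC supports one or two sockets")
    if sockets == 1:
        return LANES_PER_SOCKET
    return sockets * (LANES_PER_SOCKET - xgmi_links * LANES_PER_XGMI_LINK)


needed = 32 * 4 + 2 * 16   # 32 drives at x4 plus one x16 NIC slot per socket
for links in (4, 3):
    have = usable_lanes(xgmi_links=links)
    print(f"{links} xGMI links: {have} lanes available, {needed} needed, fits: {have >= needed}")
```

Under these assumptions, four links leave 128 lanes (enough for the drives alone), while three links leave 160, exactly the 128 drive lanes plus an x16 slot per socket.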

@emerth There are a few webpages that have a list of places that provide the drives.

A system with 32x 8TB drives is about US$1/4M from here: https://www.aspsys.com/servers/supermicro-1u-superserver-1029p-nr32r-p3884.htm (1/4 PB for 1/4M).

Emerth

Typical outlets like Provantage. Typically, individual units won’t be available outside a complete system like the one in the article.

David Freeman

No. Dual Epyc uses 64 of each CPU’s 128 PCIe4 lanes to connect to the other CPU, so you get 128 lanes in either single or dual socket. Since storage traffic is bursty rather than constantly operating at line speed, a 1:1 connection is not necessary. A PLX switch works fine and does not impact the transfer rates. A lot of people have this misconception.

Intel doesn’t use PCIe lanes to connect 2-8 sockets (UPI is dedicated bandwidth), which is also why AMD is limited to 2 sockets.

Ice Lake SP (Xeon) dual socket will also expose 128 PCIe4 lanes – since the 64 lanes each CPU has are not used for CPU to CPU communications.

Jonathan

Ice Lake SP (Xeon) will expose 128 PCIe4 lanes in a dual socket configuration. Dual socket Epyc will expose 128 PCIe4 lanes, since each AMD CPU needs 64 of its lanes for the 2-socket interconnect.

Next Gen Xeon (after Cooper Lake and Ice Lake) will be Sapphire Rapids, which will be PCIe5, so half the lanes for the same bandwidth.

While the idea is great and it is a ready-made solution, I would definitely not buy such an overpriced and bottlenecked product.

There are two banks of 16 drives, one for each CPU. Each bank is fed by only three SlimLine (PCIe x8) cables, which then pass through a PCIe switch.

A drive needs x4 lanes, so 16 drives need x64 lanes. This product feeds that x64 PCIe lane demand through an x24 PCIe lane bottleneck.

One of the other reasons it has only 3x SlimLine cables is that the Intel CPUs don’t provide enough PCIe lanes, unlike AMD’s 128-lane PCIe CPUs.

I’ve already found a solution where you can set up a system with 32x NVMe SSDs using only four PCIe x16 slots, provide dedicated speed, and avoid burning a $100K+ hole in your pocket.
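The x64-through-x24 argument in the comment above is a PCIe switch oversubscription ratio. The sketch below takes the commenter’s cable count (three SlimLine x8 uplinks per 16-drive bank) as given rather than verified against Supermicro documentation, and assumes the same PCIe generation on both sides of the switch; the constant and function names are ours.

```python
# Oversubscription of one 16-drive bank as described in the comment above:
# 16 drives at x4 downstream, 3 SlimLine x8 cables upstream to the CPU (per the commenter).
DRIVES_PER_BANK = 16
LANES_PER_DRIVE = 4
UPLINK_CABLES = 3
LANES_PER_CABLE = 8


def oversubscription(downstream_lanes: int, upstream_lanes: int) -> float:
    """Device-side lanes per host-side lane through a switch (same PCIe generation both sides)."""
    return downstream_lanes / upstream_lanes


down = DRIVES_PER_BANK * LANES_PER_DRIVE   # lanes the drives could use
up = UPLINK_CABLES * LANES_PER_CABLE       # lanes back to the CPU
full_rate_drives = up // LANES_PER_DRIVE   # drives that can run flat out at once

print(f"{down} drive lanes over {up} uplink lanes = {oversubscription(down, up):.2f}:1")
print(f"At most {full_rate_drives} of {DRIVES_PER_BANK} drives can saturate their links simultaneously")
```

Whether roughly 2.67:1 matters depends on the workload: it only bites when more than six drives in a bank stream at full link rate at the same time, which is the burstiness point made earlier in the thread.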